Compositionality of meaning

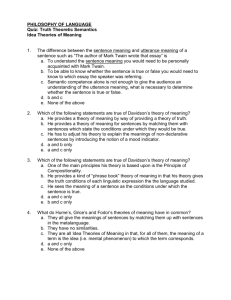

advertisement

Logic, language and cognition

Henriëtte de Swart and Yoad Winter

Master program CAI, RMA Linguistics

Utrecht, nov 2010 - jan 2011

Background and preliminaries I

• BA: intro to linguistics/logic/cognition,

and developing beginning expertise in

reading academic literature, starting to

work out research questions, developing

relatively small applications.

• MA: introduce advanced methodology

and tools, develop higher level

academic skills, leading to more

advanced research projects and

independent research.

Background and preliminaries II

• MA CAI: Balance between mandatory

courses and optional courses.

• Two tracks:

Cognitive modelling

Logic and intelligent systems

• LLC is a mandatory course offered by

linguistics and feeding into both tracks.

• Core focus: meaning as core business of

language.

• Background reading on semantics for

today: Schlenker (2008).

Background and preliminaries III

• Relevant recommended courses related to LLC in

later periods of the year:

Neurocognition of language (Avrutin, period 2)

Semantic Structures (de Swart/Le Bruyn, 3)

Het sociale semantische Web (Monachesi, 3)

Conceptual Semantics (Zwarts, period 4)

Learning in Computational Linguistics (Winter,

period 4)

Language and cognition

• Language is a means of communication in a social community: it transfers information from the head of the speaker to the head of the hearer.

• Questions about language in the mind (language

as part of human cognitive architecture).

• Questions about meaning as content of linguistic

expressions, and target of communication

process.

• Relation between mind and world: truth

conditions.

• Questions about processing meaning in real time.

Logic, language and cognition

• Formal approaches to meaning: role of

logic, syntax-semantics interface,

complexity of grammars, expressivity.

• Embedding in cognitive system: what

model of semantics suits the mind?

Experimental approaches to semantic

processing.

• Applications in artificial systems: use of

grammatical formalisms for computational

applications, modelling meaning in

intelligent systems.

Academic skills acquired in LLC

• Acquire knowledge, tools, methodology and

approaches for doing linguistic research in the

area of cognitive modelling and logic and

intelligent systems.

• Develop skills in reading primary texts in logic,

language and cognition, and being able to

extract the main points, discuss their

contribution to the field, criticize weaknesses,

suggest further research questions and

alternative approaches.

• Develop skills in formulating research

questions, carrying out this research and

reporting on results.

Approach

• Reading up-to-date literature exposing

students to ‘hot’ questions in the area of logic,

language and cognition.

• Presentation and discussion of foundational

questions defining the field, investigation of

cutting edge tools and methodologies.

• Homework assignments developing expertise in tools and methodologies.

• Balance between instructors’ activity and

students’ activity in the classroom.

• Support in developing academic skills and

individual research.

Overview of the course

• http://www.let.uu.nl/~Henriette.deSwart/personal/Classes/LLC10/index.html

• General, Calendar, Exercises, Results

• Requirements: present a paper (25%), do the homework assignments (25%), write a final paper (50%) on a topic related to the course (meet with one of the teachers for approval).

• N.B. first paper presentation on Mo Nov

22 – distribute papers over students on

We Nov 17.

Compositionality of meaning as

starting point of semantics

• Core insight: the meaning of any complex

expression is determined by the meanings of

its parts, and the way they are put together.

• Old insight: versions of compositionality have been around since antiquity.

• Heuristic principle: semantic theories should

respect principle of compositionality of

meaning.

• But: compositional analysis not always

obvious. How exactly should we formulate the

notion of compositionality? How do we deal

with compositionality problems?

Implications for architecture

• The principle of compositionality has implications ranging from the lexicon via the syntax-semantics interface to pragmatics and semantic processing.

• Implications for theory of the lexicon: how to

define the meaning of words in such a way

that they can constitute the input to

compositional rules? What kind of ontology do

we need? Relation between linguistic

knowledge and knowledge of the world.

Implications for syntax-semantics

interface

• How does construction of syntactic trees

relate to construction of complex

meaning? Is there a one-one mapping?

• Background: type theory, basic

syntactic theory about (binary) trees,

categorial grammar.

• Different formalisms, balance between

complicating syntax or complicating

semantics.

Implications for communication

• Implications for semantics-pragmatics

interface: what aspects of meaning are

not immediately derived from

lexicon+syntactic composition but come

in through pragmatic reasoning?

• What are the rules of conversation that

come in at the level of communication

between intelligent agents?

• What is the position of pragmatics in

linguistic theory?

Implications for processing

• Semantic processing by humans: how do we

understand sentences in real time?

• How is semantic processing affected by

compositionality problems?

• How is processing affected by pragmatic

reasoning?

• Processing by computers: how do we design

intelligent systems that process languages as

well as humans?

• Which formal methods and technologies work

best in natural language processing?

Compositionality and implications

• Approach taken in LLC: start with the principle

of compositionality of meaning (today!).

• Explore implications of compositionality of

meaning for theories of the lexicon (We),

syntax-semantics interface and pragmatics.

• At each level, investigate consequences for

semantic processing in experimental

psychology (starting next week).

• Also: consequences for natural language

grammatical formalisms and computational

applications (mostly second half of the course,

focussing on syntax-semantics interface)

Compositionality of meaning

• Reading: Peter Pagin & Dag Westerståhl (2008).

• Questions:

How to formulate the principle of

compositionality of meaning? What are the

advantages/disadvantages of different versions?

Why is it useful to assume that natural language

is compositional for the relation between logic,

language and cognition?

Is it uncontroversial to assume compositionality?

What problems arise if we do/don’t?

Do problems imply we have to abandon the

principle of compositionality of meaning?

Learnability as an argument

• A natural language has infinitely many

meaningful sentences, expressing an infinite

range of propositions.

• It is impossible for a human speaker to learn

the meaning of each sentence one by one.

• It must be possible to learn the language by

learning the meaning of a finite number of

expressions, and a finite number of

construction forms.

• For this to be possible, the language must

have a compositional semantics.

Pagin & Westerståhl (2008: 15).

Novelty as an argument for

compositionality

• Hearers are able to understand sentences they

have never heard before.

• Understanding novel sentences is possible only

if the hearer can assign to new sentences the

meaning that they independently have on the

basis of their knowledge of individual

expressions and rules of putting these

meanings together.

• So understanding novel sentences is possible

only if language is compositional.

Pagin and Westerståhl (2008: 16).

Productivity and systematicity as

arguments for compositionality

• Generative syntax (Chomsky): creativity of

language, production of infinite set of sentences.

• Productivity argument relevant to

compositionality of meaning in so far as new

sentences need to be understood.

(P&W 2008: 15-16)

• Fodor: if a hearer understands a sentence of the form tRu, she will also understand the corresponding sentence uRt, and this is best explained by an appeal to compositionality.

(P&W 2008: 16)

Induction on synonymy

• Fodor: if a hearer understands a sentence of the form tRu, she will also understand the corresponding sentence uRt.

• Induction on synonymy: in case after case, we

find the result of substitution synonymous with

the original expression, if the new part is

taken as synonymous with the old.

• Inductive generalization: substitution by

synonyms is meaning preserving.

P&W (2008: 18)

Intersubjectivity and communication

• In most cases, speakers of the same language

interpret new sentences similarly:

intersubjective agreement in interpretation.

• So the meaning of sentences is computable,

not guessed.

• Frege: the proposition has a structure that

mirrors the structure of the sentence:

isomorphism.

• Without this structural correspondence,

communicative success with new propositions

would not be possible.

Critical remarks

• Arguments from learnability and novelty rely

on creativity. Is it justified to assume an

infinite set of propositions?

• Arguments from productivity are more syntax-oriented than semantics-oriented.

• Not all arguments require full-fledged

compositionality. Often, some notion of

computability is enough.

And yet...

• Even if these arguments are only inferences to the best explanation, compositional semantics is very simple.

• Compositional semantics implies that only a minimal number of processing steps is needed by the speaker to arrive at a full expression, and by the hearer to arrive at a full interpretation, for communication to succeed.

• If we assume compositionality as a viable

starting point – how are we going to define it?

Substitution and function

• Frege: “Let us assume that the sentence has a reference. If we now replace one word of the sentence by another having the same reference, this can have no bearing upon the reference of the sentence.”

Substitutional version, see P&W (2008: 2).

• Compositionality of meaning requires the

meaning (value) of a compound expression to

be a function of other meanings (values) and a

‘mode of composition’.

Functional version, see P&W (2008: 3)

Structured expressions and grammar

• Set E of linguistic expressions as a domain

over which syntactic rules are defined.

• E is generated by syntactic operations from a

subset A of atoms (primitive expressions,

words).

• $\Sigma$ is a set of syntactic operations, defined as partial functions from $E^n$ to $E$, such that $E$ is generated from $A$ via $\Sigma$.

• A grammar $\mathbf{E}$ is the triple $(E, A, \Sigma)$.

• A grammatical term is the analysis tree associated with the expression (string). $GT_{\mathbf{E}}$ is the set of grammatical terms for $\mathbf{E}$.
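Below is a minimal Python sketch of a grammar $(E, A, \Sigma)$ and its grammatical terms. It rests on assumptions of our own: the four-word lexicon, the operation names `pred` and `obj`, and the use of categories to make the operations partial are hypothetical illustrations, not part of P&W's definitions. Terms are analysis trees, and the string a term analyses is read off the tree.

```python
from dataclasses import dataclass
from typing import Optional, Tuple, Union

# Hypothetical toy grammar (E, A, Sigma). Atoms carry a category so that the
# syntactic operations can be partial: undefined on mismatched arguments.
LEXICON = {"John": "NP", "Mary": "NP", "runs": "IV", "sees": "TV"}  # the atoms A

# Sigma: operation name -> (argument categories, result category).
# Both toy operations build their string by concatenation.
SIGMA = {
    "pred": (("NP", "IV"), "S"),   # NP + IV -> S
    "obj": (("TV", "NP"), "IV"),   # TV + NP -> IV
}


@dataclass(frozen=True)
class Term:
    """A grammatical term (analysis tree): an operation applied to subterms."""
    op: str
    args: Tuple["T", ...]


T = Union[str, Term]  # a term is an atom or alpha(u1, ..., un)


def category(t: T) -> Optional[str]:
    """Category of a term, or None if the term is not derivable by the grammar."""
    if isinstance(t, str):
        return LEXICON.get(t)
    if t.op not in SIGMA:
        return None
    arg_cats, result = SIGMA[t.op]
    if tuple(category(u) for u in t.args) != arg_cats:
        return None  # the partial operation is undefined on these arguments
    return result


def string(t: T) -> str:
    """The expression in E that the term is an analysis of."""
    if isinstance(t, str):
        return t
    return " ".join(string(u) for u in t.args)


s = Term("pred", ("John", Term("obj", ("sees", "Mary"))))
print(category(s), "|", string(s))  # S | John sees Mary
```

Meanings will be assigned to terms rather than to strings. Two distinct terms with the same string can therefore receive different meanings, and this is how structural ambiguity fits into the setup.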

Semantics as mapping from terms to

meanings

• Semantics is a (partial) function $\mu$ from $GT_{\mathbf{E}}$ to a set of meanings $M$.

• This means that an expression has meaning derivatively, relative to a way of constructing it, i.e. to a corresponding grammatical term.

• Distinction between grammaticality (being derivable by the grammar rules) and meaningfulness (being in the domain of $\mu$).

• No structure is imposed upon $M$ besides a relation of synonymy: $u \equiv_\mu t$ iff $\mu(u)$ and $\mu(t)$ are both defined, and $\mu(u) = \mu(t)$.
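The sketch below makes the partiality of the semantics and the definition of synonymy concrete. Here $\mu$ is simply a finite lookup table. This is a hypothetical toy: the meaning labels are arbitrary and are compared only for identity, reflecting that no structure is imposed on $M$.

```python
from typing import Dict, Hashable, Optional

# Hypothetical toy semantics: terms are hashable analysis trees (atoms or nested
# tuples), meanings are arbitrary labels, and mu is partial (None = undefined).
MU: Dict[Hashable, str] = {
    "bachelor": "UNMARRIED-ADULT-MALE",
    "unmarried": "UNMARRIED",
    "man": "ADULT-MALE",
    ("mod", "unmarried", "man"): "UNMARRIED-ADULT-MALE",
}


def mu(term: Hashable) -> Optional[str]:
    """The semantic function: the meaning of a term, or None outside dom(mu)."""
    return MU.get(term)


def synonymous(u: Hashable, t: Hashable) -> bool:
    """u and t are synonymous iff mu(u) and mu(t) are both defined and equal."""
    return mu(u) is not None and mu(t) is not None and mu(u) == mu(t)


print(synonymous("bachelor", ("mod", "unmarried", "man")))  # True
print(synonymous("bachelor", "man"))                        # False
print(synonymous("unicorn", "unicorn"))                     # False: outside dom(mu)
```

On this definition, synonymy is reflexive only on meaningful terms. A term outside the domain of the semantics is not synonymous even with itself.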

Basic compositionality: functional

version

• Funct($\mu$): For every rule $\alpha \in \Sigma$, there is a meaning operation $r_\alpha$ such that if $\alpha(u_1,\ldots,u_n)$ has meaning, $\mu(\alpha(u_1,\ldots,u_n)) = r_\alpha(\mu(u_1),\ldots,\mu(u_n))$.

• Domain Principle (DP): subterms of meaningful terms are themselves meaningful.
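The functional version can be sketched by pairing each syntactic operation with a meaning operation $r_\alpha$ and computing meanings bottom-up. The model, lexicon and operation names below are hypothetical. The partiality of $r_\alpha$ is handled crudely: a runtime type error is treated as "undefined".

```python
from typing import Callable, Dict, Optional, Tuple, Union

Term = Union[str, Tuple]  # an atom, or (alpha, u1, ..., un)

# Hypothetical extensional model: names denote individuals, "runs" a set of
# individuals, "sees" a set of (seer, seen) pairs, sentences truth values.
LEX: Dict[str, object] = {
    "John": "j",
    "Mary": "m",
    "runs": frozenset({"j"}),
    "sees": frozenset({("j", "m")}),  # John sees Mary
}

# One meaning operation r_alpha for each syntactic operation alpha.
R: Dict[str, Callable[..., object]] = {
    "pred": lambda x, p: x in p,                                     # NP + IV -> S
    "obj": lambda rel, y: frozenset(a for (a, b) in rel if b == y),  # TV + NP -> IV
}


def mu(t: Term) -> Optional[object]:
    """mu(alpha(u1..un)) = r_alpha(mu(u1), ..., mu(un)); None means undefined."""
    if isinstance(t, str):
        return LEX.get(t)
    op, *args = t
    vals = [mu(u) for u in args]
    if op not in R or any(v is None for v in vals):
        return None  # enforces DP: a meaningless part makes the whole meaningless
    try:
        return R[op](*vals)
    except (TypeError, ValueError):
        return None  # crude partiality: arguments of the wrong kind count as undefined


print(mu(("pred", "John", ("obj", "sees", "Mary"))))  # True
print(mu(("pred", "Mary", "runs")))                   # False
print(mu(("pred", "Bill", "runs")))                   # None: "Bill" is not in dom(mu)
```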

Basic compositionality: substitution

version

• Subst($\equiv_\mu$): If $s[u_1,\ldots,u_n]$ and $s[t_1,\ldots,t_n]$ are both meaningful terms, and if $u_i \equiv_\mu t_i$ for $1 \le i \le n$, then $s[u_1,\ldots,u_n] \equiv_\mu s[t_1,\ldots,t_n]$.

• The notation $s[u_1,\ldots,u_n]$ indicates that the term $s$ contains – not necessarily immediate – disjoint occurrences of subterms among $u_1,\ldots,u_n$, and $s[t_1,\ldots,t_n]$ results from replacing each $u_i$ by $t_i$.

• DP is not presupposed for the substitution

version.
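The substitution version can be checked by brute force on a small finite fragment. This sketch assumes a hypothetical lexicon in which ‘John’ and ‘Johnny’ are synonymous. It replaces every occurrence of one atom, which is a special case of the $s[u_1,\ldots,u_n]$ notation (that notation allows replacing selected disjoint occurrences). It then tests that meaning is preserved whenever both terms are meaningful.

```python
from itertools import product
from typing import Dict, Optional, Tuple, Union

Term = Union[str, Tuple]  # an atom, or ("pred", NP, IV)

# Hypothetical lexicon in which "John" and "Johnny" are assumed synonymous.
LEX: Dict[str, object] = {
    "John": "j", "Johnny": "j", "Mary": "m",
    "runs": frozenset({"j"}), "sleeps": frozenset({"m"}),
}


def mu(t: Term) -> Optional[object]:
    """Compositional toy semantics with a single operation, pred(NP, IV)."""
    if isinstance(t, str):
        return LEX.get(t)
    op, x, p = t
    mx, mp = mu(x), mu(p)
    if op != "pred" or not isinstance(mx, str) or not isinstance(mp, frozenset):
        return None
    return mx in mp


def syn(u: Term, t: Term) -> bool:
    return mu(u) is not None and mu(t) is not None and mu(u) == mu(t)


def substitute(s: Term, u: Term, t: Term) -> Term:
    """Replace every occurrence of the subterm u in s by t."""
    if s == u:
        return t
    if isinstance(s, str):
        return s
    return (s[0],) + tuple(substitute(x, u, t) for x in s[1:])


ATOMS = list(LEX)
TERMS = ATOMS + [("pred", x, p) for x, p in product(ATOMS, repeat=2)]


def subst_holds() -> bool:
    """Check Subst on this finite fragment, one substituted atom at a time."""
    for s, u, t in product(TERMS, ATOMS, ATOMS):
        s2 = substitute(s, u, t)
        if syn(u, t) and mu(s) is not None and mu(s2) is not None and not syn(s, s2):
            return False
    return True


print(substitute(("pred", "John", "runs"), "John", "Johnny"))  # ('pred', 'Johnny', 'runs')
print(subst_holds())                                           # True
```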

Functional and substitution version:

equivalence

• Under DP, Funct($\mu$) and Subst($\equiv_\mu$) are equivalent.

• Basic compositionality takes the

meaning of a complex term to be

determined by the meanings of the

immediate sub-terms and the top-level

syntactic operation.

• Weaker versions of compositionality

arise if we define compositionality at

lower levels of syntactic structure.

Stronger compositionality

• Enlarging the domain of the semantic

function.

• Szabó (2000): the same meaning operations define semantic functions in all possible human languages, not just for all sentences in each language taken by itself.

• Cross-linguistic stability in semantic

operations (‘universal’ semantics).

Additional restrictions on

meaningfulness.

• Husserl property: synonymous terms belong to the same semantic category, i.e. they can be intersubstituted without loss of meaningfulness.

• Huss($\mu$): If $u \equiv_\mu t$, then for every term $s$, $s[u] \in \mathrm{dom}(\mu)$ iff $s[t] \in \mathrm{dom}(\mu)$.

• Huss imposes more structure on the

syntactic side.
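Here is a finite sketch of the Husserl property. It assumes a hypothetical toy in which ‘likely’ and ‘probable’ receive the same meaning, but only ‘likely’ is accepted by a raising construction (‘John is likely to leave’ vs. *‘John is probable to leave’). The two listed contexts stand in for "every term s".

```python
from typing import Callable, List, Optional, Tuple, Union

Term = Union[str, Tuple]

# Hypothetical toy: "likely" and "probable" get the same meaning, but the toy
# raising construction ("John is ___ to leave") only accepts "likely".
LEX = {"likely": "PROBABLE", "probable": "PROBABLE", "John": "j"}
RAISING_ADJS = {"likely"}


def mu(t: Term) -> Optional[object]:
    if isinstance(t, str):
        return LEX.get(t)
    if t[0] == "it_is_that" and mu(t[1]) is not None:       # "it is ___ that John left"
        return ("it_is_that", mu(t[1]))
    if t[0] == "raise" and t[1] in RAISING_ADJS and mu(t[2]) is not None:
        return ("raise", mu(t[1]), mu(t[2]))                 # "John is ___ to leave"
    return None


def syn(u: Term, t: Term) -> bool:
    return mu(u) is not None and mu(u) == mu(t)


# One-place contexts s[_], a finite stand-in for "every term s".
CONTEXTS: List[Callable[[Term], Term]] = [
    lambda x: ("it_is_that", x),
    lambda x: ("raise", x, "John"),
]


def same_category(u: Term, t: Term) -> bool:
    """For every context s, s[u] is meaningful iff s[t] is."""
    return all((mu(s(u)) is not None) == (mu(s(t)) is not None) for s in CONTEXTS)


def huss_holds(terms: List[Term]) -> bool:
    """Huss: synonymous terms belong to the same category."""
    return all(same_category(u, t) for u in terms for t in terms if syn(u, t))


print(syn("likely", "probable"))                   # True
print(same_category("likely", "probable"))         # False
print(huss_holds(["likely", "probable", "John"]))  # False
```

In this toy, the meaning of a raising sentence is still computed from the meanings of its parts. Huss fails because the domain of $\mu$ is sensitive to the word itself, and this is the kind of syntactic restriction Huss rules out.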

Additional restrictions on meaning

composition operations

• The functional version is given by recursion over syntax, but only if the meanings are defined by recursion over meanings do we have a recursive semantics.

• Rec($\mu$): There is a function $b$ and for every $\alpha \in \Sigma$ an operation $r_\alpha$ such that for every meaningful expression $s$,

$\mu(s) = b(s)$ if $s$ is atomic,

$\mu(s) = r_\alpha(\mu(u_1),\ldots,\mu(u_n), u_1,\ldots,u_n)$ if $s = \alpha(u_1,\ldots,u_n)$.

• Standard semantic theories are both

compositional and recursive.
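Rec differs from the functional version in that $r_\alpha$ may also inspect the subterms themselves. The sketch below uses hypothetical feature-set meanings and operation names. It is recursive in this sense, but its quotation operation uses the quoted term rather than its meaning. As a result, ‘brother’ and ‘male sibling’ are synonymous while their quotations are not, as in the quotation example among the potential counterexamples to compositionality.

```python
from typing import Callable, Dict, FrozenSet, Optional, Tuple, Union

Term = Union[str, Tuple]  # an atom, or (alpha, u1, ..., un)

# b: meanings of atoms (hypothetical toy lexicon of semantic features).
B: Dict[str, FrozenSet[str]] = {
    "brother": frozenset({"male", "sibling"}),
    "male": frozenset({"male"}),
    "sibling": frozenset({"sibling"}),
}

# r_alpha receives the meanings of the immediate subterms *and* the subterms themselves.
R: Dict[str, Callable[..., object]] = {
    "mod": lambda m1, m2, u1, u2: m1 | m2,  # uses only the meanings (toy modification)
    "quote": lambda m1, u1: ("EXPR", u1),   # uses the subterm itself: the quoted expression
}


def mu(s: Term) -> Optional[object]:
    """Rec(mu): b(s) for atoms, r_alpha(mu(u1..un), u1..un) for alpha(u1..un)."""
    if isinstance(s, str):
        return B.get(s)
    op, *subs = s
    meanings = [mu(u) for u in subs]
    if op not in R or any(m is None for m in meanings):
        return None
    return R[op](*meanings, *subs)


brother, male_sibling = "brother", ("mod", "male", "sibling")
print(mu(brother) == mu(male_sibling))                        # True
print(mu(("quote", brother)) == mu(("quote", male_sibling)))  # False
```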

Frege’s Context Principle

• Frege’s Context Principle: The meaning of a

term is the contribution it makes to the

meanings of complex terms of which it is a

part.

• InvSubst$_\exists(\equiv_\mu)$: If $u \not\equiv_\mu t$, then there is some term $s$ such that either exactly one of $s[u]$ and $s[t]$ is meaningful, or both are, and $s[u] \not\equiv_\mu s[t]$.

• That is, if two terms of the same semantic

category make the same contribution to all

complex terms, their meanings cannot be

distinguished.

Strengthening the Context Principle

• Strengthening InvSubst$_\exists$ to InvSubst$_\forall$, we can require that substitution of terms by terms with different meanings always changes meaning:

• InvSubst$_\forall(\equiv_\mu)$: If for some $i$, $1 \le i \le n$, $u_i \not\equiv_\mu t_i$, then for every term $s[u_1,\ldots,u_n]$ it holds that either exactly one of $s[u_1,\ldots,u_n]$ and $s[t_1,\ldots,t_n]$ is meaningful, or both are, and $s[u_1,\ldots,u_n] \not\equiv_\mu s[t_1,\ldots,t_n]$.

Implications for synonymy

• InvSubst$_\forall$ disallows synonymy between

complex terms that can be transformed

into each other by substitution of

constituents at least some of which are

non-synonymous, but it allows two

terms with different structure to be

synonymous.

• How closely related should two terms be

in syntactic structure in order to qualify

as synonyms?

Congruence

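• Two terms $t$ and $u$ are $\equiv_\mu$-congruent iff:

• (i) $t$ or $u$ is atomic, $t \equiv_\mu u$, and neither is a constituent of the other, or

• (ii) $t = \alpha(t_1,\ldots,t_n)$, $u = \beta(u_1,\ldots,u_n)$, $t_i$ and $u_i$ are $\equiv_\mu$-congruent for all $i$, $1 \le i \le n$, and for all $s_1,\ldots,s_n$, $\alpha(s_1,\ldots,s_n) \equiv_\mu \beta(s_1,\ldots,s_n)$ if either is defined.

• Cong($\mu$): If $t \equiv_\mu u$, then $t$ and $u$ are $\equiv_\mu$-congruent.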
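Congruence can be checked recursively. This sketch uses a hypothetical numeral fragment. It reads "constituent" in clause (i) as proper constituent; this is our assumption, made so that an atom is congruent with itself. It also approximates "for all $s_1,\ldots,s_n$" by a finite list of argument terms.

```python
from itertools import product
from typing import Callable, Dict, List, Optional, Tuple, Union

Term = Union[str, Tuple]  # an atom, or (alpha, u1, u2)

# Hypothetical numeral fragment; "plus_rev" is a syntactically distinct
# operation whose meaning operation happens to be the same function as "plus".
LEX: Dict[str, int] = {"two": 2, "three": 3, "five": 5}
OPS: Dict[str, Callable[[int, int], int]] = {
    "plus": lambda x, y: x + y,
    "plus_rev": lambda x, y: y + x,
    "times": lambda x, y: x * y,
}


def mu(t: Term) -> Optional[int]:
    if isinstance(t, str):
        return LEX.get(t)
    op, x, y = t
    mx, my = mu(x), mu(y)
    return None if op not in OPS or mx is None or my is None else OPS[op](mx, my)


def syn(t: Term, u: Term) -> bool:
    return mu(t) is not None and mu(t) == mu(u)


def proper_part(x: Term, y: Term) -> bool:
    """Is x a proper constituent of y?"""
    return isinstance(y, tuple) and any(x == z or proper_part(x, z) for z in y[1:])


def congruent(t: Term, u: Term, args: List[Term]) -> bool:
    """Congruence, with 'for all s1..sn' approximated by the finite list args."""
    if isinstance(t, str) or isinstance(u, str):                    # clause (i)
        return syn(t, u) and not proper_part(t, u) and not proper_part(u, t)
    (alpha, *ts), (beta, *us) = t, u                               # clause (ii)
    if len(ts) != len(us) or not all(congruent(x, y, args) for x, y in zip(ts, us)):
        return False
    for ss in product(args, repeat=len(ts)):
        m1, m2 = mu((alpha, *ss)), mu((beta, *ss))
        if (m1 is not None or m2 is not None) and m1 != m2:
            return False
    return True


ARGS = list(LEX)
print(congruent(("plus", "two", "three"), ("plus_rev", "two", "three"), ARGS))  # True
print(syn(("plus", "two", "three"), ("plus", "three", "two")))                  # True
print(congruent(("plus", "two", "three"), ("plus", "three", "two"), ARGS))      # False
```

In this toy, ‘two plus three’ and ‘three plus two’ are synonymous but not congruent, so the semantics violates Cong. Cong excludes synonymy between terms whose corresponding parts differ in meaning.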

Inverse Functional compositionality

• Cong leads to inverse functional

compositionality:

• InvFunct($\mu$): The syntactic expression of a complex meaning $m$ is determined, up to $\equiv_\mu$-congruence, by the composition of $m$ and the syntactic expressions of its parts.

Direct compositionality

• Jacobson (2002).

• In strong direct compositionality, expressions

are built up from sub-expressions by means of

concatenation (left or right).

• In weak direct compositionality, one expression may wrap around another (as ‘call up’ wraps around ‘him’ in ‘call him up’).

• In the weak version of direct compositionality,

substrings are allowed to have discontinuous

occurrences.
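On the string side, the difference between strong and weak direct compositionality can be sketched as follows. The pair representation with a wrap point is a hypothetical encoding chosen for illustration, not Jacobson's formalization, and meanings are left out.

```python
from typing import Tuple

# Hypothetical representation: an expression is a pair (left, right) whose
# boundary is its wrap point; an expression without one has right == "".
Expr = Tuple[str, str]


def flat(e: Expr) -> str:
    """The plain string of an expression."""
    return " ".join(part for part in e if part)


def concat_right(f: Expr, a: Expr) -> Expr:
    """Strong direct compositionality: a is concatenated to the right of f."""
    return (flat(f) + " " + flat(a), "")


def concat_left(f: Expr, a: Expr) -> Expr:
    """Strong direct compositionality: a is concatenated to the left of f."""
    return (flat(a) + " " + flat(f), "")


def wrap(f: Expr, a: Expr) -> Expr:
    """Weak direct compositionality: f wraps around a, so f becomes discontinuous."""
    left, right = f
    return (" ".join(part for part in (left, flat(a), right) if part), "")


call_up, him = ("call", "up"), ("him", "")
print(flat(concat_right(call_up, him)))               # call up him
print(flat(wrap(call_up, him)))                       # call him up
print(flat(concat_left(("left", ""), ("Mary", ""))))  # Mary left
```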

Indirect compositionality

• For indirect compositionality, syntactic

operations are loosened up to include

string deletion, string reordering, term

substitution and insertion of elements.

• Distinction between direct and indirect

compositionality relevant for the

definition of the syntax-semantics

interface: what choice of syntactic

theory is compatible with compositional

semantics?

• Important focus of the second half of the course!

Language signs

• Abstract categorial grammar: signs.

• Sign is a triple consisting of a string, a

syntactic category and a meaning.

• Grammar is defined in terms of partial

functions from signs to signs.

• Requires different way of organizing relation

between grammatical terms and their

meaning.

• Abstract categorial grammar will be discussed

in the second half of the course.
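A sign-based grammar can be sketched with rules that are partial functions from pairs of signs to signs. What follows is a plain categorial grammar with forward and backward application. The category encoding and the toy model are hypothetical. It is not an implementation of abstract categorial grammar, whose architecture is more involved.

```python
from dataclasses import dataclass
from typing import Any, Optional, Tuple, Union

# Hypothetical category encoding: "NP", "S", ("/", X, Y) for X/Y (seeks Y on
# the right) and ("\\", Y, X) for Y\X (seeks Y on the left).
Cat = Union[str, Tuple[str, Any, Any]]


@dataclass(frozen=True)
class Sign:
    """A sign: a string, a syntactic category and a meaning."""
    string: str
    cat: Cat
    meaning: Any


def forward_app(f: Sign, a: Sign) -> Optional[Sign]:
    """Partial rule: f of category X/Y followed by a of category Y gives X."""
    if isinstance(f.cat, tuple) and f.cat[0] == "/" and f.cat[2] == a.cat:
        return Sign(f"{f.string} {a.string}", f.cat[1], f.meaning(a.meaning))
    return None


def backward_app(a: Sign, f: Sign) -> Optional[Sign]:
    """Partial rule: a of category Y followed by f of category Y\\X gives X."""
    if isinstance(f.cat, tuple) and f.cat[0] == "\\" and f.cat[1] == a.cat:
        return Sign(f"{a.string} {f.string}", f.cat[2], f.meaning(a.meaning))
    return None


SEES = {("j", "m")}  # hypothetical model: John sees Mary
john = Sign("John", "NP", "j")
mary = Sign("Mary", "NP", "m")
sees = Sign("sees", ("/", ("\\", "NP", "S"), "NP"),
            lambda y: lambda x: (x, y) in SEES)

vp = forward_app(sees, mary)
s = backward_app(john, vp)
print(s.string, s.cat, s.meaning)  # John sees Mary S True
print(backward_app(mary, john))    # None: the rule is undefined on these signs
```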

Arguments against compositionality

• How controversial is it to assume that

compositionality holds?

• Empirical arguments that certain

constructions are non-compositional.

Counterexamples falsify principle of

compositionality of meaning for natural

language.

• Arguments that compositionality is

trivial, not needed, or not suited to

actual linguistic communication.

Superfluity/unsuitability

• Schiffer (1987): compositionality superfluous

for mental processing.

• But how to translate sentence representations

to mental representations?

• Recanati (2004): pragmatic compositionality,

enrichment of meaning. Requires more

thought about the semantics-pragmatics

interface.

• Radical contextualism, e.g. Sperber and Wilson

(1992): evaluation in context underdetermined

by literal meaning.

Potential counterexamples to

compositionality

• Belief sentences: synonymy not preserved

under verbs of mental attitude.

• Quotation: brother and male sibling are

synonyms, but ‘brother’ and ‘male sibling’ are

not.

• Idioms: kick the bucket means ‘to die’.

• Ambiguities that are not clearly visible in

lexical or structural differences, e.g. scope.

Compositionality for logic and

language

• Principle of compositionality of meaning has

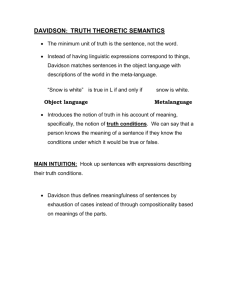

shaped semantic theory.

• Formal approaches favor use of type theory as

a logical language that allows compositional

analysis, cf. Schlenker (2008).

• Primitive objects: Semantics usually defines

interpretation in terms of a domain of

individuals and a set of truth values.

• Rules of interpretation: typically function

application.
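Function application in a typed setting can be sketched with denotations tagged by the types $e$, $t$ and function types. The domain and lexical entries below are hypothetical. The rule applies in either order, and a type mismatch raises an error; this is the kind of type conflict that coercion is meant to repair.

```python
from dataclasses import dataclass
from typing import Any, Tuple, Union

# Types: "e" (individuals), "t" (truth values), (a, b) for functions from a to b.
Type = Union[str, Tuple[Any, Any]]


@dataclass(frozen=True)
class Den:
    """A typed denotation."""
    ty: Type
    value: Any


def apply(f: Den, a: Den) -> Den:
    """Function application: type (a, b) applied to type a yields b, in either order."""
    if isinstance(f.ty, tuple) and f.ty[0] == a.ty:
        return Den(f.ty[1], f.value(a.value))
    if isinstance(a.ty, tuple) and a.ty[0] == f.ty:
        return Den(a.ty[1], a.value(f.value))
    raise TypeError(f"type conflict: {f.ty} and {a.ty}")


DOMAIN = {"j", "m"}  # hypothetical domain of individuals
john = Den("e", "j")
runs = Den(("e", "t"), lambda x: x == "j")
everybody = Den((("e", "t"), "t"), lambda p: all(p(x) for x in DOMAIN))

print(apply(john, runs))             # Den(ty='t', value=True)
print(apply(everybody, runs).value)  # False: Mary does not run
try:
    apply(john, everybody)
except TypeError as err:
    print(err)                       # type conflict: e and (('e', 't'), 't')
```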

Potential problems

• What happens if we encounter natural

language expressions that cannot be defined in

terms of individuals (mass nouns, plurals)?

How to extend our ontology? What are the

implications for construction rules?

• What happens if type theory does not allow

composition by function application? E.g. type

conflicts – see coercion stuff!

• Is a syntax-semantics interface using

categories, type theory, and variables

desirable, or should we explore different

approaches – variable free semantics,

‘abstract’ categorial grammar?

Theory-dependent compositionality

problems

• Compositionality problems that arise under

particular assumptions of syntactic or semantic

operations, e.g. negative concord.

• Assume that our semantic composition rule is

function application.

• How to account for the contrast between:

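• Nobody talked to nobody. [English: $\neg\exists x\,\neg\exists y\,\mathit{talk}(x,y)$]

• Nessuno ha parlato con nessuno. (‘Nobody talked to anybody.’) [Italian: $\neg\exists x\,\exists y\,\mathit{talk}(x,y)$]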

• Solutions: different lexical entries (2nd nessuno is not negative), ambiguities (nessuno can mean either $\neg\exists x$ or $\exists x$), different semantic operations from function application (polyadic quantification).
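The lexical-entry solution can be illustrated by computing truth values in two hypothetical models. Both sentences are composed by function application. The only difference is whether the second quantifier is the negative entry or a non-negative one. Polyadic quantification is not shown.

```python
from typing import Callable, Set, Tuple

PEOPLE = ("ann", "bob", "cy")  # hypothetical domain
Pred = Callable[[str], bool]


def no(p: Pred) -> bool:
    """Negative entry: not exists x. p(x)."""
    return not any(p(x) for x in PEOPLE)


def some(p: Pred) -> bool:
    """Non-negative entry: exists x. p(x)."""
    return any(p(x) for x in PEOPLE)


def sentence(subj: Callable[[Pred], bool], obj: Callable[[Pred], bool],
             talked: Set[Tuple[str, str]]) -> bool:
    """Function application: subj(lambda x. obj(lambda y. talk(x, y)))."""
    return subj(lambda x: obj(lambda y: (x, y) in talked))


SILENT: Set[Tuple[str, str]] = set()
CHATTY = {("ann", "bob"), ("bob", "cy"), ("cy", "ann")}

# Two negative quantifiers: the English double-negation reading.
print(sentence(no, no, SILENT), sentence(no, no, CHATTY))      # False True
# Second 'nessuno' as a non-negative entry: the negative-concord reading.
print(sentence(no, some, SILENT), sentence(no, some, CHATTY))  # True False
```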

Processing compositional meaning

• Do ‘repairs’ of compositionality problems (e.g.

coercion in the case of type conflicts) have a

reflection in real time semantic processing

(e.g. slower understanding)?

• How do we deal with ‘enriched’ meanings

(presupposition, implicature): semantics-pragmatics interface? What grammatical and

cognitive mechanisms are involved in

pragmatic reasoning?

• How can processing evidence bear on semantic

theory?

Back to class overview

• Organization of topics: relevance of

compositionality for study of logic, language

and cognition.

• First half of course: start with the role of the

lexicon in compositionality problems. Build up

grammar, semantics-pragmatics interface.

Provide processing evidence to test linguistic

theory.

• Second half of the course: Formal modelling of

compositionality problems, scope, dependency

relations. Balance between complicating

syntax/complicating semantics.