DNA Microarrays - Department of Statistics and Probability

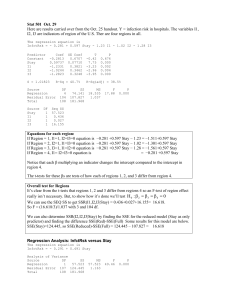

Applied Linear Regression
CSTAT Workshop
March 16, 2007
Vince Melfi

References
• “Applied Linear Regression,” Third Edition, by Sanford Weisberg.
• “Linear Models with R,” by Julian Faraway.
• Countless other books on linear regression, statistical software, etc.

Statistical Packages
• Minitab (we’ll use this today)
• SPSS
• SAS
• R
• Splus
• JMP
• ETC!!

Outline
I. Simple linear regression review
II. Multiple Regression: Adding predictors
III. Inference in Regression
IV. Regression Diagnostics
V. Model Selection

I. Simple Linear Regression Review

Savings Rate Data
Data on savings rate and other variables for 50 countries. We want to explore the effect of these variables on the savings rate.
• SaveRate: Aggregate personal savings divided by disposable personal income. (Response variable.)
• Pop>75: Percent of the population over 75 years old. (One of the predictors.)

[Figure: Scatterplot of SaveRate vs pop>75]

Regression Output
The regression equation (the fitted model) is
SaveRate = 7.152 + 1.099 pop>75

S = 4.29409   R-Sq = 10.0%   R-Sq(adj) = 8.1%
(R-Sq is R², the coefficient of determination.)

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 98.545 | 98.5454 | 5.34 | 0.025 |
| Error | 48 | 885.083 | 18.4392 | | |
| Total | 49 | 983.628 | | | |

The F statistic and its p-value test the model.

Importance of Plots
• Four data sets
• All have
  – Regression line Y = 3 + 0.5 x
  – R² = 66.7%
  – S = 1.24
  – Same t statistics, etc.
• Without looking at plots, the four data sets would seem similar.

Importance of Plots (1)
[Figure: Fitted line plot, y1 = 3.000 + 0.5001 x1; S = 1.23660, R-Sq = 66.7%, R-Sq(adj) = 62.9%]

Importance of Plots (2)
[Figure: Fitted line plot, y2 = 3.001 + 0.5000 x1; S = 1.23721, R-Sq = 66.6%, R-Sq(adj) = 62.9%]
Importance of Plots (3)
[Figure: Fitted line plot, y3 = 3.002 + 0.4997 x1; S = 1.23631, R-Sq = 66.6%, R-Sq(adj) = 62.9%]

Importance of Plots (4)
[Figure: Fitted line plot, y4 = 3.002 + 0.4999 x2; S = 1.23570, R-Sq = 66.7%, R-Sq(adj) = 63.0%]

The model
• $Y_i = \beta_0 + \beta_1 x_i + e_i$, for i = 1, 2, …, n
• “Errors” $e_1, e_2, \dots, e_n$ are assumed to be independent.
• Usually $e_1, e_2, \dots, e_n$ are assumed to have the same standard deviation, σ.
• Often $e_1, e_2, \dots, e_n$ are assumed to be normally distributed.

Least Squares
• The regression line (line of best fit) is based on “least squares.”
• The regression line is the line that minimizes the sum of the squared deviations from the data.
• The least squares line has certain optimality properties.
• The least squares line is denoted $\hat{Y}_i = \hat{\beta}_0 + \hat{\beta}_1 X_i$

Residuals
• The residuals represent the difference between the data and the least squares line: $\hat{e}_i = Y_i - \hat{Y}_i$

[Figure: Scatterplot of Y vs X with the least squares line and residuals]

Checking assumptions
• Residuals are the main tool for checking model assumptions, including linearity and constant variance.
• Plotting the residuals versus the fitted values is always a good idea, to check linearity and constant variance.
• Histograms and Q-Q plots (normal probability plots) of residuals can help to check the normality assumption.
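The least squares fit and its residuals can be sketched in a few lines of plain Python. This is only an illustration: it assumes the four "Importance of Plots" data sets are Anscombe's quartet (the slides do not name them, but the fitted lines match) and uses the quartet's first data set. The helper name `fit_line` is made up for this sketch.

```python
import math

# Assumption: first data set of Anscombe's quartet (not named in the slides).
x = [10, 8, 13, 9, 11, 14, 6, 4, 12, 7, 5]
y = [8.04, 6.95, 7.58, 8.81, 8.33, 9.96, 7.24, 4.26, 10.84, 4.82, 5.68]


def fit_line(x, y):
    """Return (intercept, slope) of the least squares line for y on x."""
    n = len(x)
    xbar = sum(x) / n
    ybar = sum(y) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return ybar - slope * xbar, slope


b0, b1 = fit_line(x, y)

# Fitted values and residuals: e_hat_i = Y_i - Y_hat_i
fitted = [b0 + b1 * xi for xi in x]
residuals = [yi - fi for yi, fi in zip(y, fitted)]

# Residual standard deviation S, with n - 2 degrees of freedom
rss = sum(e ** 2 for e in residuals)
s = math.sqrt(rss / (len(x) - 2))

print(f"y = {b0:.3f} + {b1:.4f} x,  S = {s:.4f}")
for fi, ei in zip(fitted, residuals):
    print(f"fitted {fi:6.3f}   residual {ei:+.3f}")
```

If the data assumption holds, the printed line should agree with the fitted line plot for y1. Plotting `residuals` against `fitted` is the "residuals versus fits" check described above.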
“Four in one” plot from Minitab
[Figure: Residual Plots for SaveRate, four panels: Normal Probability Plot (Percent vs Residual), Versus Fits (Residual vs Fitted Value), Histogram (Frequency vs Residual), Versus Order (Residual vs Observation Order)]

Coefficient of determination (R²)
Residual sum of squares, aka sum of squares for error:

$$RSS = SSE = \sum_{i=1}^{n} \hat{e}_i^2$$

Total sum of squares:

$$SST = TSS = \sum_{i=1}^{n} (y_i - \bar{y})^2$$

Coefficient of determination:

$$R^2 = \frac{TSS - RSS}{TSS}$$

R²
• The coefficient of determination, R², measures the proportion of the variability in Y that is explained by the linear relationship with X.
• In simple linear regression, it is also the square of the Pearson correlation coefficient between X and Y.

II. Multiple Regression: Adding Predictors

Adding a predictor
• Recall: Fitted model was SaveRate = 7.152 + 1.099 pop>75 (p-value for the test of whether pop>75 is significant was 0.025.)
• Another predictor: DPI (per-capita income)
• Fitted model: SaveRate = 8.57 + 0.000996 DPI (p-value for DPI: 0.124)

Adding a predictor (2)
• Model with both pop>75 and DPI is SaveRate = 7.06 + 1.30 pop>75 - 0.00034 DPI
• p-values are 0.100 and 0.738 for pop>75 and DPI, respectively
• The sign of the coefficient of DPI has changed!
• pop>75 was significant alone, but neither it nor DPI is significant when both are in the model!

Adding a predictor (3)
• What happened??
• The predictors pop>75 and DPI are highly correlated

[Figure: Fitted line plot, pop>75 = 1.158 + 0.001025 DPI; S = 0.804599, R-Sq = 61.9%, R-Sq(adj) = 61.1%]

Added variable plots and partial correlation
1. Residuals from a fit of SaveRate versus pop>75 give the variability in SaveRate that’s not explained by pop>75.
2. Residuals from a fit of DPI versus pop>75 give the variability in DPI that’s not explained by pop>75.
3. A fit of the residuals from (1) versus the residuals from (2) gives the relationship between SaveRate and DPI after adjusting for pop>75. This is called an “added variable plot.”
4. The correlation between the residuals from (1) and the residuals from (2) is the “partial correlation” between SaveRate and DPI adjusted for pop>75.

Added variable plot
Note that the slope term, -0.000341, is the same as the slope term for DPI in the two-predictor model.

[Figure: Fitted line plot, RESSRvspop>75 = 0.0000 - 0.000341 RESDPIvspop>75; S = 4.28891, R-Sq = 0.2%, R-Sq(adj) = 0.0%]

Scatterplot matrices (Matrix Plots)
• With one predictor X, a scatterplot of Y vs. X is very informative.
• With more than one predictor, scatterplots of Y vs. each of the predictors, and of each of the predictors vs. each other, are needed.
• A scatterplot matrix (or matrix plot) is just an organized display of these plots.

[Figure: Matrix plot of SaveRate, pop<15, pop>75, DPI, and changeDPI]

Changes in R²
• Consider adding a predictor X2 to a model that already contains the predictor X1
• Let $R^2_1$ be the R² value for the fit of Y vs. X1, and let $R^2_2$ be the R² value for the fit of Y vs. X2
Changes in R² (2)
• The R² value for the multiple regression fit is always at least as large as $R^2_1$ and $R^2_2$
• The R² value for the multiple regression fit of Y versus X1 and X2 may be
  – less than $R^2_1 + R^2_2$ (if the two predictors are explaining the same variation)
  – equal to $R^2_1 + R^2_2$ (if the two predictors measure different things)
  – more than $R^2_1 + R^2_2$ (e.g. the response is the area of a rectangle, and the two predictors are length and width)

Multiple regression model
• Response variable Y
• Predictors X1, X2, …, Xp

$$Y_i = \beta_0 + \beta_1 X_{1i} + \beta_2 X_{2i} + \dots + \beta_p X_{pi} + e_i$$

• Same assumptions on errors $e_i$ (independent, constant variance, normality)

III. Inference in Regression

Inference in regression
• Most inference procedures assume independence, constant variance, and normality of the errors.
• Most are “robust” to departures from normality, meaning that the p-values, confidence levels, etc. are approximately correct even if normality does not hold.
• In general, techniques like the bootstrap can be used when normality is suspect.

New data set
• Response variable:
  – Fuel = per-capita fuel consumption (times 1000)
• Predictors:
  – Dlic = proportion of the population who are licensed drivers (times 1000)
  – Tax = gasoline tax rate
  – Income = per person income in thousands of dollars
  – logMiles = base 2 log of federal-aid highway miles in the state

t tests
Regression Analysis: Fuel versus Tax, Dlic, Income, logMiles
The regression equation is
Fuel = 154 - 4.23 Tax + 0.472 Dlic - 6.14 Income + 18.5 logMiles

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 154.2 | 194.9 | 0.79 | 0.433 |
| Tax | -4.228 | 2.030 | -2.08 | 0.043 |
| Dlic | 0.4719 | 0.1285 | 3.67 | 0.001 |
| Income | -6.135 | 2.194 | -2.80 | 0.008 |
| logMiles | 18.545 | 6.472 | 2.87 | 0.006 |

The T column holds the t statistics and the P column holds the p-values.

t tests (2)
• Each t statistic tests the hypothesis that a particular slope parameter is zero.
• The formula is t = (coefficient estimate)/(standard error)
• Degrees of freedom are n-(p+1)
• The p-values given are for the two-sided alternative
• This is like simple linear regression

F tests
• General structure:
  – Ha: Large model
  – H0: Smaller model, obtained by setting some parameters in the large model to zero, or equal to each other, or equal to a constant
  – $RSS_{AH}$ = residual sum of squares after fitting the large (alternative hypothesis) model
  – $RSS_{NH}$ = residual sum of squares after fitting the smaller (null hypothesis) model
  – $df_{NH}$ and $df_{AH}$ are the corresponding degrees of freedom

F tests (2)
• Test statistic:

$$F = \frac{(RSS_{NH} - RSS_{AH}) / (df_{NH} - df_{AH})}{RSS_{AH} / df_{AH}}$$

• Null distribution: F distribution with $df_{NH} - df_{AH}$ numerator and $df_{AH}$ denominator degrees of freedom

F test example
• Can the “economic” variables Tax and Income be dropped from the model with all four predictors?
• AH model includes all predictors
• NH model includes only Dlic and logMiles
• Fit both models and get RSS and df values

F test example (2)
• $RSS_{AH}$ = 193700; $df_{AH}$ = 46
• $RSS_{NH}$ = 243006; $df_{NH}$ = 48

$$F = \frac{(243006 - 193700)/(48 - 46)}{193700/46} = 5.85$$

• P-value is the area to the right of 5.85 under an F(2,46) distribution, approx. 0.0054
• There’s pretty strong evidence that removing both Tax and Income is unwise

Another F test example
• Question: Does it make sense that the two “economic” predictors should have the same coefficient?
• Ha: Y = β0 + β1 Tax + β2 Dlic + β3 Income + β4 logMiles + error
• H0: Y = β0 + β1 Tax + β2 Dlic + β1 Income + β4 logMiles + error
• Note: H0 can be rewritten as Y = β0 + β1 (Tax + Income) + β2 Dlic + β4 logMiles + error
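The first F test example (dropping Tax and Income) can be reproduced from the RSS and df values alone. The sketch below is plain Python; the function names are made up for illustration. The p-value uses a closed form that holds only when the numerator has 2 degrees of freedom, P(F > f) = (1 + 2f/d)^(-d/2) for an F(2, d) distribution. For other degrees of freedom a statistics library would be needed.

```python
def nested_f_test(rss_nh, df_nh, rss_ah, df_ah):
    """Return (F, numerator df, denominator df) for comparing nested models."""
    num_df = df_nh - df_ah
    f_stat = ((rss_nh - rss_ah) / num_df) / (rss_ah / df_ah)
    return f_stat, num_df, df_ah


def f_upper_tail_num_df_2(f_stat, den_df):
    """P(F > f_stat) for an F(2, den_df) distribution.

    Closed form valid only for numerator df = 2.
    """
    return (1.0 + 2.0 * f_stat / den_df) ** (-den_df / 2.0)


# NH: Dlic and logMiles only; AH: all four predictors
f_stat, num_df, den_df = nested_f_test(rss_nh=243006, df_nh=48,
                                       rss_ah=193700, df_ah=46)
p_value = f_upper_tail_num_df_2(f_stat, den_df)

print(f"F({num_df},{den_df}) = {f_stat:.2f}, p-value = {p_value:.4f}")
```

The same `nested_f_test` function applies to any pair of nested models once both have been fit; only the p-value helper is specific to the two-parameter case.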
Another F test example (2)
• Fit full model (AH)
• Create new predictor “TI” by adding Tax and Income, and fit a model with TI, Dlic, and logMiles (NH)

$$F = \frac{(195487 - 193700)/(47 - 46)}{193700/46} = 0.424$$

• P-value is the area to the right of 0.424 under an F(1,46) distribution, approx. 0.518
• This suggests that the simpler model with the same coefficient for Tax and Income fits well.

Removing one predictor
• We have two ways to test whether one predictor can be removed from the model:
  – t test
  – F test
• The tests are equivalent, in the sense that t² = F, and that the p-values will be identical.

Confidence regions
• Confidence intervals for one parameter use the familiar t-interval.
• For example, to form a 95% confidence interval for the coefficient of Income in the context of the full (four-predictor) model:
• -6.135 ± (2.013)(2.194) = -6.135 ± 4.417, where 2.013 comes from the t distribution with 46 df, and -6.135 and 2.194 come from the Minitab output.

Joint confidence regions
• Joint confidence regions for two or more parameters are more complex, and use the F distribution in place of the t distribution.
• Minitab (and SPSS, and …) can’t draw these easily
• On the next page is a joint confidence region for the parameters of Dlic and Tax, drawn in R.

[Figure: Joint confidence region for Dlic and Tax, with dotted lines indicating individual confidence intervals for the two. The boundary of the confidence region and the point (0,0) are marked; axes are Tax (horizontal) and Dlic (vertical).]

Prediction
• Given a new set of predictor values x1, x2, …, xp, what’s the predicted response?
• It’s easy to answer this: Just plug the new predictors into the fitted regression model:

$$\hat{Y} = \hat{\beta}_0 + \hat{\beta}_1 x_1 + \hat{\beta}_2 x_2 + \dots + \hat{\beta}_p x_p$$

• But how do we assess the uncertainty in the prediction? How do we form a confidence interval?
Predicted Values for New Observations

| New Obs | Fit | SE Fit | 95% CI | 95% PI |
|---|---|---|---|---|
| 1 | 613.39 | 12.44 | (588.34, 638.44) | (480.39, 746.39) |

• 95% CI: Confidence interval for the average fuel consumption for states with Dlic = 900, Income = 28, logMiles = 15, and Tax = 17
• 95% PI: Prediction interval for the fuel consumption for a state with Dlic = 900, Income = 28, logMiles = 15, and Tax = 17

Values of Predictors for New Observations

| New Obs | Dlic | Income | logMiles | Tax |
|---|---|---|---|---|
| 1 | 900 | 28.0 | 15.0 | 17.0 |

IV. Regression Diagnostics

Diagnostics
• Want to look for points that have a large influence on the fitted model
• Want to look for evidence that one or more model assumptions are untrue.
• Tools:
  – Residuals
  – Leverage
  – Influence and Cook’s Distance

Leverage
• A point whose predictor values are far from the “typical” predictor values has high leverage.
• For a high leverage point, the fitted value $\hat{Y}_i$ will be close to the data value $Y_i$.
• A rule of thumb: Any point with leverage larger than 2(p+1)/n is interesting.
• Most statistical packages can compute leverages.

[Figure: Scatterplot of y3 vs x1 with each point labeled by its leverage; labels range from 0.090909 to 0.318182]

[Figure: Scatterplot of Leverage vs Index for the 50 countries in the savings data; Libya has the highest leverage, with UnitedStates, Japan, and Ireland next]

Influential Observations
• A data point is influential if it has a large effect on the fitted model.
• Put another way, an observation is influential if the fitted model will change a lot if the observation is deleted.
• Cook’s Distance is a measure of the influence of an observation.
• It may make sense to refit the model after removing a few of the most influential observations.

[Figure: Scatterplot of y3 vs x1 with each point labeled by its Cook's Distance (measure of influence); labels range from 0.00035 to 1.39285. Annotations mark a “High Influence” point and a “High leverage, low influence” point.]

[Figure: Scatterplot of Cook's Distance vs Index for the 50 countries in the savings data; Libya has the largest Cook's Distance, with Japan and Zambia next]

V. Model Selection

Model Selection
• Question: With a large number of potential predictors, how do we choose the predictors to include in the model?
• Want good prediction, but parsimony: Occam’s Razor.
• Also can be thought of as a bias-variance tradeoff.

Model Selection Example
• Data on all 50 states, from the 1970s
  – Life.Exp = Life expectancy (response)
  – Population (in thousands)
  – Income = per-capita income
  – Illiteracy (in percent of population)
  – Murder = murder rate per 100,000
  – HS.Grad (in percent of population)
  – Frost = mean # days with min. temp < 32F
  – Area = land area in square miles
Forward Selection
• Choose a cutoff α
• Start with no predictors
• At each step, add the predictor with the lowest p-value less than α
• Continue until there are no unused predictors with p-values less than α

Stepwise Regression: Life.Exp versus Population, Income, ...
Forward selection. Alpha-to-Enter: 0.25
Response is Life.Exp on 7 predictors, with N = 50

| | Step 1 | Step 2 | Step 3 | Step 4 |
|---|---|---|---|---|
| Constant | 72.97 | 70.30 | 71.04 | 71.03 |
| Murder | -0.284 | -0.237 | -0.283 | -0.300 |
| T-Value | -8.66 | -6.72 | -7.71 | -8.20 |
| P-Value | 0.000 | 0.000 | 0.000 | 0.000 |
| HS.Grad | | 0.044 | 0.050 | 0.047 |
| T-Value | | 2.72 | 3.29 | 3.14 |
| P-Value | | 0.009 | 0.002 | 0.003 |
| Frost | | | -0.0069 | -0.0059 |
| T-Value | | | -2.82 | -2.46 |
| P-Value | | | 0.007 | 0.018 |
| Population | | | | 0.00005 |
| T-Value | | | | 2.00 |
| P-Value | | | | 0.052 |
| S | 0.847 | 0.796 | 0.743 | 0.720 |
| R-Sq | 60.97 | 66.28 | 71.27 | 73.60 |
| R-Sq(adj) | 60.16 | 64.85 | 69.39 | 71.26 |
| Mallows Cp | 16.1 | 9.7 | 3.7 | 2.0 |

Variations on FS
• Backward elimination
  – Choose cutoff α
  – Start with all predictors in the model
  – Eliminate the predictor with the highest p-value that is greater than α
  – Etc.
• Stepwise: Allow addition or elimination at each step (hybrid of FS and BE)

All subsets
• Fit all possible models.
• Based on a “goodness” criterion, choose the model that fits best.
• Goodness criteria include AIC, BIC, Adjusted R², Mallows’ Cp
• Some of the criteria will be described next

Notation
• RSS* = Residual sum of squares for the current model
• p* = Number of terms (including intercept) in the current model
• n = number of observations
• s² = RSS/(n-(p+1)) = Estimate of σ² from the model with all p predictors and an intercept term.

Goodness criteria
• Smaller is better for AIC, BIC, Cp*. Larger is better for adjR²
• AIC = n log(RSS*/n) + 2p*
• BIC = n log(RSS*/n) + p* log(n)
• Cp* = RSS*/s² + 2p* - n
• $\text{adj}R^2 = 1 - \frac{n-1}{n-(p+1)}(1 - R^2)$, where p is the number of predictors in the current model, so p + 1 = p*

Best Subsets Regression: Life.Exp versus Population, Income, ...
Response is Life.Exp

| Vars | R-Sq | R-Sq(adj) | Mallows Cp | S |
|---|---|---|---|---|
| 1 | 61.0 | 60.2 | 16.1 | 0.84732 |
| 2 | 66.3 | 64.8 | 9.7 | 0.79587 |
| 3 | 71.3 | 69.4 | 3.7 | 0.74267 |
| 4 | 73.6 | 71.3 | 2.0 | 0.71969 |
| 5 | 73.6 | 70.6 | 4.0 | 0.72773 |
| 6 | 73.6 | 69.9 | 6.0 | 0.73608 |
| 7 | 73.6 | 69.2 | 8.0 | 0.74478 |

The output also marks with an X which of Population, Income, Illiteracy, Murder, HS.Grad, Frost, and Area enter each model. The models with 1 to 4 variables have the same fit statistics as the forward selection steps (Murder; then HS.Grad; then Frost; then Population), and the 7-variable model contains all predictors.

Model selection can overstate significance
• Generate Y and X1, X2, …, X50
• All are independent and standard normal.
• So none of the predictors are related to the response.
• Fit the full model and look at the overall F test.
• Use model selection to choose a “good” smaller model, and look at its overall F test

The full model
• Results from fitting model with all 50 predictors
• Note that the F test is not significant

S = 0.915237   R-Sq = 57.6%   R-Sq(adj) = 14.3%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 50 | 55.7093 | 1.1142 | 1.33 | 0.160 |
| Residual Error | 49 | 41.0453 | 0.8377 | | |
| Total | 99 | 96.7546 | | | |

The “good” small model
• Run FS with α = 0.05
• Predictors x38, x41, and x24 are chosen.
• Fit that three-predictor model. Now the F test is highly significant

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 3 | 20.9038 | 6.9679 | 8.82 | 0.000 |
| Residual Error | 96 | 75.8508 | 0.7901 | | |
| Total | 99 | 96.7546 | | | |

What’s left?
• Weighted least squares
• Tests for lack of fit
• Transformations of response and predictors
• Analysis of Covariance
• Etc.
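As a closing check on the goodness criteria from the Model Selection section, the formulas can be applied to the Life.Exp output. This is a sketch in plain Python with made-up function names. It assumes each model's RSS can be recovered from its reported S through S² = RSS/(n - p*), and that s² for Cp is the squared S of the 7-predictor model. The R-Sq inputs are taken from the forward selection output.

```python
import math


def aic(rss, n, p_star):
    return n * math.log(rss / n) + 2 * p_star


def bic(rss, n, p_star):
    return n * math.log(rss / n) + p_star * math.log(n)


def mallows_cp(rss, s2_full, n, p_star):
    return rss / s2_full + 2 * p_star - n


def adj_r2(r2, n, p_star):
    # p_star counts the intercept, so n - p_star = n - (p + 1)
    return 1 - (n - 1) / (n - p_star) * (1 - r2)


n = 50
s2_full = 0.74478 ** 2   # S for the model with all 7 predictors, squared

# (number of predictors, S, R-Sq as a fraction) from the Minitab output
models = [(1, 0.84732, 0.6097), (2, 0.79587, 0.6628),
          (3, 0.74267, 0.7127), (4, 0.71969, 0.7360)]

results = {}
for k, s, r2 in models:
    p_star = k + 1                      # predictors plus intercept
    rss = s ** 2 * (n - p_star)         # assumption: S^2 = RSS / (n - p*)
    results[k] = {
        "AIC": aic(rss, n, p_star),
        "BIC": bic(rss, n, p_star),
        "Cp": mallows_cp(rss, s2_full, n, p_star),
        "adjR2": adj_r2(r2, n, p_star),
    }
    print(k, {name: round(value, 3) for name, value in results[k].items()})
```

Under these assumptions the Cp and adjusted R² values should agree with the Mallows Cp and R-Sq(adj) columns up to rounding. AIC and BIC are not reported by Minitab here, so they have no column to compare against.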