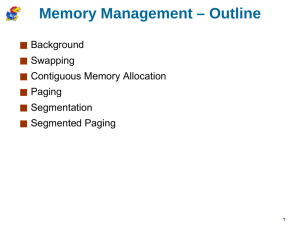

slides - Simon Fraser University

School of Computing Science, Simon Fraser University
CMPT 300: Operating Systems I
Ch 8: Memory Management
Dr. Mohamed Hefeeda

Objectives
To provide a detailed description of various ways of organizing memory hardware
To discuss various memory-management techniques, including paging and segmentation
To provide a detailed description of the Intel Pentium, which supports both pure segmentation and segmentation with paging

Background
A program must be brought (from disk) into memory and placed within a process to be run
Main memory and registers are the only storage that the CPU can access directly
The CPU generates a stream of addresses
Memory does not distinguish between instructions and data
A register is accessed in one CPU clock cycle
A main-memory access can take many cycles
Cache sits between main memory and CPU registers to accelerate memory access

Binding of Instructions and Data to Memory
Address binding can happen at:
Compile time: if the memory location is known a priori, absolute code can be generated
  • Must recompile the code if the starting location changes
Load time: must generate relocatable code if the memory location is not known at compile time
Execution time: binding is delayed until run time
  • The process can be moved during its execution from one memory segment to another
  • Needs hardware support for address maps
  • Most common (more on this later)

[Figure: Multi-step Processing of a User Program]

Logical vs. Physical Address Space
Logical address: generated by the CPU; also referred to as a virtual address
  • User programs deal with logical addresses; they never see the real physical addresses
Physical address: the address seen by the memory unit
Both are the same if address binding is done at compile time or load time
They differ if address binding is done at execution time, so we need to map logical addresses to physical ones

Memory Management Unit (MMU)
MMU: hardware device that maps virtual addresses to physical addresses
The MMU also ensures memory protection
  • Protects the OS from user processes, and protects user processes from one another
Example: relocation register

Memory Protection: Base and Limit Registers
A pair of base and limit registers defines the logical address space
Later, we will see other mechanisms (paging hardware)

Memory Allocation
Main memory is usually divided into two partitions:
  • One for the resident OS, usually held in low memory with the interrupt vector
  • Another for user processes
The OS allocates memory to processes
Contiguous memory allocation
  • A process occupies a contiguous space in memory
Non-contiguous memory allocation (paging)
  • Different parts (pages) of the process can be scattered in memory

Contiguous Allocation (cont'd)
When a process arrives, the OS needs to find a large-enough hole in memory to accommodate it
The OS maintains information about:
  • allocated partitions
  • free partitions (holes)
Holes have different sizes and are scattered in memory
Which hole to choose for a process?
[Figure: four memory layouts containing the OS and processes 2, 5, 8, 9, and 10]

Contiguous Allocation (cont'd)
Which hole to choose for a process?
First-fit: allocate the first hole that is big enough
Best-fit: allocate the smallest hole that is big enough
  • Must search the entire list, unless it is ordered by size
  • Produces the smallest leftover hole
Worst-fit: allocate the largest hole
  • Must also search the entire list
  • Produces the largest leftover hole
Performance: first-fit and best-fit perform better than worst-fit in terms of speed and storage utilization
What are the pros and cons of contiguous allocation?
  • Pros: simple to implement
  • Cons: memory fragmentation

Contiguous Allocation: Fragmentation
External fragmentation
  • Enough total free memory exists to satisfy a request, but it is not contiguous
  • Solutions to reduce external fragmentation? Memory compaction
  • Compaction shuffles memory contents to place all free memory together in one large block
  • Compaction is possible only if relocation is dynamic and is done at execution time
Internal fragmentation
  • Occurs when memory has fixed-size partitions (pages)
  • Allocated memory may be larger than requested; the difference is internal to the allocated partition and cannot be used

Paging: Non-contiguous Memory Allocation
A process is allocated memory wherever it is available
Divide physical memory into fixed-size blocks called frames
  • Size is a power of 2, between 512 bytes and 8,192 bytes
The OS keeps track of all free frames
Divide logical memory into blocks of the same size called pages
To run a program of size n pages, the OS needs to find n free frames and load the program
Set up a page table to translate logical addresses to physical addresses

Address Translation Scheme
An address generated by the CPU is divided into:
  • Page number (p): used as an index into a page table, which contains the base address of each page in physical memory
  • Page offset (d): combined with the base address to define the physical memory address that is sent to the memory unit
Address layout: page number p (m - n bits) | page offset d (n bits)
Address space = $2^m$ entries
Page size = $2^n$ entries
Number of pages = $2^{m-n}$

[Figure: Paging Hardware]

[Figure: Paging Model of Logical and Physical Memory]

Paging Example
Page size = 4 bytes
Memory size = 8 frames = 32 bytes
Logical address 0: page = 0/4 = 0, offset = 0%4 = 0; maps to frame 5 + offset 0, i.e., physical address 5 × 4 + 0 = 20
Logical address 13: page = 13/4 = 3 (integer division), offset = 13%4 = 1; maps to frame 2 + offset 1, i.e., physical address 2 × 4 + 1 = 9

Free Frames
[Figure: free-frame list before allocation and after allocation]
Note: every process must have its own page table

Implementation of Page Table
The page table is kept in main memory
Page-table base register (PTBR) points to the page table
Page-table length register (PTLR) indicates the size of the page table
What is the downside of keeping the page table in memory?
  • Memory slowdown: every data/instruction access requires two memory accesses, one for the page table and one for the data/instruction
Solution? Use a special fast-lookup associative memory to accelerate memory access
  • Called the translation look-aside buffer (TLB)
  • Stores part of the page table (of the currently running process)
TLBs store a process identifier (address-space identifier) in each TLB entry to provide address-space protection for that process

[Figure: Paging Hardware With TLB]

Effective Access Time
Associative lookup takes $\varepsilon$ time units
Assume the memory cycle time is $t_m$ time units; typically $\varepsilon \ll t_m$
Hit ratio $\alpha$: fraction of times a page number is found in the associative memory
Effective access time (EAT) = ?
$$\mathrm{EAT} = (\varepsilon + t_m)\,\alpha + (\varepsilon + t_m + t_m)(1 - \alpha) = \varepsilon + (2 - \alpha)\,t_m$$
As $\alpha$ approaches 1, EAT approaches $\varepsilon + t_m \approx t_m$ (since $\varepsilon \ll t_m$)

Memory Protection
Memory protection is implemented by associating protection bits with each frame
  • Can specify whether a page is read-only, write, or read-write
Another bit (valid-invalid) may be used
  • "valid" indicates that the page is in the process's address space, i.e., a legal page to access
  • "invalid" indicates that the page is not in the process's address space

[Figure: Valid (v) or Invalid (i) Bit in a Page Table]

Shared Pages
Suppose that you have code (e.g., emacs) that is being used by several processes at the same time. Does every process need to have a separate copy of that code in memory? No.
Put the code in "shared pages"
  • One copy of read-only (reentrant) code is shared among processes, e.g., text editors, compilers, …
  • Shared code must appear in the same location in the logical address space of all processes
What if the shared code creates per-process data?
  • Each process keeps "private pages" for data
  • Private pages can appear anywhere in the address space

[Figure: Example: Shared/Private Pages, showing a private page for process P1 and the shared pages]

Structure of the Page Table
Assume that we have a 32-bit address space and the page size is 1024 bytes
How many pages do we have? $2^{32} / 2^{10} = 2^{22}$ pages
What is the maximum size of the page table, assuming that each entry takes 4 bytes? $2^{22} \times 4$ bytes = 16 Mbytes
Anything wrong with this number? Huge size (for each process, mostly unused)
Solutions?
  • Hierarchical paging
  • Hashed page tables
  • Inverted page tables

Hierarchical Page Tables
Break up the logical address space into multiple page tables
A simple technique is a two-level page table

[Figure: Two-Level Page-Table Scheme]

Two-Level Paging Example
A logical address (on a 32-bit machine with 1K page size) is divided into:
  • a page number consisting of 22 bits
  • a page offset consisting of 10 bits
Since the page table is paged, the page number is further divided into:
  • a 12-bit page number
  • a 10-bit page offset
Thus, a logical address is laid out as follows: p1 (12 bits) | p2 (10 bits) | d (10 bits)
where p1 is an index into the outer page table, and p2 is the displacement within the page of the outer page table

Address Translation in 2-Level Paging
Note: the OS creates the outer page table and one page of the inner page table. More pages of the inner table are created on demand.

Three-level Paging Scheme
  • For a 64-bit address space, we may have a page table like: [figure]
  • But the outer page table is huge, so we may use three levels: [figure]
  • The outer page table is still large!
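
The 12/10/10 split from the two-level paging example can be sketched in a few lines of Python. This is only an illustration of the bit arithmetic, assuming the field widths given in the example; the names `P1_BITS`, `P2_BITS`, `OFFSET_BITS`, and `split_address` are hypothetical and do not come from the slides.

```python
# Field widths taken from the two-level paging example:
# 12-bit outer index (p1), 10-bit inner index (p2), 10-bit page offset (d).
P1_BITS, P2_BITS, OFFSET_BITS = 12, 10, 10


def split_address(addr):
    """Split a 32-bit logical address into the tuple (p1, p2, d)."""
    if not 0 <= addr < (1 << 32):
        raise ValueError("expected a 32-bit logical address")
    d = addr & ((1 << OFFSET_BITS) - 1)                    # low 10 bits
    p2 = (addr >> OFFSET_BITS) & ((1 << P2_BITS) - 1)      # next 10 bits
    p1 = addr >> (OFFSET_BITS + P2_BITS)                   # top 12 bits
    return p1, p2, d


# Build an address with p1 = 3, p2 = 5, d = 7 and split it again.
example = (3 << 20) | (5 << 10) | 7
print(split_address(example))  # (3, 5, 7)
```

In a real MMU, p1 would select an entry in the outer page table, p2 an entry in the inner table that the outer entry points to, and d would be appended to the resulting frame's base address.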

Hashed Page Tables
Common in address spaces > 32 bits
Fixed-size hash table
The page number is hashed into a page table
Collisions may occur
  • An entry in the page table may contain a chain of elements that hash to the same location

Hashed Page Table
The page number is hashed
If there are multiple entries, search for the correct one
  • The cost of searching is linear in the worst case!

Inverted Page Table
One entry for each frame of physical memory
Each entry has the page number stored in that frame and information about the process (PID) that owns that page

Inverted Page Table (cont'd)
Pros and cons of the inverted page table?
Pros
  • Decreases the memory needed to store each page table
Cons
  • Increases the time needed to search the table when a page reference occurs; this can be alleviated by using a hash table to reduce the search time
  • Difficult to implement shared pages, because there is only one entry for each frame in the page table, i.e., one virtual address for each frame
Examples: 64-bit UltraSPARC and PowerPC

Segmentation
Memory-management scheme that supports the user view of memory
A program is a collection of segments
A segment is a logical unit such as: main program, procedure, function, method, object, local variables, global variables, common block, stack, symbol table, arrays

[Figure: Logical View of Segmentation, with segments 1 to 4 in user space mapped to physical memory space]

Segmentation Architecture
A logical address has the form: <segment-number, offset>
The segment table maps logical addresses to physical addresses
Each entry has:
  • Base: starting physical address of the segment
  • Limit: length of the segment
Segment-table base register (STBR) points to the location of the segment table in memory

[Figure: Segmentation Hardware]

[Figure: Segmentation Example]

Segmentation Architecture (cont'd)
Protection
With each entry in the segment table associate:
  • a validation bit (= 0 means illegal segment)
  • read/write/execute privileges
Protection bits are associated with segments; code sharing occurs at the segment level (more meaningful)
Segments vary in length
  • Memory allocation is a dynamic storage-allocation problem
Problem? External fragmentation
Solution? Segmentation with paging: divide a segment into pages; the pages can be anywhere in memory
  • Gets the benefits of segmentation (user's view) and paging (reduced fragmentation)

Example: Intel Pentium
Supports segmentation and segmentation with paging
The CPU generates a logical address
  • The logical address is given to the segmentation unit, which produces a linear address
  • The linear address is given to the paging unit, which generates the physical address in main memory

Pentium Segmentation Unit
Segment size up to 4 GB
Max number of segments per process: 16 K
  • 8 K private segments, which use the local descriptor table
  • 8 K shared segments, which use the global descriptor table

Pentium Paging Unit
Page size is either:
  • 4 KB: two-level paging (p1 | p2 | d), or
  • 4 MB: one-level paging

Linux on Pentium
Linux is designed to run on various processors, so it uses three-level paging (to support 64-bit architectures)
  • On Pentium, the middle directory size is set to 0
Limited use of segmentation
  • On Pentium, Linux uses only six segments

Summary
The CPU generates logical (virtual) addresses
  • Binding to physical addresses can be static (compile time) or dynamic (load or run time)
Contiguous memory allocation
  • First-, best-, and worst-fit
  • Fragmentation (external)
Paging: non-contiguous memory allocation
  • The logical address space is divided into pages, which are mapped to memory frames using a page table
  • Page table (one table for each process)
  • Access time: use a cache (TLB)
  • Size: use two- and three-level tables for 32-bit address spaces; use hashed and inverted page tables for > 32 bits
Segmentation: variable size, user's view of memory
Segmentation + paging: example Intel Pentium
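
The summary's point that pages are mapped to frames through a page table can be made concrete with the paging example from earlier in these slides (4-byte pages, logical addresses 0 and 13). The following is a minimal sketch, not a model of real paging hardware: the names `PAGE_SIZE`, `PAGE_TABLE`, and `translate` are hypothetical, and the table contains only the two mappings the example actually states (page 0 to frame 5, page 3 to frame 2).

```python
PAGE_SIZE = 4  # bytes per page, as in the paging example

# Only the mappings stated in the example; pages 1 and 2 are left out
# because the text does not give their frames.
PAGE_TABLE = {0: 5, 3: 2}


def translate(logical_addr, page_table=PAGE_TABLE, page_size=PAGE_SIZE):
    """Translate a logical address to a physical address via the page table."""
    page, offset = divmod(logical_addr, page_size)  # page number p, offset d
    if page not in page_table:
        raise LookupError(f"page {page} has no mapping in this sketch")
    frame = page_table[page]
    return frame * page_size + offset


print(translate(0))   # 20  (frame 5, offset 0)
print(translate(13))  # 9   (frame 2, offset 1)
```

Because the page size is a power of 2, hardware would obtain the page number and offset by splitting the address bits rather than dividing; `divmod` is used here only for readability.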