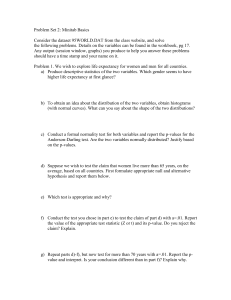

1.1 Basic Equations

Two-Sample Problems – Means

1. Comparing two (unpaired) populations
2. Assume: 2 SRSs, independent samples, Normal populations

Make an inference for their difference: $\mu_1 - \mu_2$

Sample from population 1: $n_1$, $\bar{x}_1$, $s_1$
Sample from population 2: $n_2$, $\bar{x}_2$, $s_2$

S.E. – standard error in the two-sample process

$$\text{S.E.} = \sqrt{\frac{s_1^2}{n_1} + \frac{s_2^2}{n_2}}$$

Confidence Interval: Estimate ± margin of error

$$(\bar{x}_1 - \bar{x}_2) \pm t^* \cdot \text{S.E.}$$

Significance Test: $\mu_1$, $\mu_2$ are the population means being tested

$H_0: \mu_1 - \mu_2 = 0$ (or $\mu_1 = \mu_2$)

$df = \min(n_1 - 1,\ n_2 - 1)$

$$\text{Test stat: } t = \frac{(\bar{x}_1 - \bar{x}_2) - 0}{\text{S.E.}} = \frac{\bar{x}_1 - \bar{x}_2}{\sqrt{\dfrac{s_1^2}{n_1} + \dfrac{s_2^2}{n_2}}}$$

Using the Calculator

Confidence Interval:
On calculator: STAT, TESTS, 0:2-SampTInt…
Given data, need to enter: list locations, C-Level
Given stats, need to enter, for each sample: $\bar{x}$, $s$, $n$ and then C-Level
Select input (Data or Stats), enter appropriate info, then Calculate

Using the Calculator

Significance Test:
On calculator: STAT, TESTS, 4:2-SampTTest…
Given data, need to enter: list locations, Ha
Given stats, need to enter, for each sample: $\bar{x}$, $s$, $n$ and then Ha
Select input (Data or Stats), enter appropriate info, then Calculate or Draw
Output: Test stat, p-value

Ex 1. Is one model of camp stove any different from another at boiling water, at the 5% significance level?

Model 1: $n_1 = 10$, $\bar{x}_1 = 11.4$, $s_1 = 2.5$
Model 2: $n_2 = 12$, $\bar{x}_2 = 9.9$, $s_2 = 3.0$

H0:
Ha:
Test stat: t =
p-value:

Ex 2. Is there evidence that children get more REM sleep than adults at the 1% significance level?

Children: $n_1 = 11$, $\bar{x}_1 = 2.8$, $s_1 = 0.5$
Adults: $n_2 = 13$, $\bar{x}_2 = 2.1$, $s_2 = 0.7$

H0:
Ha:
Test stat: t =
p-value:

Ex 3. Create a 98% C.I. for estimating the mean difference in petal lengths (in cm) for two species of iris.

Iris virginica: $n_1 = 35$, $\bar{x}_1 = 5.48$, $s_1 = 0.55$
Iris setosa: $n_2 = 38$, $\bar{x}_2 = 1.49$, $s_2 = 0.21$

t-Interval:
margin of error:

Ex 4. Is one species of iris any different from the other in petal length, at the 2% significance level?
Iris virginica: $n_1 = 35$, $\bar{x}_1 = 5.48$, $s_1 = 0.55$
Iris setosa: $n_2 = 38$, $\bar{x}_2 = 1.49$, $s_2 = 0.21$

[Figure: number line from -4 to 4]

H0:
Ha:
Test stat: t =
p-value:

Two-Sample Problems – Proportions

Make an inference for their difference: $p_1 - p_2$

Sample from population 1: $n_1$, $x_1$, $\hat{p}_1$
Sample from population 2: $n_2$, $x_2$, $\hat{p}_2$

Using the Calculator

Confidence Interval: Estimate ± margin of error

$$(\hat{p}_1 - \hat{p}_2) \pm z^* \cdot \text{S.E.}$$

On calculator: STAT, TESTS, B:2-PropZInt…
Need to enter: $n_1$, $x_1$, $n_2$, $x_2$, C-Level
Enter appropriate info, then Calculate.

Using the Calculator

Significance Test: $p_1$, $p_2$ are the population proportions being tested

$H_0: p_1 - p_2 = 0$ (or $p_1 = p_2$)

On calculator: STAT, TESTS, 6:2-PropZTest…
Need to enter: $n_1$, $x_1$, $n_2$, $x_2$, and then Ha
Enter appropriate info, then Calculate or Draw
Output: Test stat, p-value

Ex 5. Create a 95% C.I. for the difference in proportions of eggs hatched.

Nesting boxes apart/hidden: $n_1 = 478$, $x_1 = 270$ (hatched)
Nesting boxes close/visible: $n_2 = 805$, $x_2 = 270$ (hatched)

z-Interval:
margin of error:

Ex 6. Split 1100 potential voters into two groups, those who get a reminder to register and those who do not. Of the 600 who got reminders, 332 registered. Of the 500 who got no reminders, 248 registered. Is there evidence at the 1% significance level that the proportion of potential voters who registered was greater in the group that received reminders?

Group 1: $n_1 = 600$, $x_1 = 332$
Group 2: $n_2 = 500$, $x_2 = 248$

Ex 6. (continued)

H0:
Ha:
Test stat: z =
p-value:

Ex 7. "Can people be trusted?" Among 250 18-25 year olds, 45 said "yes". Among 280 35-45 year olds, 72 said "yes". Does this indicate that the proportion of trusting people is higher in the older population? Use a significance level of α = .05.

Group 1: $n_1 = 250$, $x_1 = 45$
Group 2: $n_2 = 280$, $x_2 = 72$

Ex 7. (continued)

H0:
Ha:
Test stat: z =
p-value:

Scatterplots & Correlation

Each individual in the population/sample will have two characteristics looked at, instead of one.
Goal: to be able to make accurate predictions for one variable in terms of another variable, based on a data set of paired values.

Variables

Explanatory (independent) variable, x, is used to predict a response.
Response (dependent) variable, y, will be the outcome from a study or experiment.
Examples: height vs. weight, age vs. memory, temperature vs. sales

Scatterplots

A plot of paired values helps to determine if a relationship exists.
Ex: variables – height (in), weight (lb)

| Height | Weight |
|---|---|
| 72 | 171 |
| 65 | 150 |
| 68 | 180 |
| 70 | 180 |
| 72 | 185 |
| 66 | 165 |

[Figure: scatterplot of weight (lb) against height (in)]

Scatterplots - Features

Direction: negative, positive
Form: line, parabola, wave (sine)
Strength: how close to following a pattern

[Figure: the height/weight scatterplot]

Direction:
Form:
Strength:

Scatterplots – Temp vs Oil used

[Figure: scatterplot of oil used against temperature]

Direction:
Form:
Strength:

Correlation

Correlation, r, measures the strength of the linear relationship between two variables.
r > 0: positive direction
r < 0: negative direction
Close to +1:
Close to -1:
Close to 0:

r values: .85, -.02, .13, -.79

Lines - Review

$y = a + bx$

a:
b:

[Figure: graph of an example line with constant term 2 and an $x$ coefficient of magnitude $\frac{3}{2}$]

Regression

Looking at a scatterplot, if the form seems linear, then use a linear model or regression line to describe how a response variable y changes as an explanatory variable changes.
Regression models are often used to predict the value of a response variable for a given explanatory variable.

Least-Squares Regression Line

The line that best fits the data: $\hat{y} = a + bx$, where:

$$b = r\,\frac{s_y}{s_x}, \qquad a = \bar{y} - b\,\bar{x}$$

Example

Fat and calories for 11 fast food chicken sandwiches

Fat: $\bar{x} = 20.6$, $s_x = 9.8$
Calories: $\bar{y} = 472.7$, $s_y = 144.2$
$r = .947$

[Figure: scatterplot of Calories against Fat]

Example-continued

$$\hat{y} = 185.65 + 13.93x$$

What is the slope and what does it mean?
What is the intercept and what does it mean?
How many calories would you predict a sandwich with 40 grams of fat has?

Why "Least-squares"?
The least-squares line is the line that minimizes the sum of the squared residuals.
Residual: difference between actual and predicted

| $x$ | $y$ | $\hat{y}$ | $y - \hat{y}$ |
|---|---|---|---|
| 1 | 10 | 14 | -4 |
| 3 | 25 | 24 | 1 |
| … | … | … | … |

[Figure: accompanying plot, vertical axis ticked 9, 18, 27 and horizontal axis ticked 1, 3]

Scatterplots – Residuals

To double-check the appropriateness of using a linear regression model, plot residuals against the explanatory variable.
No unusual patterns means a good linear relationship.

Other things to look for

Squared correlation, $r^2$, gives the percent of variation explained by the regression line.
Chicken data: $r = .947$

Other things to look for

Influential observations:
Prediction vs. Causation: x and y are linked (associated) somehow, but we don't say "x causes y to occur". Other forces may be causing the relationship (lurking variables).

Extrapolation: using the regression for a prediction outside of the range of values for the explanatory variable.

| age | weight |
|---|---|
| 20 | 180 |
| 25 | 190 |
| 32 | 190 |
| 36 | 200 |
| 36 | 225 |
| 40 | 215 |
| 47 | 220 |

[Figure: scatterplot of weight against age with fitted line y = 1.6126x + 148.49, R² = 0.7157]

On calculator

Set up: 2nd 0 (Catalog), x⁻¹ (D), scroll down to "DiagnosticOn", Enter, Enter
Scatterplots: 2nd Y= (Stat Plot), 1, On, select Type and the list locations for x values and y values. Then ZOOM, 9 (ZoomStat)
Regression: STAT, CALC, 8:LinReg(a+bx), Enter, list location for x, list location for y, Enter
Graph: Y=, enter line into Y1

Examples:

Cat, Chick, Dog, Duck, Goat, Lion, Bird, Pig, Bunny, Squirrel
x: 63 22 63 28 151 108 18 115 31 44 (Incubation, days)
y: 11 7.5 11 10 12 9 (Lifespan, years)

x: 2 5 1 (age, years) 2 5
y: 16 11 17 10 4 5 10 1 8 1 10 4 2 7 6 12 11 20 19 10 16 11 20 (resale, thousands of dollars)

Contingency Tables

Making comparisons between two categorical variables
• Contingency tables summarize all outcomes
– Row variable: one row for each possible value
– Column variable: one column for each possible value
– Each cell (i, j) describes the number of individuals with those values for the respective variables.
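A minimal sketch, in Python, of the row and column layout just described. It uses the counts from the age and income table in this section; the variable names are illustrative assumptions and not part of the original slides. Each entry `counts[i][j]` is the number of individuals in age group `i` and income group `j`, and the margins are obtained by summing across rows and down columns.

```python
# Counts from the age/income contingency table in this section.
# Rows are age groups, columns are income groups (names are illustrative).
age_groups = ["<21", "21-25", ">25"]
income_groups = ["<15", "15-30", ">30"]
counts = [
    [5, 3, 1],   # age <21
    [4, 9, 6],   # age 21-25
    [2, 2, 8],   # age >25
]

# Margins: one total per row, one total per column, and the grand total.
row_totals = [sum(row) for row in counts]
col_totals = [sum(col) for col in zip(*counts)]
grand_total = sum(row_totals)

# Print the table with its margins for a visual check against the slide.
print("Age\\Income", income_groups, "Total")
for label, row, total in zip(age_groups, counts, row_totals):
    print(label, row, total)
print("Total", col_totals, grand_total)
```

The computed margins can be compared directly with the Total row and Total column of the table.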
| Age\Income ($1000s) | <15 | 15-30 | >30 | Total |
|---|---|---|---|---|
| <21 | 5 | 3 | 1 | 9 |
| 21-25 | 4 | 9 | 6 | 19 |
| >25 | 2 | 2 | 8 | 12 |
| Total | 11 | 14 | 15 | 40 |

• Info from the table
– # who are over 25 and make under $15,000:
– % who are over 25 and make under $15,000:
– % who are over 25:
– % of the over 25 who make under $15,000:

Marginal Distributions

– Look to margins of tables for an individual variable's distribution
– Marginal distribution for age:

| Age | Freq. | Rel. Freq. |
|---|---|---|
| <21 | 9 | |
| 21-25 | 19 | |
| >25 | 12 | |
| Total | 40 | |

– Marginal distribution for income:

| Income | <15 | 15-30 | >30 | Total |
|---|---|---|---|---|
| Freq. | 11 | 14 | 15 | 40 |
| Rel. Freq. | | | | |

Conditional Distributions

– Look at one variable's distribution given another
– How does income vary over the different age groups?
– Consider each age group as a separate population and compute relative frequencies:

| Age\Income | <15 | 15-30 | >30 | Total |
|---|---|---|---|---|
| <21 | | | | |
| 21-25 | | | | |
| >25 | | | | |

Independence Revisited

Two variables are independent if knowledge of one does not affect the chances of the other.
In terms of contingency tables, this means that the conditional distribution of one variable is (almost) the same for all values of the other variable.
In the age/income example, the conditionals are not even close. These variables are not independent. There is some association between age and income.

Test for Independence

Is there an association between two variables?
– H0: The variables are ______ (The two variables are ______)
– Ha: The variables ______ (The two variables are ______)

Assuming independence:
– Expected number in each cell (i, j):
(% of value i for variable 1) × (% of value j for variable 2) × (sample size) =

Example of Computing Expected Values

| Rh\Blood | A | B | AB | O | Total |
|---|---|---|---|---|---|
| + | 176 | 28 | 22 | 198 | 424 |
| - | 30 | 12 | 4 | 30 | 76 |
| Total | 206 | 40 | 26 | 228 | 500 |

Expected number in cell (A, +):

| Rh\Blood | A | B | AB | O | Total |
|---|---|---|---|---|---|
| + | | | 22.048 | 193.344 | 424 |
| - | | | 3.952 | 34.656 | 76 |
| Total | 206 | 40 | 26 | 228 | 500 |

Chi-square statistic

To measure the difference between the observed table and the expected table, we use the chi-square test statistic:

$$\chi^2 = \sum \frac{(\text{observed count} - \text{expected count})^2}{\text{expected count}}$$

where the summation occurs for each cell in the table.

1. Skewed right
2. df = (r – 1)(c – 1)
3. Right-tailed test

Test for Independence – Steps

State variables being tested
State hypotheses:
H0, the null hypothesis, vars independent
Ha, the alternative, vars not independent
Compute test statistic: if the null hypothesis is true, where does the sample fall? Test stat = χ²-score
Compute p-value: what is the probability of seeing a test stat as extreme as (or more extreme than) that?
Conclusion: small p-values lead to strong evidence against H0.

ST – on the calculator

On calculator: STAT, TESTS, C:χ²-Test
Observed: [A]
Expected: [B]
Enter observed info into matrix A, then perform test with Calculate or Draw.
To enter observed info into matrix A: 2nd, x⁻¹ (Matrix), EDIT, 1: A, change dimensions, enter info in each cell.
Output: Test stat, p-value, df

Ex. Test whether type and Rh factor are independent at a 5% significance level.

H0:
Ha:
Test stat: χ² =
p-value:
conclusion:

Ex. Test whether age and stance on marijuana legalization are associated.
| stance\age | 18-29 | 30-49 | 50+ | Total |
|---|---|---|---|---|
| for | 172 | 313 | 258 | 743 |
| against | 52 | 103 | 119 | 274 |
| Total | 224 | 416 | 377 | 1017 |

H0:
Ha:
Test stat: χ² =
p-value:
conclusion:

Additional Examples

| personality\college | Health | Science | Lib Arts |
|---|---|---|---|
| extrovert | 68 | 56 | 62 |
| introvert | 94 | 81 | 45 |

Job grade\marital status (grades 1, 2, 3): Single 58 222; Married 874 3450; Divorced 15 60; 74 1204 93

City size\practice status (rows <250,000, 250-500,000, >500,000): Government, Judicial 30 79 22; Educator 47 66 44 102 34; Private 258; Salaried 36 651 90 127 23
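The expected-count rule and the chi-square statistic described earlier in this section can be sketched in Python as follows. This is a rough illustration under stated assumptions rather than the calculator's own procedure: the function names are hypothetical, the observed counts are those of the Rh factor and blood type table, and the right-tail probability is computed with a standard recurrence for the chi-square distribution using only the standard library.

```python
import math

# Observed counts from the Rh factor / blood type table.
# Rows: Rh + and Rh -; columns: blood types A, B, AB, O.
observed = [
    [176, 28, 22, 198],
    [30, 12, 4, 30],
]

def expected_counts(table):
    """Expected count per cell under independence: row total * column total / n."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    return [[rt * ct / n for ct in col_totals] for rt in row_totals]

def chi_square_stat(table):
    """Sum of (observed - expected)^2 / expected over every cell."""
    exp = expected_counts(table)
    return sum(
        (o - e) ** 2 / e
        for obs_row, exp_row in zip(table, exp)
        for o, e in zip(obs_row, exp_row)
    )

def chi_square_right_tail(x, df):
    """P(X >= x) for a chi-square variable with df degrees of freedom (x >= 0)."""
    h = x / 2
    if df % 2 == 0:
        k, q = 2, math.exp(-h)             # closed form for df = 2
    else:
        k, q = 1, math.erfc(math.sqrt(h))  # closed form for df = 1
    while k < df:                           # step up two degrees of freedom at a time
        q += h ** (k / 2) * math.exp(-h) / math.gamma(k / 2 + 1)
        k += 2
    return q

expected = expected_counts(observed)
chi2 = chi_square_stat(observed)
df = (len(observed) - 1) * (len(observed[0]) - 1)  # (r - 1)(c - 1)
p_value = chi_square_right_tail(chi2, df)

print("expected:", [[round(e, 3) for e in row] for row in expected])
print("chi-square:", round(chi2, 3), "df:", df, "p-value:", round(p_value, 4))
```

The expected counts for the AB and O columns can be checked against the values 22.048, 193.344, 3.952 and 34.656 in the expected table, and the statistic, df and p-value can be compared with the calculator's χ²-Test output.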