
advertisement

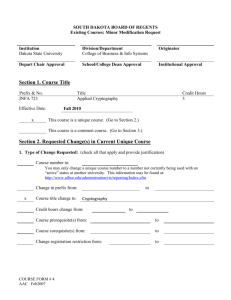

APPLICATIONS AND SOLUTIONS FOR COMPLEXITY
Dr. Adam P. Anthony
Lecture 27

NEXT WEEK
Have your presentations ready! Submit your electronic document/project on Blackboard. ALL PROJECTS ARE DUE NEXT TUESDAY, AT THE START OF CLASS, REGARDLESS OF WHEN YOU ARE PRESENTING. The presentation order is posted on the course schedule.

Complexity in the News
November 2010 issue. Talks about a recent attempt to prove P ≠ NP: http://science.slashdot.org/story/10/08/08/226227/Claimed-ProofThat-P--NP
Talks about using NP-Complete problems to secure elections.

Dealing with NP-Completeness
Not all hope is lost with the class NP! We typically analyze problems in the worst case, but many instances are much easier, and for those a slow (exact) algorithm may be fast enough. Even if we can't solve a problem perfectly, we can still look for an answer that is close to perfect. An approximation algorithm is one that aims to do the best it can at solving a problem, though its answer may not be the best possible. Usually we can make a faster algorithm by cutting corners or by making a lucky guess.

The Set-Cover Problem
Imagine that, in a large company, we need: Data Storage (DS), Data Processing (DP), Data Imaging (DI), Image Storage (IS), and Image Sharing (IH).
And we have several vendors pushing products:
Program A can do: (DS, DP)
Program B can do: (DP, DI, IH)
Program C can do: (IS, IH)
Program D can do: (DS)
Program E can do: (IS)
Each product has the same cost and would serve our needs equally well for the items it covers. Which do we choose, so that every need is covered using as few programs as possible? In general (with many needs and many programs), this problem is NP-Complete!

Greedy Algorithms
Remember how nondeterministic machines always make the 'right' choice? Their efficiency comes from making only one choice and throwing out all other options. A greedy algorithm doesn't know the 'right' choice, but it does know which choice looks best at the time. That is not always the best choice (hindsight is 20/20). Sometimes we tie!
(We are left to guessing.)

Greedy Set Cover
Same needs (DS, DP, DI, IS, IH) and the same five programs: A (DS, DP), B (DP, DI, IH), C (IS, IH), D (DS), E (IS).
GreedySetCover:
  Repeat
    Pick the program which solves the most remaining problems
  Until no problems remain
This algorithm isn't perfect, but we can prove that it always uses at most about ln(n) + 1 times as many programs as the best answer, where n is the number of needs.

Leveraging NP-Completeness for Security
Key property of NP: solving the problem is hard, but checking a solution is easy! Imagine a vault with the following lock: it shows a particularly difficult instance of the traveling salesman problem (TSP), and if you can solve it, the vault opens! TSP is not a good problem for this task, because you can't 'reverse engineer' the problem from a solution (that is, start from a known answer and build a hard instance around it). A more popular choice is integer factorization.

Cryptography
Mostly used in military applications; also found in business and love. Idea: always assume that the enemy will intercept our messages! Can we still keep them from reading the message?
Cryptography: an ordered process of scrambling (encrypting) a message such that it can only be feasibly unscrambled (decrypted) if you possess the proper knowledge (key).

Traditional Cryptography
Create the message and encrypt it using a key. Deliver the message to the recipient. Separately deliver the key. How do we keep the key safe?

Public Key Cryptography
Manufacture 1 key and 1 lock. Instead of the SENDER locking up the message, ask the RECIPIENT for a lock. Put the message in a case and secure it with the lock. Send the case in regular postal mail. The recipient already has the key, so no separate courier is required!
For convenience: manufacture 1000+ locks and distribute them around the country.

RSA Public Key Encryption System
RSA: a popular public key cryptographic algorithm, named from the authors' last names: Rivest, Shamir, Adleman. It relies on the (presumed) intractability of the problem of factoring large numbers.
Public key = 2 numbers, N and e: used to encrypt messages. Replaces the 1000 locks from the last slide.
Private key = 2 numbers, N and d: used to decrypt messages. Replaces the key for those locks.
N is the same for both the public and the private key. The mathematical relationship between N, e, and d is what makes this work.

Encrypting the Message 10111
Encrypting keys: N = 91 and e = 5
Message: $10111_2 = 23_{10}$
Encryption Step 1: $23^e = 23^5 = 6{,}436{,}343$
Encryption Step 2: 6,436,343 ÷ 91 has a remainder of 4
$4_{10} = 100_2$
Therefore, the encrypted version of 10111 is 100.

Decrypting the Message 100
Decrypting keys: N = 91, d = 29
Encrypted message: $100_2 = 4_{10}$
Decryption Step 1: $4^d = 4^{29} = 288{,}230{,}376{,}151{,}711{,}744$
Decryption Step 2: 288,230,376,151,711,744 ÷ 91 has a remainder of 23
Original message: $23_{10} = 10111_2$
Therefore, the decrypted version of 100 is 10111.

Establishing an RSA Public Key Encryption System
Where did N = 91, e = 5, and d = 29 come from?
1. Pick two prime numbers p and q: 7 and 13.
2. Multiply them together to get N: 7 × 13 = 91.
3. e and d can be any numbers that satisfy $e \cdot d = k(p-1)(q-1) + 1$ for some whole number k. Here $(p-1)(q-1) = 6 \cdot 12 = 72$, and $5 \cdot 29 = 145 = 2 \cdot 72 + 1$.
Short story: pick p and q, the rest of the numbers fall into place, and the system works!

Security of RSA
Remember, d is kept secret, and we can't decrypt without it! But d is computed using p and q! And N = p × q (Uh oh!). A crook can compute d, but only if he/she can factor N into its prime components! Factoring large integers is believed to be hard: it is in NP, but it is not known to be NP-Complete. It is easy for small numbers (7, 13) but hard for large numbers; RSA uses p, q values with 150+ digits!

A (Rejected) Homework Problem
Find the factors of 107,531. How can we solve this? Maybe we can write a program?
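One possible program, sketched in Python under stated assumptions: it replays the toy RSA numbers from these slides (p = 7, q = 13, e = 5), then plays the crook from the Security of RSA slide, recovering d from the public key alone by factoring N with plain trial division. The helper names (`make_keys`, `encrypt`, `decrypt`, `smallest_factor`, `crack`) are hypothetical, and `pow(e, -1, phi)` (a modular inverse) assumes Python 3.8 or newer. Numbers this small offer no security at all.

```python
# Toy RSA with the slides' numbers, plus a "crook" who recovers d by factoring N.
# Hypothetical helper names; numbers this small offer no security.

def make_keys(p, q, e):
    """Return (N, e, d) for primes p, q and a chosen public exponent e."""
    n = p * q
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)  # modular inverse, so e*d = k*phi + 1 (Python 3.8+)
    return n, e, d


def encrypt(m, n, e):
    # Remainder of m**e divided by n, computed without the huge intermediate.
    return pow(m, e, n)


def decrypt(c, n, d):
    # Remainder of c**d divided by n.
    return pow(c, d, n)


def smallest_factor(n):
    """Trial division: test candidate divisors up to sqrt(n)."""
    f = 2
    while f * f <= n:
        if n % f == 0:
            return f
        f += 1
    return n  # no divisor found, so n itself is prime


def crack(n, e):
    """Recover d from the public key alone by factoring n."""
    p = smallest_factor(n)
    q = n // p
    return pow(e, -1, (p - 1) * (q - 1))


n, e, d = make_keys(7, 13, 5)
cipher = encrypt(0b10111, n, e)
print(n, e, d)                              # 91 5 29
print(format(cipher, "b"))                  # 100
print(format(decrypt(cipher, n, d), "b"))   # 10111
print(crack(n, e))                          # 29

p = smallest_factor(107531)                 # the homework number
print(p, 107531 // p)
```

Trial division only has to test divisors up to $\sqrt{N}$, but for a 300-digit N that is still on the order of $10^{150}$ candidates, which is why RSA's security rests on p and q being huge.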
http://homepages.bw.edu/~apanthon/courses/CSC180/slides/PrimeFactors.sb

Parallel Computing
If one computer is too slow, try 2! Or 4, or 8, or 16, or 32, or 64, or 128, or 256… Parallel computing is the process of solving a single problem by:
Breaking it into smaller pieces
Solving each smaller piece in parallel
Putting the small solutions back together
Many computers today have 2-8 separate processors, giving them the apparent strength of multiple computers!

Types of Parallel Computing
Distributed Memory: each processor has its own private RAM storage. Total RAM in a distributed computer = the sum of all private RAM sources. A processor must request access to another processor's RAM.
  Advantages: different processors can't interfere with one another's work; easy to expand.
  Disadvantages: hard to program.
Shared Memory: all processors share a single source of RAM. To access memory, just send out a Load/Store command to the bus.
  Advantages: easier to program and to locate data; millions of example computers available.
  Disadvantages: what if someone else was using that data??? The memory bottleneck can serialize the parallelism, so we have to take care in how data is accessed and used.

Cost of Parallel Computing
Question: a problem is split into 3 parts. Each part can be solved in 5 steps. How long will it take to solve the problem if we do it in parallel? Trick question!!
Answer: Cost of parallelism = Cost of splitting data + Cost of computing the single largest part + Cost of reassembling partial solutions. So the answer is 5 steps plus the splitting and reassembling costs, not just 5 steps.
For shared memory: the cost of splitting/combining is very small, but the cost of computing can be much higher (because of the memory bottleneck).
For distributed memory: the cost of splitting/combining is larger, and computing is (hopefully) faster.

Problems with Parallelism
Splitting data is not always possible!
And when it is, it may be tricky. Concurrency problems: parallel writes and race conditions (parallel reads are safe). Combining results may be as much work as completing the non-parallel algorithm. For these reasons (and more), parallelism does not make NP-complete problems efficiently solvable for arbitrarily large inputs, BUT we may be able to solve larger problems than would otherwise be possible.

Obvious Parallelism
Obvious parallelism occurs when data is in a list and the task involves executing a simple action on each item in the list, where the action is independent of the actions on all other items in the list. Many vendors now have limited support for obvious parallelism:
apply_parallel(ADD-1, List): [1 2 3 4 5 6 7 8] becomes [2 3 4 5 6 7 8 9]

Example Problems
Which of these are obviously parallel, and which are not? Which could still be made parallel?
1. Convert a list of Fahrenheit temperatures to Celsius.
2. For each item in a list, compute the product of that number times all the numbers that appear before it in the list: [2 5 8 9 10] computes [2 10 80 720 7200].
3. Take two lists, multiply each pair of corresponding elements, then add the resulting products together: [2 4 6] and [4 5 9] computes 8 + 20 + 54 = 82.
4. Take a list and confirm whether it is sorted.
5. Search dictionary.com and record the definition of each word in a list.

Quantum Computing
Bits can't get any smaller, but electrons can be in multiple quantum states simultaneously ("superposition"). A qubit can be in 2 states at once; 2 qubits, 4 states at once; n qubits, $2^n$ states at once! In effect, we could build massively parallel computers!
SciAm Special: How Do Quantum Computers Work? http://www.youtube.com/watch?v=hSr7hyOHO1Q&feature=related
Images: ams.org
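Returning to the Obvious Parallelism slide: `apply_parallel(ADD-1, List)` there is a generic name, not a specific vendor's API. Below is a minimal Python stand-in, assuming the standard library's `concurrent.futures.ThreadPoolExecutor` as the parallel engine. `apply_parallel`, `add_1`, `fahrenheit_to_celsius`, `dot_product`, and `running_products` are hypothetical names, and the last three revisit Example Problems 1 to 3. The sketch shows the split/compute/combine shape, not a speedup: in standard CPython builds, threads do not run CPU-bound Python code in parallel, so real gains would need processes or another runtime.

```python
# Sketch of "obvious parallelism": apply one independent action to every
# item of a list. apply_parallel is a hypothetical helper, not a library API.
from concurrent.futures import ThreadPoolExecutor
from itertools import accumulate
from operator import mul


def apply_parallel(action, items, workers=4):
    """Run action on each item concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(action, items))


def add_1(x):
    return x + 1


def fahrenheit_to_celsius(f):
    return (f - 32) * 5 / 9


def dot_product(xs, ys):
    # Compute step: each pair is multiplied independently, so it can run in parallel.
    products = apply_parallel(lambda pair: pair[0] * pair[1], list(zip(xs, ys)))
    # Combine step: adding the partial results is a separate, sequential step.
    return sum(products)


def running_products(xs):
    # Computed sequentially here: each output depends on the previous output.
    return list(accumulate(xs, mul))


print(apply_parallel(add_1, [1, 2, 3, 4, 5, 6, 7, 8]))  # [2, 3, 4, 5, 6, 7, 8, 9]
print(apply_parallel(fahrenheit_to_celsius, [32, 212]))  # [0.0, 100.0]
print(dot_product([2, 4, 6], [4, 5, 9]))                 # 82
print(running_products([2, 5, 8, 9, 10]))                # [2, 10, 80, 720, 7200]
```

The dot product parallelizes its multiplications but still pays for a combine step (the sum), which matches the cost formula on the Cost of Parallel Computing slide.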