Practitioners Guide for Assessment Activities and

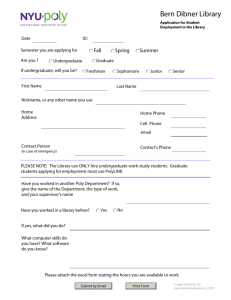

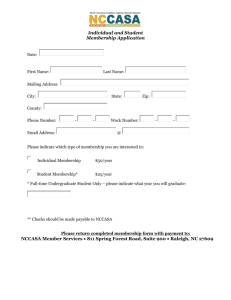

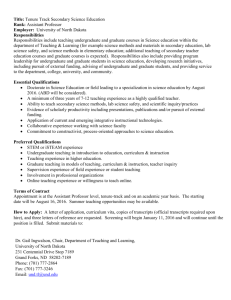
