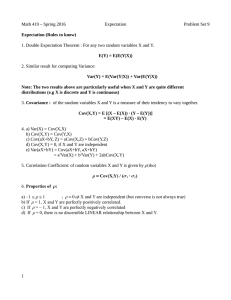

Lecture 4


Random variables
Petter Mostad
2005.09.19

Review
• Sample space, set theory, events, probability
• Conditional probability, Bayes' theorem, independence, odds
• Random variables, pdf, cdf, expected value, variance

Some combinatorics
• How many ways can you make ordered selections of s objects from n objects? Answer: $n(n-1)(n-2)\cdots(n-s+1)$
• How many ways can you order n objects? Answer: $n(n-1)\cdots 2 \cdot 1 = n!$ ("n factorial")
• How many ways can you make unordered selections of s objects from n objects? Answer: $\binom{n}{s} = \frac{n(n-1)\cdots(n-s+1)}{s!} = \frac{n!}{s!\,(n-s)!}$

The Binomial distribution
• Bernoulli distribution: one experiment, with success probability π.
• If you have n independent experiments, each with success probability π, what is the probability of s successes?
• To compute this probability, we find the probability of getting successes in exactly one particular set of s experiments (and failures in the other n − s), and multiply by the number of ways to choose such a set.

The Binomial distribution
The random variable X has a Binomial distribution if it has the distribution described above:
• $P(X = s) = \binom{n}{s}\,\pi^{s}(1-\pi)^{n-s}$
• $E(X) = n\pi$
• $\mathrm{Var}(X) = n\pi(1-\pi)$

Example
• In a clinic, 20% of the patients come because of problem X. If the clinic treats 20 patients one day, what is the probability that zero, one, or two patients will come because of problem X?

The Hypergeometric distribution
• Assume N objects are given, and s of these are "successes". Assume n objects are chosen at random, without replacement. The distribution of the number of successes x among these is the hypergeometric distribution:
$P(x) = \dfrac{\binom{s}{x}\binom{N-s}{n-x}}{\binom{N}{n}}$

Example
• A class consists of 20 girls and 10 boys. A group of 5 students is selected at random. What is the probability that it will contain 0, 1, or 2 girls?

The Poisson distribution
• Assume "successes" happen independently, at a rate λ per time unit. The probability of x successes during one time unit is given by the Poisson distribution:
• $P(x) = \dfrac{\lambda^{x} e^{-\lambda}}{x!}$
• $E(X) = \lambda$, $\mathrm{Var}(X) = \lambda$

Example
• Assume patients come to an emergency room at an average rate of 1 per hour.
What is the probability that 3 or more patients will come within a particular hour?

The Poisson and the Binomial
• It is possible to construct a Binomial variable quite similar to a Poisson variable:
– Subdivide the time unit into n subintervals.
– Set the probability of success in each subinterval to λ/n.
– Letting n go to infinity, the two processes become identical.
• The above argument can be used to derive the formula for the Poisson distribution.
• Also: the Poisson distribution with $\lambda = n\pi$ approximates the Binomial distribution when n is large and π is small.

Bivariate distributions
• Probability models where the outcomes are pairs (or vectors) of numbers are called bivariate (or multivariate) random variables:
– Joint probability: $P(x, y) = P(X = x \cap Y = y)$
– Marginal probability: $P(x) = \sum_{y} P(x, y)$
– Conditional probability: $P(x \mid y) = \dfrac{P(x, y)}{P(y)}$
– X and Y are independent if for all x and y: $P(x, y) = P(x)\,P(y)$

Example
• The joint probabilities for A (rain tomorrow) and B (wind tomorrow) are given in the following table:

| | No wind | Some wind | Strong wind | Storm |
|---|---|---|---|---|
| No rain | 0.1 | 0.2 | 0.05 | 0.01 |
| Light rain | 0.05 | 0.1 | 0.15 | 0.04 |
| Heavy rain | 0.05 | 0.1 | 0.1 | 0.05 |

Covariance and correlation
• Covariance measures how two variables vary together:
$\mathrm{Cov}(X, Y) = E\big[(X - E(X))(Y - E(Y))\big] = E(XY) - E(X)E(Y)$
• Correlation is always between −1 and 1:
$\mathrm{Corr}(X, Y) = \dfrac{\mathrm{Cov}(X, Y)}{\sigma_X \sigma_Y} = \dfrac{\mathrm{Cov}(X, Y)}{\sqrt{\mathrm{Var}(X)\,\mathrm{Var}(Y)}}$

Properties of the expectation and variance
• $E(X + Y) = E(X) + E(Y)$
• $E(aX + b) = aE(X) + b$
• $\mathrm{Var}(aX) = a^2\,\mathrm{Var}(X)$
• If X, Y are independent, then $E(XY) = E(X)E(Y)$
• If X, Y are independent, then $\mathrm{Cov}(X, Y) = 0$
• If $\mathrm{Cov}(X, Y) = 0$, then $\mathrm{Var}(X + Y) = \mathrm{Var}(X) + \mathrm{Var}(Y)$

Continuous random variables
• Used when the outcomes are best modelled as real numbers
• Probabilities are assigned to intervals of numbers; individual numbers generally have probability zero

Cdf for continuous random variables
• As before, the cumulative distribution function F(x) is equal to the probability of all outcomes less than or equal to x.
• Thus we get $P(a < X \le b) = F(b) - F(a)$
• The probability density function is, however, now defined so that $P(a < X \le b) = \int_a^b f(x)\,dx$
• We get that $F(x_0) = \int_{-\infty}^{x_0} f(x)\,dx$

Expectations
• The expectation of a continuous random variable X is defined as $E(X) = \int_{-\infty}^{\infty} x f(x)\,dx$
• The variance, standard deviation, covariance, and correlation are defined exactly as before, in terms of the expectation, and thus have the same properties

Example: The uniform distribution on the interval [0,1]
• $f(x) = 1$ for $0 \le x \le 1$ (and 0 elsewhere)
• $F(x) = x$ for $0 \le x \le 1$
• $E(X) = \int_0^1 x f(x)\,dx = \int_0^1 x\,dx = \left[\tfrac{1}{2}x^2\right]_0^1 = \tfrac{1}{2}$
• $\mathrm{Var}(X) = E(X^2) - E(X)^2 = \int_0^1 x^2\,dx - 0.5^2 = \tfrac{1}{3} - \tfrac{1}{4} = \tfrac{1}{12}$

The normal distribution
• The most used continuous probability distribution:
– Many observations tend to approximately follow this distribution
– It is easy and nice to do computations with
– BUT: Using it can result in wrong conclusions when it is not appropriate

The normal distribution
• The probability density function is $f(x) = \dfrac{1}{\sqrt{2\pi\sigma^2}}\, e^{-(x-\mu)^2/(2\sigma^2)}$, where $E(X) = \mu$ and $\mathrm{Var}(X) = \sigma^2$
• Notation: $N(\mu, \sigma^2)$
• Standard normal distribution: $N(0, 1)$
• Using the normal density is often OK unless the actual distribution is very skewed

Normal probability plots
• Plot the quantiles of the data against the quantiles of the distribution.
• If the data are approximately normally distributed, the plot will approximately show a straight line.
[Figure: Normal Q-Q plot of household income in thousands; horizontal axis: observed value, vertical axis: expected normal value]

The Normal versus the Binomial distribution
• When n is large and π is not too close to 0 or 1, the Binomial distribution becomes very similar to the Normal distribution with the same expectation and variance.
• The same phenomenon occurs for distributions that can be seen as a sum of many independent observations (the central limit theorem).
• It can be used to make approximate computations for the Binomial distribution.
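
The Binomial and Hypergeometric examples earlier in this lecture, and the normal approximation to the Binomial, can be checked numerically. The following is a minimal sketch in Python using only the standard library (`math.comb` needs Python 3.8 or later). The function names are illustrative choices, not part of the lecture; the +0.5 continuity correction in the approximation is a common refinement that the slides do not mention; and the case n = 100, π = 0.2 is a hypothetical example chosen only because π is not close to 0 or 1.

```python
import math

def binom_pmf(s, n, p):
    """P(X = s) for X ~ Binomial(n, p)."""
    return math.comb(n, s) * p**s * (1 - p)**(n - s)

def binom_cdf(k, n, p):
    """Exact P(X <= k) for X ~ Binomial(n, p)."""
    return sum(binom_pmf(s, n, p) for s in range(k + 1))

def hypergeom_pmf(x, N, s, n):
    """P(x successes) when n of N objects are drawn without replacement
    and s of the N objects are successes."""
    return math.comb(s, x) * math.comb(N - s, n - x) / math.comb(N, n)

def normal_cdf(x, mu, sigma):
    """F(x) for N(mu, sigma^2), written via the error function."""
    return 0.5 * (1 + math.erf((x - mu) / (sigma * math.sqrt(2))))

def binom_cdf_normal_approx(k, n, p):
    """Approximate P(X <= k) by a normal distribution with the same
    expectation n*p and variance n*p*(1-p). The +0.5 continuity
    correction is an extra refinement, not taken from the lecture."""
    mu = n * p
    sigma = math.sqrt(n * p * (1 - p))
    return normal_cdf(k + 0.5, mu, sigma)

# Clinic example: 20 patients, each with probability 0.2 of problem X
clinic = [binom_pmf(s, 20, 0.2) for s in range(3)]

# Class example: N = 30 students, s = 20 girls, groups of n = 5
girls = [hypergeom_pmf(x, 30, 20, 5) for x in range(3)]

print("Clinic P(0), P(1), P(2):", [round(v, 4) for v in clinic])
print("Class P(0), P(1), P(2) girls:", [round(v, 4) for v in girls])

# Hypothetical case with n large and pi not close to 0 or 1
exact = binom_cdf(15, 100, 0.2)
approx = binom_cdf_normal_approx(15, 100, 0.2)
print(f"P(X <= 15): exact {exact:.4f}, normal approx {approx:.4f}")
```

For the clinic example the three probabilities are roughly 0.012, 0.058 and 0.137; for the class example they are roughly 0.0018, 0.029 and 0.160.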

The Exponential distribution
• The exponential distribution is a distribution for positive numbers, with parameter λ: $f(t) = \lambda e^{-\lambda t}$ for $t \ge 0$
• It can be used to model the time until an event, when events arrive randomly at a constant rate λ
• $E(T) = 1/\lambda$, $\mathrm{Var}(T) = 1/\lambda^2$
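
The Poisson emergency-room example, the Binomial-to-Poisson limit, and the exponential distribution fit together. A standard result, not stated on the slides, is that if the times between events are independent and exponential with rate λ, then the number of events per time unit is Poisson with parameter λ. The minimal Python sketch below (standard library only) computes the emergency-room probability exactly, measures how close Binomial(n, λ/n) gets to the Poisson probability as n grows, and checks the answer by simulating exponential waiting times with `random.expovariate`. The helper names, the random seed, and the 100 000 simulated hours are illustrative assumptions.

```python
import math
import random

def poisson_pmf(x, lam):
    """P(X = x) for X ~ Poisson(lam)."""
    return lam**x * math.exp(-lam) / math.factorial(x)

def binom_pmf(s, n, p):
    """P(X = s) for X ~ Binomial(n, p)."""
    return math.comb(n, s) * p**s * (1 - p)**(n - s)

def binom_limit_gap(x, lam, n):
    """|Binomial(n, lam/n) - Poisson(lam)| at x: the time unit is cut
    into n subintervals, each with success probability lam/n."""
    return abs(binom_pmf(x, n, lam / n) - poisson_pmf(x, lam))

def exponential_cdf(t, lam):
    """F(t) = 1 - exp(-lam*t) for t >= 0, and 0 for t < 0."""
    return 1 - math.exp(-lam * t) if t >= 0 else 0.0

def simulate_hourly_counts(lam, hours, seed=0):
    """Generate arrivals with independent exponential gaps (mean 1/lam)
    and count how many land in each whole time unit."""
    rng = random.Random(seed)
    counts = [0] * hours
    t = rng.expovariate(lam)
    while t < hours:
        counts[int(t)] += 1
        t += rng.expovariate(lam)
    return counts

# Emergency room: lam = 1 patient per hour, P(X >= 3) = 1 - P(0) - P(1) - P(2)
lam = 1.0
p_exact = 1 - sum(poisson_pmf(x, lam) for x in range(3))

counts = simulate_hourly_counts(lam, hours=100_000)
p_simulated = sum(c >= 3 for c in counts) / len(counts)

print(f"exact P(X >= 3) = {p_exact:.4f}, simulated = {p_simulated:.4f}")
for n in (10, 100, 1000):
    print(n, binom_limit_gap(2, lam, n))
```

The exact value is $1 - e^{-1}(1 + 1 + \tfrac{1}{2}) \approx 0.080$, so 3 or more patients arrive in about 8% of hours; the simulated fraction should land close to it, and the printed gaps shrink as n grows.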