IS 376: Information Technology & Society Course Overview


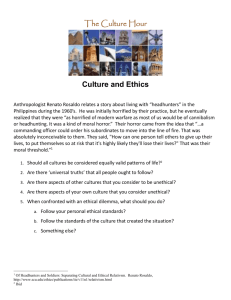

IS 376 Ethics and Convergence
Dr. Kapatamoyo, 11/20/14

Defining Terms
Society: an association of people organized under a system of rules designed to advance the good of its members over time. Every society has rules of conduct describing what people ought and ought not to do in various situations. These rules are called morality. A person may belong to various societies, which can lead to some interesting moral dilemmas.
Ethics: the study of morality; a rational examination of people's moral beliefs and behavior.

Broad Issues
Forming communities allows us to enjoy better lives than if we lived in isolation. Communities facilitate the exchange of goods and services. There is a price associated with being part of a community: communities impose certain obligations and prohibit some actions. Responsible community members take the needs and desires of other people into account when they make decisions. They recognize that virtually everybody shares the "core values" of life, happiness, and the ability to accomplish goals.

The Law of Unintended Consequences
Human actions have unintended or unforeseen effects. These effects can be positive or negative, and in some cases perverse (the opposite of what was originally intended). Interactions with robots will also generate unforeseen consequences. This law therefore applies equally to information technology and to any other type of technology.

Is Technology Neutral?
A central point of contention between technological determinists and social determinists is whether technology is neutral.
Technological determinism holds that technology is value-free and therefore neutral: technical features determine how people may use a particular technology.
Social determinism argues that technology is value-laden and cannot be neutral (it does not exist in a vacuum). What features are put there in the first place? Who makes that decision?
Impact of IT
IT can have an impact at these levels: individual, group, organizational, societal, national, and global. The impact can be social, economic, political, legal, psychological, historical, or ethical.

Isaac Asimov (1942)
Science fiction writer Isaac Asimov included the Three Laws of Robotics in his 1942 short story "Runaround":
1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.
2. A robot must obey the orders given to it by human beings, except where such orders would conflict with the First Law.
3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Towards an Ethical Robot
Alan Winfield (England): If robots are to be trusted, especially when interacting with humans, they will need to be more than just safe. They must be able to predict the consequences of both their own actions and the actions of other dynamic actors in their environment. With an "ethical" action selection mechanism, a robot can sometimes choose actions that compromise its own safety in order to prevent a second robot from coming to harm.

Ethics
Ethics is the study of what it means to "do the right thing." It is often equated with moral philosophy because it concerns how one arrives at specific moral choices. Ethical theory posits that people are rational, independent moral agents who make free choices. Computer ethics is a branch of ethics that deals specifically with moral issues in computing as a profession (other professions have their own ethical standards as well).

The Michael Industrial Complex

Why Study Ethics?
Society is changing rapidly as it incorporates the latest advances in information technology. Many of these interactions have to be analyzed from an ethical standpoint. Ethics is the rational, systematic analysis of conduct that can cause benefit or harm to other people.
It is important to note that ethics focuses on the voluntary, moral choices people make because they have decided they ought to take one course of action rather than an alternative. It is not concerned with involuntary choices or choices outside the moral realm.

3 Perspectives on CyberEthics: Number 1
Professional Ethics: the main emphasis here is on the design, development, and maintenance of technologies. Most professions have this sort of ethics specific to their field.

3 Perspectives on CyberEthics: Number 2
Philosophical Ethics: ethical issues here typically involve concerns of responsibility and obligation affecting individuals as members of a certain profession, or broader concerns such as social policies and individual behavior. The three distinct stages in applying ethics are:
1. Identify a particular controversial practice as a moral problem.
2. Describe and analyze the problem by clarifying concepts and examining the factual data associated with that problem.
3. Apply moral theories and principles in the deliberative process in order to reach a position on that particular moral issue.

3 Perspectives on CyberEthics: Number 3
Sociological Ethics: these are non-evaluative; they focus on particular moral systems and report how members of various groups and cultures view particular moral issues. These are descriptive ethics. The first two perspectives (Numbers 1 and 2) are normative ethics.

Normative vs. Descriptive Ethics
• Normative ethics focuses on what we should do, setting practical moral standards.
• Descriptive ethics focuses on:
  • what people actually believe to be right or wrong,
  • the moral values (or ideals) they uphold,
  • how they behave, and
  • what ethical rules guide their moral reasoning.

Deontological Views: Key Principles
• The principal philosopher in this tradition is Immanuel Kant (1724-1804, Königsberg, Prussia; now Kaliningrad, Russia).
• Ethical decisions should be made solely by considering one's duties and the absolute rights of others.
• "Act only according to that maxim by which you can at the same time will that it would become a universal law."
• There are four key principles to Kant's Categorical Imperative:
  • The principle of universality: rules of behavior should be applied to everyone, with no exceptions.
  • Logic or reason determines rules of ethical behavior.
  • Treat people as ends in themselves, never merely as means to an end.
  • Absolutism of ethical rules, e.g., it is wrong to lie (no matter what!).

Utilitarianism
Developed by John Stuart Mill (1806-1873, London, England), building on the work of Jeremy Bentham. An ethical act is one that maximizes the good, or "utility," for the greatest number of people. Consequences are quantifiable and are the main basis of moral decisions.
Rule-utilitarianism: applies the utility principle to general ethical rules rather than to individual acts. The rule that would yield the most happiness for the greatest number of people should be followed.
Act-utilitarianism: applies the utility principle to individual acts. We must consider the possible consequences of all our possible actions, and then select the one that maximizes happiness for all people involved.

Natural Rights
• One of its founding fathers is John Locke (1632-1704, Wrington, Somerset, England).
• Natural rights are universal rights derived from the law of nature (i.e., inherent rights that people are born with).
• Ethical behavior must respect a set of fundamental rights of others. These include the rights of life, liberty, and property.

Situational Ethics
Originally developed by Joseph Fletcher (1905-1991, Newark, NJ). There are always "exceptions to the rule." The morality of an act is a function of the state of the system at the time it is performed. "Each situation is so different from every other situation that it is questionable whether a rule which applies to one situation can be applied to all situations like it, since the others may not really be like it.
Only the single law of love (agape) is broad enough to be applied to all circumstances and contexts." Fletcher was a pioneer in the field of bioethics and was involved in debates on abortion, infanticide, euthanasia, eugenics, and cloning.

Negative Rights vs. Positive Rights
Negative rights (or liberties) are rights to act without interference, e.g., the rights to life, liberty, and property, or the right to vote. You cannot demand (or expect) that facilities be provided to you so that you can exercise such a right.
Positive rights (or claim-rights) are rare. These are rights that impose an obligation on some people to provide certain things to others, such as free education for children.
Controversies often arise over whose (or which) rights should take precedence.

Normative Questions
Should computers, computer systems, and robots make human-level decisions? If so, then what? Who shoulders the liability?
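These normative questions become concrete if we imagine encoding two of the theories from earlier slides as decision rules. The Python sketch below rests entirely on invented assumptions: a delivery robot, under orders to keep moving, predicts that a pedestrian will fall into a hole unless it intervenes. The scenario, the action names, the hypothetical `Outcome` class, and every number are made up for illustration; this is not a reproduction of Winfield's actual mechanism, only the general idea of ranking predicted consequences. The hypothetical `asimov_choice` ranks actions by the Three Laws in priority order, and `act_utilitarian_choice` picks the action with the largest total of (invented) utilities.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """Predicted consequences of one candidate action (hypothetical model)."""
    action: str
    human_harmed: bool       # would a human come to harm? (First Law)
    disobeys_order: bool     # would the robot disobey its operator? (Second Law)
    robot_damaged: bool      # would the robot itself be damaged? (Third Law)
    utility: dict[str, int]  # invented happiness change per affected party


# Invented predictions for the scenario. A real robot would need an internal
# model to simulate these consequences, and its predictions could be wrong.
PREDICTIONS = [
    Outcome("continue delivery", human_harmed=True, disobeys_order=False,
            robot_damaged=False, utility={"pedestrian": -10, "patients": 15}),
    Outcome("block pedestrian", human_harmed=False, disobeys_order=True,
            robot_damaged=True, utility={"pedestrian": 0, "patients": -5}),
    Outcome("pause and signal", human_harmed=True, disobeys_order=True,
            robot_damaged=False, utility={"pedestrian": -10, "patients": -5}),
]


def asimov_choice(outcomes: list[Outcome]) -> str:
    """Pick the action that best satisfies the Three Laws in priority order.

    Tuples compare element by element and False < True, so avoiding a
    First Law violation outweighs any Second or Third Law consideration,
    and a Second Law violation outweighs a Third Law one.
    """
    best = min(outcomes, key=lambda o: (o.human_harmed,
                                        o.disobeys_order,
                                        o.robot_damaged))
    return best.action


def act_utilitarian_choice(outcomes: list[Outcome]) -> str:
    """Pick the action with the greatest total predicted utility."""
    best = max(outcomes, key=lambda o: sum(o.utility.values()))
    return best.action


if __name__ == "__main__":
    print("Three Laws:      ", asimov_choice(PREDICTIONS))
    print("Act-utilitarian: ", act_utilitarian_choice(PREDICTIONS))
```

With these invented numbers the two rules disagree: the Three Laws ordering picks "block pedestrian," accepting damage to the robot (much like the self-sacrificing choice on the Winfield slide), while the act-utilitarian sum picks "continue delivery." Different estimates could change the utilitarian answer, which is why it matters who sets those values and who is liable when a prediction turns out to be wrong.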