Measuring Success Using Performance Measurement by Michelle


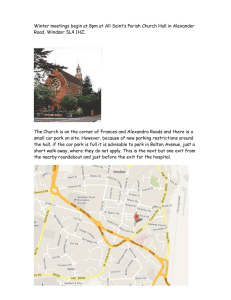

NAEH Conference on Ending Family Homelessness
Michelle Abbenante and Brooke Spellman
February 8, 2008
2008 NAEH Family Conference - Outcomes Workshop

What Is Performance Measurement?
Performance measurement is a process that systematically evaluates whether your efforts are making an impact on the clients you are serving or on the problem you are targeting.

Multiple Levels of Performance Measurement
1. Program level (local service provider): program funding report, such as the HUD APR
2. CoC/system level: Ten-Year Plan report card or CoC application
3. State level: state-wide report or performance measurement tool
4. National level: NAEH assessment of national progress, or federal GPRA and PART reviews

Why Should Programs Be Interested in Performance Measurement?
We are all in the business of helping people, which means we need to:
• understand whether current activities are working to achieve intended results;
• drive program improvement and share information on effective practices with others; and
• acknowledge that high-performing programs are more likely to receive funding through competitive funding processes.

Building Blocks of Performance Measurement
• Inputs include resources dedicated to, or consumed by, the program, e.g., money, staff and staff time, volunteers and volunteer time, facilities, equipment and supplies.
• Activities are what the program does with the inputs to fulfill its mission, such as providing shelter, feeding the homeless, or providing job training.
• Outputs are the direct products of program activities. They are usually presented in terms of the volume of work accomplished, e.g., the number of participants served and the number of service engagements.
• Outcomes are benefits or changes among clients during or after participating in program activities.
Outcomes may relate to change in client knowledge, attitudes, values, skills, behaviors, conditions, or other attributes.

Performance Measurement Process
• Inputs: $ (CoC and other), programs, infrastructure, staff. Should we add or change our use of resources to expand our impact?
• Activities: outreach, shelters, case management, rent subsidies and services. Should we adjust how we spend our resources?
• Outputs: number of clients served by program, service linkages, new PSH units/subsidies, vacancy statistics. How do we document our efforts?
• Outcomes: 30% exited to PH, 40% increased income, 25% reduction in CH, 25% shorter LOS, lower recidivism. What did our efforts achieve?

Outputs vs. Outcomes
Be mindful to distinguish between outputs and outcomes.
An output is:
• focused on what the program will do to achieve the outcome;
• a way to quantify the frequency and intensity of the activity;
• specific to the activity described for the program; and
• feasible and attainable.
Whereas an outcome is:
• focused on what the participant will gain from the program;
• a way to measure the client-level impact, with clear targets and methods for measuring change;
• attributable to (a result of) that program; and
• meaningful and attainable.
If outcomes show that the program works, outputs are needed to understand how to replicate the results.

Group Exercise: Outcome, Output, or Neither?

| Example | Answer |
|---|---|
| 150 clients received prevention counseling and one-time financial assistance. | Output |
| 90% of persons will obtain employment by completion of program. | Outcome |
| 75% of program staff will be trained in crisis management techniques. | Activity |
| Met 40% (50) of Permanent Supportive Housing goal. | Output |
| 65% of clients with a chronic medical condition will improve physical health. | Outcome |

Achieving Your Outcomes
Achieving your outcomes can be a progression.
Monitoring this progression requires data collected at different intervals:
• Short-term outcomes: What change will the client experience within a month of his/her involvement in the program? How will you measure this?
• Intermediate outcomes: What change will the client experience within a year of being involved in the program? How will you measure this?
• Long-term outcomes: What is the long-term (e.g., 3-year) impact of the program on clients? Has it been sustained? How will it be measured?

Example: Employment Program's Impact Over Time
100 people are expected to participate in the program annually.
• Short term: ~95 participants will complete job training classes. 93% of participants (97% of those who completed the job training class) will show improved job skills.
• Intermediate term: All of those who complete the training classes (~95 clients) will be referred to jobs and receive job placement counseling. 52% of participants (55% of those who complete the job training class) will obtain full-time employment.
• Long term: All of those who get a job (~52 clients) will receive weekly checkup calls and job counseling, as needed. 39% of participants (75% of those who get a job) will retain their jobs for more than 12 months.

Framework for Converting Program Goals into Outcomes
How do I convert program goals into measurable outcomes? What do I need to calculate the outcomes?
Step 1: Who is the base population for measuring results?
Step 2: What do you hope to achieve with this population?
Step 3: Within the base, how many persons achieved it?
Step 4: Outcome (%) = persons who achieved it (Step 3) ÷ base population (Step 1)
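Step 1 of the framework explains why the employment example reports two percentages for each outcome. The same count is divided by two different base populations. The Python sketch below computes both. The function name `outcome_pct` is illustrative and not from the workshop. The example's approximate annual targets (~95 completers, ~52 employed) are treated as exact counts.

```python
def outcome_pct(achieved: int, base: int) -> float:
    """Step 4: percent of the base population (Step 1) that achieved the goal (Step 3)."""
    if base <= 0:
        raise ValueError("base population must be positive")
    return 100.0 * achieved / base


# Annual targets from the employment-program example; "~95" and "~52"
# are approximate in the source and are treated as exact here.
participants = 100
completed_training = 95
improved_skills = 93
employed_full_time = 52
retained_12_months = 39

# outcome -> (persons who achieved it, narrower base used on the slide)
outcomes = {
    "improved job skills": (improved_skills, completed_training),
    "full-time employment": (employed_full_time, completed_training),
    "kept job > 12 months": (retained_12_months, employed_full_time),
}

for name, (achieved, narrower_base) in outcomes.items():
    print(
        f"{name}: {outcome_pct(achieved, participants):.0f}% of participants, "
        f"{outcome_pct(achieved, narrower_base):.1f}% of the narrower base"
    )
```

The narrower-base results are about 97.9%, 54.7% and 75.0%. These are close to the slide's 97%, 55% and 75%. The housing example that follows uses the same ratio: 20 ÷ 40 = 50%.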
Converting Program Goals into Outcomes: Example
Program goal: supporting participants in stable housing for at least 6 months
Step 1: Base = persons who have been enrolled more than 6 months or have exited (n = 40 people)
Step 2: Goal = remain housed more than 6 months
Step 3: 20 people are still in stable housing (6+ months) or exited after being in housing for 6+ months
Step 4: 20 ÷ 40 = 50% remain in stable housing at least 6 months

Look Out for Ambiguous Concepts
Developing and measuring performance outcomes often invites ambiguous concepts into the process. For example, what do we mean by:
• obtaining stable housing?
• obtaining employment?
• increasing income?
• accessing services?
• becoming more self-sufficient?
Which data elements and responses will count?

HMIS Data Elements Are the Building Blocks of Performance Measurement
Universal data elements: Name; Social Security Number; Date of Birth; Ethnicity & Race; Gender; Veteran Status; Disabling Condition; Residency Prior to Entry; Zip Code of Last Permanent Address; Entry Date; Exit Date; Person, Program, & Household ID
Program-specific data elements: Income & Sources*; Non-Cash Benefits*; Physical Disability; Developmental Disability; HIV/AIDS; Mental Health; Substance Abuse; Domestic Violence; Services Received; Destination; Reasons for Leaving
* These data elements are collected at entry and exit.
Wherever Possible, Use HMIS to Define Your Concepts
Based on the Destination HMIS data element, we can define stable housing (narrowly) using the following response categories:
• Emergency shelter
• Transitional housing
• Permanent housing
• Substance abuse facility or detox center
• Hospital (non-psychiatric)
• Jail, prison or juvenile detention center
• Room, apartment, or house that you rent
• Apartment or house that you own
• Staying/living with family
• Staying/living with friends
• Hotel or motel paid for without ES voucher
• Foster care home or group home
• Place not meant for human habitation
• Other
• Don't know
• Refused

Group Exercise: Employment Program
The goals of the program are to help unemployed clients obtain employment and to help employed clients get "better" jobs. During the past year, the program served 6 (unduplicated) persons:

| Client ID | Entry Date | Exit Date | Employment at Entry | Employment at Exit |
|---|---|---|---|---|
| 1 | 1/31/07 | 9/15/07 | Unemployed | Employed |
| 2 | 3/15/07 | 6/28/07 | Unemployed | Unemployed |
| 3 | 7/11/07 | -- | Unemployed | -- |
| 4 | 7/7/07 | 9/18/07 | Employed | Same employment |
| 5 | 8/2/06 | 5/12/07 | Employed | Higher paying job |
| 6 | 11/7/06 | 8/2/07 | Unemployed | Employed |

Define the Base Population for Each Goal
Is everyone part of the target population? For example, do you expect to calculate an outcome for everyone?
Goal 1: Achieve employment at exit
Goal 2: Obtain "better" employment at exit

Calculate the Outcome for Goal 1
Program goal 1: obtain employment at exit
Step 1: Base = all persons unemployed at entry who exited (N = 3)
Step 2: Goal = achieve employment
Step 3: 2 persons achieved employment
Step 4: 2 ÷ 3 = 67% achieved employment

Calculate the Outcome for Goal 2
Program goal 2: improved employment at exit
Step 1: Base = persons who were employed at entry and exited (N = 2)
Step 2: Goal = improve employment
Step 3: 1 person increased earnings
Step 4: 1 ÷ 2 = 50% gained better employment

Exercise 2 and the Performance Measurement Process
• Inputs: money ($250,000); staff (4 FTEs); 1 facility
• Activities: job training classes, interview assistance, job placement services
• Outputs: 6 enrolled in weekly services; 6 employment assessments; referred to an average of 4 jobs each
• Outcomes: 67% achieved employment; 50% improved employment

Using Outcomes to Inform Future Program Operations
Step 1: Review outcomes with program managers
Step 2: Develop action steps and timelines
Step 3: Implement action steps
Step 4: Regular monitoring

Step 1: Reviewing Outcomes with Managers (What's Going On?)
The program director and managers should review outcomes collaboratively to understand what the outcomes are suggesting. Break down the outcomes to understand the underlying forces:
• What are we doing right? What activities contributed to our ability to meet or exceed our benchmarks?
• Where do we need to improve? What activities fell short of producing the desired outcomes?
• What else might be contributing to our outcomes? How can we influence or mitigate these external forces to further our positive outcomes?

Step 2: Developing Action Steps and Timelines (Reinforcing the Good and Adjusting the Bad)
• Outcomes that were achieved or exceeded: continue to support the activities that led to our positive performance.
• Outcomes that were not achieved: allocate our inputs differently to support different types or levels of activities.
• Set target dates for reviewing all outcomes, e.g., at 3-month intervals.
• Collaborate with other service providers to "control" the external impacts on the program.

Step 3: Implementing the Action Steps (Getting Buy-In Through Information Sharing)
• You can't implement what you don't understand: program directors, managers and front-line staff must understand the reasons for making changes in program operations.
• Information sharing promotes the idea that "we are all in this together."
• Information sharing is fluid: program directors, managers and front-line staff can learn from one another; it is not a one-way (top-down) process.

Step 4: Regular Monitoring (It's Easier to Adjust Program Operations Incrementally than Wholesale)
• Monitor your progress by generating your performance outcomes at different periods of time, e.g., at 3-month intervals.
• Adjust your approach as needed, but usually incrementally.
• Acknowledge that clients' needs may shift, so program goals and approach may also need to shift.

Comparing Program Results
• You can compare results from one program to another to see which programs are working best and which are working least well.
  – With limited dollars, you want to fund the programs that are most effective.
  – You can use program results to identify best-practice programs and those that need technical assistance (TA).
  – You can use results from multiple programs to help set a community expectation or standard of performance.

Case Study: How Washington, D.C. Uses Program Results
• Outputs/efficiency measures
  – Clients served
  – Chronically homeless served
  – Occupancy: the rate at which the program was used
• Interim outcome measures
  – Permanent housing: positive client destinations at exit (TH programs); retaining clients for 6+ months (PSH programs)
  – Income: the amount of income or sources obtained
  – Self-sufficiency: change in substance use, education, mental illness or employment
• Measures apply differently to each program type and are supplemented with qualitative data for ranking purposes.

Washington, DC FY 07 DHS Performance Measures
Provider types: outreach programs; severe weather and low-barrier shelters; temporary shelter; transitional programs; permanent supportive housing; supportive service programs.
Performance measures: clients served; chronically homeless; occupancy rate; housing destinations; income; length of stay; self-sufficiency*.
* Temporary, transitional and permanent supportive housing programs that are required to submit a self-sufficiency indicator must choose from substance abuse, education, mental illness assistance, or employment.
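Raw rates can mislead when programs serve different client mixes. The risk-adjustment slides that follow address this concern.

The sketch below uses the earned-income figures for Program A and Program B from the workshop's illustration of dissecting client outcomes. It applies indirect standardization, one common risk-adjustment technique, chosen here as an assumption rather than as the presenters' stated method. Each program's observed successes are compared with the number expected if its clients had succeeded at the pooled CoC rate for their subgroup (disabled or non-disabled). The `Group` type and both function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Group:
    achieved: int  # clients who increased earned income
    total: int     # clients in the subgroup


# Earned-income figures from the Program A / Program B illustration.
PROGRAMS = {
    "Program A": {"disabled": Group(1, 10), "non-disabled": Group(60, 90)},
    "Program B": {"disabled": Group(30, 250), "non-disabled": Group(40, 50)},
}


def coc_rates(programs):
    """Pool every program's clients to get the CoC-wide rate per subgroup."""
    pooled = {}
    for groups in programs.values():
        for name, g in groups.items():
            achieved, total = pooled.get(name, (0, 0))
            pooled[name] = (achieved + g.achieved, total + g.total)
    return {name: achieved / total for name, (achieved, total) in pooled.items()}


def observed_vs_expected(groups, rates):
    """Indirect standardization: expected = sum of subgroup size x CoC rate."""
    observed = sum(g.achieved for g in groups.values())
    expected = sum(g.total * rates[name] for name, g in groups.items())
    return observed, expected


rates = coc_rates(PROGRAMS)
for program, groups in PROGRAMS.items():
    clients = sum(g.total for g in groups.values())
    observed, expected = observed_vs_expected(groups, rates)
    print(
        f"{program}: raw rate {observed / clients:.0%}, observed {observed}, "
        f"expected {expected:.1f}, O/E {observed / expected:.2f}"
    )
```

The pooled rates reproduce the CoC figures on the slide (31 of 260 and 100 of 140). The observed-to-expected (O/E) ratio comes out at about 0.93 for Program A and 1.07 for Program B. Program B's raw rate (23%) is far below Program A's (61%), but B mostly serves disabled clients. Within each subgroup, B does better than A.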
Apples to Apples: Risk Adjustment
• Problem: comparing program results can encourage programs to "cream" (enroll the clients most likely to succeed) to ensure strong results.
• Solution: risk adjustment allows you to account for differences in client populations when comparing results across programs.
Results can be adjusted on the basis of:
• client characteristics, such as demographics, family size, or disability;
• client history, such as past eviction or criminal background; and
• client functionality or level of engagement/commitment to change.

Risk Adjustment Requires Expertise
• To adjust for client differences, programs need to collect consistent data on clients to use when analyzing program results.
  – Agree on these standards beforehand.
• Develop an analysis plan for how you intend to adjust for client differences.
  – Engage a researcher to help develop the plan.
• Even if you don't formally adjust results, acknowledge that different programs may have different outcome expectations based on differences in the clients they target and/or serve.

Simplified Illustration of Dissecting Client Outcomes on Increased Earned Income

| Population | Program A | Program B | CoC outcome results |
|---|---|---|---|
| All clients | 61% (61 of 100 clients) | 23% (70 of 300 clients) | -- |
| Disabled clients | 10% (1 of 10 persons) | 12% (30 of 250 persons) | 12% (31 of 260 persons) |
| Non-disabled clients | 67% (60 of 90 persons) | 80% (40 of 50 persons) | 71% (100 of 140 persons) |

By establishing targets, programs can be compared against CoC expectations in the future to determine whether program performance is higher or lower than expected.

System Performance Measurement
• Are your actions achieving your intended goals at the system level?
  – Does the system work?
  – If yes, what makes it work?
  – If no, what part doesn't work, and how do you fix it?
• Note that you may have system goals that relate only to certain types of clients or parts of the system (e.g., different goals for severely disabled persons).

Sample Impact Measures
• Incidence of homelessness: Is homelessness declining?
• Incidence of street or chronic homelessness (CH): Is street or chronic homelessness declining?
• Length of stay in the system, across all homeless programs: Do people stay homeless for shorter periods of time?
• Prevention: Are fewer people experiencing homelessness for the first time?
• Rates of recidivism: Are repeat occurrences of homelessness avoided or declining?
Cross-tabulate results by core characteristics to understand if and how results vary for different subpopulations.

Steps to Calculate System Length of Stay
Step 1: Create a table with all entry/exit dates by client.
Step 2: De-duplicate clients across programs.
Step 3: Calculate the length of stay (LOS) for each stay.
Step 4: Consolidate sequential stays into a single episode (gaps of less than 30 days = same episode).
Step 5: Calculate the mean (168 days), low (81 days), and high (309 days).

| Client ID | Program ID | Entry Date | Exit Date | LOS per stay (days) | LOS per episode (days) |
|---|---|---|---|---|---|
| 1 | A | 5/8/07 | 5/30/07 | 22 | 114 |
| 1 | B | 6/1/07 | 9/1/07 | 92 | |
| 2 | A | 3/1/07 | 5/21/07 | 81 | 81 |
| 3 | C | 2/1/06 | 12/7/06 | 309 | 309 |

Some Notes of Caution
• There is more to performance measurement than conducting the analysis.
  – Educate, train, and obtain buy-in.
• Be careful about how you interpret and use the data:
  1. Jump in, but don't be careless in how you use the results.
  2. Look at the results within the context of all the outputs, interim measures and impact measures to validate the interpretation being made.
  3. Vet the results before publicly releasing anything.
  4. Appropriately caveat the limitations of the data and analysis.

Summary of System Performance Measurement Activities
• Inputs: use program-level and system-wide results to adjust the use of resources.
• Activities: adjust the type and intensity of activities based on outcomes; track whether results improve.
• Outputs: document the level of effort provided; use them to ensure activities are delivered efficiently.
• Outcomes: interim outcomes signal client success; impact outcomes track progress toward goals.

Questions?
Contact us for more information or assistance:
Michelle Abbenante, michelle_abbenante@abtassoc.com
Brooke Spellman, brooke_spellman@abtassoc.com
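The five steps for calculating system length of stay can be sketched in Python using the example table's dates. This minimal sketch rests on three assumptions:
• client IDs are already de-duplicated across programs (Step 2);
• client 1's first entry is read as 5/8/07, which matches its 22-day LOS;
• an episode's length is the sum of its stays' LOS, excluding gap days, which reproduces the 114 days shown for client 1.

The names `STAYS` and `episode_lengths` are illustrative.

```python
from datetime import date
from statistics import mean

# Step 1: every stay as (client ID, program ID, entry date, exit date).
# Client 1's first entry is read as 5/8/07 so that its LOS is 22 days.
STAYS = [
    ("1", "A", date(2007, 5, 8), date(2007, 5, 30)),
    ("1", "B", date(2007, 6, 1), date(2007, 9, 1)),
    ("2", "A", date(2007, 3, 1), date(2007, 5, 21)),
    ("3", "C", date(2006, 2, 1), date(2006, 12, 7)),
]


def episode_lengths(stays, max_gap=30):
    """Return the LOS in days of each homeless episode, client by client."""
    # Step 2: group stays by client; IDs are assumed already de-duplicated
    # across programs (e.g., a shared HMIS person ID).
    by_client = {}
    for client_id, _program_id, entry, exit_ in stays:
        by_client.setdefault(client_id, []).append((entry, exit_))

    episodes = []
    for client_stays in by_client.values():
        client_stays.sort()
        last_exit, episode_los = None, 0
        for entry, exit_ in client_stays:
            stay_los = (exit_ - entry).days  # Step 3: LOS for each stay
            if last_exit is not None and (entry - last_exit).days < max_gap:
                # Step 4: gap under max_gap days, so same episode;
                # add this stay's days (the gap itself is not counted)
                episode_los += stay_los
            else:
                if last_exit is not None:
                    episodes.append(episode_los)  # close the previous episode
                episode_los = stay_los
            last_exit = exit_ if last_exit is None else max(exit_, last_exit)
        episodes.append(episode_los)
    return episodes


los = episode_lengths(STAYS)
# Step 5: summary statistics across episodes
print(f"mean {mean(los):.0f} days, low {min(los)} days, high {max(los)} days")
```

It prints a mean of 168 days, a low of 81 days and a high of 309 days, matching the example. This simple summation would double-count overlapping stays. Real HMIS data may therefore need an extra step that merges overlapping date ranges.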