garth-Programming - School of Computer Science


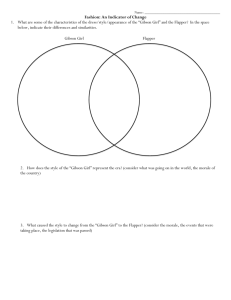

Programming Models
Cloud Computing
Garth Gibson, Greg Ganger, Majd Sakr, Raja Sambasivan
G. Gibson, Mar 3, 2014

Recall the SaaS, PaaS, IaaS Taxonomy
• Service, Platform or Infrastructure as a Service
  o SaaS: service is a complete application (client-server computing)
    • Not usually a programming abstraction
  o PaaS: high-level (language) programming model for a cloud computer
    • E.g., rapid prototyping languages
    • Turing complete, but resource management is hidden
  o IaaS: low-level (language) computing model for a cloud computer
    • E.g., assembler as a language
    • Basic hardware model with all (virtual) resources exposed
• For PaaS and IaaS, cloud programming is needed
  o How is this different from CS 101? Scale, fault tolerance, elasticity, ...

Embarrassingly parallel "killer app": Web servers
• Online retail stores (like the ice.com example)
  o Most of the computational demand is for browsing product marketing, forming and rendering web pages, and managing customer session state
    • Actual order taking and billing are not as demanding and have separate specialized services (e.g., the Amazon bookseller backend)
  o One customer session needs a small fraction of one server
  o No interaction between customers (unless inventory is near exhaustion)
• Parallelism is more cores running identical copies of the web server
• Load balancing, maybe in the name service, is the parallel programming
  o Elasticity needs a template service, load monitoring, and cluster allocation
  o These need not require user programming, just configuration

E.g., Obama for America Elastic Load Balancer
[Figure]

What about larger apps?
• Parallel programming is hard: how can cloud frameworks help?
• Collection-oriented languages (Sipelstein & Blelloch, Proc. IEEE v79, n4, 1991)
  o Also known as data-parallel
  o Specify a computation on an element; apply it to each element in the collection
    • Analogy to SIMD: single instruction on multiple data
  o Specify an operation on the collection as a whole
    • Union/intersection, permute/sort, filter/select/map
    • Reduce-reorderable (A) / reduce-ordered (B)
      – (A) E.g., ADD(1,7,2) = (1+7)+2 = (2+1)+7 = 10
      – (B) E.g., CONCAT("the ", "lazy ", "fox ") = "the lazy fox "
• Note the link to MapReduce ... it's no accident

High Performance Computing Approach
• HPC was almost the only home for parallel computing in the 90s
• Physical simulation was the killer app: weather, vehicle design, explosions/collisions, etc.; replace "wet labs" with "dry labs"
  o Physics is the same everywhere, so define a mesh on a set of particles, code the physics you want to simulate at one mesh point as a function of the influence of nearby mesh points, and iterate
  o Bulk Synchronous Parallel (BSP): run all updates of mesh points in parallel based on the values at the last time point, form a new set of values, and repeat
• Defined "weak scaling" for bigger machines: rather than make a fixed problem go faster (strong scaling), make a bigger problem go at the same speed
  o The most demanding users set the problem size to match total available memory

High Performance Computing Frameworks
• Machines cost O($10-100 million), so
  o emphasis was on maximizing utilization of machines (Congress checks)
  o low-level speed and hardware-specific optimizations (esp. network)
  o preference for expert programmers following established best practices
• Developed the MPI (Message Passing Interface) framework (e.g., MPICH)
  o Launch N threads with library routines for everything you need:
    • Naming, addressing, messaging, synchronization (barriers)
    • Transforms, physics modules, math libraries, etc.
  o Resource allocators and schedulers space-share jobs on the physical cluster
  o Fault tolerance by checkpoint/restart, requiring the programmer to save/restore state
  o Proto-elasticity: kill the N-node job and restart it from a past checkpoint on M nodes
• Very manual, deep learning curve, few commercial runaway successes

Broadening HPC: Grid Computing
• Grid computing started with commodity servers (predates cloud)
• Frameworks were less specialized, easier to use (& less efficient)
  o Beowulf, PVM (Parallel Virtual Machine), Condor, Rocks, Sun Grid Engine
• For funding reasons, grid computing emphasized multi-institution sharing
  o So authentication, authorization, single sign-on, parallel FTP
  o Heterogeneous workflow (run job A on machine B, then job C on machine D)
• Basic model: jobs are selected from a batch queue and take over the cluster
• Simplified "pile of work": when a core comes free, take a task from the run queue and run it to completion

Cloud Programming, back to the future
• HPC demanded too much expertise, too many details and too much tuning
• Cloud frameworks are all about making parallel programming easier
  o Willing to sacrifice efficiency (too willing, perhaps)
  o Willing to specialize to the application (rather than the machine)
• The canonical BigData user has data & processing needs that require lots of computing, but doesn't have CS or HPC training & experience
  o Wants to learn the least amount of computer science needed to get results this week
  o Might later want to learn more if the same jobs become a personal bottleneck
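
To make the reduce-reorderable / reduce-ordered distinction from the collection-oriented languages slide concrete, here is a minimal Python sketch, not taken from any framework: the input is split into contiguous partitions, each partition is reduced independently (as separate workers might), and the partial results are then combined. The helper name `partitioned_reduce` and the shuffled combine order are illustrative assumptions.

```python
import operator
import random
from functools import reduce
from typing import Callable, List, TypeVar

T = TypeVar("T")


def partitioned_reduce(op: Callable[[T, T], T], items: List[T], num_partitions: int,
                       shuffle_partials: bool = False, seed: int = 0) -> T:
    """Reduce each contiguous partition, then combine the partial results.

    Hypothetical helper for illustration; `items` must be non-empty and
    `num_partitions` >= 1. Correct for any associative `op` when partials
    are combined in order. Shuffling the partials (simulating arbitrary
    arrival order) is only safe if `op` is also commutative, i.e. the
    reduce is reorderable.
    """
    size = -(-len(items) // num_partitions)  # ceiling division
    chunks = [items[i:i + size] for i in range(0, len(items), size)]
    partials = [reduce(op, chunk) for chunk in chunks]  # per-"worker" work
    if shuffle_partials:
        random.Random(seed).shuffle(partials)  # results arrive in arbitrary order
    return reduce(op, partials)


if __name__ == "__main__":
    # (A) reduce-reorderable: ADD gives 10 whatever order the partials arrive in
    print([partitioned_reduce(operator.add, [1, 7, 2], 3, True, s) for s in range(5)])
    # (B) reduce-ordered: CONCAT is right only when partials keep their order
    print(partitioned_reduce(operator.add, ["the ", "lazy ", "fox "], 3))
```

Calling the CONCAT case with `shuffle_partials=True` would generally scramble the words, which is why a runtime needs to know whether a reduce is reorderable before it pre-aggregates or reorders the work.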

Cloud Programming Case Studies
• MapReduce
  o Packages two Sipelstein91 operators, filter/map and reduce, as the base of a data-parallel programming model built around Java libraries
• DryadLinq
  o Compiles workflows of different data processing programs into schedulable processes
• GraphLab
  o Specializes to iterative machine learning with local update operations and dynamic rescheduling of future updates

MapReduce (Majd)

DryadLinq
• Simplify efficient data-parallel code
  o Compiler support for imperative and declarative (e.g., database) operations
  o Extends MapReduce to workflows that can be collectively optimized
• Data flows on edges between processes at vertices (workflows)
• Coding is processes at vertices and expressions representing the workflow
• The interesting part of the compiler operates on the expressions
  o Inspired by traditional database query optimizations: rewrite the execution plan with an equivalent plan that is expected to execute faster

DryadLinq
• Data flowing through a graph abstraction
  o Vertices are programs (possibly different at each vertex)
  o Edges are data channels (pipe-like)
  o Requires programs to have no side effects (no changes to shared state)
  o Apply function is similar to MapReduce's reduce: open-ended user code
• Compiler operates on expressions, rewriting execution sequences
  o Exploits prior work on a compiler for workflows on sets (LINQ)
  o Extends traditional database query planning with less type-restrictive code
    • Unlike traditional plans, virtualizes resources (so might spill to storage)
  o Knows how to partition sets (hash, range and round robin) over nodes
  o Doesn't always know what processes do, so its optimizer is less powerful than a database's; where it can't infer what is happening, it takes hints from users
  o Can auto-pipeline, remove redundant partitioning, reorder partitionings, etc.
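
The DryadLinq slides note that the compiler knows how to partition sets by hash, range, and round robin. As a rough sketch of what each scheme computes, here is plain Python with hypothetical helper names; this is not the DryadLINQ API, which is built on .NET and LINQ.

```python
import bisect
import zlib
from typing import Callable, List, Sequence, TypeVar

R = TypeVar("R")


def hash_partition(records: Sequence[R], key: Callable[[R], object], n: int) -> List[List[R]]:
    """Records with equal keys always land in the same partition."""
    parts: List[List[R]] = [[] for _ in range(n)]
    for r in records:
        # crc32 of the key's text is stable across runs
        # (Python's built-in hash() of str is randomized per process)
        parts[zlib.crc32(str(key(r)).encode("utf-8")) % n].append(r)
    return parts


def range_partition(records: Sequence[R], key: Callable[[R], float],
                    boundaries: Sequence[float]) -> List[List[R]]:
    """Partition i holds keys k with boundaries[i-1] <= k < boundaries[i]."""
    parts: List[List[R]] = [[] for _ in range(len(boundaries) + 1)]
    for r in records:
        parts[bisect.bisect_right(boundaries, key(r))].append(r)
    return parts


def round_robin_partition(records: Sequence[R], n: int) -> List[List[R]]:
    """Deal records out in turn: balances partition sizes and ignores keys."""
    parts: List[List[R]] = [[] for _ in range(n)]
    for i, r in enumerate(records):
        parts[i % n].append(r)
    return parts


if __name__ == "__main__":
    words = ["fox", "the", "lazy", "the", "dog", "fox"]
    print(round_robin_partition(words, 3))  # [['fox', 'the'], ['the', 'dog'], ['lazy', 'fox']]
    print(range_partition([5, 10, 15, 20, 25], lambda k: k, [10, 20]))  # [[5], [10, 15], [20, 25]]
    print(hash_partition(words, lambda w: w, 3))  # both "the"s together, both "fox"es together
```

Hash partitioning is what a group-by or MapReduce-style reduce typically needs, because every record with a given key meets on one node. Range partitioning also keeps keys ordered across partitions, which helps sorting and merge steps. Round robin balances partition sizes when the next operator does not care about keys.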

Example: MapReduce (reduce-reorderable)
[Figure]
• The DryadLinq compiler can pre-reduce, partition, sort-merge, and partially aggregate
• In MapReduce you "configure" this yourself

Background on Regression
• Regression problem: for a given input A and observation Y, find the unknown parameter x such that $A x = Y$, where $A$ is an $n \times m$ matrix, $x$ is $m \times 1$, and $Y$ is $n \times 1$
• E.g., Alzheimer's disease data: 463 samples by 509K features. In a pair-wise study, the number of features would be inflated to $10^{11}$.
• Sparse regression is one variation of regression that favors a small number of non-zero parameters, corresponding to the most relevant features.

E.g., Medical Research
• Find genetic patterns predicting disease
[Figure: samples (patients) shown as genetic sequences, e.g., AT...CG T AAA vs. AT...CG G AAA; the differing position is labeled "genetic variation associated with disease"]
• Millions to $10^{11}$ (pair-wise gene study) dimensions

HPC Style: Bulk Synchronous Parallel
[Figure: iterative improvement of an estimated "solution"; Threads 1-4 each run iterations 1-5 in lockstep along the time axis]
• Threads synchronize (wait for each other) every iteration
• Parameters are read/updated at synchronization barriers
• Repetitive file processing: Mahout, DryadLinq, Spark
• Distributed shared memory: Pregel, Hama, Giraph, GraphLab

Synchronization can be costly
[Figure: Threads 1-4 completing iterations 1-3 at different times along the time axis]
• Machines' performance or assigned work may be unequal
  o So threads must wait for each other
• And larger clusters suffer longer communication in barrier sync
• If you can, do more work between syncs, but that is not always possible

Can we just run asynchronous?
• Parameters are read/updated at any time
[Figure: Threads 1-4 running iterations 1-6 at their own pace along the time axis, out of step with each other]
• Threads proceed to the next iteration without waiting
• Threads are not on the same iteration number
• In most computations this leads to the wrong answer
• In search/solve, however, it might still converge, but it might also diverge

GraphLab: managing asynchronous ML
• GraphLab provides a higher-level programming model
  o Data is associated with vertices and edges between vertices, inherently sparse (otherwise we'd use a matrix representation instead)
  o Program a vertex update based on its edges & neighbor vertices
  o Background code is used to test whether it's time to terminate updating
• BSP can be cumbersome & inefficient
  o Iterative algorithms may do little work per iteration and may not need to move all the data each iteration
  o Use traditional database transaction isolation for consistency

Scheduling is a green field in ML
• GraphLab proposes that updates do their own scheduling
  o Baseline: sequential update of each vertex once per iteration
  o Sparseness allows non-overlapping updates to execute in parallel
  o Opportunity for smart schedulers to exploit more application properties
    • Prioritize specific updates over others because they communicate more information more quickly

One way to think about scheduling
• "Partial" synchronicity
  o Spread network communication evenly (don't sync unless needed)
  o Threads usually shouldn't wait, but mustn't drift too far apart!
• Straggler tolerance
  o Slow threads must somehow catch up
[Figure: Threads 1-4 running iterations 1-6 along the time axis, annotated "Make thread 1 catch up" and "Force threads to sync up"]

Stale Synchronous Parallel (SSP)
[Figure: Threads 1-4 progressing along an iteration axis (0-9) with staleness threshold 3; Thread 1 waits until Thread 2 has reached iteration 4. Note: the x-axis is now iteration count, not time!]
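
The waiting rule in the SSP figure can be sketched as a small schedule calculator. This is a toy model under stated assumptions, not the authors' implementation: each worker takes a fixed, hypothetical time per iteration, and a worker may start iteration k only after every worker has finished iteration k - 1 - s, where s is the staleness bound, so s = 0 reduces to a BSP barrier. Under this convention the slowest thread is always working on an iteration within s of the one the fastest thread is starting.

```python
from typing import List, Tuple


def ssp_schedule(durations: List[float], iterations: int,
                 staleness: int) -> Tuple[List[List[float]], float]:
    """Compute when each worker starts each iteration under a staleness bound.

    Assumed rule (toy model): worker w may start iteration k (1-based) once it
    has finished its own iteration k-1 AND every worker has finished iteration
    k-1-staleness. staleness=0 is a BSP barrier. durations[w] is a hypothetical
    fixed cost per iteration on worker w.

    Returns (start, waiting): start[w][k-1] is when worker w starts iteration k,
    and waiting is the total time all workers spent blocked on slower workers.
    """
    n = len(durations)
    # finish[w][j]: time worker w finished iteration j; index 0 means "t = 0"
    finish = [[0.0] * (iterations + 1) for _ in range(n)]
    start = [[0.0] * iterations for _ in range(n)]
    waiting = 0.0
    for k in range(1, iterations + 1):
        gate = k - 1 - staleness  # iteration every worker must have finished
        barrier = max(finish[v][gate] for v in range(n)) if gate >= 1 else 0.0
        for w in range(n):
            ready = finish[w][k - 1]  # w has finished its own previous iteration
            start[w][k - 1] = max(ready, barrier)
            waiting += start[w][k - 1] - ready  # time blocked by the staleness bound
            finish[w][k] = start[w][k - 1] + durations[w]
    return start, waiting


if __name__ == "__main__":
    # two fast workers and one straggler that is 3x slower (hypothetical costs)
    for s in (0, 1, 3):
        _, wait = ssp_schedule([1, 1, 3], iterations=4, staleness=s)
        print(f"staleness={s}: total waiting={wait}")
```

In this toy run the fast workers' total waiting falls from 12 to 6 to 0 time units as s goes from 0 (BSP) to 1 to 3. This is a small-scale echo of the point that SSP trades bounded staleness for less time blocked on stragglers.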
• Allow threads to usually run at their own pace: mostly asynchronous
• The fastest and slowest threads are not allowed to drift more than S iterations apart: this bounds the error
• Threads cache (stale) versions of parameters, to reduce network traffic

Why does SSP converge?
• Instead of $x_{\text{true}}$, SSP sees $x_{\text{stale}} = x_{\text{true}} + \text{error}$
• The error caused by staleness is bounded
• Over many iterations, the average error goes to zero

SSP uses networks efficiently
[Figure: time breakdown, compute vs. network, for LDA on 32 machines (256 cores), 10% of data per iteration; y-axis: seconds (0-8000), split into network waiting time and compute time; x-axis: staleness (0 = BSP, up to 48)]
• SSP balances network and compute time

Advanced Cloud Computing Programming Models
• Ref 1: Jeffrey Dean and Sanjay Ghemawat. "MapReduce: Simplified Data Processing on Large Clusters." OSDI'04, 2004. http://static.usenix.org/event/osdi04/tech/full_papers/dean/dean.pdf
• Ref 2: Yuan Yu, Michael Isard, Dennis Fetterly, Mihai Budiu, Ulfar Erlingsson, Pradeep Kumar Gunda, and Jon Currey. "DryadLINQ: A System for General-Purpose Distributed Data-Parallel Computing Using a High-Level Language." OSDI'08, 2008. http://research.microsoft.com/enus/projects/dryadlinq/dryadlinq.pdf
• Ref 3: Yucheng Low, Joseph Gonzalez, Aapo Kyrola, Danny Bickson, Carlos Guestrin, and Joseph M. Hellerstein. "GraphLab: A New Parallel Framework for Machine Learning." Conference on Uncertainty in Artificial Intelligence (UAI), 2010. http://www.select.cs.cmu.edu/publications/scripts/papers.cgi