8.0_Impact Evaluation Logic, Benefits, and Limitations by Joseph

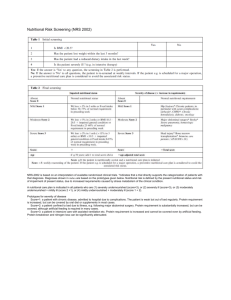

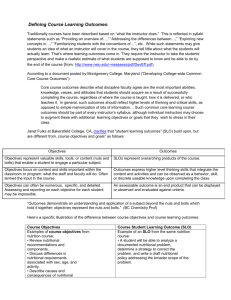

Impact Evaluation: Logic, Benefits and Limitations

Outline
• Theory of change
• Experimental method – RCT
• Quasi-experimental methods
• Managing impact evaluations

Think about theory
[Two charts: test scores plotted against pupil teacher ratio]
So we need evidence to know which theory is right

Examples of ‘atheoretical’ IEs
• School capitation grant studies that don’t ask how the money was used
• BCC intervention studies that don’t ask if behaviour has changed (indeed, almost any study that does not capture behavior change)
• Microfinance studies that don’t look at use of funds and cash flow
• Studies of capacity development that don’t ask if knowledge acquired and used

Common questions in TBIE

Targeting
• Design: The right people?
• Implementation: Type I and II errors
• Evaluation questions: Why is targeting failing? (protocols faulty, not being followed, corruption ..)

Training / capacity building
• Design: Right people? Is it appropriate? Mechanisms to ensure skills utilized
• Implementation: Quality of delivery. Skills / knowledge acquired and used
• Evaluation questions: Have skills / knowledge changed? Are skills / knowledge being applied? Do they make a difference to outcomes?

Intervention delivery
• Design: Tackling a binding constraint? Appropriate? Within local institutional capacity
• Implementation: Delivered as intended: protocols followed, no leakages, technology functioning and maintained
• Evaluation questions: What problems have been encountered in implementation? When did first benefits start being realized? How is the intervention perceived by IA staff and beneficiaries?

Behavior change
• Design: Is desired BC culturally possible and appropriate; will it benefit intended beneficiaries?
• Implementation: Is BC being promoted as intended (right people, right message, right media?)
• Evaluation questions: Is behavior change occurring? If not, why not?
ToC: micro-insurance
Stages: Design → Intermediate outcomes → Final outcomes (insured) and Final outcomes (uninsured)

Design
• Design of insurance product
• Marketing

Outcomes along the chain
• Adoption of insurance product
• Consumption smoothing and assets protected
• Average income may be lower
• Savings utilized and for more productive (possibly riskier) investments
• Income increase
• Employment generation
• Increased utilization of health services (and better quality health services)
• Better health
• Positive health spillover effects
• Ambiguous impact on out of pocket expenses. Likely reduction in catastrophic expenses

Assumptions
• Design is appropriate (something people need) (Relevance)
• Product is well-marketed to target market
• Concept of insurance is well-understood
• Premiums are affordable
• Take up is sufficient for product to be sustainable (Sustainability)
• Premiums are paid
• Lack of adverse selection in measurement of impact on utilization
• Insurance pays out in a timely manner
• Insurance accepted by service providers
• Absence of moral hazard in behavioural response of insured
• Lack of adverse selection in measurement of impact on health status

Why did the Bangladesh Integrated Nutrition Project (BINP) fail?

Comparison of impact estimates

Summary of theory
• Target group participate in program (mothers of young children)
• Target group for nutritional counselling is the relevant one
• Exposure to nutritional counselling results in knowledge acquisition and behaviour change
• Behaviour change sufficient to change child nutrition
• Children are correctly identified to be enrolled in the program
• Food is delivered to those enrolled
• Supplementary feeding is supplemental, i.e. no leakage or substitution
• Food is of sufficient quantity and quality
• Improved nutritional outcomes

The theory of change
The same causal chain is repeated across several slides, each highlighting one link:
• Participation rates were up to 30% lower for women living with their mother-in-law
• Right target group for nutritional counselling
• Knowledge acquired and used
• Supplementary feeding is supplementary

Lessons from BINP
• Apparent successes can turn out to be failures
• Outcome monitoring does not tell us impact and can be misleading
• A theory based impact evaluation shows if something is working and why
• Quality of match for rigorous study
• Independent study got different findings from project commissioned study

Problems in implementing rigorous impact evaluation: selecting a comparison group
• Spill over effects: effects on non-target group
• Contagion (aka contamination): other interventions or self-contamination from spillovers
• Selection bias: beneficiaries are different
• Ethical and political considerations

The problem of selection bias
Program participants are not chosen at random, but selected through
• Program placement
• Self selection
This is a problem if the correlates of selection are also correlated with the outcomes of interest, since those participating would do better (or worse) than others regardless of the intervention.

Selection bias from program placement
• A post-conflict social cohesion program is placed in communities with the most conflict-affected persons
• Since these areas have high numbers of conflict-affected persons, social cohesion may be lower than elsewhere to start with
• So comparing these communities with other communities will find lower social cohesion in project communities, because of how they were chosen, not because the project isn’t working
• The comparison group has to be drawn from similarly deprived areas

Selection bias from self-selection
• A community fund is available for community-identified projects
• An intended outcome is to build social capital for future community development activities
• But those communities with higher degrees of cohesion and social organization (i.e. social capital) are more likely to be able to make proposals for financing
• Hence social capital is higher amongst beneficiary communities than non-beneficiaries regardless of the intervention, so a comparison between these two groups will overstate program impact

Examples of selection bias
• Hospital delivery in Bangladesh (0.115 vs 0.067)
• Secondary education and teenage pregnancy in Zambia
• Male circumcision and HIV/AIDS in Africa

HIV/AIDS and circumcision: geographical overlay

Main point
There is ‘selection’ in who benefits from nearly all interventions. So we need a comparison group which has the same characteristics as those selected for the intervention.
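The self-selection example above can be illustrated with a small simulation. This is only a sketch under invented assumptions: the function name, the application probabilities (0.8 and 0.2) and the noise levels are hypothetical and do not come from the slides. It shows that when communities with more baseline social capital are more likely to apply, a naive comparison finds a gap even when the true effect is zero.

```python
import random
from statistics import mean


def naive_gap_under_self_selection(n_communities=10_000, true_effect=0.0, seed=42):
    """Naive treated-minus-untreated gap when cohesive communities self-select.

    All parameters are illustrative assumptions, not estimates from any study.
    """
    rng = random.Random(seed)
    treated, untreated = [], []
    for _ in range(n_communities):
        baseline = rng.gauss(0.0, 1.0)  # pre-existing social capital
        # Self-selection: communities with above-average social capital
        # are assumed to be more likely to submit a proposal.
        p_apply = 0.8 if baseline > 0 else 0.2
        participates = rng.random() < p_apply
        noise = rng.gauss(0.0, 0.5)
        outcome = baseline + (true_effect if participates else 0.0) + noise
        (treated if participates else untreated).append(outcome)
    return mean(treated) - mean(untreated)


gap_no_effect = naive_gap_under_self_selection(true_effect=0.0)
gap_with_effect = naive_gap_under_self_selection(true_effect=1.0)
print(f"Naive gap, true effect 0.0: {gap_no_effect:.2f}")
print(f"Naive gap, true effect 1.0: {gap_with_effect:.2f}")
```

Because the seed is fixed, both runs select the same communities, so the difference between the two printed gaps equals the true effect while the first gap is pure selection bias.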
Dealing with selection bias
• Need to use experimental (RCTs) or quasi-experimental methods to cope with selection bias
• This is what has been meant by rigorous impact evaluation

Experimental method
Called by different names:
• Random assignment studies
• Randomized field trials
• Social experiments
• Randomized controlled trials (RCTs)
• Randomized controlled experiments

Randomization (RCTs)
• Randomization addresses the problem of selection bias by the random allocation of the treatment
• Randomization may not be at the same level as the unit of intervention
  • Randomize across schools but measure individual learning outcomes
  • Randomize across sub-districts but measure village-level outcomes
• The fewer units over which you randomize, the higher your standard errors
• So you need to randomize across a ‘reasonable number’ of units
  • At least 30 for simple randomized design (though possible imbalance considered a problem for n < 200)
  • Can possibly be as few as 10 for matched pair randomization, though literature is not clear on this

Claim: The experimental method produces estimates of missing counterfactuals by randomization (Angrist and Pischke, 2008); that is, randomization solves the selection problem.

How? The independence of outcomes (Y) and treatment assignment (T) in a randomized experiment allows one to substitute the observable mean outcome of the untreated, E[Y0|T=0], for the missing mean outcome of the treated when not treated, E[Y0|T=1].

Randomized assignment implies that the distributions of both observable and unobservable characteristics in the treatment and control groups are statistically identical. That is, members of the groups (treatment and control) do not differ systematically at the outset of the experiment.
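The substitution argument can be written out as the standard decomposition of a naive comparison of means. The symbol Y_1 (outcome with treatment) is an assumption added here to pair with the Y0 used in the text.

```latex
\begin{align*}
\underbrace{E[Y_1 \mid T=1] - E[Y_0 \mid T=0]}_{\text{observed difference in means}}
  &= \underbrace{E[Y_1 \mid T=1] - E[Y_0 \mid T=1]}_{\text{effect on the treated}}
   + \underbrace{E[Y_0 \mid T=1] - E[Y_0 \mid T=0]}_{\text{selection bias}} \\
\text{Random assignment: } Y_0 \perp T
  &\;\Longrightarrow\; E[Y_0 \mid T=1] = E[Y_0 \mid T=0]
  \;\Longrightarrow\; \text{selection bias} = 0
\end{align*}
```

With the selection term equal to zero, the observed difference in means identifies the effect on the treated.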
Key advantage of experimental method
Because members of the groups (treatment and control) do not differ systematically at the outset of the experiment, any difference that subsequently arises between them after administering treatment can be attributed to the treatment rather than to other factors.

Typical experimental design involves two randomizations
Experimental method usually refers to the 2nd randomization: assignment to treatment and control.

POPULATION → (randomized sampling) → SAMPLE → (randomized assignment) → TREATMENT / CONTROL

• The first stage ensures that the results of the sample will represent the results in the population within a defined level of sampling error (external validity)
• The second stage ensures that the observed effect on the outcome is due to treatment rather than other confounding factors (internal validity)

Sample Size and Power
How large does a sample need to be in order to credibly detect a given effect size at a predetermined power?

Definition of power (common threshold is 80% and above)
A power of 80% tells us that, in 80% of the experiments of this sample size conducted in this population, if H0 is in fact false (i.e., treatment effect is not zero), we will be able to detect it.

Determinants of sample size given power
• How big an effect are we measuring? The larger the effect size, the smaller the sample size
• How noisy is the measure of outcome? The noisier the measure, the larger the sample size
• Do we have a baseline? Presence of a baseline (which is presumably correlated with subsequent outcomes) reduces the sample size
• Are individual responses correlated with each other? The more correlated the individual responses, the larger the sample size

Impact estimator: Experimental method
This is the difference in mean outcomes of those with project and those without project in a given sample.
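A minimal sketch of the two calculations described above: the difference-in-means impact estimator and an approximate sample size per arm. The function names and default values are assumptions chosen for illustration. The sample size formula is the usual normal approximation for a two-sided test, inflated by the design effect 1 + (m - 1) × ICC when responses are correlated within clusters of size m; it does not model the gain from having a baseline.

```python
from math import ceil, sqrt
from statistics import NormalDist, mean, variance


def difference_in_means(treated, control):
    """Impact estimate (mean with project minus mean without) and its standard error."""
    estimate = mean(treated) - mean(control)
    # Standard error allowing different variances in the two groups
    se = sqrt(variance(treated) / len(treated) + variance(control) / len(control))
    return estimate, se


def sample_size_per_arm(effect, sd, power=0.80, alpha=0.05, cluster_size=1, icc=0.0):
    """Approximate number of individuals per arm (normal approximation).

    effect: smallest difference in means worth detecting
    sd: standard deviation of the outcome (how noisy the measure is)
    cluster_size, icc: set these when randomizing clusters rather than individuals
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for a two-sided test
    z_power = z.inv_cdf(power)
    n_simple = 2 * ((z_alpha + z_power) * sd / effect) ** 2
    design_effect = 1 + (cluster_size - 1) * icc  # inflation from correlated responses
    return ceil(n_simple * design_effect)


# Larger effects need smaller samples; noisier or clustered outcomes need larger ones.
print(sample_size_per_arm(effect=0.2, sd=1.0))                             # 393
print(sample_size_per_arm(effect=0.4, sd=1.0))
print(sample_size_per_arm(effect=0.2, sd=1.0, cluster_size=20, icc=0.05))

estimate, se = difference_in_means([3, 4, 5], [1, 2, 3])
print(estimate, round(se, 3))
```

The toy data in the last two lines are made up purely to exercise the estimator.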
Conducting an RCT
• Has to be an ex-ante design
• Has to be politically feasible, with confidence that program managers will maintain integrity of the design
• Perform power calculation to determine sample size (and therefore cost)
• A, B and A+B designs (factorial designs) (and possibly no ‘untreated control’)

Ethics and RCT designs
• Rarely is an intervention universal from day one
  • If geographical coverage is incomplete, can exploit that fact to get a control
  • If rolled out over time, can use a roll-out design (pipeline or stepped wedge design)
  • Or can use an ‘encouragement design’
• Doesn’t stop you targeting: randomize across eligible population, not whole population
  • … but might use “raised threshold” randomization
• And randomization is more transparent
• What is really unethical is to do things that don’t work

Ethics and RCT designs: summary
• Simple RCT: individual or cluster
• Across pipeline
• Expand ‘potential’ geographic area
• Encouragement design
• Randomize around threshold (possibly with raised threshold)

Spillover effects
• Otherwise uncounted benefits (word of mouth knowledge transfer) or losses (water diversion)
• Program theory should allow identification of these effects
• Untreated in treated communities (bed nets, also consider herd effects): easily dealt with if you collect data
• Spillover to other communities (data collection implications; contagion)

Contagion (aka contamination)
• Comparison group are treated
  • By your own intervention: spillover or benign but ill-informed intervention errors
  • By other similar interventions (or interventions to affect the same outcomes)
• Make sure you know it’s happening: data collection is not just outcomes in the comparison group

Contagion: just deal with it
• Total contamination: different counterfactual or dose response model
• Partial: could drop contaminated observations, especially if matched pairs
• Give up

The comparison group location trade off
[Diagram: layout of treatment areas (T) and candidate comparison areas (C1, C2)]

Bottom lines
• You need skills on RCTs, and to encourage these designs where possible
• Think about how likely spillover and contagion are, and incorporate them in your design if necessary
• Buying in academic expertise will help… but beware of differing incentives

Quasi-experimental approaches
(advantage is that they can be ex post, but can also be ex ante)

Where o where art thou, baseline?
• Existing datasets
• Previous rates
• Monitoring data, but no comparison

Recreating baselines
• From existing data (e.g. 3ie working paper on Pakistan post-disaster)
• Using recall: be realistic

Matching methods

Quasi-experimental methods (construct a comparison group)
• Propensity score matching (PSM)
• Regression discontinuity design (RDD)
• ‘Intuitive matching’
• Regression-based
  • Instrumental variables: need to be well-motivated

Tips on ex post matching
• Always check and report quality of the match, and take seriously any mis-match (you can redefine matching method)
• Baseline data allowing double differencing will add credibility to your results
• All above points about spillovers and contagion apply
• All methods (including RCT) use a regression approach to ‘iron out’ remaining differences between treatment and comparison

Propensity score matching
• Need someone with all the same age, education, religion etc.
• But matching on a single number calculated as a weighted average of these characteristics gives the same result as matching individually on every characteristic: this is the basis of propensity score matching
• The weights are given by the ‘participation equation’, that is, a probit equation of whether a person participates in the project or not

PSM: What you need
• Can be based on ex post single difference, though double difference is better
• Need common survey for treatment and potential comparison, or survey with common sections for matching variables and outcomes

Impact of WS on child health in rural Philippines: Comparison groups
• All rural households with children younger than 5 years from the 1993, 1998, 2003 and 2008 rounds of the NDHS
• Some of these children had diarrhea during the two-week period prior to the interview

Treatment vs. control
• Children in households with piped water vs. children in households without piped water
• Children in households with their own flush toilets vs. children in households without their own flush toilets

Impact measure: Average treatment effect on the treated
ATT(X) = E(D1|T=1, p(X)) - E(D0|T=1, p(X)) = E(D1|T=1, p(X)) - E(D0|T=0, p(X))
where D1 = diarrhea outcome with treatment, D0 = diarrhea outcome without treatment, T = treatment indicator (=1 if with piped water or flush toilet, 0 if without), p(X) = propensity score defined over a vector of covariates X

PSM estimates: Impact of piped water and flush toilet in rural Philippines
Treatments: Piped water; Own flush toilet. Survey rounds: 1993, 1998, 2003, 2008. Each round reports ATT(X) and Std. errors for six matching algorithms. The treatment and round belonging to each block of values cannot be recovered from the flattened table, so the blocks are listed in their original order.

Matching algorithm: NN5 (0.001) | NN5 (0.01) | NN5 (0.02) | NN5 (0.03) | Kernel (0.03) | Kernel (0.05)

ATT(X):      -0.020c | -0.015 | -0.009 | -0.013 | -0.002 | -0.001
Std. errors: 0.014 | 0.013 | 0.013 | 0.013 | 0.012 | 0.012

ATT(X):      0.012 | 0.008 | 0.012 | 0.013 | 0.014 | 0.014
Std. errors: 0.015 | 0.015 | 0.015 | 0.015 | 0.013 | 0.013

ATT(X):      -0.032b | -0.014 | -0.012 | -0.015 | -0.010 | -0.005
Std. errors: 0.018 | 0.017 | 0.017 | 0.017 | 0.015 | 0.015

ATT(X):      -0.029b | -0.040a | -0.045a | -0.042a | -0.028b | -0.018b
Std. errors: 0.017 | 0.015 | 0.015 | 0.015 | 0.013 | 0.013

ATT(X):      -0.017 | -0.013 | -0.012 | -0.015 | -0.015 | -0.016
Std. errors: 0.016 | 0.014 | 0.014 | 0.014 | 0.013 | 0.013

ATT(X):      -0.010 | -0.003 | -0.001 | -0.005 | 0.002 | 0.002
Std. errors: 0.013 | 0.012 | 0.012 | 0.012 | 0.011 | 0.011

ATT(X):      -0.025c | -0.026b | -0.027b | -0.030b | -0.028b | -0.027b
Std. errors: 0.016 | 0.015 | 0.015 | 0.015 | 0.014 | 0.014

ATT(X):      -0.034b | -0.100a | -0.090a | -0.087a | -0.073a | -0.068a
Std. errors: 0.018 | 0.020 | 0.019 | 0.019 | 0.018 | 0.018

Notes: "NN5(...)" means nearest-5 neighbor matching with the caliper size in parentheses. a statistically significant at p<0.01. b statistically significant at p<0.05. c statistically significant at p<0.10.

Regression discontinuity: an example – agricultural input supply program

Naïve impact estimates
• Total = income(treatment) – income(comparison) = 9.6
• Agricultural h/h only = 7.7
• But there is a clear link between net income and land holdings
• And it turns out that the program targeted those households with at least 1.5 ha of land (you can see this in graph)
• So selection bias is a real issue, as the treatment group would have been better off in absence of program, so the single difference estimate is upward biased

Regression discontinuity
• Where there is a ‘threshold allocation rule’ for program participation, then we can estimate impact by comparing outcomes for those just above and below the threshold (as these groups are very similar)
• We can do that by estimating a regression with a dummy for the threshold value (and possibly also a slope dummy) – see graph
• In our case the impact estimate is 4.5, which is much less than that from the naïve estimates (less than half)

MANAGING IMPACT EVALUATIONS

When to do an impact evaluation
Different stuff
• Pilot programs
• Innovative programs
• New activity areas
Established stuff
• Representative programs
• Important programs
• Look to fill gaps

What do IE managers need to know?
• If an IE is needed and viable
• Your role as champion
• The importance of ex ante designs with baseline (building evaluation into design)
• Funding issues
• The importance of a credible design with a strong team (and how to recognize that)
• Help on design
• Ensure management feedback loops

Issues in managing IEs
• Different objective functions of managers and study teams
• Project management buy-in
• Trade-offs
  • On time
  • On richness of study design

Overview on data collection
• Baseline, midterm and endline
• Treatment and comparison
• Process data
• Capture contagion and spillovers
• Quant and qual
• Different levels (e.g. facility data, worker data) – link the data
• Multiple data sources

Data used in BINP study
• Project evaluation data (three rounds)
• Save the Children evaluation
• Helen Keller Nutritional Surveillance Survey
• DHS (one round)
• Project reports
• Anthropological studies of village life
• Action research (focus groups, CNP survey)

Piggybacking
• Use of existing survey
• Add
  • Oversample project areas
  • Additional module(s)
• Lead time is longer, not shorter
• But probably higher quality data and less effort in managing data collection

Some timelines
• Ex post: 12-18 months
• Ex ante: lead time for survey design 3-6 months
• Post-survey to first impact estimates: 6-9 months
• Report writing and consultation: 3-6 months

Budget and timeline
• Ex post or ex ante
• Existing data or new data
• How many rounds of data collection?
• How large is sample?
• When is it sensible to estimate impact?

Remember
• Results means impact
• But be selective
• Be issues-driven not methods driven
• Find best available method for evaluation questions at hand
• Randomization often is possible
• But do ask, is this sufficiently credible to be worth doing?

References
• P. Gertler et al. (2011). Impact evaluation in practice. World Bank, Washington, DC.
• H. White (2009). Theory-based impact evaluation: principles and practice. 3ie working paper 3. 3ie, New Delhi.
• H. White (2012). Quality impact evaluation: An introductory workshop. 3ie, New Delhi.

Thank you!