Lecture 01 of IB Databases Course

advertisement

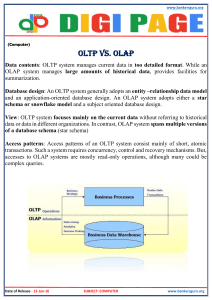

Lecture 1: Introduction to databases Timothy G. Griffin Easter Term 2008 – IB/Dip/IIG www.cl.cam.ac.uk/Teaching/current/Databases/ 1 Database Prehistory Data entry Query processing Storage and retrieval Sorting 2 Early Automation • Data management and application code were all tangled together – Hard to modify – Hard to generalize • Many competing approaches • Data manipulation code written at very low levels of abstraction 3 Our Hero --- E. F. Codd Edgar F. "Ted" Codd ( August 23, 1923 - April 18, 2003) was a British computer scientist who invented relational databases while working for IBM. He was born in Portland, Dorset, studied maths and chemistry at Oxford. He was a pilot in the Royal Air Force during WWII. In 1948 he joined IBM in New York as a mathematical programmer. He fled the USA to Canada during the McCarthy period. Later, he returned to the USA to earn a doctorate in CS from the University of Michigan in Ann Arbor. He then joined IBM research in San Jose. His 1970 paper “A Relational Model of Data for Large Shared Data Banks” changed everything. In the mid 1990’s he coined the term OLAP. 4 Database Management Systems (DBMSs) Your Applications Go Here DBMS Raw Resources (bare metal) Database abstractions allow this interface to be cleanly defined and this allows applications and data management systems to be implemented separately. 5 Today, Database Systems are Ubiquitous Database system design from the European Bioinformatics Institute (Hinxton UK) Service Tools Database design Submission tools Submitters Development DB Q/C etc Production DB End Users Other archives Service DB Add value (computation) Add value (review etc.) Releases Releases & & 6 Updates Updates What is a database system? • A database is a large, integrated collection of data • A database contains a model of something! • A database management system (DBMS) is a software system designed to store, manage and facilitate access to the database 7 What does a database system do? • Manages Very Large Amounts of Data • Supports efficient access to Very Large Amounts of Data • Supports concurrent access to Very Large Amounts of Data • Supports secure, atomic access to Very Large Amounts of Data 8 Databases are a Rich Area for Computer Science • Programming languages and software engineering (obviously) • Data structures and algorithms (obviously) • Logic, discrete maths, computation theory – Some of today’s most beautiful theoretical results are in “finite model theory” --- an area derived directly from database theory • Systems problems: concurrency, operating systems, file organisation, networks, distributed systems… Many of the concepts covered in this course are “classical” --- they form the heart of the subject. But the field of databases is still evolving and producing new and interesting research (hinted at in lectures 11 & 12). 9 What this course is about • According to Ullman, there are three aspects to studying databases: 1. Modelling and design of databases 2. Programming 3. DBMS implementation • This course addresses 1 and 2 10 Course Outline 1. 2. 3. 4. 5. 6. Introduction Entity-Relationship Model The Relational Model The Relational Algebra The Relational Calculus Schema refinement: Functional dependencies 7. Schema refinement: Normalisation 8. Transactions 9. Online Analytical Processing (OLAP) 10. More OLAP 11. Basic SQL and Integrity Constraints 12. Further relational algebra, further SQL 11 Recommended Reading • Date, “An introduction to database systems”, 8th ed. • Elmasri & Navathe, “Fundamentals of database systems”, 4th ed. • Silberschatz, Korth & Sudarshan, “Database system concepts”, 4th ed. • Ullman & Widom, “A first course in database systems”. • OLAP – DB2/400: Mastering Data Warehousing Functions. (IBM Redbook) Chapters 1 & 2 only. http://www.redbooks.ibm.com/abstracts/sg245184.html – Data Warehousing and OLAP Hector Garcia-Molina (Stanford University) http://www.cs.uh.edu/~ceick/6340/dw-olap.ppt – Data Warehousing and OLAP Technology for Data Mining Department of Computing London Metropolitan University http://learning.unl.ac.uk/csp002n/CSP002N_wk2.ppt 12 Some systems to play with 1. mysql: • www.mysql.org • Open source, quite powerful 2. PostgreSQL: • www.postgresql.org • Open source, powerful 3. Microsoft Access: • Simple system, lots of nice GUI wrappers 4. Commercial systems: • • • Oracle 10g (www.oracle.com) SQL Server 2000 (www.microsoft.com/sql) DB2 (www.ibm.com/db2) 13 Database system architecture • It is common to describe databases in two ways – The logical level: • What users see, the program or query language interface, … – The physical level: • How files are organised, what indexing mechanisms are used, … • It is traditional to split the logical level into two: overall database design (conceptual) and the views that various users get to see • A schema is a description of a database 14 Three-level architecture External Schema 1 Conceptual level Physical level External Schema 2 Conceptual Schema External … Schema n External level Internal Schema 15 Logical and physical data independence • Data independence is the ability to change the schema at one level of the database system without changing the schema at the next higher level • Logical data independence is the capacity to change the conceptual schema without changing the user views • Physical data independence is the capacity to change the internal schema without having to change the conceptual schema or user views 16 Database design process • Requirements analysis – User needs; what must database do? • Conceptual design Next Lecture – High-level description; often using E/R model • Logical design – Translate E/R model into (typically) relational schema • Schema refinement – Check schema for redundancies and anomalies • Physical design/tuning – Consider typical workloads, and further optimise 17 The Fundamental Tradeoff of Database Performance Tuning • De-normalized data can often result in faster query response • Normalized data leads to better transaction throughput, and avoids “update anomalies” (corruption of data integrity) Yes, indexing data can speed up transactions, but this just proves the point --- an index IS redundant data. General rule of thumb: indexing will slow down transactions! What is more important in your database --- query response or transaction throughput? The answer will vary. What do the extreme ends of the spectrum look like? 18 A Theme of this Course: OLTP vs. OLAP • OLTP = Online Transaction Processing – Need to support many concurrent transactions (updates and queries) – Normally associated with the “operational database” that supports day-to-day activities of an organization. • OLAP = Online Analytic Processing – Often based on data extracted from operational database, as well as other sources – Used in long-term analysis, business trends. 19 Design Heterogeneity De-normalized Derived Tables --- for fast access Database system design from the European Bioinformatics Institute (Hinxton UK) Other archives Database design Submission tools Submitters Development DB Q/C etc Production DB End Users Service Tools Normalized Tables Service DB Add value (computation) Add value (review etc.) Releases Releases & & 20 Updates Updates