Econ 299 Chapter 05

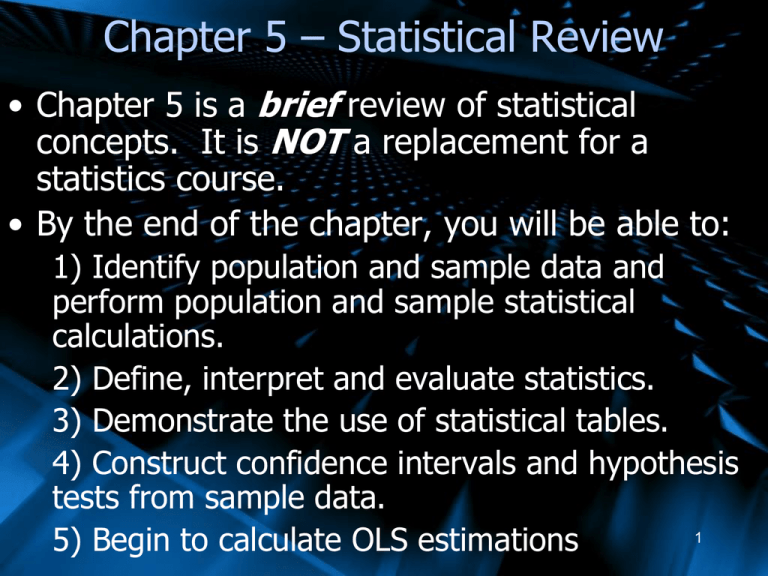

Chapter 5 – Statistical Review

• Chapter 5 is a brief review of statistical

concepts. It is NOT a replacement for a

statistics course.

• By the end of the chapter, you will be able to:

1) Identify population and sample data and

perform population and sample statistical

calculations.

2) Define, interpret and evaluate statistics.

3) Demonstrate the use of statistical tables.

4) Construct confidence intervals and hypothesis

tests from sample data.

5) Begin to calculate OLS estimations

5.1 Simple Economic Models

and Random Components

• Consider the linear economic model:

Yi = β1 + β2Xi + єi

• The variable Y is related to another variable X

– Utility is related to hours of TV watched

• Єi (or epsilon) represents error; everything

included in Y that is not explained by X

– Ie: Quality of TV show, Quality of Popcorn,

Other Facts of Life

2

5.1 Observed or Random Components

• Єi (or epsilon) is the RANDOM ERROR TERM; it

takes on values according to chance

• Since Yi depends on Єi, it is also random

• β1 + β2Xi is assumed to be fixed in most simple

models (which simplifies everything)

– Referred to as the deterministic part of the

model

– X, β1 and β2 are Non-Random

• β1 and β2 are unknown, and must be estimated

3

5.1 Example

• Consider the function:

Utilityi = β1 + β2Sistersi + єi

• Happiness depends on the number of sisters

• єi captures: number of brothers, income, and

other factors (ie: bad data collection and

shocks)

• Utility and Sisters are Observable

• Utility and єi are random

• β1 and β2 must be estimated (< or > 0?)

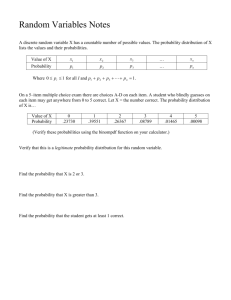

5.2 Random Variables and Probabilities

• Random Variable

–A variable whose value is determined by

the outcome of a chance experiment

• Ie: Sum of a dice roll, card taken out of a deck,

performance of a stock, oil discovered in a

province, gender of a new baby, etc.

• Some outcomes can be more likely than others

(ie: greater chance to discover oil in Alberta,

more likely to roll an 8 than a 5)

5

5.2 Random Variables

• Discrete Variable

–Can take on a finite # of values

–Ie: Dice roll, card picked

• Continuous Variable

–Can take on any value within a range

–Ie: Height, weight, time

• Variables are often assumed discrete to aid in

calculations and economic assumptions (ie:

Money in increments of 1 cent)

6

5.2 Probability Terminology

• Probabilities are assigned to the various

outcomes of random variables

Sample Space – set of all possible outcomes from

a random experiment

-ie S = {2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12}

-ie E = {Pass exam, Fail exam, Fail horribly}

Event – a subset of the sample space

-ie B = {3, 6, 9, 12} ⊂ S

-ie F = {Fail exam, Fail horribly} ⊂ E

7

5.2 Probability Terminology

Mutually Exclusive Events – cannot occur at the

same time

-rolling both a 3 and an 11; being both dead

and alive; having both a son and a daughter

(and only one child)

Exhaustive Events – cover all possible outcomes

-a dice roll (the sum of two dice) must lie within S = {2, ..., 12}

-a person is either married or not married

8

5.2 Quiz Example

Students do a 4-question quiz with each question

worth 2 marks (no part marks). Handing in the

quiz is worth 2 marks, and there is a 1 mark

bonus question.

Events:

-getting a zero (not handing in the quiz)

-getting at least 40% (at least 1 right)

-getting 100% or more (all right or all right plus

the bonus question)

-getting 110% (all right plus the bonus question)

*Events contain one or more

possible outcomes

9

5.2 Probability

Probability = the likelihood of an event

occurring (between 0 and 1)

P(a) = Prob(a) = probability that event a

will occur

P(Y=y) = probability that the random

variable Y will take on value y

P(ylow < Y < yhigh) = probability that the

random variable Y takes on any value

between ylow and yhigh

10

5.2 Probability Examples

P(true love) = probability that you will find

true love

P(Sleep=8 hours) = probability that the

random variable Sleep will take on the

value 8 hours

P($80 < Wedding Gift < $140) = probability

that the random variable Wedding Gift

takes on any value between $80 and $140

11

5.2 Probability Extremes

If Prob(a) = 0, the event will never occur

ie: Canada moves to Europe

ie: the price of cars drops below zero

ie: your instructor turns into a giant llama

If Prob(b) = 1, the event will always occur

ie: you will get a mark on your final exam

ie: you will either marry your true love or not

ie: the sun will rise tomorrow

5.2 Probability Rules

1) P(a) must be greater than or equal to 0 and

less than or equal to 1 : 0≤ P(a) ≤1

2) If any set of events (ie: {A,B,C}) are

exhaustive, then

P(A or B or C) = 1

ex) Prob. of winning, losing or tying

3) If any set of events (ie: {A,B,C}) are mutually

exclusive, then

P(A or B or C)=P(A)+P(B)+P(C)

ex) Prob. of marrying the person to the right or left

5.2 Probability Examples

1) P(coin flip = heads) = ½

2) P(2 coin flips = 2 heads) = ¼

3) Probability of tossing 6 heads in a row = 1/64

4) Probability of rolling less than 4 with one six-sided die = 3/6

5) Probability of throwing a 13 with 2 dice = 0

6) Probability of winning rock, paper, scissors = 1/3 (or 3/9)

7) Probability of being in love or not in love = 1

8) Probability of passing the course = ?

14

5.2.1 Probability Density Functions

•

The probability density function (pdf)

summarizes probabilities associated with

possible outcomes

Discrete Random Variables – pdf

f(y) = Prob (Y=y)

Σf(y) = 1

-(the sum of the probabilities of all possible

outcomes is one)

15

5.2.1 Dice Example

• The probabilities of rolling each sum with two six-sided dice

• Each sum has a different number of die combinations: 7 = {1+6, 2+5, 3+4, 4+3, 5+2, 6+1}

| y | f(y) |
|---|---|
| 2 | 1/36 |
| 3 | 2/36 |
| 4 | 3/36 |
| 5 | 4/36 |
| 6 | 5/36 |
| 7 | 6/36 |
| 8 | 5/36 |
| 9 | 4/36 |
| 10 | 3/36 |
| 11 | 2/36 |
| 12 | 1/36 |

Exercise: Construct a table with one 4-sided and one 8-sided die
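The two-dice pdf can also be built by counting die combinations. The sketch below is illustrative only: the function name `sum_pmf` is an assumption, not part of the course material, and it treats every ordered pair of faces as equally likely.

```python
from fractions import Fraction
from itertools import product

def sum_pmf(sides_a, sides_b):
    """Return {sum: probability} for the sum of two fair dice."""
    pmf = {}
    p = Fraction(1, sides_a * sides_b)  # each ordered pair of faces is equally likely
    for a, b in product(range(1, sides_a + 1), range(1, sides_b + 1)):
        pmf[a + b] = pmf.get(a + b, 0) + p
    return pmf

two_d6 = sum_pmf(6, 6)
for y in sorted(two_d6):
    # Fractions print in lowest terms, so 2/36 appears as 1/18
    print(y, two_d6[y])
```

The same function accepts other die sizes, which is one way to check a hand-built table.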

5.2.1 Probability Density Functions

Continuous Random Variables – pdf

f(y) = pdf for continuous random variable Y

∫f(y)dy = 1 (sum/integral of all probabilities of

all possibilities is one)

-probabilities are measured as areas under the

pdf, which must be non-negative

-technically, the probability of any ONE exact value is zero

17

5.2.1 Continuous Headache

Continuous Random Variables – pdf

f(y) = 0.2 for 2<y<7

= 0 for y<2 or y>7

$$P(3 \le Y \le 7) = \int_3^7 0.2\,dy = \left[0.2y\right]_{y=3}^{7} = 0.2(7) - 0.2(3) = 0.8$$

Continuous probabilities are the area under the pdf curve.

[Figure: plot of f(y) against Y, with f(y) = 0.2 and Y = 3, 7 labelled]
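The area under this pdf can be checked numerically. This is a minimal sketch, assuming a simple midpoint Riemann sum is accurate enough here; the helper name `integrate` and the step count are illustrative choices.

```python
def pdf(y):
    """Uniform density from the slide: 0.2 on (2, 7), zero elsewhere."""
    return 0.2 if 2 < y < 7 else 0.0

def integrate(f, lo, hi, steps=10_000):
    """Midpoint Riemann sum of f over [lo, hi]."""
    width = (hi - lo) / steps
    return sum(f(lo + (i + 0.5) * width) for i in range(steps)) * width

prob_3_to_7 = integrate(pdf, 3, 7)   # area between 3 and 7
total_area = integrate(pdf, 0, 10)   # whole pdf should integrate to one
print(round(prob_3_to_7, 4), round(total_area, 4))
```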

5.3 Expected Values

Expected Value – measure of central tendency;

center of the distribution; population mean

-If the variable is collected an infinite number of

times, what average/mean would we expect?

Discrete Variable:

μY=E(Y) = Σyf(y)

Continuous Variable:

μ(Y)=E(Y) = ∫yf(y)dy

19

5.3 Expected Example

What is the expected value of the sum of two six-sided dice?

E(Y) = Σyf(y)

=2(1/36)+3(2/36)+…+11(2/36)+12(1/36)

=7
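The same sum can be computed directly from the pdf. The closed form f(y) = (6 - |y - 7|)/36 used below is an assumption made for compactness; it reproduces the two-dice table from 5.2.1.

```python
from fractions import Fraction

# pdf of the sum of two fair six-sided dice: f(y) = (6 - |y - 7|) / 36
pmf = {y: Fraction(6 - abs(y - 7), 36) for y in range(2, 13)}

def expected_value(pmf):
    """E(Y) = sum of y * f(y) over all outcomes."""
    return sum(y * p for y, p in pmf.items())

mean_two_dice = expected_value(pmf)
print(mean_two_dice)
```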

Exercise: What is the expected value of rolling a

4-sided and an 8-sided die? A 6-sided and a 10-sided die?

20

5.3 Expected Application – Pascal’s

Wager

Pascal’s Wager: the philosopher, mathematician, and physicist Blaise Pascal (1623-62) argued that belief in God could be justified through expected value:

-If you live as if God exists, you get huge rewards if you are right, and waste some time and effort if you’re wrong

-If you live as if God does not exist, you save some time and effort if you’re right, and suffer huge penalties if you’re wrong

5.3 Expected Application – Pascal’s

Wager

Mathematically:

E(belief) = Σutility * f(utility)

=(Utility if God exists)*p(God exists)+

+(Utility if no God)*p(no God)

=1,000,000(0.01)+(50)(0.99)

=10,000+49.5

=10,049.5

22

5.3 Expected Application – Pascal’s

Wager

Mathematically:

E(no belief)= Σutility * f(utility)

=(Utility if God exists)*p(God exists)+

+(Utility if no God)*p(no God)

=-1,000,000(0.01)+(150)(0.99)

=-10,000+148.5

=-9,851.5

Since -9,851.5 is less than 10,049.5, Pascal

argued that belief in God is rational.
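Both expected values follow the same pattern, so they can be sketched with one helper. The utilities and the 1% probability are the illustrative numbers from the slides; the function name `expected_utility` is an assumption.

```python
def expected_utility(outcomes):
    """outcomes: list of (utility, probability) pairs whose probabilities sum to 1."""
    return sum(u * p for u, p in outcomes)

p_god = 0.01  # probability used on the slides
e_belief = expected_utility([(1_000_000, p_god), (50, 1 - p_god)])
e_no_belief = expected_utility([(-1_000_000, p_god), (150, 1 - p_god)])
print(round(e_belief, 2), round(e_no_belief, 2))
```

Changing `p_god` or the utilities shows how sensitive the comparison is to the assumed numbers.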

23

5.3.1 Properties of Expected Values

a) Constant Property

E(a) = a if a is a constant or non-random variable

Ie: E(14)=14

Ie: E(β1+ β2Xi) = β1+ β2Xi

b) Constants and random variables

E(a+bW) = a+bE(W)

If a and b are non-random and W is random

24

5.3.1 Properties of Expected Values

Applications:

If E(єi) =0, then

E(Yi) = E(β1 + β2Xi + єi)

= β1 + β2Xi + E(єi)

= β1 + β2Xi

E(6sided+10sided)

=E (6-sided) + E (10 sided)

= 3.5 + 5.5

=9

25

5.3.1 Properties of Expected Values

c) “Not so Fast” Property

E(WV) ≠ E(W)E(V)

E(W/V) ≠ E(W)/E(V)

d) Non-Linear Functions

E(W^k) = Σ w^k f(w)

E[(sum of two six-sided dice)²]

=2²(1/36)+3²(2/36)+

…+11²(2/36)+12²(1/36)

=54.83

26

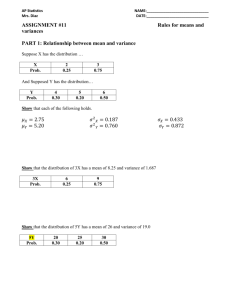

5.4 Variance

Consider the following 3 midterm distributions:

1) Average = 70%; everyone in the class

received 70%

2) Average = 70%; half the class received

50% and half received 90%

3) Average = 70%; most of the class was in

the 70’s, with a few 100’s and a few 40’s

who got a Bachelor in Pottery

27

5.4 Variance

Although these midterm results share the

same average, their distributions differ

greatly.

While the first results are clustered together,

the other two results are quite dispersed

Variance – a measure of dispersion (how far a

distribution is spread out) for a random

variable

28

5.4 Variance Formula

σY² = Var(Y) = E[(Y-E(Y))²]

= E(Y²) – [E(Y)]²

Discrete Random Variable:

σY² = Var(Y) = Σ(y-E(Y))² f(y)

Continuous Random Variable:

σY² = Var(Y) = ∫(y-E(Y))² f(y)dy

29

5.4 Variances

Example 1:

E(Y)=70

Yi =70 for all i

Var(Y)

= Σ(y-E(Y))² f(y)

= (70-70)² (1)

= (0)(1)

=0

If all outcomes are the same, there is no

variance.

30

5.4 Variances

Example 2:

E(Y)=70

f(50)=0.5, f(90)=0.5

Var(Y)

= Σ(y-E(Y))² f(y)

= (50-70)²(0.5)+ (90-70)²(0.5)

=200+200

=400

31

5.4 Variances

Example 3:

E(Y)=70

f(40)=1/5, f(70)=3/5, f(100)=1/5

Var(Y)

= Σ(y-E(Y))² f(y)

= (40-70)²(1/5)+ (70-70)²(3/5)+

(100-70)²(1/5)

=900/5+0+900/5

=1800/5

=360

32

5.4 Standard Deviation

While Variance is a good tool for measuring

dispersion, it is difficult to represent

graphically (ie: Bell Curve)

Standard Deviation is more useful for a visual

view of dispersion

Standard Deviation = Variance^(1/2)

sd(W) = [var(W)]^(1/2)

σ = (σ²)^(1/2)

33

5.4 SD Examples

In our first example, σ = 0^(1/2) = 0

No dispersion exists

In our second example, σ = 400^(1/2) = 20

In our third example, σ = 360^(1/2) ≈ 19.0

Results were most dispersed in the second example.
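The three midterm distributions can be compared in a few lines. This is a sketch only: the dictionary layout and the helper names `mean` and `variance` are assumptions chosen to mirror the formulas Var(Y) = Σ(y-E(Y))² f(y) and sd = Var^(1/2).

```python
from math import sqrt

def mean(pmf):
    return sum(y * p for y, p in pmf.items())

def variance(pmf):
    mu = mean(pmf)
    return sum((y - mu) ** 2 * p for y, p in pmf.items())

# {mark: probability} for each of the three midterm examples
midterms = {
    "example 1": {70: 1.0},
    "example 2": {50: 0.5, 90: 0.5},
    "example 3": {40: 0.2, 70: 0.6, 100: 0.2},
}
results = {name: (variance(pmf), sqrt(variance(pmf))) for name, pmf in midterms.items()}
for name, (var, sd) in results.items():
    print(name, round(var, 2), round(sd, 2))
```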

5.4.1 Properties of Variance

a) Constant Property

Var(a) = 0 if a is a constant or non-random

variable

Ie: Var(14)=0

Ie: Var(β1+ β2Xi) = 0

b) Constants and random variables

Var(a+bW) = b² Var(W)

If a and b are non-random and W is random

5.4.1 Properties of Variance

Applications:

If Var(єi) =k, then

Var(Yi)

= Var(β1 + β2Xi + єi)

= 0 + Var(єi)

=k

Exercise: Calculate the variance from:

a) A coin flip

b) A 4-sided die roll

c) Both a and b, where the coin flip represents 0 or 1.

36

5.4.1 Properties of Variance

c) Covariance Property

If W and V are random variables, and a, b, and c

are non-random, then

Var(a+bW+cV)= Var(bW+cV)

= b² Var(W) + c² Var(V)

+ 2bcCov(W,V)

Where Covariance will be examined in 5.6

37

5.4.1 Properties of Variance

Application:

If Var(Cost of Gas) = 10 (in cents²)

And Var(Cost of a Slurpee) = 5 (in cents²)

And Cov(Cost of Gas, Cost of Slurpee) = -1 (in cents²)

Var(Cost of Gas+Cost of 2 Slurpees)

= b² Var(G) + c² Var(Sl) + 2bcCov(G,Sl), with b = 1 and c = 2

= 1²(10) + 2²(5) + 2(1)(2)(-1)

= 10+20-4

= 26 (in cents²)

38

5.5 Joint Probability Density Functions

Sometimes we are interested in the isolated

occurrence or effects of one variable. In this

case, a simple pdf is appropriate.

Often we are interested in more than one

variable or effect. In this case it is useful to use:

Joint Probability Density Functions

Conditional Probability Density Functions

39

5.5 Joint Probability Density Functions

Joint Probability Density Function – summarizes the probabilities associated

with the outcomes of pairs of random variables

f(w,z) = Prob(W=w and Z=z)

∑ f(w,z) = 1

Similar statements are valid for continuous

random variables.

40

5.5 Joint PDF and You

Love and Econ Example:

On Valentine’s Day, Jonny both wrote an Econ

299 midterm and sent a dozen roses to his love

interest.

He can either pass or fail the midterm, and his

beloved can either embrace or spurn him.

E = {Pass, Fail}; L = {Embrace, Spurn}

41

5.5 Joint PDF and You

Love and Econ Example:

Joint pdf’s are expressed as follows:

P(pass and embrace) = 0.32

P(pass and spurn) = 0.08

P(fail and embrace) = 0.48

P(fail and spurn) = 0.12

(Notice that:

∑f(E,L) = 0.32+0.08+0.48+0.12 = 1)

42

5.5 Joint and Marginal Pdf’s

Marginal (individual) pdf’s can be determined

from joint pdf’s. Simply add all of the joint

probabilities containing the desired outcome of

one of the variables.

Ie: f(Y=7)=∑f(Y=7,Z=zi)

Probability that Y=7 = sum of ALL joint

probabilities where Y=7

43

5.5 Love and Economics

f(pass) = f(pass and embrace)+f(pass and spurn)

= 0.32 + 0.08

= 0.40

f(fail) = f(fail and embrace)+f(fail and spurn)

= 0.48 + 0.12

= 0.60

Exercise: Find f(embrace) and f(spurn)

44

5.5 Love and Economics

Notice:

Since passing or failing are exhaustive outcomes,

Prob (pass or fail) = 1

Also, since they are mutually exclusive,

Prob (pass or fail)

= Prob (pass) + Prob (fail)

= 0.4 + 0.6

=1

5.5 Conditional Probability

Density Functions

Conditional Probability Density Function – summarizes the probabilities associated

with the possible outcomes of one random

variable conditional on the occurrence of a

specific value of another random variable

Conditional pdf = joint pdf/marginal pdf

Or

Prob(a|b) = Prob(a&b) / Prob(b)

(Probability of “a” GIVEN “b”)

5.5 Conditional Love and Economics

From our previous example:

Prob(pass|embrace) = Prob(pass and embrace)/

Prob (embrace)

= 0.32/0.80

= 0.4

Prob(fail|embrace) = Prob(fail and embrace)/

Prob (embrace)

= 0.48/0.80

= 0.6

47

5.5 Conditional Love and Economics

From our previous example:

Prob(spurn|pass) = Prob(pass and spurn)/

Prob (pass)

= 0.08/0.40

= 0.2

Prob(spurn|fail)

= Prob(fail and spurn)/

Prob (fail)

= 0.12/0.60

= 0.2
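The marginal and conditional calculations above follow mechanically from the joint pdf. A minimal sketch, assuming the joint pdf is stored as a dictionary keyed by (exam, love) outcomes; the helper names are illustrative.

```python
# Joint pdf from the Love and Econ example: keys are (exam, love) outcomes.
joint = {
    ("pass", "embrace"): 0.32, ("pass", "spurn"): 0.08,
    ("fail", "embrace"): 0.48, ("fail", "spurn"): 0.12,
}

def marginal(joint, index):
    """Sum joint probabilities over the other variable (index 0 = exam, 1 = love)."""
    out = {}
    for outcome, p in joint.items():
        out[outcome[index]] = out.get(outcome[index], 0.0) + p
    return out

def conditional(joint, exam, love, given):
    """P(exam | love) when given == "love"; P(love | exam) when given == "exam"."""
    denom = marginal(joint, 1)[love] if given == "love" else marginal(joint, 0)[exam]
    return joint[(exam, love)] / denom

p_exam = marginal(joint, 0)
p_pass_given_embrace = conditional(joint, "pass", "embrace", given="love")
p_spurn_given_pass = conditional(joint, "pass", "spurn", given="exam")
print(p_exam, round(p_pass_given_embrace, 2), round(p_spurn_given_pass, 2))
```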

48

5.5 Conditional Love and Economics

Exercise: Calculate the other conditional pdf’s:

Prob(pass|spurn)

Prob(fail|spurn)

Prob(embrace|pass)

Prob(embrace|fail)

49

5.5 Statistical Independence

If two random variables (W and V) are

statistically independent (one’s outcome

doesn’t affect the other at all), then

f(w,v)=f(w)f(v)

And:

1) f(w)=f(w|any v)

2) f(v)=f(v|any w)

As seen in the Love and Economics example.

5.5 Statistically Dependent Example

Bob can either watch Game of Thrones or

Yodeling with the Stars: W={T, Y}. He can

either be happy or sad V={H,S}. Joint pdf’s are

as follows:

Prob(Thrones and Happy) = 0.7

Prob(Thrones and Sad)=0.05

Prob(Yodeling and Happy)=0.10

Prob(Yodeling and Sad)=0.15

51

5.5 Statistically Dependent Example

Calculate Marginal pdf’s:

Prob(H)=Prob(T and H) + Prob(Y and H)

=0.7+0.10

=0.8

Prob(S)=Prob(T and S) + Prob(Y and S)

=0.05+0.15

=0.2

Prob(H)+Prob(S)=1

52

5.5 Statistically Dependent Example

Calculate Marginal pdf’s:

Prob(T)=Prob(T and H) + Prob(T and S)

=0.7+0.05

=0.75

Prob(Y)

=Prob(Y and H) + Prob(Y and S)

=0.10+0.15

=0.25

Prob(T)+Prob(Y)=1

53

5.5 Statistically Dependent Example

Calculate Conditional pdf’s:

Prob(H|T)=Prob(T and H)/Prob(T)

=0.7/0.75

=0.93

Prob(H|Y)=Prob(Y and H)/Prob(Y)

=0.10/0.25

=0.4

Exercise: Calculate the other conditional pdf’s

5.5 Statistically Depressant Example

Notice that since these two variables are NOT

statistically independent – Game of Thrones is

utility enhancing – our above property does

not hold.

P(Happy) ≠ P(Happy given Thrones)

0.8 ≠ 0.93

P(Sad) ≠ P(Sad given Yodeling)

0.2 ≠ 0.6 (1-0.4)
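Statistical independence can be checked by testing f(w,v) = f(w)f(v) for every pair of outcomes. The sketch below applies that test to both examples; the function name `is_independent` and the tolerance are assumptions for illustration.

```python
from itertools import product

def is_independent(joint, tol=1e-9):
    """True if f(w, v) equals f(w) * f(v) for every pair of outcomes."""
    ws = {w for w, _ in joint}
    vs = {v for _, v in joint}
    f_w = {w: sum(joint[(w, v)] for v in vs) for w in ws}  # marginal pdf of W
    f_v = {v: sum(joint[(w, v)] for w in ws) for v in vs}  # marginal pdf of V
    return all(abs(joint[(w, v)] - f_w[w] * f_v[v]) < tol for w, v in product(ws, vs))

love_econ = {("pass", "embrace"): 0.32, ("pass", "spurn"): 0.08,
             ("fail", "embrace"): 0.48, ("fail", "spurn"): 0.12}
tv_mood = {("T", "H"): 0.70, ("T", "S"): 0.05,
           ("Y", "H"): 0.10, ("Y", "S"): 0.15}
print(is_independent(love_econ), is_independent(tv_mood))
```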

55

5.5 Conditional Expectations and Variance

Assuming that our variables take numerical

values (or can be interpreted numerically),

conditional expectations and variances can be

taken:

E(P|Q=500)=Σpf(p|Q=500)

Var(P|Q=500)=Σ[p-E(P|Q=500)]² f(p|Q=500)

Ie) money spent on a car and resulting utility (both random variables expressed numerically).

5.5 Conditional Expectations and Variance

Example: A consumer can spend $5000 or

$10,000 on a car, yielding utility of 10 or 20. The

conditional probabilities are :

f(10|$5,000)=0.7

f(20|$5,000)=0.3

E(U|P=$5000) =ΣUf(U|P=$5000)

=10(0.7) +20(0.3) =13

Var(U|P=$5K) =Σ[U-E(U|P=$5K)]² f(U|P=$5K)

=(10-13)²(0.7)+(20-13)²(0.3)

= 21
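Conditional moments use the ordinary mean and variance formulas, applied to the conditional pdf. A short sketch with the car example's numbers; the helper name `conditional_moments` is an assumption.

```python
def conditional_moments(cond_pmf):
    """Mean and variance of a conditional pdf given as {value: probability}."""
    mean = sum(u * p for u, p in cond_pmf.items())
    var = sum((u - mean) ** 2 * p for u, p in cond_pmf.items())
    return mean, var

utility_given_5000 = {10: 0.7, 20: 0.3}  # f(U | P = $5,000) from the slide
cond_mean, cond_var = conditional_moments(utility_given_5000)
print(round(cond_mean, 2), round(cond_var, 2))
```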

5.6 Covariance and Correlation

If two random variables are NOT statistically

independent, it is important to measure the

amount of their interconnectedness.

Covariance and Correlation are useful for this.

Covariance and Correlation are also useful in

model testing, as you will learn in Econ 399.

58

5.6 Covariance

Covariance – a measure of the degree of linear

dependence between two random variables. A

positive covariance indicates some degree of

positive linear association between the two

variables (the opposite likewise applies)

Cov(V,W)=E{[W-E(W)][V-E(V)]}

59

5.6 Discrete and Continuous

Covariance

Discrete Random Variable:

$$\mathrm{Cov}(V,W) = \sum_v \sum_w (v - E(V))(w - E(W))\, f(v,w)$$

Continuous Random Variable:

$$\mathrm{Cov}(V,W) = \int_v \int_w (v - E(V))(w - E(W))\, f(v,w)\, dw\, dv$$

60

5.6 Covariance Example

Joe can buy either a burger ($2) or ice cream

($1) and experience utility of 1 or zero.

C={$1, $2}, U={0,1}

Prob($1 and 0)=0.2

Prob($1 and 1)=0.6

Prob($2 and 0)=0.1

Prob($2 and 1)=0.1

61

5.6 Covariance Example

Prob($1 and 0)=0.2

Prob($1 and 1)=0.6

Prob($2 and 0)=0.1

Prob($2 and 1)=0.1

Prob($1)=0.2+0.6=0.8

Prob($2)=0.1+0.1=0.2

Prob(0)=0.2+0.1=0.3

Prob(1)=0.6+0.1=0.7

62

5.6 Covariance Example

E(C) =∑cf(c)

=$1(0.8)+$2(0.2)

=$1.20

E(U) = ∑uf(u)

= 0(0.3)+1(0.7)

=0.7

63

5.6 Covariance Example

E(C) =$1.20

E(U) =0.7

Cov(C,U)=∑∑(c-E(C))(u-E(U))f(c,u)

=(1-1.20)(0-0.7)(0.2)

+(1-1.20)(1-0.7)(0.6)+(2-1.20)(0-0.7)(0.1)

+(2-1.20)(1-0.7)(0.1)

=(-0.2)(-0.7)(0.2)+(-0.2)(0.3)(0.6)

+(0.8)(-0.7)(0.1)+(0.8)(0.3)(0.1)

=0.028-0.036-0.056+0.024

=-0.04 (Negative Relationship)

64

5.6 Correlation

Covariance is an unbounded measure of

interdependence between two variables.

Often, it is useful to obtain a BOUNDED measure

of interdependence between two variables, as

this opens the door for comparison.

Correlation is such a bounded measure, as it lies

between -1 and 1.

65

5.6 Correlation

Correlation Formulas:

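$$\rho_{WV} = \mathrm{Corr}(W,V) = \mathrm{Corr}(V,W) = \frac{\mathrm{Cov}(V,W)}{\sigma_W \sigma_V} = \frac{\sum_v \sum_w (v - E(V))(w - E(W))\, f(v,w)}{\sqrt{\mathrm{Var}(V)\,\mathrm{Var}(W)}}$$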

66

5.6 Correlation Example

From the Data above:

Var(C)

=∑ (c-E(C))² f(c)

=(1-1.20)²(0.8)+(2-1.20)²(0.2)

=0.032 + 0.128

=0.16

Var(U) =∑ (u-E(U))² f(u)

=(0-0.7)²(0.3)+(1-0.7)²(0.7)

=0.147 + 0.063

=0.21

67

5.6 Correlation Example

From the Data above:

Corr(C,U) =Cov(C,U)/[sd(C)sd(U)]

=-0.04 / [0.16(0.21)]^(1/2)

=-0.218

Still represents a negative relationship.
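The whole burger and ice cream calculation can be reproduced from the joint pdf alone, which is a useful check on the hand arithmetic. A minimal sketch; the helper `expect`, which computes E[g(C,U)] under the joint pdf, is an illustrative assumption.

```python
from math import sqrt

# Joint pdf from the burger / ice cream example: keys are (cost, utility).
joint = {(1, 0): 0.2, (1, 1): 0.6, (2, 0): 0.1, (2, 1): 0.1}

def expect(g):
    """E[g(C, U)] under the joint pdf."""
    return sum(g(c, u) * p for (c, u), p in joint.items())

mean_c, mean_u = expect(lambda c, u: c), expect(lambda c, u: u)
var_c = expect(lambda c, u: (c - mean_c) ** 2)
var_u = expect(lambda c, u: (u - mean_u) ** 2)
cov_cu = expect(lambda c, u: (c - mean_c) * (u - mean_u))
corr_cu = cov_cu / sqrt(var_c * var_u)   # always between -1 and 1
print(round(cov_cu, 3), round(corr_cu, 3))
```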

68

5.6 Graphical Correlation

If Correlation = 1, observations of the two variables lie

upon an upward sloping line

If Correlation = -1, observations of the two variables lie

on a downward sloping line

If Correlation is between 0 and 1, observations of the

two variables will be scattered along an upward sloping

line.

If Correlation is between 0 and -1, observations of the

two variables will be scattered along a downward

sloping line.

5.6 Correlation, Covariance and

Independence

Covariance, correlation and independence have

the following relationship:

If two random variables are independent, their

covariance (correlation) is zero.

INDEPENDENCE => ZERO COVARIANCE

If two variables have zero covariance

(correlation), they may or may not be

independent.

ZERO COVARIANCE ≠> INDEPENDENCE

70

5.6 Correlation, Covariance and

Independence

INDEPENDENCE => ZERO COVARIANCE

ZERO COVARIANCE ≠> INDEPENDENCE

From these relationships, we know that

Non-zero Covariance => Dependence

but

Dependence ≠> Non-zero Covariance

71

5.7 POPULATION VS. SAMPLE DATA

Population Data – Full information on the ENTIRE

population.

-Includes population probability (pdf)

-Uses the previous formulas

-ex) data on an ENTIRE class

Sample Data

– Partial information from a RANDOM

SAMPLE (smaller selection) of the population

-Individual data points (no pdf)

-Uses the following formulas

-ex) Study of 2,000 random students

72

5.7 Estimators

Population Expected Value:

μ = E(Y) = Σ y f(y)

Sample Mean:

$$\bar{Y} = \frac{\sum Y_i}{N}$$

Note: From this point on, $\bar{Y}$ may be expressed as Ybar (or any other variable - ie: Xbar). For example, no equation editor is available via email, so answers may be in this format.

5.7 Estimators

Population Variance:

σY² = Var(Y) = Σ [y-E(Y)]² f(y)

Sample Variance:

$$S_Y^2 = \frac{\sum (Y_i - \bar{Y})^2}{N-1}$$

74

5.7 Estimators

Population Standard Deviation:

σY = (σY²)^(1/2)

Sample Standard Deviation:

Sy = (Sy²)^(1/2)

75

5.7 Estimators

Population Covariance:

Cov(V,W)=∑∑(v-E(v))(w-E(w))f(v,w)

Sample Covariance:

$$\mathrm{Cov}(V,W) = \frac{\sum (V_i - \bar{V})(W_i - \bar{W})}{N-1}$$

76

5.7 Estimators

Population Correlation:

ρvw = corr(V,W) = Cov(V,W)/(σv σw)

Sample Correlation:

rvw = corr(V,W) = Cov(V,W)/(Sv Sw)

77

5.7 Estimators

Population Regression Function:

Yi = β1 + β2Xi + єi

Estimated Regression Function:

$$\hat{Y}_i = \hat{\beta}_1 + \hat{\beta}_2 X_i$$

78

5.7 Estimators

OLS Estimation:

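$$\hat{\beta}_2 = \frac{\sum (X_i - \bar{X})(Y_i - \bar{Y})}{\sum (X_i - \bar{X})^2} = \frac{\mathrm{Cov}(X,Y)}{S_X^2}$$

$$\hat{\beta}_1 = \bar{Y} - \hat{\beta}_2 \bar{X}$$

Note: $\hat{\beta}_2$ may be expressed as B2hat (or b2hat)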

79

5.7 Estimators Example

Given the data set:

| Price | Quantity |
|---|---|
| 4 | 10 |
| 3 | 15 |
| 3 | 20 |
| 6 | 15 |

Find sample means, variance, covariance, correlation,

and ols estimation

80

5.7 Estimators Example

Price: 4, 3, 3, 6

Quantity: 10, 15, 20, 15

Sample Means:

Pbar = (4+3+3+6)/4 = 4

Qbar = (10+15+20+15)/4 = 15

81

5.7 Estimators Example

Price: 4, 3, 3, 6 (Pbar = 4)

Quantity: 10, 15, 20, 15 (Qbar = 15)

Sample Variance:

Sp² = [(4-4)²+(3-4)²+(3-4)²+(6-4)²]/(N-1)

=(0+1+1+4)/3

=2

Sq² = [(10-15)²+(15-15)²+(20-15)²+(15-15)²]/(N-1)

=(25+0+25+0)/3

=50/3

82

5.7 Estimators Example

Price: 4, 3, 3, 6 (Pbar = 4)

Quantity: 10, 15, 20, 15 (Qbar = 15)

Sample Covariance:

Cov(p,q)= [(4-4)(10-15)+(3-4)(15-15)

+(3-4)(20-15)+(6-4)(15-15)]/(N-1)

=[ 0 + 0 -5 +0] /3

= -5/3

83

5.7 Estimators Example

Price: 4, 3, 3, 6 (Pbar = 4)

Quantity: 10, 15, 20, 15 (Qbar = 15)

Sample Correlation

Corr(p,q) = Cov(p,q)/(Sp Sq)

= (-5/3) / [2(50/3)]^(1/2)

= (-5/3) / (10/3^(1/2))

= -0.2887

84

5.7 Estimators Example

Price: 4, 3, 3, 6 (Pbar = 4)

Quantity: 10, 15, 20, 15 (Qbar = 15)

OLS Estimation

B2hat = ∑(Xi-Xbar)(Yi-Ybar) / ∑(Xi-Xbar)²

= [(4-4)(10-15)+(3-4)(15-15)+(3-4)(20-15)+(6-4)(15-15)] / [(4-4)²+(3-4)²+(3-4)²+(6-4)²]

= -5/6

85

5.7 Estimators Example

Price: 4, 3, 3, 6 (Pbar = 4)

Quantity: 10, 15, 20, 15 (Qbar = 15)

OLS Estimation

B1hat = Ybar – (B2hat)(Xbar)

= 15 - (-5/6)(4)

= 90/6 + 20/6

= 110/6

$$\hat{Y}_i = \frac{110}{6} - \frac{5}{6} X_i$$

$$\hat{Q}_i = \frac{110}{6} - \frac{5}{6} P_i$$
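The full estimator example can be reproduced in a few lines, which is handy for checking hand calculations. This is a sketch under the slide formulas (N - 1 divisor, slope = Cov(X,Y)/S_X²); the function names are illustrative assumptions.

```python
from math import sqrt

price = [4, 3, 3, 6]
quantity = [10, 15, 20, 15]

def sample_mean(xs):
    return sum(xs) / len(xs)

def sample_cov(xs, ys):
    """Sample covariance with the N - 1 divisor; sample_cov(xs, xs) is the sample variance."""
    x_bar, y_bar = sample_mean(xs), sample_mean(ys)
    return sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / (len(xs) - 1)

var_p, var_q = sample_cov(price, price), sample_cov(quantity, quantity)
cov_pq = sample_cov(price, quantity)
corr_pq = cov_pq / sqrt(var_p * var_q)
b2_hat = cov_pq / var_p                                        # OLS slope
b1_hat = sample_mean(quantity) - b2_hat * sample_mean(price)   # OLS intercept
print(var_p, var_q, cov_pq, round(corr_pq, 4), b2_hat, b1_hat)
```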

86

5.7.1 Estimators as random variables

Each of these estimators will give us a result based upon

the data available.

Therefore, two different data sets can yield two

different point estimates.

Therefore the value of the point estimate can be seen as

being the result of a chance experiment – obtaining a

data set.

Therefore each point estimate is a random variable,

with a probability distribution that can be analyzed using

the expectation and variance operator.

87

5.7.1 Estimators Distribution

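Since the sample mean is a random variable, we can easily apply expectation and summation rules to find the expected value of the sample mean:

$$\bar{Y} = \frac{\sum Y_i}{N}$$

$$E(\bar{Y}) = E\left(\frac{\sum Y_i}{N}\right) = \frac{1}{N} E\left(\sum Y_i\right)$$

$$E(\bar{Y}) = \frac{1}{N} \sum E(Y_i) = \frac{1}{N} \sum \mu_Y$$

$$E(\bar{Y}) = \frac{1}{N} N \mu_Y = \mu_Y$$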

88

5.7.1 Estimators Distribution

If we make the simplifying assumption that there is no

covariance between data points (ie: one person’s

consumption is unaffected by the next person’s

consumption), we can easily calculate variance for the

sample mean:

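$$\mathrm{Var}(\bar{Y}) = \mathrm{Var}\left(\frac{\sum Y_i}{N}\right) = \frac{1}{N^2} \mathrm{Var}\left(\sum Y_i\right)$$

$$\mathrm{Var}(\bar{Y}) = \frac{1}{N^2} \sum \mathrm{Var}(Y_i) = \frac{1}{N^2} \sum \sigma_Y^2$$

$$\mathrm{Var}(\bar{Y}) = \frac{1}{N^2} N \sigma_Y^2 = \frac{\sigma_Y^2}{N}$$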

89

5.7.1 Estimators Distribution

Although we can’t observe the population variance of

Ybar, we can calculate its sample variance, therefore,

$$\mathrm{Var}(\bar{Y}) = \frac{\sigma_Y^2}{N}$$

$$\mathrm{SampleVar}(\bar{Y}) = \frac{S_Y^2}{N}$$
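The results E(Ybar) = μY and Var(Ybar) = σY²/N can be verified exactly for a small case by listing every possible sample. The sketch below assumes, purely for illustration, that the population is one fair six-sided die and that draws are independent; exact fractions avoid rounding.

```python
from fractions import Fraction
from itertools import product

faces = range(1, 7)                       # one fair six-sided die (illustrative population)
mu = Fraction(sum(faces), 6)              # population mean
sigma2 = sum((y - mu) ** 2 for y in faces) / 6   # population variance

def sample_mean_moments(n):
    """Exact mean and variance of Ybar over all 6**n equally likely samples of size n."""
    means = [Fraction(sum(s), n) for s in product(faces, repeat=n)]
    e = sum(means) / len(means)
    v = sum((m - e) ** 2 for m in means) / len(means)
    return e, v

e_ybar, var_ybar = sample_mean_moments(3)
print(e_ybar, var_ybar, sigma2 / 3)
```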

90

5.8 Common Economic Distributions

In order to test assumptions and models,

economists need be familiar with the

following distributions:

Normal

t

Chi-square

F

For full examples and explanations of these

tables, please refer to a statistics text.

91

5.8 Normal Distribution

The Standard Normal (Z) Distribution produces a

symmetric bell-shaped curve with a mean of

zero and a standard deviation of one.

The probability that z>0 is always 0.5

The probability that z<0 is always 0.5

Z-tables generally (but not always) measure area

from the centre

Probabilities decrease as you move from the center

92

5.8 Normal Example

Weekly weight gain can be argued to have a

normal distribution:

On average, no weight is gained or lost

A few pounds may be gained or lost

It is very unlikely to lose or gain many pounds

Find Prob(Gain between 0 and 1 pound)

Prob(0<z<1) = 0.3413

= 34.13%

93

5.8 Normal Example

Find Prob(Lose more than 2 pounds)

Prob(z<-2) = 0.5 - 0.4772

= 0.0228 (2.28%)

Find Prob (Do not gain more than 2 pounds)

Prob(z<2) = 0.5+0.4772

= 0.9772(97.72%)

94

5.8 Converting to a normal distribution

Z distributions assume that the mean is zero and

the standard deviation is one.

If this is not the case, the variable needs to be standardized (converted to Z) using the following formula:

$$Z = \frac{x - \mu_x}{\sigma_x}$$

95

5.8 Assignment Example

The average for the Fall 2005 Assignment #2

was 82%. Standard deviation was approx. 6.

What is the probability of a random student

getting above 90%?

Prob(Y>90)

= Prob[{(Y-82)/6}>{(90-82)/6}]

= Prob(Z>1.33)

= 0.5 - Prob (0<Z<1.33)

= 0.5 - 0.4082

=9.18%

96

5.8 Assignment Example

What is the probability of getting a mark in the

80’s?

Prob(79<Y<90)

= Prob[{(79-82)/6}<{(Y-82)/6}<{(90-82)/6}]

= Prob(-0.5<Z<1.33)

= Prob(0<Z<0.5) + Prob(0<Z<1.33)

=0.1915 + 0.4082

=0.5997

=59.97%

97

5.8 Assignment Example

Find the mark (Y*) wherein there is a 15%

probability that Y<Y* (Bottom 15% of the class)

(Since 0.15 < 0.50, Z* < 0)

Prob(Z<Z*) = 0.5 - Prob(0<Z<-Z*)

0.15 = 0.5 - Prob(0<Z<-Z*)

Prob(0<Z<-Z*) = 0.35

From tables, -Z* = 1.04

Therefore Z* = -1.04

98

5.8 Example Continued

We know that

Z = (x-μ)/σ

So

X= μ+z(σ)

X= 82+(-1.04)6

X= 82-6.24

X= 75.76

There is a 15% chance that a student scored less

than 75.76%
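The table lookups in the assignment example can be cross-checked with Python's standard library (`statistics.NormalDist`, Python 3.8+). This is a sketch, not a replacement for learning the tables; results differ slightly from the slides because the tables round Z to two decimals.

```python
from statistics import NormalDist

marks = NormalDist(mu=82, sigma=6)        # assignment marks from the slides

p_above_90 = 1 - marks.cdf(90)            # Prob(Y > 90)
p_79_to_90 = marks.cdf(90) - marks.cdf(79)   # Prob(79 < Y < 90)
bottom_15_cutoff = marks.inv_cdf(0.15)    # mark Y* with Prob(Y < Y*) = 0.15
print(round(p_above_90, 4), round(p_79_to_90, 4), round(bottom_15_cutoff, 2))
```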

99

5.8 Other Distributions

All other distributions depend on DEGREES OF

FREEDOM

Degrees of Freedom are generally dependent on

two things:

Sample size (as sample size rises, so do

degrees of freedom)

Complication of test (more complicated

statistical tests reduce degrees of freedom)

Simple conclusions are easier to make

than complicated ones

100

5.8 t-distribution

t-distributions can involve 1-tail or 2-tail tests

Interpolation is often needed within the table

Example 1:

Find the critical t-values (t*) that cut off 1% of

both tails with 27df

(Note: 1% off both tails = 0.5% off each tail)

For p=0.495, df 27 gives t*=2.77, -2.77

101

5.8 t-distribution

Example 2:

Find the critical t-value (t*) that cuts off 1% of

the right tail with 35df

For 1T=0.01, df 30 gives t*=2.46

df 40 gives t*=2.42

Since 35 is halfway between 30 and 40, a good

approximation of df 35 would be:

t*=(2.46+2.42)/2 = 2.44

102

5.8 t-distribution

Typically, the following variable (similar to the normal Z variable seen earlier) will have a t-distribution (we will see examples later):

$$t = \frac{\text{Estimator} - E(\text{Estimator})}{\text{Sample sd}(\text{Estimator})}$$

103

5.8 chi-square distribution

Chi-square distributions are 1-tail tests

Interpolation is often needed within the table

Example:

Find the critical chi-squared value that cuts off

5% of the right tail with 2df

For Right Tail = 0.05, df=2

Critical Chi-Squared Value = 5.99

104

5.8 F-distribution

F-distributions are 1-tail tests

Interpolation is often needed within the table

Example:

Find the critical F value (F*) that cuts off 1% of

the right tail with 3df in the numerator and

80df in the denominator

For Right Tail = 0.01, df1=3, df2=80,

df2=60 gives F*=4.13 df2=120 gives F*=3.95

105

5.8 Interpolation

df2=60 gives F*=4.13 df2=120 gives F*=3.95

Since 80 is 1/3rd of the way between 60 and 120, our F-value should be 1/3 of the way between 4.13 and 3.95:

[Figure: number line with df2 = 60, 80, 100, 120 above the F-values 4.13, ?, 3.95]

Approximation:

F*=4.13-(4.13-3.95)/3=4.07
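Both interpolation examples (the t-table at 35 df and the F-table at 80 df) use the same straight-line rule. A small sketch; the function name `interpolate` is an assumption, and the table values are the ones quoted on the slides.

```python
def interpolate(x, x_lo, y_lo, x_hi, y_hi):
    """Linear interpolation of a table value at x between two tabulated points."""
    fraction = (x - x_lo) / (x_hi - x_lo)   # how far x sits between x_lo and x_hi
    return y_lo + fraction * (y_hi - y_lo)

f_star = interpolate(80, 60, 4.13, 120, 3.95)   # F table, right tail 0.01, df1 = 3
t_star = interpolate(35, 30, 2.46, 40, 2.42)    # t table, right tail 0.01
print(round(f_star, 2), round(t_star, 2))
```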

106

5.8 Distribution Usage

Different testing of models will use different

tables, as we will see later in the course.

In general:

1) Normal tables do distribution estimations

2) t-tables do simple tests

3) F-tables do simultaneous tests –Prob(a & b)

4) Chi-squared tables do complicated tests devised by mathematicians smarter than you or me (they invented them, we use them)

5.9 Confidence Intervals

Thus far, all our estimates have been POINT estimates;

a single number emerges as our estimate for an

unknown parameter.

Ie) Xbar = 3.74

Even if we have good data and have an estimator with

a small variance, the chances that our estimate will

equal our actual value are very low.

Ie) A fair coin is expected to turn up heads half the time, but the chance that it does exactly that in an experiment is very low.

5.9.1 Constructing Confidence Intervals

Confidence intervals or interval estimators

acknowledge underlying uncertainties and are

an alternative to point estimators

Confidence intervals propose a range of values

in which the true parameter could lie, given a

range of probability.

Confidence intervals can be constructed since our point estimates are RANDOM VARIABLES.

5.9.1 Degrees of Freedom

When given actual population data, we converted

into a z-score:

Z = (x-μ)/σ

With random samples, we convert into a t-score:

t = (x – E(x)) / sample sd(x)

with n-1 degrees of freedom

This is proven by various complicated central

limit theorems

110

5.9.1 CI’s and Alpha

Probabilities of confidence intervals are denoted

by α (alpha).

Given α, we construct a 100(1- α)% confidence

interval. If α=5%, we construct a 95%

confidence interval.

P(Lower limit<true parameter<Upper limit)=1- α

111

5.9.1 Formula

Given a repeated sample, we want to construct

confidence intervals for the mean such that:

$$P\{-t^* < (\bar{X} - \mu_X)/s_{\bar{X}} < t^*\} = 1 - \alpha$$

[Figure: t-distribution with the central 100(1-α)% region between -t* and t*]

Where t has n-1 degrees of freedom, and ±t* cuts α/2 off each tail.

5.9.1 Formula

Rearranging we get:

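$$P\{\bar{X} - t^* s_{\bar{X}} < \mu_X < \bar{X} + t^* s_{\bar{X}}\} = 1 - \alpha$$

[Figure: the 100(1-α)% interval for μX, running from Xbar - t*sXbar to Xbar + t*sXbar]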

113

5.9.1 Formula

Our final formula becomes:

$$CI_{\mu_X} = \bar{X} \pm t^* s_{\bar{X}}$$

Or in general:

$$CI_{\text{true value}} = \text{estimate} \pm t^* s_{\text{estimate}}$$

Which gives us an upper and lower bound for

our CI.

114

5.9.1 Example

Flipping a coin has given us 25 heads with a value of

1, and 15 tails with a value of zero. Find the

95% CI if n=40.

We therefore have:

$$\bar{C} = \frac{25(1) + 15(0)}{40} = \frac{25}{40} = 0.625$$

115

5.9.1 IMPORTANT - Estimated Standard

Deviation of a Sample Mean

We have already seen that sample standard deviation

is found through the formula:

$$S_Y = \sqrt{\frac{\sum (Y_i - \bar{Y})^2}{N-1}}$$

Standard deviation of a sample mean is found through:

$$s_{\bar{Y}} = s_Y / \sqrt{N}$$

116

5.9.1 Example

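$$S_C = \sqrt{\frac{\sum (C_i - \bar{C})^2}{N-1}}$$

$$S_C = \sqrt{\frac{25(1-0.625)^2 + 15(0-0.625)^2}{40-1}}$$

$$S_C = \sqrt{\frac{9.375}{39}} = 0.49$$

$$s_{\bar{C}} = s_C / \sqrt{N} = 0.49 / \sqrt{40} = 0.077$$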

117

5.9.1 Example

A 95% CI has 2.5% off each tail. If n=40,

t* = 2.02

$$CI_C = \bar{C} \pm t^* s_{\bar{C}}$$

$$CI_C = 0.625 \pm 2.02(0.077)$$

$$CI_C = [0.47, 0.78]$$
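The coin-flip interval can be rebuilt step by step from the raw data. A minimal sketch: the critical value t* = 2.02 is taken from the slide's table lookup rather than computed, since Python's standard library has no t-distribution.

```python
from math import sqrt

flips = [1] * 25 + [0] * 15               # 25 heads (1) and 15 tails (0)
n = len(flips)
c_bar = sum(flips) / n                    # point estimate
s_c = sqrt(sum((c - c_bar) ** 2 for c in flips) / (n - 1))   # sample sd
se_c_bar = s_c / sqrt(n)                  # estimated sd of the sample mean

t_star = 2.02                             # table value quoted on the slide for a 95% CI
ci_low, ci_high = c_bar - t_star * se_c_bar, c_bar + t_star * se_c_bar
print(round(c_bar, 3), round(s_c, 2), round(se_c_bar, 3), round(ci_low, 2), round(ci_high, 2))
```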

118

5.9.1 Interpretation:

In this example, we have a confidence interval of

[0.47, 0.78].

In other words, in repeated samples, 95% of

these intervals will include the probability of

getting a “heads” when flipping a coin.

119

5.9.1 Confidence Requirements

In order to construct a confidence interval, one

needs:

a) A point estimate of the parameter

b) Estimated standard deviation of the

parameter

c) A critical value from a probability distribution

(or α and the sample size, n)

120

5.10 Hypothesis Testing

After a model has been derived, it is often useful to test

various hypotheses:

Is a pair of dice weighted towards a particular number

(say 11)?

Does a player get blackjack more often than he

should?

Will raising tuition increase graduation rates?

Will soaring gas costs decrease car sales?

Will the recession affect Xbox sales?

Does fancy wrapping increase the appeal of

Christmas presents?

Does communication between rivals affect price?

121

5.10 Hypothesis Testing

Question: Is our data CONSISTENT with a

particular parameter having a specific value?

Although we may observe an outcome (ie: a

Blackjack player has 150% of his starting

chips) (assume the average outcome should

be 80%),

We need to test if this outcome is:

1) Consistent with typical chance or

2) Inconsistent – perhaps showing cheating

122

5.10 Hypothesis Testing

Testing Consistency of a Hypothesized Parameter:

1) Form a null and an alternate hypothesis.

H0 = null hypothesis = variable is equal to a

number

Ha = alternate hypothesis = variable is not equal

to a number

EX)

H0: Outcome=0.8

Ha: Outcome≠0.8

123

5.10 Hypothesis Testing

Testing Consistency of a Hypothesized Parameter:

2) Collect appropriate sample data

3) Select an acceptable probability (α) of rejecting

a null hypothesis when it is true

-Type one error

-Lower α, more unlikely to find a sample that

rejects the null hypothesis

- α is often 10%, 5%, or 1%

124

5.10 Hypothesis Testing

Testing Consistency of a Hypothesized Parameter:

4) Construct an appropriate test statistic

-ensure the test statistic can be calculated from

the sample data

-ensure its distribution is appropriate to that being

tested (ie: t-statistic for test for mean)

125

5.10 Hypothesis Testing

Testing Consistency of a Hypothesized Parameter:

5) Establish (do not) reject regions

-Construct bell curve

-Tails are Reject H0 regions

-Centre is Do not Reject H0 regions

126

5.10 Hypothesis Testing

Testing Consistency of a Hypothesized Parameter:

6) Compare the test statistic to the critical statistic

-If the test statistic lies in the tails, reject

-If the test statistic doesn’t lie in the tails, do not

reject

-Never Accept

7) Interpret Results

127

5.10 Hypothetical Example

Johnny is a poker player who wins an average of 8 times out of ten (from 120 games, with a standard deviation of 0.5). Test the hypothesis that Johnny never wins.

1) H0: W=0

Ha: W≠0

2) We have estimated W=8. The standard deviation was 0.5

3) We let α=1%; we want a strong result.

4) t = (estimate-hypothesis)/sd = (8-0)/0.5 = 16

5) t* for n-1=119, α=1%: t*=2.62

6) t*<t; Reject H0

5.10 Hypothetical Example

7) Allowing for a 1% chance of a Type 1 error, we

reject the null hypothesis that Johnny never

wins at Poker.

According to our data, it is consistent that

Johnny sometimes wins at Poker.
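Steps 4 to 6 of the test reduce to one calculation and one comparison. The sketch below uses the poker example's numbers; the function name `t_test_two_sided` is an assumption, and the critical value 2.62 is the table value quoted on the slide, not something the code derives.

```python
def t_test_two_sided(estimate, hypothesized, sd, t_critical):
    """Return the t-statistic and whether H0 is rejected (|t| beyond the critical value)."""
    t_stat = (estimate - hypothesized) / sd
    return t_stat, abs(t_stat) > t_critical

# Values from the poker example; t* = 2.62 for 119 df and alpha = 1%.
t_stat, reject_h0 = t_test_two_sided(estimate=8, hypothesized=0, sd=0.5, t_critical=2.62)
print(t_stat, "reject H0" if reject_h0 else "do not reject H0")
```

A `False` result would mean "do not reject H0", never "accept H0".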

129