15 Regression (completed ppt)

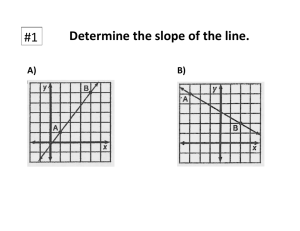

Regression Lines

$y = mx + b$

• $m$ = slope of the line: how steep it is

• $b$ = y-intercept of the line: where the line crosses the Y axis

Slope

Slope is the rate of change in Y relative to X. A steeper slope indicates a greater change in Y for each one-unit change in X.

$$m = \frac{\Delta Y}{\Delta X} = \frac{Y_2 - Y_1}{X_2 - X_1} = \frac{\text{rise}}{\text{run}}$$

Compact and Augmented Model

The Compact Model says that your best guess for any value in a sample is the mean.

C: $Y_i = \beta_0 + \varepsilon_i$

• Anyone's $Y_i$ value (DV) is equal to the intercept ($\beta_0$) plus error.

The Augmented Model improves on the mean-only prediction by adding one or more predictors.

A: $Y_i = \beta_0 + \beta_1 X_1 + \dots + \beta_n X_n + \varepsilon_i$

• Starting from the average height of 5'5", we add other predictors such as shoe size or ring size.

Parameters and Degrees of Freedom

A parameter is a numeric quantity that describes a population characteristic (e.g., the population mean). The number of betas in the compact model (PC) and in the augmented model (PA) is the number of parameters in each model.

$df_{\text{Regression}} = PA - PC$

$df_{\text{Residual}} = N - PA$

$df_{\text{Total}} = N - PC$

Predicting Height From Mean Height

How much error was there?

C: $Y_i = \beta_0 + \varepsilon_i$; PC = 1

Here $\beta_0$ is the average height and $\varepsilon_i$ is the error in the compact model.

$\hat{Y}_C = b_0 = \bar{Y}$

Predicting Height from Shoe Size and Mean Height

How much error was there now?

A: $Y_i = \beta_0 + \beta_1 X_1 + \varepsilon_i$; PA = 2

Here $\beta_0$ is the adjusted mean, $\beta_1$ represents the effect of shoe size, $X_1$ is shoe size (a predictor), and $\varepsilon_i$ is the error.

$\hat{Y}_A = b_0 + b_1 X_1$

$b_1 = \dfrac{SS_{xy}}{SS_x}$ = slope

$b_0 = \bar{Y} - b_1 \bar{X}_1$

Proportional Reduction in Error

PRE is the proportion of error eliminated by using the augmented model, rather than the compact model, to predict height.

$$PRE = R^2 = \eta^2 = \frac{SS_{reg}}{SS_{total}} = \left(\frac{SS_{xy}}{\sqrt{SS_x \, SS_y}}\right)^2$$

Creating the ANOVA Table

The Coefficients Table

Comparing Regression Printout With ANOVA

Contrast Coding

Contrast codes are orthogonal codes, meaning that the codes are unrelated to one another.
Three rules to follow when using contrast codes:

• The sum of the weights across all groups must be zero.

• The sum of the products of the weights for each pair of code variables must be zero.

• The difference between the positive weights and the negative weights should be one for each code variable.

http://www.stat.sc.edu/~mclaina/psyc/1st%20lab%20notes%20710.pdf

Sums of Squares Everywhere!

$SSE(C) = SS_y = SS_t$

$SSE(A) = SS_{resid} = SS_w$

$SS_{reg} = SS_b$
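The sums-of-squares identities and the height-from-shoe-size example can be tied together in a short sketch. The data below are hypothetical (five made-up shoe sizes and heights in inches), and the function name `fit_simple_regression` is an illustrative choice, not something from the slides. The F ratio at the end is the standard ANOVA-table computation and is included as an assumption about what the ANOVA table slide showed.

```python
import math

# Hypothetical data: shoe size (X) and height in inches (Y) for five people.
shoe = [7.0, 8.0, 9.0, 10.0, 11.0]
height = [63.0, 65.0, 66.0, 69.0, 72.0]


def fit_simple_regression(x, y):
    """Compare the compact model (mean only) with the augmented model (one predictor)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n

    ss_x = sum((xi - x_bar) ** 2 for xi in x)
    ss_y = sum((yi - y_bar) ** 2 for yi in y)
    ss_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))

    b1 = ss_xy / ss_x          # slope
    b0 = y_bar - b1 * x_bar    # intercept

    sse_c = ss_y                                                   # SSE(C) = SSy = SStotal
    sse_a = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))  # SSE(A) = SSresid
    ss_reg = sse_c - sse_a                                         # error removed by the predictor

    p_c, p_a = 1, 2            # parameters in the compact and augmented models
    df_reg = p_a - p_c
    df_resid = n - p_a
    df_total = n - p_c

    pre = ss_reg / sse_c                      # PRE = R^2
    r = ss_xy / math.sqrt(ss_x * ss_y)        # correlation; r^2 should match PRE
    f_ratio = (ss_reg / df_reg) / (sse_a / df_resid)

    return {
        "b0": b0, "b1": b1,
        "sse_c": sse_c, "sse_a": sse_a, "ss_reg": ss_reg,
        "df_reg": df_reg, "df_resid": df_resid, "df_total": df_total,
        "pre": pre, "r_squared": r ** 2, "f": f_ratio,
    }


result = fit_simple_regression(shoe, height)
for name, value in result.items():
    print(f"{name}: {value}")
```

With these made-up numbers the slope comes out to about 2.2 inches of height per shoe size, and PRE is about 0.97, meaning shoe size removes roughly 97% of the error left by guessing the mean for everyone.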
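The three contrast-code rules listed above can be checked mechanically. This is a minimal sketch, assuming a hypothetical three-group design with two code variables named `c1` and `c2`; the weights are illustrative, not taken from the slides. The third rule is read here as "largest weight minus smallest weight equals one", which is one interpretation of the slide's wording.

```python
from itertools import combinations

# Hypothetical contrast codes for three groups (one weight per group).
# c1 compares group 1 with groups 2 and 3; c2 compares group 2 with group 3.
codes = {
    "c1": [2 / 3, -1 / 3, -1 / 3],
    "c2": [0.0, 1 / 2, -1 / 2],
}


def check_contrast_codes(codes, tol=1e-9):
    """Return which of the three contrast-code rules a set of codes satisfies."""
    # Rule 1: the weights of each code variable sum to zero.
    sums_to_zero = all(abs(sum(w)) < tol for w in codes.values())

    # Rule 2: for every pair of code variables, the sum of products is zero.
    orthogonal = all(
        abs(sum(a * b for a, b in zip(codes[p], codes[q]))) < tol
        for p, q in combinations(codes, 2)
    )

    # Rule 3 (assumed reading): positive weight minus negative weight equals one.
    unit_difference = all(
        abs((max(w) - min(w)) - 1.0) < tol for w in codes.values()
    )

    return {
        "sums_to_zero": sums_to_zero,
        "orthogonal": orthogonal,
        "unit_difference": unit_difference,
    }


print(check_contrast_codes(codes))
```

Swapping in a set such as `{"c1": [1, 0, -1], "c2": [1, -1, 0]}` is a quick way to see a failure: each code sums to zero, but the pair's sum of products is 1, so the codes are not orthogonal.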