statLecture11b

Corpora and Statistical Methods

Lecture 11

Albert Gatt

Part 2

Statistical parsing

Preliminary issues

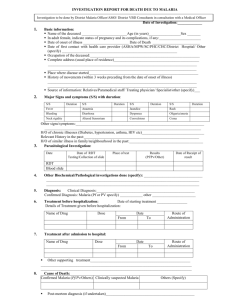

How parsers are evaluated

Evaluation

The issue:

what objective criterion are we trying to maximise?

i.e. under what objective function can I say that my parser does “well”

(and how well?)

need a gold standard

Possibilities:

strict match of candidate parse against gold standard

match of components of candidate parse against gold standard

components

Evaluation

A classic evaluation metric is the PARSEVAL one

initiative to compare parsers on the same data

not initially concerned with stochastic parsers

evaluate parser output piece by piece

Main components:

compares gold standard tree to parser tree

typically, gold standard is the tree in a treebank

computes:

precision

recall

crossing brackets

PARSEVAL: labeled recall

$$\text{labeled recall} = \frac{\#\ \text{correct nodes in candidate parse}}{\#\ \text{nodes in treebank parse}}$$

Correct node = node in candidate parse which:

has the same node label (this criterion was originally omitted from PARSEVAL to avoid theoretical conflict)

spans the same words

PARSEVAL: labeled precision

$$\text{labeled precision} = \frac{\#\ \text{correct nodes in candidate parse}}{\#\ \text{nodes in candidate parse}}$$

The proportion of correctly labelled and correctly spanning nodes in the candidate.

Combining Precision and Recall

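As usual, precision and recall can be combined into a single F-measure:

$$F = \frac{1}{\alpha \frac{1}{P} + (1 - \alpha) \frac{1}{R}}$$

where α weights precision against recall; α = 0.5 gives the balanced measure F = 2PR / (P + R).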

PARSEVAL: crossed brackets

number of brackets in the candidate parse which cross

brackets in the treebank parse

e.g. treebank has ((X Y) Z) and candidate has (X (Y Z))

Unlike precision/recall, this is an objective function to

minimise
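The three PARSEVAL measures can be sketched in a few lines of Python. This is a minimal illustration, not a reference scorer: it assumes each parse is given as a non-empty collection of (label, start, end) constituents over between-word indices, it treats constituents as a set (so duplicates collapse), and the function name `parseval` and the labels "A" and "B" are made up for the example.

```python
def parseval(candidate, gold):
    """Score one candidate parse against one gold-standard parse.

    Both arguments are collections of (label, start, end) constituents,
    where start and end are between-word indices.
    """
    cand, ref = set(candidate), set(gold)
    correct = cand & ref  # same label and same span
    precision = len(correct) / len(cand)
    recall = len(correct) / len(ref)
    f1 = 2 * precision * recall / (precision + recall) if correct else 0.0

    def crosses(a, b):
        # Two spans cross when they overlap but neither contains the other.
        return a[0] < b[0] < a[1] < b[1] or b[0] < a[0] < b[1] < a[1]

    gold_spans = {(s, e) for _, s, e in ref}
    # Count candidate brackets that cross at least one gold bracket.
    crossing = sum(
        any(crosses((s, e), g) for g in gold_spans) for _, s, e in cand
    )
    return precision, recall, f1, crossing


# Treebank has ((X Y) Z); the candidate has (X (Y Z)).
gold = [("A", 0, 3), ("B", 0, 2)]
candidate = [("A", 0, 3), ("B", 1, 3)]
precision, recall, f1, crossing = parseval(candidate, gold)
print(precision, recall, f1, crossing)
```

Here only the root bracket matches, and the candidate bracket over (Y Z) crosses the treebank bracket over (X Y).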

Current performance

Current parsers achieve:

ca. 90% precision

>90% recall

1% cross-bracketed constituents

Some issues with PARSEVAL

1. These measures evaluate parses at the level of individual decisions (nodes).

They ignore the difficulty of getting a globally correct solution by carrying out a correct sequence of decisions.

2. Success on crossing brackets depends on the kind of parse trees used.

The Penn Treebank has very flat trees (not much embedding), therefore the likelihood of crossed brackets decreases.

3. In PARSEVAL, if a constituent is attached lower in a tree than the gold standard, all its daughters are counted wrong.

Probabilistic parsing with PCFGs

The basic algorithm

The basic PCFG parsing algorithm

Many statistical parsers use a version of the CYK algorithm.

Assumptions:

CFG productions are in Chomsky Normal Form.

A → B C

A → a

Use indices between words:

Book the flight through Houston

(0) Book (1) the (2) flight (3) through (4) Houston (5)

Procedure (bottom-up):

Traverse input sentence left-to-right

Use a chart to store constituents and their span + their probability.

Probabilistic CYK: example PCFG

S → NP VP [.80]

NP → Det N [.30]

VP → V NP [.20]

V → includes [.05]

Det → the [.4]

Det → a [.4]

N → meal [.01]

N → flight [.02]

Probabilistic CYK: initialisation

The flight includes a meal.

    // Lexical lookup:
    for j = 1 to length(string) do:
        chart[j-1, j] := {X : X -> word_j in G}
        // Syntactic lookup:
        for i = j-2 down to 0 do:
            chart[i, j] := {}
            for k = i+1 to j-1 do:
                for each A -> B C in G do:
                    if B in chart[i, k] and C in chart[k, j]:
                        chart[i, j] := chart[i, j] U {A}

(Chart: initially empty, with rows and columns indexed by the word positions 0 to 5.)

Probabilistic CYK: lexical step

j = 1 ("The"): lexical lookup adds Det (.4) to chart[0,1].

Probabilistic CYK: lexical step

j = 2 ("flight"): lexical lookup adds N (.02) to chart[1,2].

Probabilistic CYK: syntactic step

j = 2, i = 0, k = 1: Det in chart[0,1] and N in chart[1,2] match NP → Det N [.30], so NP is added to chart[0,2] with probability .30 × .4 × .02 = .0024.

Probabilistic CYK: lexical step

j = 3 ("includes"): lexical lookup adds V (.05) to chart[2,3].

Probabilistic CYK: lexical step

j = 4 ("a"): lexical lookup adds Det (.4) to chart[3,4].

Probabilistic CYK: lexical step

j = 5 ("meal"): lexical lookup adds N (.01) to chart[4,5].

Probabilistic CYK: syntactic step

j = 5, i = 3, k = 4: Det in chart[3,4] and N in chart[4,5] match NP → Det N [.30], so NP is added to chart[3,5] with probability .30 × .4 × .01 = .0012.

Probabilistic CYK: syntactic step

j = 5, i = 2, k = 3: V in chart[2,3] and NP in chart[3,5] match VP → V NP [.20], so VP is added to chart[2,5] with probability .20 × .05 × .0012 = .000012.

Probabilistic CYK: syntactic step

j = 5, i = 0, k = 2: NP in chart[0,2] and VP in chart[2,5] match S → NP VP [.80], so S is added to chart[0,5] with probability .80 × .0024 × .000012 = .00000002304.

Final chart:

chart[0,1] Det (.4); chart[0,2] NP (.0024); chart[0,5] S (.00000002304)

chart[1,2] N (.02)

chart[2,3] V (.05); chart[2,5] VP (.000012)

chart[3,4] Det (.4); chart[3,5] NP (.0012)

chart[4,5] N (.01)

Probabilistic CYK: summary

Cells in chart hold probabilities

Bottom-up procedure computes probability of a parse

incrementally.

To obtain parse trees, cells need to be augmented with

backpointers.
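The whole procedure, including the backpointers mentioned above, can be sketched in Python for the example PCFG. This is an illustrative sketch rather than a definitive implementation: the names `pcyk`, `build_tree`, `BINARY` and `LEXICAL` are invented here, words are lower-cased to match the grammar, and each cell keeps only the most probable analysis per label (Viterbi-style).

```python
from collections import defaultdict

# The example PCFG, in Chomsky Normal Form.
BINARY = {
    ("NP", "VP"): [("S", 0.80)],
    ("Det", "N"): [("NP", 0.30)],
    ("V", "NP"): [("VP", 0.20)],
}
LEXICAL = {
    "the": [("Det", 0.4)],
    "a": [("Det", 0.4)],
    "meal": [("N", 0.01)],
    "flight": [("N", 0.02)],
    "includes": [("V", 0.05)],
}


def pcyk(words):
    """Return (chart, back): best probability per span and label, plus backpointers."""
    chart = defaultdict(dict)  # chart[(i, j)][A] = best probability of A over span i..j
    back = {}                  # back[(i, j, A)] = (k, B, C) used for that best entry
    for j in range(1, len(words) + 1):
        # Lexical lookup for word j.
        for label, p in LEXICAL.get(words[j - 1], []):
            chart[(j - 1, j)][label] = p
        # Syntactic lookup: widen spans ending at j, trying every split point k.
        for i in range(j - 2, -1, -1):
            for k in range(i + 1, j):
                for b, pb in chart[(i, k)].items():
                    for c, pc in chart[(k, j)].items():
                        for a, p_rule in BINARY.get((b, c), []):
                            p = p_rule * pb * pc
                            if p > chart[(i, j)].get(a, 0.0):
                                chart[(i, j)][a] = p
                                back[(i, j, a)] = (k, b, c)
    return chart, back


def build_tree(back, words, i, j, label):
    """Follow backpointers to recover the best tree as nested tuples."""
    if (i, j, label) not in back:  # pre-terminal over a single word
        return (label, words[i])
    k, b, c = back[(i, j, label)]
    return (label,
            build_tree(back, words, i, k, b),
            build_tree(back, words, k, j, c))


words = "the flight includes a meal".split()
chart, back = pcyk(words)
best = chart[(0, len(words))]["S"]
tree = build_tree(back, words, 0, len(words), "S")
print(best)
print(tree)
```

Storing one backpointer per (span, label) entry is enough to recover the single best tree; keeping all backpointers instead would give access to every parse.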

Probabilistic parsing with lexicalised PCFGs

Main approaches (focus on Collins (1997,1999))

see also: Charniak (1997)

Unlexicalised PCFG Estimation

Charniak (1996) used Penn Treebank POS and phrasal

categories to induce a maximum likelihood PCFG

only used relative frequency of local trees as the estimates for rule

probabilities

did not apply smoothing or any other techniques

Works surprisingly well:

80.4% recall; 78.8% precision (crossed brackets not estimated)

Suggests that most parsing decisions are mundane and can be handled well by

unlexicalized PCFG
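Relative-frequency estimation of this kind can be sketched as follows. The two-tree "treebank" is a toy invented for illustration (it is not Penn Treebank data), trees are assumed to be nested tuples of the form (label, child, ...), and the function names are hypothetical.

```python
from collections import Counter


def local_trees(tree):
    """Yield (lhs, rhs) for every local tree (depth-1 subtree) in a nested-tuple tree."""
    label, *children = tree
    rhs = tuple(c if isinstance(c, str) else c[0] for c in children)
    yield (label, rhs)
    for child in children:
        if not isinstance(child, str):
            yield from local_trees(child)


def estimate_pcfg(treebank):
    """Maximum likelihood estimate: P(A -> rhs) = Count(A -> rhs) / Count(A)."""
    rule_counts = Counter(rule for tree in treebank for rule in local_trees(tree))
    lhs_counts = Counter()
    for (lhs, _), count in rule_counts.items():
        lhs_counts[lhs] += count
    # No smoothing: unseen rules simply get no entry (probability zero).
    return {rule: count / lhs_counts[rule[0]] for rule, count in rule_counts.items()}


toy_treebank = [
    ("S", ("NP", ("Det", "the"), ("N", "flight")),
          ("VP", ("V", "includes"), ("NP", ("Det", "a"), ("N", "meal")))),
    ("S", ("NP", ("N", "flights")), ("VP", ("V", "leave"))),
]
probs = estimate_pcfg(toy_treebank)
print(probs[("NP", ("Det", "N"))])  # NP -> Det N occurs in 2 of the 3 NP expansions
```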

Probabilistic lexicalised PCFGs

Standard format of lexicalised rules:

associate head word with non-terminal

e.g. dumped sacks into

VP(dumped) → VBD(dumped) NP(sacks) PP(into)

associate head tag with non-terminal

VP(dumped,VBD) → VBD(dumped,VBD) NP(sacks,NNS) PP(into,IN)

Types of rules:

lexical rules expand pre-terminals to words:

e.g. NNS(sacks,NNS) → sacks

probability is always 1

internal rules expand non-terminals

e.g. VP(dumped,VBD) → VBD(dumped,VBD) NP(sacks,NNS) PP(into,IN)

Estimating probabilities

Non-generative model:

take an MLE estimate of the probability of an entire rule

$$\frac{\text{Count}(VP(dumped,VBD) \rightarrow VBD(dumped,VBD)\ NP(sacks,NNS)\ PP(into,IN))}{\text{Count}(VP(dumped,VBD))}$$

non-generative models suffer from serious data sparseness problems

Generative model:

estimate the probability of a rule by breaking it up into sub-rules.

Collins Model 1

Main idea:

represent CFG rules as expansions into Head + left modifiers + right modifiers

$$LHS \rightarrow STOP\ L_n\ L_{n-1} \ldots L_1\ H\ R_1 \ldots R_{n-1}\ R_n\ STOP$$

L_i / R_i is of the form L/R(word,tag); e.g. NP(sacks,NNS)

STOP: special symbol indicating left/right boundary.

Parsing:

Given the LHS, generate the head of the rule, then the left modifiers (until

STOP) and right modifiers (until STOP) inside-out.

Each step has a probability.

Collins Model 1: example

VP(dumped,VBD) → VBD(dumped,VBD) NP(sacks,NNS) PP(into,IN)

1. Head H(hw,ht):

$$P_H(H(hw, ht) \mid Parent, hw, ht)$$

e.g. $P_H(VBD(dumped,VBD) \mid VP(dumped,VBD))$

Collins Model 1: example

2. Left modifiers (with $L_{n+1}$ = STOP):

$$\prod_{i=1}^{n+1} P_L(L_i(lw_i, lt_i) \mid Parent, H, hw, ht)$$

The example rule has no left modifiers, so only STOP is generated:

$P_L(STOP \mid VP(dumped,VBD), VBD(dumped,VBD))$

Collins Model 1: example

3. Right modifiers (with $R_{n+1}$ = STOP):

$$\prod_{i=1}^{n+1} P_R(R_i(rw_i, rt_i) \mid Parent, H, hw, ht)$$

For the example rule:

$P_R(NP(sacks,NNS) \mid VP(dumped,VBD), VBD(dumped,VBD))$

$P_R(PP(into,IN) \mid VP(dumped,VBD), VBD(dumped,VBD))$

$P_R(STOP \mid VP(dumped,VBD), VBD(dumped,VBD))$

Collins Model 1: example

4. Total probability: multiplication of (1) – (3)
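As a worked instance of the total probability, and assuming the head, left-modifier and right-modifier probabilities are simply multiplied as stated, the probability of the example rule VP(dumped,VBD) → VBD(dumped,VBD) NP(sacks,NNS) PP(into,IN) expands to the following product (the name P(rule) is introduced here only for illustration):

```latex
\begin{align*}
P(\text{rule}) ={}& P_H(VBD(dumped,VBD) \mid VP(dumped,VBD)) \\
&\times P_L(STOP \mid VP(dumped,VBD),\ VBD(dumped,VBD)) \\
&\times P_R(NP(sacks,NNS) \mid VP(dumped,VBD),\ VBD(dumped,VBD)) \\
&\times P_R(PP(into,IN) \mid VP(dumped,VBD),\ VBD(dumped,VBD)) \\
&\times P_R(STOP \mid VP(dumped,VBD),\ VBD(dumped,VBD))
\end{align*}
```

Each factor conditions on far fewer events than the whole rule does, which is what eases the data sparseness problem of the non-generative estimate.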

Variations on Model 1: distance

Collins proposed to extend rules by conditioning on the distance of modifiers from the head:

$$P_L(L_i(lw_i, lt_i) \mid P, H, hw, ht, distance_L(i-1))$$

$$P_R(R_i(rw_i, rt_i) \mid P, H, hw, ht, distance_R(i-1))$$

The distance is a function of the yield of the modifiers seen so far; e.g. the distance for the R2 probability is a function of the words under R1.

Using a distance function

Simplest kind of distance function is a tuple of binary features:

Is the string of length 0?

Does the string contain a verb?

…

Example uses:

if the string has length 0, PR should be higher:

English is right-branching & most right modifiers are adjacent to the head verb

if string contains a verb, PR should be higher:

accounts for preference to attach dependencies to main verb
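A distance function of this kind might be sketched as below. This is only an illustration under stated assumptions: the function name `distance_features` is invented, the intervening string is assumed to be a list of (word, tag) pairs, and "verb" is approximated by the Penn Treebank tags starting with VB.

```python
# Assumption: a word counts as a verb if it carries one of these Penn Treebank tags.
VERB_TAGS = {"VB", "VBD", "VBG", "VBN", "VBP", "VBZ"}


def distance_features(intervening):
    """Map the string between the head and the next modifier to binary features.

    intervening: list of (word, tag) pairs already generated on that side of the head.
    Returns (is the string of length 0?, does the string contain a verb?).
    """
    is_empty = len(intervening) == 0
    has_verb = any(tag in VERB_TAGS for _, tag in intervening)
    return (is_empty, has_verb)


# VP(dumped,VBD) -> VBD(dumped,VBD) NP(sacks,NNS) PP(into,IN)
# R1 = NP(sacks) is adjacent to the head; R2 = PP(into) follows the words under R1.
features_r1 = distance_features([])
features_r2 = distance_features([("sacks", "NNS")])
print(features_r1, features_r2)
```

The resulting tuple would be added to the conditioning context of the modifier probability, so the model can learn different estimates for adjacent and non-adjacent modifiers.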

Further additions

Collins Model 2:

subcategorisation preferences

distinction between complements and adjuncts.

Collins Model 3:

augmented to deal with long-distance (WH) dependencies.

Smoothing and backoff

Rules may condition on words that never occur in training data.

Collins used a 3-level backoff model, with the levels combined using linear interpolation:

1. use head word: $P_R(R_i(rw_i, rt_i) \mid Parent, H, hw, ht)$

2. use head tag: $P_R(R_i(rw_i, rt_i) \mid Parent, H, ht)$

3. parent only: $P_R(R_i(rw_i, rt_i) \mid Parent)$
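One common way to combine three backoff levels by linear interpolation is the nested form sketched below. The function name, the estimates and the weights `lam1` and `lam2` are hypothetical values chosen for illustration; how the weights are actually set is not covered here.

```python
def interpolate(e1, e2, e3, lam1, lam2):
    """Linearly interpolate three estimates, from most to least specific.

    e1: estimate conditioned on Parent, H, hw, ht (head word level)
    e2: estimate conditioned on Parent, H, ht     (head tag level)
    e3: estimate conditioned on Parent only
    lam1, lam2: interpolation weights in [0, 1] (assumed to be given)
    """
    return lam1 * e1 + (1 - lam1) * (lam2 * e2 + (1 - lam2) * e3)


# The head word was never seen with this modifier, so the most specific
# estimate is 0; the less specific levels keep the result above zero.
smoothed = interpolate(e1=0.0, e2=0.2, e3=0.1, lam1=0.5, lam2=0.8)
print(smoothed)
```

Because the weights at each level sum to one, the result is still a probability whenever the three inputs are.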

Other parsing approaches

Data-oriented parsing

Alternative to “grammar-based” models

does not attempt to derive a grammar from a treebank

treebank data is stored as fragments of trees

parser uses whichever trees seem to be useful

Data-oriented parsing

Suppose we want to parse Sue heard Jim.

Corpus contains the following potentially useful fragments:

Parser can combine these to give

a parse

Data-oriented Parsing

Multiple fundamentally distinct derivations of a single tree.

Parse using Monte Carlo simulation methods:

randomly produce a large sample of derivations

use these to find the most probable parse

disadvantage: needs very large samples to make parses accurate,

therefore potentially slow
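The sampling idea can be illustrated with a deliberately simplified sketch. It assumes the derivations and their probabilities are already available as a list of (tree, probability) pairs, which is a hypothetical stand-in: a real DOP parser would sample derivations by composing tree fragments. The names `most_probable_parse`, "tree_1" and "tree_2" are invented.

```python
import random
from collections import Counter


def most_probable_parse(derivations, n_samples=10_000, seed=0):
    """Estimate the most probable parse by sampling derivations.

    derivations: list of (tree, probability) pairs; several derivations
    may yield the same tree.
    """
    rng = random.Random(seed)
    trees = [tree for tree, _ in derivations]
    weights = [p for _, p in derivations]
    sample = rng.choices(trees, weights=weights, k=n_samples)
    # The tree produced most often in the sample is the estimate.
    return Counter(sample).most_common(1)[0][0]


# tree_2 has the single most probable derivation (0.45), but the two
# derivations of tree_1 together carry more probability mass (0.55).
derivations = [("tree_1", 0.30), ("tree_1", 0.25), ("tree_2", 0.45)]
winner = most_probable_parse(derivations)
print(winner)
```

The example shows why the most probable derivation and the most probable parse can differ, and why the sample has to be large enough for the counts to separate the candidates reliably.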

Data-oriented parsing vs. PCFGs

Possible advantages:

using partial trees directly accounts for lexical dependencies

also accounts for multi-word expressions and idioms (e.g. take

advantage of)

while PCFG rules only represent trees of depth 1, DOP fragments can represent trees of arbitrary depth

Similarities to PCFG:

tree fragments could be equivalent to PCFG rules

probabilities estimated for grammar rules are exactly the same as for

tree fragments

History Based Grammars (HBG)

General idea: any derivational step can be influenced by any

earlier derivational step

(Black et al. 1993)

the probability of expansion of the current node conditioned on

all previous nodes along the path from the root

History Based Grammars (HBG)

Black et al. lexicalise their grammar.

every phrasal node inherits 2 words:

its lexical head H1

a secondary head H2, deemed to be useful

e.g. the PP in the bank might have H1=in and H2=bank

Every non-terminal is also assigned:

a syntactic category (Syn) e.g. PP

a semantic category (Sem) e.g. with-Data

Use the index I that indicates what number child of the

parent node is being expanded

HBG Example (Black et al 1993)

History Based Grammars (HBG)

Estimation of the probability of a rule R:

$$P(Syn, Sem, R, H_1, H_2 \mid Syn_p, Sem_p, R_p, I, H_{1p}, H_{2p})$$

probability of:

the current rule R to be applied

its Syn and Sem category

its heads H1 and H2

conditioned on:

Syn and Sem of parent node

the rule that gave rise to the parent

the index of this child relative to the parent

the heads H1 and H2 of the parent

Summary

This concludes our overview of statistical parsing

We’ve looked at three important models

Also considered basic search techniques and algorithms