Chapter 1


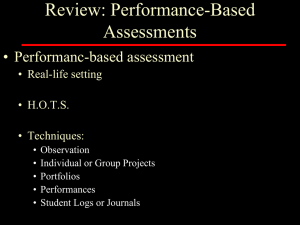

Review: Alternative Assessments
• Alternative/authentic assessment
  – Real-life setting
  – Performance based
• Techniques:
  – Observation
  – Individual or group projects
  – Portfolios
  – Exhibitions
  – Student logs or journals
• Developing alternative assessments:
  – Determine purpose
  – Define the target
  – Select the appropriate assessment task
  – Set performance criteria
  – Determine assessment quality

Review: Grading
• Grading process:
  – Objectives of instruction
  – Test selection and administration
  – Results compared to standards
• Making grading fair, reliable, and valid:
  – Determine defensible objectives
  – Ability group students
  – Construct tests that reflect objectivity
  – No test is perfectly reliable
  – Grades should reflect status, not improvement
  – Do not use grades to reward good effort
  – Consider grades as measurements, not evaluations
• Final grades

Cognitive Assessments: Physical Fitness Knowledge
HPER 3150
Dr. Ayers

Test Planning
• Types
  – Mastery (driver's license)
  – Achievement (mid-term)
• Table of Specifications (content-related validity)
  – Content objectives: history, values, equipment, etiquette, safety, rules, strategy, techniques of play
  – Educational objectives (Bloom's taxonomy, 1956): knowledge, comprehension, application, analysis, synthesis, evaluation

Table of Specifications for a 33-Item Exercise Physiology Concepts Test (Ask-PE, Ayers, 2003)
• T of SPECS-E.doc

Test Characteristics
• When to test
  – Often enough for reliability, but not so often that testing loses its usefulness
• How many questions (pp. 185-186 guidelines)
  – More items yield greater reliability
• Format to use (p. 186 guidelines)
  – Oral (NO), group (NO), written (YES)
  – Open book/note, take-home: (dis)advantages of both
• Question types
  – Semi-objective (short-answer, completion, mathematical)
  – Objective (t/f, matching, multiple-choice, classification)
  – Essay

Semi-objective Questions
• Short-answer, completion, mathematical
• When to use (factual and recall material)
• Weaknesses
• Construction recommendations (p. 190)
• Scoring recommendations

Objective Questions
• True/false, matching, multiple-choice
• When to use (M-C: MOST IDEAL)
• FORM7 (B,E).doc
• pp. 196-203: M-C guidelines
• Construction recommendations (pp. 191-200)
• Scoring recommendations

Cognitive Assessments I
• Explain one thing that you learned today to a classmate

Review: Cognitive Assessments I
• Test types
  – Mastery
  – Achievement
• Table of Specifications (value, use, purpose)
• Question types
  – Semi-objective: short-answer, completion, mathematical
  – Objective: t/f, matching, multiple-choice
  – Essay (we did not get this far)

Figure 10.1 The difference between extrinsic and intrinsic ambiguity (A is correct). [Figure panels: Too easy; Extrinsic ambiguity (weak Ss miss); Intrinsic ambiguity (all foils = appealing)]

Essay Questions
• When to use (definitions, interpretations, comparisons)
• Weaknesses
• Scoring
• Objectivity
• Construction and scoring recommendations (pp. 205-207)

Administering the Written Test
• Before the test
• During the test
• After the test

Characteristics of Good Test Items
• Leave little to "chance"
• Reliable
• Relevant
• Valid
• Average difficulty
• Discriminate
  – Gotten correct by more knowledgeable students
  – Missed by less knowledgeable students
• Time-consuming to write

Quality of the Test
• Reliability and validity
• Overall test quality
• Individual item quality

Item Analysis
• Used to determine the quality of individual test items
• Item difficulty
  – Percent answering correctly
• Item discrimination
  – How well the item "functions"
  – Also how "valid" the item is, based on the total test score criterion

Item Difficulty
• 0 (nobody got it right) to 100 (everybody got it right)
• Goal = 50%
$$\text{Difficulty} = \frac{U_c + L_c}{U_n + L_n} \times 100$$
where $U_c$ and $L_c$ are the numbers of students in the upper- and lower-scoring groups who answered the item correctly, and $U_n$ and $L_n$ are the numbers of students in those groups.

Item Discrimination
• Below 20%, including negative values (poor)
• 20-40% (acceptable)
• Goal: above 40%
$$\text{Discrimination} = \frac{U_c - L_c}{U_n} \times 100$$
(using only $U_n$ in the denominator assumes equal-sized groups, $U_n = L_n$)

Figure 10.4 The relationship between item discrimination and difficulty

Sources of Written Tests
• Professionally constructed tests (FitSmart, Ask-PE)
• Textbooks (McGee & Farrow, 1987)
• Periodicals, theses, and dissertations

Questionnaires
• Determine the objectives
• Delimit the sample
• Construct the questionnaire
• Conduct a pilot study
• Write a cover letter
• Send the questionnaire
• Follow up with non-respondents
• Analyze the results and prepare the report

Constructing Open-Ended Questions
• Advantages
  – Allow for creative answers
  – Allow respondents to give detailed answers
  – Can be used when the number of possible categories is large
  – Probably better when complex questions are involved
• Disadvantages
  – Analysis is difficult because responses are not standardized
  – Require more respondent time to complete
  – Can be ambiguous
  – Can result in irrelevant data

Constructing Closed-Ended Questions
• Advantages
  – Easy to code
  – Result in standard responses
  – Usually less ambiguous
  – Easy to respond to
• Disadvantages
  – Frustration if the correct category is not present
  – Respondent may choose an inappropriate category
  – May require many categories to capture ALL responses
  – Subject to possible recording errors

Factors Affecting the Questionnaire Response
• Cover letter: be brief and informative
• Ease of return: you DO want it back!
• Neatness and length: be professional and brief
• Inducements: money and flattery
• Timing and deadlines: time of year and sufficient time to complete
• Follow-up: at least once (two follow-ups give about the best response rate you will get)

The BIG Issues in Questionnaire Development
• Reliability: consistency of measurement
• Validity: truthfulness of response
• Representativeness of the sample: to whom can you generalize?

Cognitive Assessments II
• Ask for clarity on something that challenged you today
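The Review: Grading slide describes grading as comparing results with standards. It also says grades should reflect status, not improvement or effort. Below is a minimal Python sketch of that comparison. The percent cutoffs in `STANDARDS` and the `assign_grade` helper are hypothetical; a course would substitute its own defensible standards.

```python
# Hypothetical criterion-referenced standards: (minimum percent, grade).
# These cutoffs are illustrative only, not the course's actual standards.
STANDARDS = [(90.0, "A"), (80.0, "B"), (70.0, "C"), (60.0, "D")]


def assign_grade(final_percent: float, standards=STANDARDS) -> str:
    """Compare a final (status) score with fixed standards.

    Only the final score is used: neither improvement since a pretest
    nor effort enters the calculation.
    """
    if not 0.0 <= final_percent <= 100.0:
        raise ValueError("final_percent must be between 0 and 100")
    # Check cutoffs from highest to lowest; the first one met decides.
    for cutoff, grade in sorted(standards, reverse=True):
        if final_percent >= cutoff:
            return grade
    return "F"


if __name__ == "__main__":
    # Two hypothetical students with very different gains but the same
    # final score receive the same grade.
    for name, pretest, final in [("Student 1", 50.0, 84.0), ("Student 2", 80.0, 84.0)]:
        print(name, "gain:", final - pretest, "grade:", assign_grade(final))
```

Because the function never sees the pretest or any effort rating, improvement cannot change the grade. That matches the slide's "status, not improvement" guideline.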
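The Table of Specifications on the Test Planning slides crosses content objectives with Bloom's educational objectives to support content-related validity. Below is a sketch of such a grid in Python. Row and column totals show whether the planned items match the intended emphasis. The objectives chosen and every item count are hypothetical; this is not the Ask-PE exercise physiology table.

```python
# Hypothetical table of specifications: rows are content objectives,
# columns are Bloom's (1956) levels, and each cell is the number of items
# planned. Objectives chosen and counts are illustrative only.
BLOOM_LEVELS = ["knowledge", "comprehension", "application",
                "analysis", "synthesis", "evaluation"]

TABLE_OF_SPECS = {
    "rules":     {"knowledge": 3, "comprehension": 2, "application": 1},
    "safety":    {"knowledge": 2, "comprehension": 2},
    "strategy":  {"comprehension": 1, "application": 2, "analysis": 2},
    "technique": {"knowledge": 1, "application": 2, "evaluation": 2},
}


def row_totals(specs):
    """Items planned for each content objective."""
    return {content: sum(cells.values()) for content, cells in specs.items()}


def column_totals(specs):
    """Items planned at each Bloom level (0 if a level is not tested)."""
    return {level: sum(cells.get(level, 0) for cells in specs.values())
            for level in BLOOM_LEVELS}


def percent_of_test(totals):
    """Share of the whole test, in percent, for each row or column."""
    grand_total = sum(totals.values())
    return {key: round(100 * n / grand_total, 1) for key, n in totals.items()}


if __name__ == "__main__":
    rows = row_totals(TABLE_OF_SPECS)
    print("items per content objective:", rows)
    print("percent per content objective:", percent_of_test(rows))
    print("items per Bloom level:", column_totals(TABLE_OF_SPECS))
```

A zero column total (here, synthesis) makes an untested level visible before the test is written.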
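The Test Characteristics slide notes that more items yield greater reliability. One standard way to quantify this is the Spearman-Brown prophecy formula, which the slides do not mention: r_k = k·r / (1 + (k - 1)·r). It assumes any added items are comparable to the existing ones. Below is a short Python sketch. The function name and the example reliability of 0.60 are hypothetical.

```python
def spearman_brown(reliability: float, length_factor: float) -> float:
    """Predicted reliability when a test's length is multiplied by
    `length_factor` (2.0 = twice as many comparable items, 0.5 = half).

    Spearman-Brown prophecy formula: k*r / (1 + (k - 1)*r).
    """
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must be between 0 and 1")
    if length_factor <= 0:
        raise ValueError("length_factor must be positive")
    k, r = length_factor, reliability
    return k * r / (1 + (k - 1) * r)


if __name__ == "__main__":
    # Hypothetical test with reliability 0.60, shortened or lengthened.
    for k in (0.5, 1.0, 2.0, 3.0):
        print(f"length x{k}: predicted reliability {spearman_brown(0.60, k):.2f}")
```

Doubling the hypothetical 0.60 test predicts a reliability of about 0.75. Each further lengthening adds less, which is one reason "more items" has practical limits.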
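Figure 10.1 contrasts items by how students spread across the answer options (A is correct), presumably broken down by group. Below is a Python sketch of the underlying tabulation: the percent of the upper- and lower-scoring groups choosing each option. It does not classify ambiguity automatically; the resulting pattern is meant to be compared with the figure's panels. The response data and function names are hypothetical.

```python
from collections import Counter


def option_percentages(answers, options="ABCD"):
    """Percent of one group choosing each answer option."""
    if not answers:
        raise ValueError("answers must not be empty")
    counts = Counter(answers)
    return {opt: round(100 * counts[opt] / len(answers), 1) for opt in options}


def distractor_table(upper, lower, options="ABCD"):
    """Map each option to (upper-group %, lower-group %)."""
    up = option_percentages(upper, options)
    low = option_percentages(lower, options)
    return {opt: (up[opt], low[opt]) for opt in options}


if __name__ == "__main__":
    # Hypothetical answers to one item (A is correct) from ten students
    # in the upper-scoring group and ten in the lower-scoring group.
    upper = list("AAAAAAAABC")
    lower = list("AAABBCCDDD")
    for opt, (up_pct, low_pct) in distractor_table(upper, lower).items():
        print(f"{opt}: upper {up_pct:5.1f}%  lower {low_pct:5.1f}%")
```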
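The item difficulty and item discrimination formulas, and the discrimination bands, from the Item Analysis slides translate directly into code. Below is a minimal Python sketch with hypothetical function names. It assumes the upper- and lower-scoring groups have already been formed; the slides do not say how. It treats exactly 20% and 40% as acceptable, which is one reading of "20-40%".

```python
def item_difficulty(uc: int, lc: int, un: int, ln: int) -> float:
    """Difficulty = (Uc + Lc) / (Un + Ln) * 100: percent of both groups correct."""
    if un <= 0 or ln <= 0:
        raise ValueError("group sizes must be positive")
    return 100 * (uc + lc) / (un + ln)


def item_discrimination(uc: int, lc: int, un: int) -> float:
    """Discrimination = (Uc - Lc) / Un * 100, assuming Un == Ln."""
    if un <= 0:
        raise ValueError("group size must be positive")
    return 100 * (uc - lc) / un


def rate_discrimination(d: float) -> str:
    """Bands from the slides: below 20% (including negative) is poor,
    20-40% is acceptable, above 40% is the goal."""
    if d < 20:
        return "poor"
    if d <= 40:
        return "acceptable"
    return "goal"


if __name__ == "__main__":
    # Hypothetical item: 18 of 20 upper-group and 8 of 20 lower-group
    # students answered correctly.
    diff = item_difficulty(uc=18, lc=8, un=20, ln=20)
    disc = item_discrimination(uc=18, lc=8, un=20)
    print(f"difficulty {diff:.0f}%, discrimination {disc:.0f}% ({rate_discrimination(disc)})")
    # -> difficulty 65%, discrimination 50% (goal)
```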
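The Closed-Ended Questions slide lists "easy to code" as an advantage and "subject to possible recording errors" as a disadvantage. Below is a Python sketch of coding one closed-ended item. Answers outside the listed categories are set aside for checking rather than coded. The question, category labels, numeric codes, and the catch-all "other" code are all hypothetical.

```python
from collections import Counter

# Hypothetical closed-ended item: "How many days per week do you exercise?"
# Category labels and numeric codes are illustrative only.
RESPONSE_CODES = {
    "none": 0,
    "1-2 days": 1,
    "3-4 days": 2,
    "5 or more days": 3,
    "other": 9,  # assumed catch-all so every respondent has a category
}


def code_responses(raw_answers):
    """Convert recorded answers to numeric codes.

    Returns (codes, errors): answers that match no listed category are
    set aside with their position so they can be checked as possible
    recording errors rather than silently coded.
    """
    codes, errors = [], []
    for position, answer in enumerate(raw_answers):
        key = answer.strip().lower()
        if key in RESPONSE_CODES:
            codes.append(RESPONSE_CODES[key])
        else:
            errors.append((position, answer))
    return codes, errors


if __name__ == "__main__":
    recorded = ["None", "3-4 days", "3-4 Days ", "5 or more days", "weekly"]
    codes, errors = code_responses(recorded)
    print("codes:", codes)
    print("tally:", Counter(codes))
    print("check:", errors)
```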