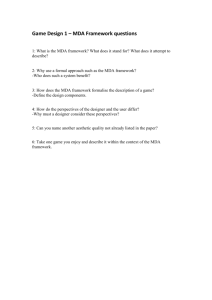

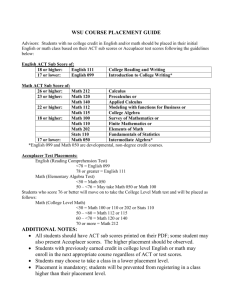

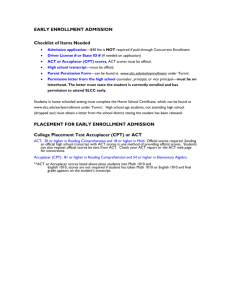

Introducing Diagnostic Assessment at UNISA
Carol Bohlmann
Department of Mathematical Sciences
15 March 2005

Reading Intervention Results Phase I
- Overall reading scores < 60% => unlikely to pass maths exam.
- High reading scores do not guarantee mathematical success.
- Low reading score a barrier to effective mathematical performance.

Results – Phase II
- Reading scores improved by 10% (45% to 56%), but still < 60%.
- Reading skills and mathematics exam results considered in relation to matric English and matric first language (African language) results, and to mean mathematics assignments.
- Strongest correlation was between pretest reading scores and mathematics exam mark; highly significant.

Results – Phase III (2002)
- Reading speed and comprehension test data (n = 78) (voluntary submission)
- Mean reading speed 128 wpm (lowest 14 wpm - 11% in final exam)
- Mean comprehension score approx. 70%

Results – Phase III (2003)
- Reading speed and comprehension test data (n = 1 345) (1st assignment)
- Mean reading speed 115 wpm (many < 20 wpm)
- Mean comprehension score approx. 70%
- Reading skill (total 54): anaphoric reference (17), logical relations, academic vocabulary (in relation to maths), visual literacy

Correlations
- High correlation between anaphor score and total reading score (0,830)
- Moderately high correlation between comprehension and total reading score (r = 0,581; r² = 0,338)
- Total reading score correlated more strongly with exam mark than did other aspects of reading (r = 0,455; r² = 0,207)
- Attrition rate: 27% in 1997 to 64% in 2004 – effect of poor reading skills for drop-outs?

Diagnostic process necessary
- A longer intervention programme had little impact (measured in terms of exam results): students did not/could not use video effectively on their own (feedback).
- Students made aware of potential reading problems might be more motivated to take advantage of a facilitated reading intervention programme.

Project assignment: 2001, 2002, 2003
- Reading/language problems
- Meta-cognitive problems: lack of critical reasoning skills undermines conceptual development
- General knowledge problems: poor general knowledge impedes students’ ability to learn from examples used to illustrate concepts

Diagnostic assessment accepted (2003)
- Assessment internationally and nationally accepted.
- A New Academic Policy for Programmes and Qualifications in Higher Education (CHE, 2001) proposed an outcomes-based education model; commitment to learner-centredness.
- Learner-centredness => smooth interface between learners and learning activities, not possible without a clear sense of learner competence on admission.
- Pre-registration entry-level assessment can facilitate smoother articulation.

The official view …
- Institutions ‘will continue to have the right to determine entry requirements as appropriate beyond the statutory minimum. However, ..., selection criteria should be sensitive to the educational backgrounds of potential students ... .’ (Gov. Gazette 1997)

SAUVCA’s role
- National initiative to develop Benchmark Tests: academic literacy, numeracy, maths
- Piyushi Kotecha (SAUVCA CEO): “This is a timeous initiative as the sector is ensuring that it will be prepared for the replacement of the Senior Certificate by the FETC in 2008. Specifically, national benchmark tests will gauge learner competencies so that institutions can better support and advise students. It will also enable higher education to ascertain early on the extent to which the FETC is a reliable predictor of academic success.

Piyushi Kotecha (cont.)
- This exercise will therefore create a transparent standard that will ensure that learners, teachers, parents and higher education institutions know exactly what is expected when students enter the system. As such it will also allow for greater dialogue between the schooling and higher education sectors.”

Purpose of Diagnostic Assessment
- Better advice and support; scaffolding for ‘high-risk’ students.
- Critical information can address increased access and throughput.
- Admissions and placement testing can lead to a reduction in the number of high-risk students admitted to various degree programmes.
- Benchmark Tests can provide standardised tests without the process dictating how different universities should use the results.

Some difficulties …
- Economies of scale favour large enrolments
- ODL principles embrace all students
- Moral obligation to be honest with potential students and responsible in use of state funding
- Cost – benefit considerations

Establishing the testing process
- Meetings with stakeholders
- Assessment not approved as a prerequisite to study, but as a compulsory (experimental) co-registration requirement: students thus register for the mathematics module and simultaneously for diagnostic assessment, even if they register late and are assessed mid-year.
- BUT later assessment allows less time for remedial action; students advised to delay registration until after assessment.
- Computerised testing the ideal; not initially feasible.

The process (cont.)
- Two module codes created for the two assessment periods: (i) supplementary exam period in Jan and (ii) end of first semester
- Procedures managed by Exam Dept
- Explanatory letter for students, Marketing; Calendar; Access Brochure

Selecting content: some considerations
- Internationally accepted standards
- Assessment tools adapted to suit specific UNISA requirements
- Reliability and validity important criteria
- Need for practical, cost-effective measures
- Various options investigated, e.g. AARP (UCT); ACCUPLACER and UPE adaptations; UP

UCT & UPE
- AARP possibly most appropriate (trial undertaken at UNISA in 1998), but time-consuming and not computer-based.
- UPE demographically and academically similar to UNISA; research into placement assessment since 1999.
- ACCUPLACER (USA/ETS) found appropriate.

ACCUPLACER at UPE
- Computerised adaptive tests (unique for each student) for algebra, arithmetic and reading (pen-and-paper options available – only one test).
- A profile developed across all the scores in the battery (including school performance)
- Regression formulae and classification functions used to classify potential students with respect to risk. Formulae based on research.

ACCUPLACER Reading Comprehension
- Established reliability and validity
- Possible bias: seven questions possibly ambiguous or with cultural bias - did not detract from items’ potential to assess construction of meaning from text.
- Left in original format for first round of testing; can delete or adapt later.
- Own standards

Basic Arithmetic Test
- ‘Home grown’
- Testing for potential
- Assesses understanding rather than recall
- Items based on misconceptions that are significant barriers to understanding the content and recognised problem areas
- Experiment on benefit of drill-and-practice
- Reliability, validity to be established

Test format and data capture
- Three hours, 35 MCQs (four options) in each category (Total 70)
- Mark reading sheets; return papers
- Assignment section captured marks
- Computer services processed marks and determined categories
- Marks made public

Aspects assessed in ARC

| Aspect tested | Questions |
|---|---|
| Causal relations | 2, 4 |
| Contrastive relations | 3, 7, 8, 23 |
| Recognition of sequence | 5 |
| Interpretation of implied or inferred information | 10, 14, 15, 17, 20, 27, 32, 33, 35 |

Aspects assessed in ARC (cont.)

| Aspect tested | Questions |
|---|---|
| Comprehension/interpretation of factual information; detailed/general | 9, 11, 23, 28, 29 |
| Academic vocabulary | All except 5, 26 |
| Number sense | 23 |
| Recognition of contradiction, inconsistency | 12 |
| Substantiation | 1, 6 |

Aspects assessed in BAT

| Aspect tested | Questions |
|---|---|
| Simple arithmetic operations (whole numbers) | 19 |
| Simple arithmetic operations (fractions/decimals) | 12 |
| Pattern recognition | 3 |
| Number sense | 5 |
| Conversion of units | 3 |
| Academic (maths) / Technical vocab | 3 / 4 |

Aspects assessed in BAT (cont.)

| Aspect tested | Questions |
|---|---|
| Comparison | 10 |
| Time - how long / Time - when | 3 / 2 |
| ‘Translation’ from words to mathematical statements | 17 |
| Recognition of insufficient or redundant information | 4 |
| Learning from explanation | 2 |
| Spatial awareness | 3 |
| Insight | 14 |

Grading criteria
- Three categories considered (explained in T/L 101)
- Category 53: Students likely to be successful with no additional assistance.
- Category 52: Students likely to be successful provided they had support.
- Category 51: Students unlikely to be successful without assistance beyond that available at the university.
- Criteria for classification based on ACCUPLACER guidelines and empirical results following Phases I, II and III of the reading intervention.

Criteria - Reading Compreh.
- Conversion table in ACCUPLACER Coordinator’s Guide converts raw score out of 35 to a score out of 120 - some form of weighting takes place.
- 0 to 4 out of 35 equivalent to 20 points.
- Increment between the numbers of correct answers increases gradually from 1 to 4.
- ‘Reward’ for obtaining a greater number of correct answers.

ACCUPLACER recommendations
- Three categories:
  - Weakest: 51 out of 120 ~ 31%
  - Moderate: 80 out of 120 ~ 60%
  - Good: 103 or higher ~ 83%
- Scores reflect different reading skills, outlined in Coordinator’s Guide.
- From Phases I and II of the reading project: 60% ~ threshold below which reading skills too weak to support effective study.

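The percentages quoted next to the converted scores appear to follow from the 20-point floor of the scale: each figure above matches the score minus 20, taken as a share of the remaining 100 points. The Python sketch below checks that reading. The function name `scaled_to_percent` is hypothetical, and the formula is an inference from the quoted figures, not something stated in the Coordinator’s Guide.

```python
SCALE_FLOOR = 20   # 0 to 4 correct answers out of 35 are worth 20 points
SCALE_MAX = 120    # top of the converted scale


def scaled_to_percent(score):
    """Express a converted score (20 to 120) as a percentage of the usable range.

    Assumption: the percentage is measured from the 20-point floor.
    """
    if not SCALE_FLOOR <= score <= SCALE_MAX:
        raise ValueError("converted scores lie between 20 and 120")
    return 100 * (score - SCALE_FLOOR) / (SCALE_MAX - SCALE_FLOOR)


# The three recommended category boundaries quoted above.
for label, score in [("Weakest", 51), ("Moderate", 80), ("Good", 103)]:
    print(f"{label}: {score} out of 120 ~ {scaled_to_percent(score):.0f}%")
```

Under this assumption the three boundaries come out at 31%, 60% and 83%, in line with the figures on the slide.
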
Our score
- Giving students the ‘benefit of the doubt’, the upper boundary of the lowest category in the MDA (students at risk with respect to reading) was pitched lower, at 70 out of 120 (~ 50%).

Comparison of boundaries

| High-risk category | Raw score (0 – 35) | Converted score (0 – 120) | % |
|---|---|---|---|
| Upper boundary UNISA | 22 | 67 | 47 |
| | 23 | 71 | 51 |
| Upper boundary ACCUPLACER | 25 | 79 | 59 |
| | 26 | 84 | 64 |

Criteria - Basic Arithmetic Test
- Questions weighted: intention to enable students who demonstrated greater insight to score better than those who had memorised procedures without understanding.
- Simplest, procedural questions (such as addition or multiplication): W = 1 or 1,5
- Questions requiring some insight and interpretation of language: W = 2, 2,5 or 3
- Raw score out of 35 ~ final score max 69

Weight distribution of BAT questions

| Category | Weight | % of total | No. items |
|---|---|---|---|
| Easy | 1 | 10 | 7 |
| Easy | 1,5 | 13 | 6 |
| Mod easy | 2 | 30 | 10 |
| Mod diff | 2,5 | 22 | 6 |
| Diff | 3 | 25 | 6 |

Aspects assessed: simple arithmetic, fractions/decimals, number sense; language, time and money, insight and pattern.

Cumulative totals
- Score up to 53% by correctly answering easy to moderately easy items (W = 1, 1,5 or 2)
- Score up to 75% by correctly answering ‘easy’, ‘moderately easy’ and ‘moderately difficult’ items (W up to 2,5)
- Score over 75% only if ‘difficult’ items (W = 3) also answered correctly.

Setting the lower limit
- 10 items (17% of total) computational - no reading skills. Possible for all students to answer these questions correctly.
- 25 items (83% of total) dependent on reading and other skills.
- 60% ‘threshold’ => 17% + (60% of 83%), i.e. 67%, set as the lower boundary for BAT (~ raw score 46 out of 69).
- Students with < 46 at risk with respect to numerical and quantitative reasoning.

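The arithmetic behind the lower boundary can be retraced in a few lines. This Python sketch assumes that both percentages are shares of the weighted maximum of 69 and that each step is rounded to the nearest whole number. The constant and function names are illustrative only.

```python
MAX_WEIGHTED_SCORE = 69    # weighted maximum for the 35 BAT items
COMPUTATIONAL_SHARE = 17   # % of total from the 10 purely computational items
READING_SHARE = 83         # % of total from the 25 reading-dependent items
READING_THRESHOLD = 0.60   # 60% threshold taken from the reading project


def bat_lower_boundary():
    """Return the lower boundary as (percentage, weighted score out of 69)."""
    # Full credit for the computational items plus 60% of the rest: 17 + 49.8
    percent = COMPUTATIONAL_SHARE + READING_THRESHOLD * READING_SHARE
    rounded_percent = round(percent)  # 66.8 rounds to 67
    # 67% of 69 is 46.23, which rounds to 46
    score = round(rounded_percent / 100 * MAX_WEIGHTED_SCORE)
    return rounded_percent, score


percent, score = bat_lower_boundary()
print(f"Lower boundary: {percent}% ~ {score} out of {MAX_WEIGHTED_SCORE}")
```

With these assumptions the sketch reproduces the 67% and 46 out of 69 given above.
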
Setting the upper limit
- ARC top category begins at score of 103 (approximately 83%)
- BAT equivalent: 57 out of 69
- No other empirical evidence – 57 set as cut-off point for high achievement in BAT

MDA categories

| Category | Reading (weighted) | | BAT (weighted) |
|---|---|---|---|
| 51 | S < 70 | OR | S < 46 |
| 53 | S > 103 | AND | S > 59 |
| 52 | All other scores | | |

The assessment process
- Procedural issues
- Problems with co-registration requirement
- Several administrative problems

ARC results
- Good item-test correlations. Only five questions with correlations of below 0,5.
- Students scored reasonably well on most of the potentially problematic items. Only three of these had low item-test correlations.

BAT results
- Weaker item-test correlations than Reading Comprehension score.
- Low (< 0,30), moderate and high item-test correlations in all question categories.
- Reading may play greater role in categorisation.

Consolidation of results
January:

| Category | No. of students | % |
|---|---|---|
| 53 | 10 | 3% |
| 52 | 93 | 29% |
| 51 | 223 | 68% |
| Total | 326 | |

Note: Oct 03/Jan 04 exams: 76% failed

Consolidation (cont.)
June (after exposure to mathematics and English study material):

| Category | No. of students | % |
|---|---|---|
| 53 | 35 | 4% |
| 52 | 176 | 21% |
| 51 | 623 | 75% |
| Total | 834 | |

Further analysis of results
Assignments (January group):
- Category 51 mean = 39%
- Category 52 mean = 48%
- Category 53 mean = 65%

Exam results (all students)
- Registered: 1 518
- Not admitted to the exam: 912
- Obtained exam admission: 606
- Wrote exam: 551
- Passed: 162 (October: 145; January: 17)
- Exam mean: 27%
- MDA students with exam results: 463
- MDA exam mean: 35%

Exam results by risk category

| Category | No. of students | Mean exam score |
|---|---|---|
| 51 | 332 (72%) | 30% |
| 52 | 106 (23%) | 45% |
| 53 | 25 (5%) | 57% |

Exams – no ARC

| Category | No. of students | Mean exam score |
|---|---|---|
| 51 | 136 (29%) | 26% |
| 52 | 189 (41%) | 32% |
| 53 | 138 (30%) | 48% |

No exam admission

| Cat. | No. of MDA students in category (n = 1 160) | No. of MDA students without admission (n = 698) | % of MDA students in category without exam admission |
|---|---|---|---|
| 51 | 846 | 514 (74%) | 514/846 = 61% |
| 52 | 269 | 164 (23%) | 164/269 = 61% |
| 53 | 45 | 20 (3%) | 20/45 = 44% |

Comparison between students who wrote/did not write MDA

| | Wrote MDA (n = 463) | Did not write MDA (n = 101) | Did not write / wrote |
|---|---|---|---|
| Pass (n = 162) | 125 (77%) | 37 (23%) | 37/125 = 30% |
| Fail (n = 401) | 337 (84%) | 64 (16%) | 64/337 = 19% |

Implications of assessment
- Counselling essential, especially for Category 51 and 52 students.
- All potential support options dependent on staff and resource allocation.
- Options: The Institute for Continuing Education (ICE): advice regarding alternative directions of study, or measures to upgrade academic skills before studying. Initially agreed to investigate such options, but no progress to date.

Implications of assessment (cont.)
- National Tutorial Support Coordinator seemed in favour of using information obtained in the assessment process to inform the tutorial programme. No information forthcoming on support options or the necessary data collection procedures.
- The Bureau for Student Counselling and Career Development (BSCCD) staff willing to assist where possible, but staff not deployed at all centres.

Implications of assessment (cont.)
- The Povey Centre (instruction in English language proficiency, reading and writing skills) possibly able to provide some reading instruction via the Learning Centres, at no additional charge (other than the basic Learning Centre registration fee required from all students who registered for tutorial classes) (START programme).
- No clarity yet regarding extent to which this will be rolled out; impact for mathematics to be investigated.

Implications for qualitative research
- Psychological implications of ‘going to university’ but needing first to be taught how to count and read?
- Cost implications of referral?
- Gate keeping or gateway?

Pre-registration assessment…
- … is critically important.
- Costs, benefits, advantages and disadvantages of instituting diagnostic assessment for mathematics at UNISA need to be thoroughly investigated.
- Information regarding the implementation process must be well analysed and utilised.
- True access depends on providing students with appropriate career guidance.
- Ongoing research (quantitative & qualitative) into specific test aspects and components essential.
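
As a closing illustration, the MDA category rules stated earlier (Category 51 if the weighted reading score is below 70 or the weighted BAT score is below 46, Category 53 if reading is above 103 and BAT is above 59, Category 52 otherwise) can be written as a small function. This is a Python sketch, not the procedure Computer Services actually ran. The function and parameter names are hypothetical, and the cut-offs are defaults copied from the MDA categories table. They are left as parameters because that table gives 59 as the upper BAT cut-off, while the slide on setting the upper limit gives 57.

```python
def mda_category(reading, bat, *, reading_low=70, bat_low=46,
                 reading_high=103, bat_high=59):
    """Classify a student into MDA category 51, 52 or 53.

    reading: weighted reading comprehension score (20 to 120)
    bat:     weighted Basic Arithmetic Test score (0 to 69)
    Cut-off defaults are taken from the MDA categories table (assumption:
    the strict inequalities shown there are intended).
    """
    # High risk: below the lower boundary on either test.
    if reading < reading_low or bat < bat_low:
        return 51
    # Low risk: above the upper boundary on both tests.
    if reading > reading_high and bat > bat_high:
        return 53
    # Everything in between: likely to succeed with support.
    return 52


# Hypothetical score pairs, for illustration only.
for reading, bat in [(65, 60), (85, 50), (110, 62)]:
    print(reading, bat, "-> Category", mda_category(reading, bat))
```

Passing `bat_high=57` shows how classifications would shift if the cut-off from the upper-limit slide were used instead.
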