Threats and Countermeasures: Improving Web Application Security

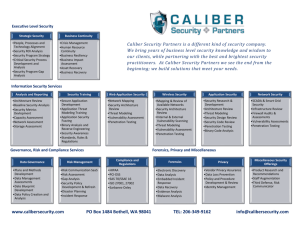

advertisement

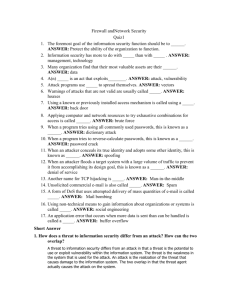

Threat Modeling

Terminology

Protected Resources / Asset: Things to protect (tangible or intangible)

Entry/Exit Points: Ways to get at an asset

Threat: Risks to an asset

Attack / exploit: An action taken that harms an asset

Vulnerability: Specific ways to execute the attack

Risk: Likelihood that a vulnerability could be exploited

Mitigation / Countermeasure: Something that addresses a specific vulnerability

We can mitigate vulnerabilities…

…but the threat still exists!!!

Terminology Example

Asset(s): $5,000,000 under the mattress in guest bedroom

Entry/Exit Points:

Front & Side Doors

Windows (guest bedroom & elsewhere in residence)

Threat(s): Losing the $5,000,000

Note: a vulnerability can be shared across attacks(!)

| Threat | Attack | Vulnerability | Risk (0-100) |
| --- | --- | --- | --- |
| Losing the $5,000,000 | Burglar breaks in and steals money | Plate glass windows | 95 |
| | | Windows can be lifted out of frame | 85 |
| | | No dead bolt on doors / doors can be kicked in | 75 |
| | | No alarm system | 100 |
| | House burns down | No alarm system | 100 |

QUESTION…

ASSUMING you could engage in repeated (destructive) testing…

How would you test the mitigation of each of the vulnerabilities?

During development? At deployment? Over time?


Terminology Example (Cont’d)

Let's apply some mitigations to our vulnerabilities

QUESTION: How would you test the effectiveness of each mitigation?

BIGGER QUESTION: How would you verify the mitigation effectiveness beyond initial deployment???

| Vulnerability | Risk (0-100) | Mitigation + $$$ at Deployment | Revised Risk | Cost / delta |
| --- | --- | --- | --- | --- |
| Plate glass windows | 95 | Lexan Glass, $5,000 | 70 | $200 |
| Windows can be lifted out of frame | 85 | Steel Frames, $1000 | 65 | $50 |
| No dead bolt on doors / doors can be kicked in | 75 | Install Dead Bolts, $50 | 70 | $10 |
| No alarm system | 100 | Install Alarm System, $200 | 80 | $10 |
| No alarm system | 100 | Install Alarm System, $200 | 80 | $10 |
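The Cost / delta column appears to be the deployment cost divided by the number of risk points removed (for example, $5,000 / (95 - 70) = $200). A minimal C++ sketch of that arithmetic, using the figures from the table; the struct and function names are hypothetical and only illustrate the calculation:

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical record for one row of the mitigation table.
struct Mitigation {
    std::string name;
    double deploy_cost;  // dollars at deployment
    int risk_before;     // 0-100
    int risk_after;      // 0-100 (revised risk)
};

// Dollars spent per risk point removed. Returns -1 when the mitigation
// removes no risk, so callers never divide by zero.
double cost_per_risk_point(const Mitigation& m) {
    const int delta = m.risk_before - m.risk_after;
    if (delta <= 0) return -1.0;
    return m.deploy_cost / delta;
}

// The four distinct mitigations from the table.
std::vector<Mitigation> example_mitigations() {
    return {
        {"Lexan glass", 5000.0, 95, 70},
        {"Steel frames", 1000.0, 85, 65},
        {"Dead bolts", 50.0, 75, 70},
        {"Alarm system", 200.0, 100, 80},
    };
}
```

Sorting mitigations by this figure gives a rough "cheapest risk reduction first" ordering, although it says nothing about whether the revised risk is acceptable.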

KEY POINT: The threat doesn’t go away!!!

Ways I can defeat each of those mitigations:

User invites Tracy Monteith into home. Tracy sees the lumpy mattress, investigates, leaves abruptly and is last seen heading to the Bahamas to “retire”

That is… I bypass all of the security measures explicitly with someone I “trust” (it’s Tracy; big mistake there, eh?)

User forgets to lock the door / windows (nobody notices)

Smoke detector batteries expire (nobody notices)

That is… Is your mitigation still turned on past deployment? (A: Auditing / Logging / Ongoing Verification Testing)

What about a meteor (vaporizing house and asset!)

That is… there will always be “new” attacks around the corner!

Your Threat Model / Test Plan is a living document!

Testing during development / deployment isn’t enough

Mitigations may have vulnerabilities themselves

Although you should probably take this into account when calculating the initial risk reduction for the mitigation

| Mitigation | Revised Risk | Ongoing Risk to Mitigation | Revised Risk (MAINTENANCE!) |
| --- | --- | --- | --- |
| Lexan Glass | 70 | SWAT-style door ram | 85 (ram is expensive) |
| Steel Frames | 65 | Chainsaw cuts around the frames | 95 (chainsaws are cheap) |
| Install Dead Bolts | 70 | SWAT-style door ram | 85 (ram is expensive) |
| Install Alarm System | 80 | Cut power & phone lines | 98 (bolt cutters are cheap) |
| Install Alarm System | 80 | Cut power & phone lines | 98 (bolt cutters are cheap) |
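One way to read the maintenance column is as the risk that remains once attacks on the mitigation itself are counted. Under that assumption, the net benefit is the original risk minus the maintenance-revised risk, and the cost per point can be recomputed. The sketch below uses hypothetical function names; the inputs are the original risk, deployment cost, and maintenance-revised risk from the example.

```cpp
#include <cassert>

// Net risk points removed once attacks on the mitigation itself are
// counted: original risk minus the maintenance-revised risk.
int net_risk_reduction(int original_risk, int maintenance_risk) {
    return original_risk - maintenance_risk;
}

// Deployment cost per net risk point; -1 when nothing is gained
// (the maintenance-revised risk is at or above the original risk).
double cost_per_net_point(double deploy_cost, int original_risk,
                          int maintenance_risk) {
    const int delta = net_risk_reduction(original_risk, maintenance_risk);
    return delta > 0 ? deploy_cost / delta : -1.0;
}
```

With the example's figures, Lexan glass (95 down to 85, $5,000) costs $500 per net point and the alarm system (100 down to 98, $200) costs $100 per net point, noticeably worse than the at-deployment figures.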

Sometimes, though, the mitigation may not map 1:1 to a vulnerability or an attack… but applies to the THREAT

Consider…

Depositing $5,000,000 in a bank account

Cost: Not good, have to pay Uncle Sam 38%

Risk: FDIC only insures up to ~$100,000 (???)

Mitigate: by depositing $100,000 in ~30 banks (after tax)

Try a safe deposit box!

Cost: Better, and Uncle Sam doesn’t know what’s in the box!

Risk: Could still be robbed (harder though, requires random luck that the burglar opens your box)

Mitigate: Take out insurance to cover risk (compensates for loss of asset)

One more term to define…

Trust Boundaries: Boundaries that surround the asset and require a specified degree of trust to access

GREAT PLACE TO TEST!

Code Red, SQL Slammer, Blaster, etc. were all cases of allowing unvalidated user input to cross into privileged trust boundaries

And before we begin on threat modeling itself:

Two types of Security Issues (to model / test)

Input trust issues

Everything else!

Threat Modeling Methodologies

All basically saying the same thing

Some differences in terminology

Some differences reflect evolution over the last 2 years

2 Years Old

1 year old

+/- 2 weeks old

3 Phases of Threat Modeling

Define: The first phase defines security-centric information which is used later to model threats

Model: The second phase constructs Threat Events

Analyzing and prioritizing decomposition

Measure Threats: The third phase assesses probability, impact, risk, and cost and value of mitigation

Measuring Risk (DREAD) value before and after Mitigation

Documenting estimated costs of Mitigation

Allows DREAD delta comparison to Mitigation cost

Threat Modeling Process

(Writing Secure Code + Threat Modeling)

Collect Background Information: Use Scenarios, External Dependencies, Implementation Assumptions, External Security Notes, Internal Security Notes

Model the System: Entry Points, Assets, Roles and Identities, Trust Levels, Data Flow Diagrams/Process Models

Determine Threats: Identify Threats, Analyze Threats/Determine Vulnerabilities

Defining the System

USE SCENARIOS

Actor: User, Admin, Hacker, Someone

Interaction: Authenticates, Requests, Writes, Reads

Service: IIS, SQL, WS, etc.

EXTERNAL DEPENDENCIES

Feeds: Inbound (Pull) <implies trust>, Outbound (Push), Feedstore vs. External Vendor?

Applications: Who consumes your service

Environments: Extranet vs. Intranet vs. Internet

Defining the System (Cont’d)

IMPLEMENTATION ASSUMPTIONS

Platform: W2K vs. W2K3 vs. ???

Technologies: SQL 2000 vs. ???

Protocols: SSL vs. IPSec

Standards

Known mitigating factors from Design Time

EXTERNAL SECURITY NOTES

What do external entities need to be aware of in the context of security with regards to your application?

Who depends on your application?

Can they trust your outbound feed(s)?

Who do they need to contact re: security?

INTERNAL SECURITY NOTES

Document security strategy notes: Priorities, Challenges, Exceptions, Constraints


Modeling the System

ENTRY POINTS

Services: IIS, SQL, MSMQ, File, WS

Objects or Classes: DLLs, Tables, Views, etc.

Methods: Procedures, Routines, etc.

ASSETS

Both concrete and abstract, that could be targets of an attack

A concrete example might be corporate data stored in a database.

A more abstract example might be network coherency in a peer-to-peer application.

Assets should be nouns.

Modeling the System (Cont’d)

ROLES AND IDENTITIES

Define what roles exist in the application

Identify what authentication mechanism is used

Allow weighting of groups to assess impact and opportunity due to size of population

TRUST LEVELS

Trust levels characterize either entry points or assets.

For entry points, the trust level describes the external entity that can interface with the entry point.

For assets, the trust level indicates what privilege level would normally be able to access the resource.

The type of trust level is specific to the entry point or protected resource

Modeling the System (Cont’d)

DATA FLOW / DECOMPOSITION

Time to break down the application

During initial design we don’t know much detail, so we typically only go to the service level and partially identify ‘logical’ objects

We record this decomposition as “Entry Points”: components where an entity interfaces with the application

IIS6 Context Diagram

[Figure: context diagram. External entities: Browser, Administrator. Process: Web Server. Data flows: Request for a page, Authentication Data, Authentication Request, Page contents, Configuration data, Logs and alerts.]

IIS6 Level-0 DFD

[Figure: level-0 data flow diagram. External entities: Browser, Administrator. Processes: 1.0 Authentication Module, 2.0 Request Processor, 3.0 Configuration manager, 4.0 Logging Engine. Data stores: Authentication Database, Configuration Data, Webroot, Logs. Data flows: 1.0 Page Request, 2.0 Configuration Data, 3.0 Authentication query, 4.0 Authentication Request, 5.0 Authentication Data, 6.0 User Information, 7.0 Authentication Result, 8.0 Page data, 9.0 Page contents, 10.0 Log data, 11.0 Configuration Data, 12.0 Configuration Data, 13.0 Configuration changes, 14.0 Configuration Data, 15.0 Log configuration, 16.0 Log Request, 17.0 Log Data, 18.0 Logs and alerts.]


Threat Profile

Where to BEGIN?

By Network / Server / Application

Document your assumptions / Service Level Agreements(!)

By Technology / Service

By type of threat (CIA)

| | Confidentiality (who can read) | Integrity (who can write) | Availability (who can access) |
| --- | --- | --- | --- |
| Network | TE1 | TE2 | TE3 |
| Server | TE4 | TE5 | TE6 |
| Application | TE7 | TE8 | TE9 |

Threat Profile (Alternate View)

From: Improving Web Application Security—Threats and Countermeasures

For each threat…

What are the conditions that allow the threat to take place (THREAT TREES)

What are the attacks?

How would you classify the attack (STRIDE)

What are the ENTRY / EXIT Points?

What protected resources / assets are exposed?

Enumerate vulnerabilities that allow the attack

Evaluate RISK before mitigation…

…evaluate risk after mitigation

…how would you verify / test the mitigation?
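The checklist above maps naturally onto one record per threat event. The following is only a sketch of such a record, with hypothetical type and field names, plus a helper that lists the attacks for which no verification test has been written yet:

```cpp
#include <cassert>
#include <string>
#include <vector>

// STRIDE classification of an attack.
enum class Stride {
    Spoofing, Tampering, Repudiation,
    InformationDisclosure, DenialOfService, ElevationOfPrivilege
};

// Hypothetical record holding the answers to the per-threat questions.
struct ThreatEntry {
    std::string threat;
    std::string attack;
    Stride category;
    std::vector<std::string> entry_points;
    std::vector<std::string> assets;
    std::vector<std::string> vulnerabilities;
    int risk_before;                // risk before mitigation
    int risk_after;                 // risk after mitigation
    std::string verification_test;  // empty means no test planned yet
};

// Returns the attacks whose mitigation has no verification test recorded.
std::vector<std::string> attacks_missing_tests(
        const std::vector<ThreatEntry>& model) {
    std::vector<std::string> missing;
    for (const auto& entry : model) {
        if (entry.verification_test.empty()) missing.push_back(entry.attack);
    }
    return missing;
}
```

Keeping the test description next to the risk figures makes it easy to spot mitigations that were costed but never verified.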

Threat Trees

Threat Modeling Tool

Summary

Threat Modeling Tool

http://msdn.microsoft.com/security/securecode/threatmodeling/default.aspx

A quality threat model should feed directly into your test plans

Validating the mitigation (as intended)

Testing the mitigation (in ways not intended)

No matter what mitigation you choose, the threat to the asset doesn’t go away!!!

Questions?

Boneyard

(Risk Analysis/DREAD)

Calculation of Risk

(How does DREAD do this?)

Risk = Impact * Probability

Impact = Damage + Affected Users (the D and A in DREAD)

Damage

Note that Damage needs to be assessed in terms of Confidentiality, Integrity and Availability

Affected Users (how large is the user base affected?)

Probability = Reproducibility + Exploitability + Discoverability (the R, E and second D in DREAD)

Reproducibility (how difficult to reproduce? Is it scriptable?)

Exploitability (how difficult to use the vulnerability to effect the attack?)

Discoverability (how difficult to find?)

Calculation of Risk (Cont’d)

Let’s establish a few assumptions

In the Risk equation, Impact (Damage) and Probability are equally important

The factors, as well as the result, should have mathematical value (in order to assist in easily determining priorities)

Therefore, in any formula giving each of the five factors equal weight, an imbalance occurs giving Probability a 3:2 representation over Damage

To mitigate:

e.g. Risk = Impact (D + A) * Probability (R + E + D)

while ensuring the summed qualitative scales for Impact and Probability are equal (e.g. D + A tops out at 10 and R + E + D tops out at 10), which results in a scale of 6-100 when the two sums are multiplied
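A minimal sketch of this calculation in C++, assuming the per-factor ranges from the rating tables in this section (Damage 1-5, Affected Users 1-5, Reproducibility 1-3, Exploitability 1-4, Discoverability 1-3). The struct and function names are hypothetical:

```cpp
#include <cassert>

// One set of DREAD ratings. Ranges are taken from the rating tables.
struct DreadScores {
    int damage;           // 1-5
    int affected_users;   // 1-5
    int reproducibility;  // 1-3
    int exploitability;   // 1-4
    int discoverability;  // 1-3
};

bool in_range(int value, int lo, int hi) {
    return value >= lo && value <= hi;
}

// Risk = Impact (D + A) * Probability (R + E + D).
// Returns -1 if any rating is outside its scale.
int dread_risk(const DreadScores& s) {
    if (!in_range(s.damage, 1, 5) || !in_range(s.affected_users, 1, 5) ||
        !in_range(s.reproducibility, 1, 3) ||
        !in_range(s.exploitability, 1, 4) ||
        !in_range(s.discoverability, 1, 3)) {
        return -1;
    }
    const int impact = s.damage + s.affected_users;             // 2-10
    const int probability = s.reproducibility + s.exploitability +
                            s.discoverability;                   // 3-10
    return impact * probability;                                 // 6-100
}
```

Because both sums top out at 10, neither Impact nor Probability dominates the product, which is the balance the assumptions above ask for.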

Damage

Damage has 3 categories and each has been given its own value ranking

Confidentiality (Read)

Vulnerability or exposure of sensitive data or process (e.g. application or business logic)

Integrity (Write)

Compromise of application or system data integrity (e.g. log tampering, data modification)

Availability

Degradation or denial of service

Damage

Each category is rated on a five-point scale: 5 – Critical, 4 – High, 3 – Moderate, 2 – Minor, 1 – Trivial.

Confidentiality (Read): rated by the data elements* exposed. Examples: SSN, CreditCard, Passwords (Critical); Bank Acct #. Other ratings: data elements as defined.

Integrity (Write): rated by the data elements that can be modified. Examples: SSN, CreditCard, Bank Account # (Critical). Other ratings: data elements as defined.

Availability (access): ranges from Domain Control and Host Control at the high end, through Partial Denial / Degradation, down to Nuisance (each as defined).

Affected Users

| Value | Roles (Confidentiality, Integrity) | Population (Availability) |
| --- | --- | --- |
| 5 | Admin | All |
| 4 | Power Users | Most |
| 3 | Group | Many |
| 2 | User | Few |
| 1 | Public | None |

Reproducibility

| Value | Description |
| --- | --- |
| 1 - Complex | The attack is very difficult to reproduce, even with knowledge of the security hole |
| 2 - Moderate | The attack can be reproduced, but only with a timing window and a particular race situation |
| 3 - Simple | The attack can be reproduced every time and does not require a timing window |

Exploitability

| Value | Description |
| --- | --- |
| 1 - Expert | The exploit is unpublished, difficult to execute and requires significant insider knowledge and technical expertise, or multiple vulnerabilities must be exploited before any impact can be realized. |
| 2 - Journeyman | The exploit is unpublished, difficult to execute and requires significant insider knowledge or technical expertise. |
| 3 - Adept | The exploit is known (including technical and/or insider information) but is difficult to execute and no exploit code is available. |
| 4 - Novice | The exploit is well known and an automated script has been provided that script-kiddies can run to exploit the vulnerability. |

Discoverability

| Value | Description |
| --- | --- |
| 1 - Difficult | The vulnerability is obscure, and it is unlikely that users will work out damage potential |
| 2 - Moderate | The vulnerability is in a seldom-used part of the product, and only a few users should come across it. It would take some thinking to see malicious use |
| 3 - Easy | Published information explains the attack. The vulnerability is found in commonly used features and is very noticeable |

A new spin on DREAD

Use DREAD to calculate

The risk before mitigation

The risk after mitigation

The cost of implementing the mitigation AND the cost to maintain the mitigation

NOW you can make an accurate assessment of the best mitigation for a given vulnerability!!!

Example: Using SSL or IPSec to secure IIS – SQL Server

SSL: Low cost to turn on, but can easily be turned off (what is the cost to validate the mitigation over time?)

IPSec: High cost to turn on initially, but low maintenance cost + INCREASED SECURITY

Perf impact on both machines, but accelerator cards can easily mitigate this

Boneyard (Misc)

STRIDE

Use STRIDE in several scenarios to identify the categories of threat

S: Spoofing identity

T: Tampering with data

R: Repudiation

I: Information disclosure

D: Denial of service

E: Elevation of privilege

An alternate taxonomy

(Patterns and Practices group)

Big Issues

There are two types of security issues

Input trust issues

Everything else!

Buffer Overruns

Integer Overflow Attacks

Non-admin issues

How common are BOs?

Approx 33% of bulletins remedy BOs

[Chart: Bulletins (2003) vs. Buffer Overruns for Microsoft, Sun, RedHat and Debian; vertical axis 0 to 200]

Source: Vendor Web sites, 01Jan03 – 31Dec03

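Exploiting the overrun

[Figure: stack layout before and after the attack. Healthy stack: buff, SFP, ret, szData. Pass big buffer. Stack after attack: hand-crafted code, followed by a pointer to the start of the buffer where ret used to be. Execute from here on function return!]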

Terms

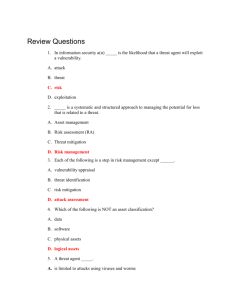

CIA (Defensive Goals)

Confidentiality (who can read what)

Integrity (who can write what)

Availability (who can access what)

Breaking Apart the System

Secure the Network

Secure the Host/Server

Secure the Application

DREAD (Measure of Risk)

Damage (Impact)

Reproducibility (Probability)

Exploitability (Probability)

Affected Users (Impact)

Discoverability (Probability)

Buffer overrun example: Index Server ISAPI (Code Red)

    // cchAttribute is the character count of user input
    WCHAR wcsAttribute[200];

    // BUG: sizeof yields the size in bytes (400), not the WCHAR count (200)
    if ( cchAttribute >= sizeof wcsAttribute )
        THROW( CException( DB_E_ERRORSINCOMMAND ) );

    DecodeURLEscapes( (BYTE *) pszAttribute, cchAttribute,
                      wcsAttribute, webServer.CodePage() );
    ...
    void DecodeURLEscapes( BYTE * pIn, ULONG & l,
                           WCHAR * pOut, ULONG ulCodePage ) {
        WCHAR * p2 = pOut;
        ULONG l2 = l;
        ...
        for( ; l2; l2-- ) {
            // write to p2 based on pIn, up to l2 bytes

Buffer overrun example: Code Red (corrected length check)

    // cchAttribute is the character count of user input
    WCHAR wcsAttribute[200];

    // Compare against the element count, not the byte count
    if ( cchAttribute >= sizeof wcsAttribute / sizeof(WCHAR) )
        THROW( CException( DB_E_ERRORSINCOMMAND ) );

    DecodeURLEscapes( (BYTE *) pszAttribute, cchAttribute,
                      wcsAttribute, webServer.CodePage() );
    ...
    void DecodeURLEscapes( BYTE * pIn, ULONG & l,
                           WCHAR * pOut, ULONG ulCodePage ) {
        WCHAR * p2 = pOut;
        ULONG l2 = l;
        ...
        for( ; l2; l2-- ) {
            // write to p2 based on pIn, up to l2 bytes
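The difference between the two length checks can be reproduced in isolation. The sketch below uses standard `wchar_t` as a stand-in for `WCHAR` (an assumption; `wchar_t` is 2 bytes on Windows and commonly 4 elsewhere), and the function names are hypothetical:

```cpp
#include <cassert>
#include <cstddef>

constexpr std::size_t kBufferChars = 200;

// Mirrors the flawed check: compares a character count against a size
// in bytes, so counts between 200 and the byte size slip through.
bool byte_check_accepts(std::size_t cch) {
    wchar_t buffer[kBufferChars];
    return !(cch >= sizeof buffer);
}

// Mirrors the corrected check: compares against the element count.
bool count_check_accepts(std::size_t cch) {
    wchar_t buffer[kBufferChars];
    return !(cch >= sizeof buffer / sizeof buffer[0]);
}
```

An input of 300 characters is accepted by the first check and rejected by the second, even though the buffer only holds 200 characters.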

Buffer Overrun Examples

DCOM Remote Activation (MS03-026)

    // Sitting on port 135 – The Internet
    error_status_t _RemoteActivation(..., WCHAR *pwszObjectName, ... ) {
        *phr = GetServerPath( pwszObjectName, &pwszObjectName);
        ...
    }

    HRESULT GetServerPath(WCHAR *pwszPath, WCHAR **pwszServerPath ){
        WCHAR * pwszFinalPath = pwszPath;
        WCHAR wszMachineName[MAX_COMPUTERNAME_LENGTH_FQDN + 1];
        hr = GetMachineName(pwszPath, wszMachineName);
        *pwszServerPath = pwszFinalPath;
    }

    HRESULT GetMachineName(
        WCHAR * pwszPath,
        WCHAR wszMachineName[MAX_COMPUTERNAME_LENGTH_FQDN + 1]) {
        pwszServerName = wszMachineName;
        LPWSTR pwszTemp = pwszPath + 2;
        // Copies buffer from the network until ‘\’ char found
        while ( *pwszTemp != L'\\' )
            *pwszServerName++ = *pwszTemp++;
        ...
    }
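For contrast, here is a bounded version of the same copy loop. It is a sketch, not the actual fix shipped for MS03-026; the function name and signature are hypothetical, and `wchar_t` stands in for `WCHAR`. It stops at the destination capacity and at the end of the input as well as at the backslash:

```cpp
#include <cassert>
#include <cstddef>

// Copies the server name from a UNC-style path (\\server\share) into dest.
// Returns false, leaving dest empty, if the path does not start with two
// backslashes or the name does not fit in dest_count characters
// (including the terminating null).
bool copy_machine_name(const wchar_t* path, wchar_t* dest,
                       std::size_t dest_count) {
    if (path == nullptr || dest == nullptr || dest_count == 0) return false;
    dest[0] = L'\0';
    if (path[0] != L'\\' || path[1] != L'\\') return false;

    const wchar_t* src = path + 2;
    std::size_t i = 0;
    while (src[i] != L'\\' && src[i] != L'\0') {
        if (i + 1 >= dest_count) {  // keep room for the terminator
            dest[0] = L'\0';
            return false;
        }
        dest[i] = src[i];
        ++i;
    }
    dest[i] = L'\0';
    return true;
}
```

The caller passes the real capacity of its buffer, so the length decision is made where the buffer is declared rather than assumed inside the loop.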

All user input is evil!

User input should be:

Constrained (data type, length, format)

Rejected (filter for known bad values)

Sanitized (transform malicious or dangerous characters)

Developers’ first impulse: Regular expressions

\d{5}(-\d{4}){0,1}

What’s wrong with this regular expression?
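The slide leaves the question open. One likely answer is that the pattern has no `^` and `$` anchors, so it matches a ZIP code anywhere inside a longer string. A small C++ sketch of the difference, using `std::regex_search` (match anywhere) versus `std::regex_match` (whole input must match); the helper names are hypothetical:

```cpp
#include <cassert>
#include <regex>
#include <string>

// The pattern from the slide, unchanged (default ECMAScript grammar).
const std::regex kZipPattern(R"(\d{5}(-\d{4}){0,1})");

// Unanchored search: succeeds if the pattern occurs anywhere in the input.
bool contains_zip(const std::string& input) {
    return std::regex_search(input, kZipPattern);
}

// Whole-string match: succeeds only if the entire input is a ZIP code.
bool is_zip(const std::string& input) {
    return std::regex_match(input, kZipPattern);
}
```

With these helpers, `contains_zip("12345<script>")` is true while `is_zip("12345<script>")` is false, which is why a validation routine should require a full match.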

Then there’s the matter of “trust boundaries”

How much do you trust that the input you are receiving has already been validated?

Sample buffer overrun: Integer overflow

    int ConcatString(char *buf1, char *buf2,
                     size_t len1, size_t len2){
        char buf[256];
        if((len1 + len2) > 256) return -1;
        memcpy(buf, buf1, len1);
        memcpy(buf + len1, buf2, len2);
        ...
    }

What if: len1 == 0xFFFFFFFE and len2 == 0x00000102? With a 32-bit size_t the sum wraps around to 0x100 (256), so the check passes.
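A hedged sketch of one way to avoid the wraparound: compare each length against the remaining capacity instead of adding the lengths first. The 32-bit helper only reproduces the slide's arithmetic; all names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Reproduces the 32-bit addition from the slide: the result wraps
// modulo 2^32 instead of growing.
std::uint32_t wrapped_sum32(std::uint32_t a, std::uint32_t b) {
    return static_cast<std::uint32_t>(a + b);
}

// True when len1 + len2 fits in capacity, without ever computing a sum
// that could wrap.
bool lengths_fit(std::size_t len1, std::size_t len2, std::size_t capacity) {
    return len1 <= capacity && len2 <= capacity - len1;
}

// Concatenates two byte ranges into out. Returns the number of bytes
// written, or -1 if an argument is null or the result would not fit.
int concat_checked(const char* buf1, std::size_t len1,
                   const char* buf2, std::size_t len2,
                   char* out, std::size_t out_capacity) {
    if (buf1 == nullptr || buf2 == nullptr || out == nullptr) return -1;
    if (!lengths_fit(len1, len2, out_capacity)) return -1;
    std::memcpy(out, buf1, len1);
    std::memcpy(out + len1, buf2, len2);
    return static_cast<int>(len1 + len2);
}
```

With the slide's values, `wrapped_sum32(0xFFFFFFFE, 0x00000102)` is 256, which slips past a `> 256` test, whereas `lengths_fit` rejects the pair because `len1` alone already exceeds the capacity.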

Sample buffer overrun: Integer underflow

    void function(size_t bufsize, char *buf) {
        if (bufsize < 64) {
            char *pBuff = new char[bufsize-1];
            memcpy(pBuff,buf,bufsize-1);
            ...
        }
    }

What if bufsize is zero? Then bufsize-1 wraps around to the largest size_t value.
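A minimal sketch of a guarded variant, assuming the intent is to copy all but the last byte. The function names are hypothetical, and `std::vector` replaces the raw `new` purely to keep the example short:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Shows the wraparound: subtracting 1 from an unsigned zero yields the
// largest representable value rather than -1.
std::size_t minus_one(std::size_t n) {
    return n - 1;
}

// Copies the first size-1 bytes of src into out. Rejects a null source,
// a zero size (which would underflow) and sizes of 64 or more.
bool copy_all_but_last(const char* src, std::size_t size,
                       std::vector<char>& out) {
    if (src == nullptr || size == 0 || size >= 64) return false;
    out.assign(src, src + (size - 1));
    return true;
}
```

The zero check has to come before the subtraction; checking the result afterwards is too late because the wrapped value is already a valid `size_t`.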

Sample buffer overrun: Wrong sign

    void function(int bufsize, char *buf) {
        if (bufsize < 64) {
            char *pBuff = new char[bufsize];
            memcpy(pBuff,buf,bufsize);
            ...
        }
    }

What if bufsize is less than zero? The check still passes, and the negative value becomes a huge size_t when it is passed to memcpy.
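One hedged way to close this hole is to validate the signed value before it is ever converted to an unsigned size. The helper below is a sketch with a hypothetical name:

```cpp
#include <cassert>
#include <cstddef>

// Converts a signed length to size_t only if it lies in [0, limit).
// Returns false and leaves out untouched otherwise.
bool checked_length(int requested, std::size_t limit, std::size_t& out) {
    if (requested < 0) return false;  // reject negatives before converting
    const std::size_t n = static_cast<std::size_t>(requested);
    if (n >= limit) return false;
    out = n;
    return true;
}
```

A caller would run `checked_length(bufsize, 64, n)` first and use only `n` for the allocation and the copy. Declaring the parameter as `size_t` in the first place removes the signed comparison entirely, at the cost of having to handle very large values instead.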