

CSE 1342 Programming Concepts

Algorithmic Analysis Using Big-O

Part 1

The Running Time of Programs

Most problems can be solved by more than one algorithm. So how do you choose the best solution?

The best solution is usually based on efficiency:

• Efficiency of time (speed of execution)

• Efficiency of space (memory usage)

For a program that is run infrequently or is subject to frequent modification, algorithmic simplicity may take precedence over efficiency.

The Running Time of Programs

An absolute measure of time (5.3 seconds, for example) is not a practical measure of efficiency because:

• The execution time is a function of the amount of data the program manipulates and typically grows as the amount of data increases.

• Different computers execute the same program (using the same data) at different speeds.

• Depending on the choice of programming language and compiler, speeds can vary even on the same computer.

The Running Time of Programs

The solution is to remove all implementation considerations from the analysis and focus on the aspects of the algorithm that most critically affect the execution time.

The most important aspect is usually the number of data elements (n) the program must manipulate.

Occasionally, the magnitude of a single data element (rather than the number of data elements) is the most important aspect.
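As an illustration of the last point (this example is not from the original slides), a trial-division primality test receives a single number m as input, yet its loop may run up to about $\sqrt{m}$ times. The C sketch below assumes a hypothetical function is_prime that also reports its loop-iteration count through an out-parameter.

```c
#include <stdbool.h>

/* Hypothetical trial-division primality test, used only to illustrate that
   running time can depend on the magnitude of one input value.
   The number of loop iterations performed is stored in *iterations. */
bool is_prime(unsigned long m, unsigned long *iterations)
{
    *iterations = 0;
    if (m < 2)
        return false;
    /* d <= m / d is equivalent to d * d <= m but cannot overflow. */
    for (unsigned long d = 2; d <= m / d; ++d) {
        ++*iterations;
        if (m % d == 0)
            return false;   /* found a divisor: m is composite */
    }
    return true;            /* no divisor up to sqrt(m): m is prime */
}
```

For example, is_prime(97, &it) performs 8 iterations, while is_prime(1009, &it) performs 30: both calls receive exactly one data element, but the larger value costs more work.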

The 90-10 Rule

The 90-10 rule states that, in general, a program spends 90% of its time executing the same 10% of its code.

This is because most programs rely heavily on repetition structures (loops and recursive calls).

Because of the 90-10 rule, algorithmic analysis focuses on repetition structures.

Analysis of Summation Algorithms

Consider the following code segment, which sums each row of an n-by-n array and also accumulates a grand total of all elements (version 1):

grandTotal = 0;
for (k = 0; k < n; k++) {
    sum[k] = 0;
    for (j = 0; j < n; j++) {
        sum[k] += a[k][j];
        grandTotal += a[k][j];
    }
}

Requires $2n^2$ additions: two per inner-loop iteration, and the inner loop body runs $n^2$ times in total.
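To check the $2n^2$ count, the version 1 segment can be wrapped in a C function that tallies each data addition. This is a sketch under assumptions not in the slides: the array is stored row-major in a flat buffer of long values, and the function name sum_rows_v1 and its parameters are hypothetical. Loop-counter increments are not counted, in line with the analysis above.

```c
#include <stddef.h>

/* Version 1 row summation on an n-by-n array stored row-major in `a`.
   `sum` must have room for n entries. Returns the number of data
   additions performed (the two += statements per inner iteration). */
long sum_rows_v1(const long *a, long *sum, size_t n, long *grand_total)
{
    long additions = 0;
    *grand_total = 0;
    for (size_t k = 0; k < n; k++) {
        sum[k] = 0;
        for (size_t j = 0; j < n; j++) {
            sum[k] += a[k * n + j];        /* running total for row k */
            *grand_total += a[k * n + j];  /* running total for the array */
            additions += 2;
        }
    }
    return additions;
}
```

For a 3-by-3 array the function returns 18, which is $2 \cdot 3^2$.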

Analysis of Summation Algorithms

Consider the following code segment, which computes the same row sums and grand total but adds each finished row sum to the grand total once per row (version 2):

grandTotal = 0;
for (k = 0; k < n; k++) {
    sum[k] = 0;
    for (j = 0; j < n; j++) {
        sum[k] += a[k][j];
    }
    grandTotal += sum[k];
}

Requires $n^2 + n$ additions: $n^2$ in the inner loop plus one per row for grandTotal.
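The same kind of counting sketch for version 2, again assuming (beyond the slides) a flat row-major buffer of long values and a hypothetical function name, sum_rows_v2:

```c
#include <stddef.h>

/* Version 2 row summation on an n-by-n array stored row-major in `a`.
   `sum` must have room for n entries. Returns the number of data
   additions performed. */
long sum_rows_v2(const long *a, long *sum, size_t n, long *grand_total)
{
    long additions = 0;
    *grand_total = 0;
    for (size_t k = 0; k < n; k++) {
        sum[k] = 0;
        for (size_t j = 0; j < n; j++) {
            sum[k] += a[k * n + j];  /* n additions per row */
            additions++;
        }
        *grand_total += sum[k];      /* one addition per row */
        additions++;
    }
    return additions;
}
```

For a 3-by-3 array it returns 12, which is $3^2 + 3$, and it produces the same row sums and grand total as version 1.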

Analysis of Summation Algorithms

When we compare the number of additions performed in versions 1 and 2, we find that

$n^2 + n < 2n^2$ for any $n > 1$ (since $n < n^2$ whenever $n > 1$).

Based on this analysis, the version 2 algorithm appears to be the faster of the two. However, as we shall see, “faster” may have no real meaning in the real world of computation.

Analysis of Summation Algorithms

Further analysis of the two summation algorithms: assume a 1000-by-1000 (n = 1000) array and a computer that can execute an addition instruction in 1 microsecond (one millionth of a second).

The version 1 algorithm ($2n^2$) would require $2(1000^2)/1{,}000{,}000 = 2$ seconds to execute.

The version 2 algorithm ($n^2 + n$) would require $(1000^2 + 1000)/1{,}000{,}000 = 1.001$ seconds to execute.

From a user's real-time perspective, the difference is insignificant.

Analysis of Summation Algorithms

Now increase the size of n. Assume a 100,000-by-100,000 (n = 100,000) array.

The version 1 algorithm ($2n^2$) would require $2(100{,}000^2)/1{,}000{,}000 = 20{,}000$ seconds to execute (about 5.56 hours).

The version 2 algorithm ($n^2 + n$) would require $(100{,}000^2 + 100{,}000)/1{,}000{,}000 = 10{,}000.1$ seconds to execute (about 2.78 hours).

From a user's real-time perspective, both jobs take a long time and would need to run in a batch environment.

In terms of order of magnitude (big-O), versions 1 and 2 have the same efficiency: $O(n^2)$.
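The arithmetic on the last two slides can be reproduced with a small C sketch. It assumes, as the slides do, that each addition takes exactly 1 microsecond and that nothing else contributes to the running time; the function and macro names are hypothetical.

```c
/* Estimated running times under the slides' assumption that one addition
   takes 1 microsecond and all other work is free. */
#define ADDITIONS_PER_SECOND 1e6

double v1_seconds(double n) { return 2.0 * n * n / ADDITIONS_PER_SECOND; }
double v2_seconds(double n) { return (n * n + n) / ADDITIONS_PER_SECOND; }
double to_hours(double seconds) { return seconds / 3600.0; }
```

Going from n = 1,000 to n = 100,000 multiplies n by 100 and both running times by roughly $100^2 = 10{,}000$, whereas the constant factor 2 only ever separates the two versions by a factor of about 2.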

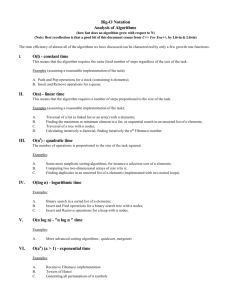

Big-O Analysis Overview

O stands for order of magnitude.

Big-O analysis is independent of all implementation factors.

In most cases, it depends on the number of data elements (n) the program must manipulate.

Big-O analysis is meaningful only for large values of n. For small values of n, constant factors and lower-order terms can outweigh the dominant term, so big-O analysis breaks down.

Big-O analysis is built around the principle that the runtime behavior of an algorithm is dominated by its behavior in its loops (the 90-10 rule).
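The claim that big-O breaks down for small n can be made concrete with two hypothetical operation counts (not from the slides): algorithm A performs $100n$ operations, which is $O(n)$, and algorithm B performs $n^2$ operations, which is $O(n^2)$. The sketch below finds the smallest n at which the asymptotically better algorithm A actually does less work.

```c
/* Hypothetical operation counts for two algorithms. */
long ops_linear(long n)    { return 100 * n; }  /* algorithm A: O(n)   */
long ops_quadratic(long n) { return n * n; }    /* algorithm B: O(n^2) */

/* Smallest n >= 1 at which the O(n) algorithm does strictly less work. */
long crossover(void)
{
    long n = 1;
    while (ops_linear(n) >= ops_quadratic(n))
        n++;
    return n;
}
```

crossover() returns 101: for every n up to 100, the $O(n^2)$ algorithm B does no more work than A (at n = 10, B needs 100 operations against A's 1,000).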

Definition of Big-O

Let $T(n)$ be a function that measures the running time of a program in some unknown unit of time.

Let $n$ represent the size of the input data set that the program manipulates, where $n > 0$.

Let $f(n)$ be some function defined on the size of the input data set, $n$.

We say that “$T(n)$ is $O(f(n))$” if there exist an integer $n_0$ and a constant $c > 0$ such that for all integers $n \ge n_0$ we have $T(n) \le c\,f(n)$.

The pair $n_0$ and $c$ are witnesses to the fact that $T(n)$ is $O(f(n))$.
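As a sketch of how witnesses work, take $T(n) = n^2 + n$ (the addition count of summation version 2) and $f(n) = n^2$. The pair $c = 2$, $n_0 = 1$ is a witness because $n \le n^2$ for every $n \ge 1$, so $n^2 + n \le 2n^2$. The hypothetical C helper below tests a proposed pair over a finite range; such a check can refute a bad pair but cannot by itself prove a good one.

```c
#include <stdbool.h>

static long T(long n) { return n * n + n; }  /* T(n) = n^2 + n */
static long f(long n) { return n * n; }      /* f(n) = n^2     */

/* Returns true if T(n) <= c * f(n) for every n in [n0, limit].
   A finite check like this is evidence, not a proof. */
bool witness_holds(long c, long n0, long limit)
{
    for (long n = n0; n <= limit; n++)
        if (T(n) > c * f(n))
            return false;
    return true;
}
```

witness_holds(2, 1, 10000) returns true, whereas witness_holds(1, 1, 100) returns false, since $n^2 + n > n^2$ for every $n \ge 1$.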

Simplifying Big-O Expressions

Big-O expressions are simplified by dropping constant factors and low-order terms.

The total of all terms gives us the total running time of the program. For example, say that

$T(n) = O(f_3(n) + f_2(n) + f_1(n))$

where $f_3(n) = 4n^3$, $f_2(n) = 5n^2$, and $f_1(n) = 23$, or, to restate $T(n)$:

$T(n) = O(4n^3 + 5n^2 + 23)$

After stripping out the constants and low-order terms, we are left with $T(n) = O(n^3)$.
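One way to see why the constants and low-order terms can be dropped is to divide $T(n) = 4n^3 + 5n^2 + 23$ by $n^3$: the ratio approaches 4 as n grows. The C functions below are a small sketch of that computation (the names are hypothetical).

```c
/* T(n) = 4n^3 + 5n^2 + 23, the running-time function from the example. */
double T(double n) { return 4.0 * n * n * n + 5.0 * n * n + 23.0; }

/* T(n) / n^3 = 4 + 5/n + 23/n^3, which tends to the constant 4
   because 5/n and 23/n^3 both shrink toward 0 as n grows. */
double ratio_to_n3(double n) { return T(n) / (n * n * n); }
```

ratio_to_n3(1) is 32, ratio_to_n3(10) is about 4.52, and ratio_to_n3(1000) is about 4.005. In terms of the definition, $c = 5$ and $n_0 = 6$ are witnesses that $T(n)$ is $O(n^3)$: $4n^3 + 5n^2 + 23 \le 5n^3$ holds exactly when $n^3 \ge 5n^2 + 23$, which first happens at $n = 6$ ($216 \ge 203$) and continues to hold for all larger n.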

Simplifying Big-O Expressions

$T(n) = f_1(n) + f_2(n) + f_3(n) + \dots + f_k(n)$

In big-O analysis, one of the terms in the $T(n)$ expression is identified as the dominant term. A dominant term is one that, for large values of $n$, grows so much larger than the other terms that we can ignore them.

The problem of big-O analysis can thus be reduced to finding the dominant term in an expression representing the number of operations required by an algorithm. All other terms and constants are dropped from the expression.

Big-O Analysis Example 1

for (k = 0; k < n/2; ++k) {
    for (j = 0; j < n*n; ++j) {
        /* statement(s) */
    }
}

Outer loop executes $n/2$ times.

Inner loop executes $n^2$ times for each outer iteration.

$T(n) = (n/2)(n^2) = n^3/2 = 0.5n^3$

$T(n) = O(n^3)$
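The iteration counts can be checked by replacing statement(s) with a counter. This C sketch (function name hypothetical) uses integer division for n/2, as the loop in Example 1 does, so for even n the result is exactly $n^3/2$.

```c
/* Counts how many times the body of Example 1 executes. */
long long example1_count(long long n)
{
    long long count = 0;
    for (long long k = 0; k < n / 2; ++k)
        for (long long j = 0; j < n * n; ++j)
            ++count;            /* stands in for statement(s) */
    return count;
}
```

example1_count(10) returns 500 and example1_count(20) returns 4000, both equal to $n^3/2$; doubling n multiplies the count by 8, as expected of an $O(n^3)$ loop.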

Big-O Analysis Example 2

for (k = 0; k < n/2; ++k) { /* statement(s) */ }
for (j = 0; j < n*n; ++j) { /* statement(s) */ }

First loop executes $n/2$ times.

Second loop executes $n^2$ times.

Because the loops run one after the other, their counts add: $T(n) = n/2 + n^2 = 0.5n + n^2$

$n^2$ is the dominant term.

$T(n) = O(n^2)$
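The same counting approach applies to Example 2. In this sketch (function name hypothetical, integer division for n/2), the two sequential loops each increment one shared counter, so their counts add rather than multiply.

```c
/* Counts how many times the bodies of the two sequential loops execute. */
long long example2_count(long long n)
{
    long long count = 0;
    for (long long k = 0; k < n / 2; ++k)
        ++count;                /* first loop: n/2 iterations */
    for (long long j = 0; j < n * n; ++j)
        ++count;                /* second loop: n^2 iterations */
    return count;
}
```

example2_count(10) returns 105 and example2_count(100) returns 10,050; at n = 100 the $n^2$ term already accounts for more than 99% of the total.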

Big-O Analysis Example 3

while (n > 1) {
    /* statement(s) */
    n = n / 2;
}

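Each iteration halves n, so the values of n shrink geometrically and the number of iterations grows logarithmically.

Assuming n has the initial value of 64, the progression will be 64, 32, 16, 8, 4, 2, so the loop body executes $6 = \log_2 64$ times.

In general, the loop executes about $\log_2 n$ times (exactly $\lfloor \log_2 n \rfloor$ times for an integer $n \ge 1$ with integer division).

$O(\log_2 n) = O(\log n)$, because logarithms to different bases differ only by a constant factor.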
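A counting sketch for Example 3 (function name hypothetical). The parameter n is a local copy in C, so halving it inside the function does not affect the caller.

```c
/* Counts how many times the body of the halving loop executes. */
long halving_count(long n)
{
    long count = 0;
    while (n > 1) {
        ++count;                /* stands in for statement(s) */
        n = n / 2;              /* integer division halves n */
    }
    return count;
}
```

halving_count(64) returns 6, matching the progression 64, 32, 16, 8, 4, 2, and halving_count(1000) returns 9, which is $\lfloor \log_2 1000 \rfloor$.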

Big-O Comparisons

Analysis Involving if/else

if (condition)
    loop1;  // assume O(f(n)) for loop1
else
    loop2;  // assume O(g(n)) for loop2

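Because only one branch executes, the cost of the statement is bounded by the more expensive branch. The order of magnitude for the entire if/else statement is

$O(\max(f(n), g(n)))$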

An Example Involving if/else

if (a[1][1] == 0)
    for (i = 0; i < n; ++i)
        for (j = 0; j < n; ++j)
            a[i][j] = 0;    // f(n) = n^2
else
    for (i = 0; i < n; ++i)
        a[i][i] = 1;        // set the diagonal; g(n) = n

The order of magnitude for the entire if/else statement is

$O(\max(f(n), g(n))) = O(n^2)$
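A sketch that counts the element assignments each branch makes. It assumes, beyond the slides, that the array is stored row-major in a flat int buffer, that n >= 2 so that a[1][1] exists, and that the else branch sets the diagonal; the function name is hypothetical.

```c
#include <stddef.h>

/* Runs the if/else example on an n-by-n int array stored row-major in a
   flat buffer (requires n >= 2) and returns the number of element
   assignments performed. */
long if_else_assignments(int *a, size_t n)
{
    long assignments = 0;
    if (a[1 * n + 1] == 0) {
        /* then-branch: clear every element, n^2 assignments */
        for (size_t i = 0; i < n; ++i)
            for (size_t j = 0; j < n; ++j) {
                a[i * n + j] = 0;
                ++assignments;
            }
    } else {
        /* else-branch: set the diagonal, n assignments */
        for (size_t i = 0; i < n; ++i) {
            a[i * n + i] = 1;
            ++assignments;
        }
    }
    return assignments;
}
```

With n = 4, an array whose a[1][1] is 0 takes the $n^2$ branch (16 assignments); otherwise it takes the $n$ branch (4 assignments). The worst case over both branches, 16, is what $O(\max(f(n), g(n))) = O(n^2)$ describes.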