Selection Equation


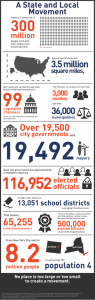

Correcting for Self-Selection Bias Using the Heckman Selection Correction in a Valuation Survey Using Knowledge Networks
Presented at the 2005 Annual Meeting of the American Association for Public Opinion Research
Trudy Cameron, University of Oregon
J. R. DeShazo, UCLA
Mike Dennis, Knowledge Networks

Motivating Insights
- It is possible to have a 20% response rate, yet still have a representative sample.
- It is possible to have an 80% response rate, yet still have a very non-representative sample.
- Need to know:
  - What factors affect response propensity
  - Whether response propensity is correlated with the survey outcome of interest

Research Questions
- Can the inferences from our two samples of respondents from the Knowledge Networks Inc. (KN) consumer panel be generalized to the population?
- Do observed/unobserved factors that affect the odds of a respondent being in the sample also affect the answers that he/she gives on the survey?

What we find:
- Insignificant selectivity using one method
- Significant, but very tiny, selectivity using an alternative method
- A bit disappointing for us (no sensational results, reduced publication potential)
- Probably reassuring for Knowledge Networks, since our samples appear to be reasonably representative

Heckman Selectivity Correction Intuition: Example 1
- Suppose your sample matches the US population on observables: age, gender, income.
- The survey is about government regulation.
- Suppose liberals are more likely to fill out surveys, but there are no data on political ideology for non-respondents (unobserved heterogeneity).
- The sample will have a disproportionate number of liberals.
- Your sample is likely to overstate sympathy for government regulation (sample selection bias).

Heckman Selectivity Correction Intuition: Example 2
- Suppose your sample matches the US population on observables: age, gender, income.
- The survey is about WTP (willingness to pay) for health programs.
- People who are fearful about their future health are more likely to respond, but there are no data for anyone on "fearfulness" (the salience of health programs).
- The sample will tend to overestimate WTP for health programs.

[Figure: level curves (two panels)]

The selection "process"
Several phases of attrition (transitions) in KN samples. Assume RDD (random-digit dialing) contacts are "random".
1. RDD → recruited
2. Recruited → profiled
3. Profiled → active at time of sampling
4. Active → drawn for survey sample
5. Drawn → member of estimating sample
We can explore a different selection process for each of these transitions.

Exploit RDD telephone numbers
- Recruits can be asked their addresses.
- Some non-recruit numbers are matched to addresses using reverse directories.
- Phone numbers with no street address are matched (approximately) to the best census tract using the geographic extent of the telephone exchange.
- Link to other geocoded data.

Panel protection during geocoding
- Using dummy identifiers, match street addresses to the relevant census tract (or telephone exchange to tract, courtesy Dale Kulp at MSG), then return the data to KN.
- Get back from KN the pool of initial RDD contacts, minus all confidential addresses, with our respondent case IDs restored.
- Merge with auxiliary data about census tract attributes and voting behaviors.

Cameron and Crawford (2004)
- Data for each of the 65,000 census tracts in the year 2000 U.S. census
- ~95 count variables for different categories
- Convert to proportions of population (or households, or dwellings)
- Factor analysis: 15 orthogonal factors that together account for ~88% of the variation in sociodemographic characteristics across tracts

Categories of Census Variables
- Population density
- Ethnicity; Gender; Age distribution
- Family structure
- Housing occupancy status; Housing characteristics
- Urbanization; Residential mobility
- Linguistic isolation
- Educational attainment
- Disabilities
- Employment status
- Industry; Occupation; Type of income

Labels: 15 orthogonal factors
- "well-to-do prime"
- "elderly disabled"
- "well-to-do seniors"
- "rural farm., self-employ."
- "single renter twenties"
- "low mobil., stable neigh."
- "unemployed"
- "Native American"
- "minority single moms"
- "female"
- "thirty-somethings"
- "health-care workers"
- "working-age disabled"
- "asian-hispanic-multi, language isolation"
- "some college, no grad"

2000 Presidential Voting Data
- Leip (2004) Atlas of U.S. Presidential Elections: vote counts for each county
- Use: % of county votes for "Gore" and "Nader," versus "Bush and others" (omitted category)
- These vote shares will not be orthogonal to our 15 census factors.

Empirical Illustrations
1. Analysis of the "government" question in the "public interventions" sample, by naïve OLS and via the preferred Heckman model
2. Analysis of the selection processes leading to the "private interventions" sample
   - Marginal selection probabilities
   - Conditional selection probabilities
   - Allow marginal utilities to depend on the propensity to respond to the survey

Analysis 1: Heckman Selectivity Model
Public intervention sample. Find an outcome variable that:
- Measures an attitude that may be relevant to other research questions
- Can be treated as cardinal and continuous (although it is actually discrete and ordinal)
- Can be modeled (naively) by OLS methods
- Can be generalized into a two-equation FIML (full-information maximum likelihood) selectivity model

Outcome used: the 1-7 rating for "Government Involvement in Regulating Env., Health, Safety?"
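To make the two-equation selectivity idea concrete, here is a minimal sketch on simulated data. It is not the authors' model: the slides describe a FIML two-equation model, whereas this swaps in Heckman's simpler two-step estimator (a probit selection equation, then OLS on respondents with the inverse Mills ratio added as a regressor). The data-generating process, coefficients, sample size, the error correlation `rho`, and the helper names (`solve`, `ols`, `probit`, `simulate`, `heckman_two_step`) are all hypothetical; `z` is an assumed variable that shifts response but not the outcome (an exclusion restriction).

```python
"""Heckman two-step sketch on simulated data (hypothetical DGP, not the KN survey)."""
import math
import random
from statistics import NormalDist

STD = NormalDist()  # standard normal: provides .cdf() and .pdf()


def solve(a, b):
    """Solve a small dense system a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= f * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols(rows, y):
    """Least squares via the normal equations X'X b = X'y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)


def probit(rows, s, iters=15):
    """Probit maximum likelihood by Fisher scoring (rows include a constant)."""
    k = len(rows[0])
    g = [0.0] * k
    for _ in range(iters):
        score = [0.0] * k
        info = [[0.0] * k for _ in range(k)]
        for r, si in zip(rows, s):
            idx = sum(gi * ri for gi, ri in zip(g, r))
            p = min(max(STD.cdf(idx), 1e-10), 1 - 1e-10)
            d = STD.pdf(idx)
            w = d / (p * (1 - p))
            for i in range(k):
                score[i] += w * (si - p) * r[i]
                for j in range(k):
                    info[i][j] += w * d * r[i] * r[j]
        step = solve(info, score)
        g = [gi + st for gi, st in zip(g, step)]
    return g


def simulate(n, rho, seed=1):
    """y = 1 + 2x + e is observed only if 0.3 + 0.5x + 1.0z + u > 0, with corr(u, e) = rho."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x, z, u = rng.gauss(0, 1), rng.gauss(0, 1), rng.gauss(0, 1)
        e = rho * u + math.sqrt(1 - rho ** 2) * rng.gauss(0, 1)
        selected = 0.3 + 0.5 * x + 1.0 * z + u > 0
        data.append((x, z, int(selected), 1.0 + 2.0 * x + e))
    return data


def heckman_two_step(data):
    w = [(1.0, x, z) for x, z, _, _ in data]
    gamma = probit(w, [s for _, _, s, _ in data])  # step 1: selection equation
    rows, ys = [], []
    for wi, (x, _, s, y) in zip(w, data):
        if s:  # outcome is seen only for "respondents"
            idx = sum(g * v for g, v in zip(gamma, wi))
            rows.append((1.0, x, STD.pdf(idx) / STD.cdf(idx)))  # inverse Mills ratio
            ys.append(y)
    naive = ols([r[:2] for r in rows], ys)  # ignores selection
    corrected = ols(rows, ys)  # step 2: adds the Mills ratio as a regressor
    return gamma, naive, corrected


gamma, naive, corrected = heckman_two_step(simulate(10_000, rho=0.6))
print("probit (const, x, z):", [round(v, 2) for v in gamma])
print("naive OLS (const, x):", [round(v, 2) for v in naive])
print("two-step (const, x, lambda):", [round(v, 2) for v in corrected])
```

With positively correlated errors, the naive intercept absorbs E[e | selected] > 0 and overstates the outcome, the simulated analogue of Example 1. In the two-step fit, the coefficient on the Mills ratio estimates `rho` times the outcome error's standard deviation (0.6 × 1 here), so a value near zero would signal little selectivity.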
Distribution of ratings for the government-involvement question:

| Rating | Frequency | Percent |
|---|---|---|
| 1 (minimally involved) | 134 | 4.60 |
| 2 | 90 | 3.09 |
| 3 | 199 | 6.84 |
| 4 | 493 | 16.94 |
| 5 | 609 | 20.92 |
| 6 | 591 | 20.30 |
| 7 (heavily involved) | 795 | 27.31 |
| Total | 2,911 | 100.00 |

Heckman correction model: bias not statistically significant
- Fail to reject the no-selection hypothesis at the 5% level, and also at the 10% level (but close)
- Point estimate of the error correlation = +0.10
- People may be more likely to respond if they approve of government regulation.

Interpretation: insufficient signal-to-noise to conclude that there is non-random selection
- Reassuring, but could stem from noise due to:
  - Using census tract factors and county votes rather than individual characteristics
  - Treating ordinal ratings as cardinal and continuous

Implications for the "govt" variable

| | (1) OLS (fitted) | (2) Heckman (a) (fitted) | (3) Simulated (index) | (4) Simulated (prob) |
|---|---|---|---|---|
| Observations | 2911 | 2911 | 2911 | 2911 |
| Mean | 5.17 | 4.69 | 5.18 | 5.17 |
| 5th percentile | 4.80 | 4.34 | 1.99 | 2.00 |
| 25th percentile | 4.97 | 4.50 | 4.02 | 4.00 |
| 50th percentile | 5.12 | 4.64 | 5.03 | 5.00 |
| 75th percentile | 5.33 | 4.85 | 6.97 | 7.00 |
| 95th percentile | 5.67 | 5.18 | 7.03 | 7.00 |
| Log L | -5549.30 | -23061.82 | -5548.07 | -5548.63 |

Analysis 2: Conditional Logit Choice Models
- Very attractive properties for analyzing multiple discrete choices, but...
- No established methods for jointly modeling selection propensity and outcomes in the form of 3-way choices

Testable hypothesis: do the marginal utilities of key attributes depend upon the fitted selection index (or the fitted selection probability)?
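One way to set up this hypothesis is a "systematically varying parameter": a marginal utility written as a baseline plus a shift times the respondent's deviation in fitted response probability, with the test asking whether the estimated shift differs from zero. The minimal sketch below does not estimate anything; it only shows the specification inside conditional logit choice probabilities for one hypothetical 3-way choice set. The baseline (-50), shift (3), and deviation (0.004) echo the magnitudes reported in the results; the attribute levels, `BETA_COST`, the linear-in-deviation form, and all function names are assumptions for illustration.

```python
"""Conditional logit with one systematically varying parameter (hypothetical example)."""
import math


def varying_coefficient(baseline, shift, deviation):
    """Marginal utility that moves linearly with the deviation of a respondent's
    fitted response probability from its sample mean (assumed specification)."""
    return baseline + shift * deviation


def choice_probabilities(utilities):
    """Conditional logit probabilities over one choice set (softmax, max-shifted for stability)."""
    top = max(utilities)
    weights = [math.exp(v - top) for v in utilities]
    total = sum(weights)
    return [wt / total for wt in weights]


# Hypothetical choice set: (sick-years, cost) for two programs and the status quo.
# These attribute levels and BETA_COST are made up for illustration.
ALTERNATIVES = [(0.05, 40.0), (0.08, 15.0), (0.12, 0.0)]
BETA_COST = -0.02


def probabilities_for(deviation, baseline=-50.0, shift=3.0):
    """Choice probabilities when the sick-year coefficient depends on response propensity."""
    beta_sick = varying_coefficient(baseline, shift, deviation)
    utilities = [beta_sick * sick + BETA_COST * cost for sick, cost in ALTERNATIVES]
    return choice_probabilities(utilities)


p_base = probabilities_for(0.0)  # respondent with average response propensity
p_shifted = probabilities_for(0.004)  # deviation of the size reported in the results
print([round(p, 4) for p in p_base])
print(max(abs(a - b) for a, b in zip(p_base, p_shifted)))
```

With a shift of 3 × 0.004 = 0.012 on a baseline of -50, the predicted choice probabilities in this toy choice set move by far less than a tenth of a percentage point, which illustrates how a statistically significant shifter can still be substantively tiny.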
- If marginal utilities do depend on the selection index: observable heterogeneity in the odds of being in the sample contributes to heterogeneity in the apparent preferences in the estimating sample.
- If they do not: greater confidence in the representativeness of the estimating sample (although still no certainty).

RDD contact dispositions

| Disposition (n = 525,190) | Proportion |
|---|---|
| Recruited | 0.354 |
| Profiled | 0.183 |
| Active panel at sample time | 0.075 |
| (Eligible at sample time…>24 years) | (0.068) |
| Drawn for sample | 0.006 |
| Estimating sample | 0.003 |

Results: Response propensity models
- Use response propensity as a shifter on the parameters of conditional logit choice models.
- Only one marginal utility parameter (related to the disutility of a sick-year) appears robustly sensitive to selection propensity.
- The baseline coefficient is -50 units; the average shift coefficient is on the order of 3 units, multiplied by a deviation in fitted response probabilities that averages about 0.004. The typical shift is therefore roughly 3 × 0.004 = 0.012 units against a baseline of -50.

Conclusions 1
- For our samples, it is hard to find convincing and robust evidence of substantial sample selection bias in models for outcome variables in the two KN samples.
- Good news for Knowledge Networks; not so good for us, as researchers, in terms of the publication prospects for this dimension of our work.

Conclusions 2
- Analysis 1: insignificant point estimate of bias in the distribution of attitudes toward regulation, on the order of "10% too much in favor"
- Analysis 2: statistically significant (but tiny) heterogeneity in key parameters across response propensities in systematically varying parameter models

Guidance
- High response rates do not necessarily eliminate biases in survey samples.
- Weights help (so the sample matches the population on observable dimensions), but weights are not necessarily a fix if the unobservables that drive response are correlated with the outcome.
- You cannot tell whether correlated unobservables are a problem without doing this type of analysis.
- Need to model the selection process explicitly.
- Need to explain differences in response propensities.
- Need analogous data for respondents and for people who do not respond, e.g.:
  - Census tract factors and county voting percentages
  - Anything else that might capture the salience of the survey topic (e.g., county mortality rates from the same diseases covered in the survey, hospital densities)
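The dispositions table reports marginal selection probabilities: the share of all RDD contacts that reach each stage. Dividing each share by the one before it gives the conditional, stage-to-stage probabilities, the two views of selection listed under the empirical illustrations. The minimal sketch below does that arithmetic. Leaving out the parenthetical "eligible" row, so that "drawn" is compared with the whole active panel, is an assumption of the sketch, as are the names `STAGES` and `conditional_rates`.

```python
"""Marginal vs. conditional selection probabilities from the RDD dispositions table."""

N_RDD = 525_190  # RDD contacts in the dispositions table

# Marginal shares of all RDD contacts reaching each stage, in funnel order.
# The parenthetical "eligible" row (0.068) is a subset of the active panel; it is
# left out here, so "drawn" is compared with "active" (an assumption of this sketch).
STAGES = [
    ("recruited", 0.354),
    ("profiled", 0.183),
    ("active panel", 0.075),
    ("drawn for sample", 0.006),
    ("estimating sample", 0.003),
]


def conditional_rates(stages):
    """Turn marginal (cumulative) shares into stage-to-stage transition probabilities."""
    rates, previous = [], 1.0  # every RDD contact starts in the pool
    for name, share in stages:
        rates.append((name, share / previous))
        previous = share
    return rates


rates = conditional_rates(STAGES)
for (name, share), (_, rate) in zip(STAGES, rates):
    # Shares are rounded in the table, so the implied counts are only approximate.
    print(f"{name:<18} ~{round(share * N_RDD):>7,} marginal {share:.3f} conditional {rate:.3f}")
```

On these rounded shares, about half of recruits were profiled, about 41% of profiled members were active at sampling time, 8% of active members were drawn, and half of those drawn ended up in the estimating sample. Each transition is a separate place where selection could operate.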