424p.10.lec4 - Stanford University

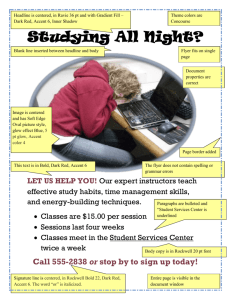

CS 424P / LINGUIST 287: Extracting Social Meaning and Sentiment
Dan Jurafsky
Lecture 4: Speech and Prosody
1/5/07

Outline
• Articulatory Phonetics
• Acoustic Phonetics
• Prosody
• Pitch Accents
• Disfluencies

Phonetics
• Articulatory phonetics: how speech sounds are made by articulators (moving organs) in the mouth.
• Acoustic phonetics: acoustic properties of speech sounds.

Speech Production Process
• Respiration: we (normally) speak while breathing out. Respiration provides airflow: the "pulmonic egressive airstream".
• Phonation: the airstream sets the vocal folds in motion. Vibration of the vocal folds produces sounds. Sound is then modulated by:
• Articulation and resonance: the shape of the vocal tract, characterized by:
  • Oral tract: teeth, soft palate (velum), hard palate, tongue, lips, uvula
  • Nasal tract
(Text adopted from Sharon Rose)

Sagittal section of the vocal tract (Techmer 1880)
[Figure labels: nasal cavity, pharynx, vocal folds (within the larynx), trachea, lungs]
(Text copyright J. J. Ohala, Sept 2001, from Sharon Rose slide)

[Figure] (From Mark Liberman's website, from Ultimate Visual Dictionary)

[Figure] (From Mark Liberman's web site, from Language Files (7th ed))

Vocal tract
[Figure] (Figure thanks to John Coleman)

Vocal tract movie (high-speed x-ray)
(Figure of Ken Stevens, from Peter Ladefoged's web site)
(Figure of Ken Stevens, labels from Peter Ladefoged's web site)

USC's SAIL Lab, Shri Narayanan

Larynx and Vocal Folds
• The larynx (voice box)
  • A structure made of cartilage and muscle
  • Located above the trachea (windpipe) and below the pharynx (throat)
  • Contains the vocal folds
  • (Adjective for larynx: laryngeal)
• Vocal folds (older term: vocal cords)
  • Two bands of muscle and tissue in the larynx
  • Can be set in motion to produce sound (voicing)
(Text from slides by Sharon Rose, UCSD LING 111 handout)

The larynx, external structure, from front
[Figure] (Figure thanks to John Coleman)

Vertical slice through larynx, as seen from back
[Figure] (Figure thanks to John Coleman)
Voicing
• Air comes up from the lungs.
• It forces its way through the vocal cords, pushing them open (2, 3, 4).
• This causes air pressure in the glottis to fall, since:
  • when gas runs through a constricted passage, its velocity increases (Venturi tube effect);
  • this increase in velocity results in a drop in pressure (Bernoulli principle).
• Because of the drop in pressure, the vocal cords snap together again (6-10).
• Single cycle: ~1/100 of a second.
(Figure and text from John Coleman's web site)

Voicelessness
• When the vocal cords are open, air passes through unobstructed.
• Voiceless sounds: p/t/k/s/f/sh/th/ch
• If the air moves very quickly, the turbulence causes a different kind of phonation: whisper.

Vocal folds open during breathing
[Figure] (From Mark Liberman's web site, from Ultimate Visual Dictionary)

Vocal Fold Vibration
(UCLA Phonetics Lab demo)

Consonants and Vowels
• Consonants: phonetically, sounds with audible noise produced by a constriction.
• Vowels: phonetically, sounds with no audible noise produced by a constriction.
• (It's more complicated than this, since we have to consider syllabic function, but this will do for now.)
(Text adapted from John Coleman)

Oral vs. Nasal Sounds
[Figure] (Thanks to Jong-bok Kim for this figure)

Tongue position for vowels
[Figure: vowels IY, AA, UW] (Fig. from Eric Keller)

American English Vowel Space
[Figure: vowel chart with axes HIGH/LOW and FRONT/BACK; vowels shown: iy, uw, ix, ih, ux, ax, eh, ah, ae, uh, ao, aa] (Figure from Jennifer Venditti)

[iy] vs. [uw]
[Figure] (Figure from Jennifer Venditti, from a lecture given by Rochelle Newman)

[ae] vs. [aa]
[Figure] (Figure from Jennifer Venditti, from a lecture given by Rochelle Newman)

2. Acoustic Phonetics

Sound Waves
http://www.kettering.edu/~drussell/Demos/waves-intro/waves-intro.html

Simple periodic waves (sine waves)
• Characterized by:
  • period: T
  • amplitude: A
  • phase
• Fundamental frequency in cycles per second, or Hz
• $F_0 = 1/T$
[Figure label: 1 cycle]

Simple periodic waves
• Computing the frequency of a wave: 5 cycles in 0.5 seconds = 10 cycles/second = 10 Hz
• Amplitude: 1
• Equation: $y = A \sin(2\pi f t)$

Speech sound waves
• A little piece from the waveform of the vowel [iy]
• Y axis: amplitude = amount of air pressure at that time point
  • Positive is compression
  • Zero is normal air pressure; negative is rarefaction
• X axis: time

Digitizing Speech
• Analog-to-digital conversion, or A-D conversion
• Two steps:
  • Sampling
  • Quantization

Sampling
• Measuring the amplitude of a signal at time t
• The sample rate needs to provide at least two samples for each cycle:
  • one for the positive and one for the negative half of each cycle
• More than two samples per cycle is OK
• Fewer than two samples per cycle will cause frequencies to be missed
• So the maximum frequency that can be measured is half the sampling rate
• The maximum frequency for a given sampling rate is called the Nyquist frequency

Sampling
• Original signal in red: if we measure at the green dots, we will see a lower-frequency wave and miss the correct higher-frequency one!

Sampling
• In practice we use the following sample rates:
  • 16,000 Hz (samples/sec) for microphones, "wideband"
  • 8,000 Hz (samples/sec) for telephone
• Why?
  • Need at least 2 samples per cycle
  • Max measurable frequency is half the sampling rate
  • Human speech < 10 kHz, so need at most 20 kHz
  • Telephone is filtered at 4 kHz, so 8 kHz is enough

Quantization
• Representing the real value of each amplitude as an integer
• 8-bit (-128 to 127) or 16-bit (-32768 to 32767)
• Formats:
  • 16-bit PCM
  • 8-bit mu-law; log compression
• Byte order:
  • LSB (Intel) vs. MSB (Sun, Apple)
• Headers:
  • Raw (no header)
  • Microsoft wav
  • Sun .au

WAV format
[Figure; label: 40 byte header]

Fundamental frequency
• Waveform of the vowel [iy]
• Frequency: repetitions/second of a wave
• The vowel above has 10 repetitions in 0.03875 s, so the frequency is 10/0.03875 = 258 Hz
• This is the speed at which the vocal folds move, hence voicing
• Each peak corresponds to an opening of the vocal folds
• The frequency of the complex wave is called the fundamental frequency of the wave, or F0

Pitch track
[Figure]

Amplitude
• We need a way to talk about the amplitude of a region of a signal over time
• We can't just average all the values. Why not? (Positive and negative values would largely cancel out.)
• So we often talk about RMS amplitude:
  $A_{RMS} = \sqrt{\frac{1}{N} \sum_{i=1}^{N} x[i]^2}$

Power and Intensity
• Power: related to the square of amplitude
  $\mathrm{Power} = \frac{1}{N} \sum_{i=1}^{N} x[i]^2$
• Intensity in air: power normalized to the auditory threshold, given in dB. $P_0$ is the auditory threshold pressure $= 2 \times 10^{-5}$ Pa
  $\mathrm{Intensity} = 10 \log_{10} \frac{1}{N P_0} \sum_{i=1}^{N} x[i]^2$

Plot of Intensity
[Figure]

Pitch and Loudness
• Pitch is the mental sensation, or perceptual correlate, of F0
• The relationship between pitch and F0 is not linear; human pitch perception is most accurate between 100 Hz and 1000 Hz
  • Linear in this range
  • Logarithmic above 1000 Hz
• The mel scale is one model of this F0-pitch mapping
  • A mel is a unit of pitch defined so that pairs of sounds which are perceptually equidistant in pitch are separated by an equal number of mels
  • Frequency in mels $= 1127 \ln(1 + f/700)$

She just had a baby
[Figure: waveform]
• Note that vowels all have regular amplitude peaks
• Stop consonant: closure followed by release
  • Notice the silence followed by slight bursts of emphasis: very clear for [b] of "baby"
• Fricative: noisy. [sh] of "she" at beginning

Waves have different frequencies
[Figure: a 100 Hz wave and a 1000 Hz wave]

Complex waves: adding a 100 Hz and a 1000 Hz wave together
[Figure]

Spectrum
[Figure: amplitude against frequency in Hz; frequency components (100 and 1000 Hz) on the x-axis]

Spectra continued
• Fourier analysis: any wave can be represented as the (infinite) sum of sine waves of different frequencies (amplitude, phase)

Spectrum of one instant in an actual sound wave: many components across the frequency range
[Figure]

Part of [ae] waveform from "had"
• Note the complex wave repeating nine times in the figure
• Plus smaller waves which repeat 4 times for every large pattern
• The large wave has a frequency of 250 Hz (9 times in 0.036 seconds)
• The small wave is roughly 4 times this, or roughly 1000 Hz
• Two little tiny waves on top of each peak of the 1000 Hz waves

Back to spectrum
• The spectrum represents these frequency components
• Computed by the Fourier transform, an algorithm which separates out each frequency component of a wave
• x-axis shows frequency, y-axis shows magnitude (in decibels, a log measure of amplitude)
• Peaks at 930 Hz, 1860 Hz, and 3020 Hz

Seeing formants: the spectrogram
[Figure]

Formants
• Vowels are largely distinguished by 2 characteristic pitches
• One of them (the higher of the two) goes downward throughout the series iy ih eh ae aa ao ou u
• The other goes up for the first four vowels and then down for the next four
• These are called the "formants" of the vowels; the lower is the 1st formant, the higher is the 2nd formant

Spectrogram: spectrum + time dimension
[Figure]

Different vowels have different formants
• Vocal tract as "amplifier"; amplifies different frequencies
• Formants are the result of different shapes of the vocal tract
• Any body of air will vibrate in a way that depends on its size and shape
• Air in the vocal tract is set in vibration by the action of the vocal cords
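To make "depends on its size and shape" concrete, here is a minimal sketch, assuming the vocal tract is idealized as a uniform tube closed at one end and open at the other, so that its resonances fall at odd multiples of c/(4L). The 17.5 cm length and the 35,000 cm/sec speed of sound are the values this lecture uses in the schwa derivation; the 14 cm tube and the function name `tube_resonances` are hypothetical, chosen only for comparison.

```python
def tube_resonances(length_cm, n_resonances=3, speed_of_sound=35000.0):
    """Resonances (Hz) of an idealized tube closed at one end, open at the other.

    Assumption: uniform tube, so the n-th resonance is (2n - 1) * c / (4 * L).
    speed_of_sound is in cm/sec, length_cm in cm.
    """
    return [(2 * n - 1) * speed_of_sound / (4.0 * length_cm)
            for n in range(1, n_resonances + 1)]


neutral = tube_resonances(17.5)   # lecture's vocal tract length
shorter = tube_resonances(14.0)   # hypothetical shorter tract

print(neutral)
print(shorter)
```

Under this idealization a shorter tube shifts every resonance upward, which is one way to see why the same glottal source can yield different spectral peaks.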
• Every time the vocal cords open and close, a pulse of air from the lungs acts like a sharp tap on the air in the vocal tract,
• setting the resonating cavities into vibration so that they produce a number of different frequencies

Again: why is a speech sound wave composed of these peaks?
• Articulatory facts:
  • The vocal cord vibrations create harmonics
  • The mouth is an amplifier
  • Depending on the shape of the mouth, some harmonics are amplified more than others
[Figure] (From Mark Liberman's web site)

How formants are produced
• Q: Why do different vowels have different pitches if the vocal cords are vibrating at the same rate?
• A: This is a confusion of frequencies of SOURCE and frequencies of FILTER!

Source-filter model of speech production
[Figure: input (glottal spectrum), filter (vocal tract frequency response function), output]
• Source and filter are independent, so:
  • Different vowels can have the same pitch
  • The same vowel can have different pitch
(Figures and text from Ratree Wayland's slides, from the author's website)

Deriving schwa: how the shape of the mouth (filter function) creates peaks!
• Reminder of basic facts about sound waves:
  • $f = c/\lambda$
  • c = speed of sound (approx. 35,000 cm/sec)
  • A sound with $\lambda$ = 10 meters has low frequency: f = 35 Hz (35,000/1000)
  • A sound with $\lambda$ = 2 centimeters has high frequency: f = 17,500 Hz (35,000/2)

Resonances of the vocal tract
• The human vocal tract as an open tube
[Figure labels: closed end, open end, length 17.5 cm]
• Air in a tube of a given length will tend to vibrate at the resonance frequency of the tube
(Figure from Ladefoged (1996) p. 117; figure from W. Barry Speech Science slides)

Resonances of the vocal tract
• If the vocal tract is a cylindrical tube open at one end:
  • Standing waves form in tubes
  • Waves will resonate if their wavelength corresponds to the dimensions of the tube
  • Constraint: the pressure differential should be maximal at the (closed) glottal end and minimal at the (open) lip end
• The next slide shows what lengths of waves can fit into a tube with this constraint
[Figure] (From Sundberg)

Computing the 3 formants of schwa
• Let the length of the tube be L
  • $F_1 = c/\lambda_1 = c/(4L) = 35{,}000/(4 \times 17.5) = 500$ Hz
  • $F_2 = c/\lambda_2 = c/(\tfrac{4}{3}L) = 3c/(4L) = 3 \times 35{,}000/(4 \times 17.5) = 1500$ Hz
  • $F_3 = c/\lambda_3 = c/(\tfrac{4}{5}L) = 5c/(4L) = 5 \times 35{,}000/(4 \times 17.5) = 2500$ Hz
• So we expect a neutral vowel to have 3 resonances, at 500, 1500, and 2500 Hz
• These vowel resonances are called formants

Vowel [i] sung at successively higher pitch
[Figure: panels 1 to 7] (Figures from Ratree Wayland's slides, from the author's website)

Summary: Acoustic Phonetics
• Waves, sound waves, and spectra
• Speech waveforms
• F0, pitch, intensity
• Spectra
• Spectrograms
• Formants
• Reading spectrograms
• Deriving schwa: why are formants where they are
• PRAAT
• Resources: dictionaries and phonetically-labeled corpora

3. Prosody

Defining Intonation
• Ladd (1996), "Intonational phonology":
• "The use of suprasegmental phonetic features to convey sentence-level pragmatic meanings"
  • Suprasegmental = above and beyond the segment/phone
    • F0
    • Intensity (energy)
    • Duration
  • Sentence-level pragmatic meanings, i.e. meanings that apply to phrases or utterances as a whole, not lexical stress, not lexical tone

Three aspects of prosody
• Prominence: some syllables/words are more prominent than others
• Structure/boundaries: sentences have prosodic structure
  • Some words group naturally together
  • Others have a noticeable break or disjuncture between them
• Tune: the intonational melody of an utterance
(From Ladd (1996))

Prosodic Prominence: Pitch Accents
• A: What types of foods are a good source of vitamins?
• B1: Legumes are a good source of VITAMINS.
• B2: LEGUMES are a good source of vitamins.
• Prominent syllables are:
  • Louder
  • Longer
  • Have higher F0 and/or sharper changes in F0 (higher F0 velocity)
(Slide from Jennifer Venditti)

Prosodic Boundaries
• I met Mary and Elena's mother at the mall yesterday.
• I met Mary and Elena's mother at the mall yesterday.
• French [bread and cheese]
• [French bread] and [cheese]
(Slide from Jennifer Venditti)

Prosodic Tunes
• Legumes are a good source of vitamins.
• Are legumes a good source of vitamins?
(Slide from Jennifer Venditti)

Prosody Part I: Thinking about F0

Graphic representation of F0
[Figure: F0 contour (y-axis: F0 in Hertz, x-axis: time) for "legumes are a good source of VITAMINS"]
(Slide from Jennifer Venditti)

The 'ripples'
[Figure: F0 contour for "legumes are a good source of VITAMINS", with gaps marked at [s], [t], [s]]
• F0 is not defined for consonants without vocal fold vibration.
(Slide from Jennifer Venditti)

The 'ripples'
[Figure: F0 contour for "legumes are a good source of VITAMINS", with perturbations marked at [g], [z], [g], [v]]
• ... and F0 can be perturbed by consonants with an extreme constriction in the vocal tract.
(Slide from Jennifer Venditti)

Abstraction of the F0 contour
[Figure: smoothed F0 contour for "legumes are a good source of VITAMINS"]
• Our perception of the intonation contour abstracts away from these perturbations.
(Slide from Jennifer Venditti)

The 'waves' and the 'swells'
[Figure: F0 contour for "legumes are a good source of VITAMINS"; 'wave' = accent, 'swell' = phrase]
(Slide from Jennifer Venditti)

Prosody Part II: Prominence: Placement of Pitch Accents

Stress vs. accent
• Stress is a structural property of a word
  • It marks a potential (arbitrary) location for an accent to occur, if there is one.
• Accent is a property of a word in context
  • It is a way to mark intonational prominence in order to 'highlight' important words in the discourse.
[Figure: metrical grids for "vi ta mins" and "Ca li for nia", with rows for syllables, full vowels, stressed syllable, and (accented syllable)]
(Slide from Jennifer Venditti)

Stress vs. accent (2)
• The speaker decides to make the word vitamin more prominent by accenting it.
• Lexical stress tells us that this prominence will appear on the first syllable, hence VItamin.
• So we will have to look at both the lexicon and the context to predict the details of prominence
  • I'm a little surPRISED to hear it CHARacterized as upBEAT

Which word receives an accent?
• It depends on the context. The 'new' information in the answer to a question is often accented, while the 'old' information is usually not.
  • Q1: What types of foods are a good source of vitamins?
  • A1: LEGUMES are a good source of vitamins.
  • Q2: Are legumes a source of vitamins?
  • A2: Legumes are a GOOD source of vitamins.
  • Q3: I've heard that legumes are healthy, but what are they a good source of?
  • A3: Legumes are a good source of VITAMINS.
(Slide from Jennifer Venditti)

Same 'tune', different alignment
[Figure: F0 contour for "LEGUMES are a good source of vitamins"]
• The main rise-fall accent (= "I assert this") shifts locations.
(Slide from Jennifer Venditti)

Same 'tune', different alignment
[Figure: F0 contour for "Legumes are a GOOD source of vitamins"]
• The main rise-fall accent (= "I assert this") shifts locations.
(Slide from Jennifer Venditti)

Same 'tune', different alignment
[Figure: F0 contour for "legumes are a good source of VITAMINS"]
• The main rise-fall accent (= "I assert this") shifts locations.
(Slide from Jennifer Venditti)

Levels of prominence
• Most phrases have more than one accent
• The last accent in a phrase is perceived as more prominent
  • Called the nuclear accent
• Emphatic accents like the nuclear accent are often used for semantic purposes, such as indicating that a word is contrastive, or the semantic focus
  • The kind of thing you use ***s for in IM, or capitalized letters
  • 'I know SOMETHING interesting is sure to happen,' she said to herself.
• Can also have words that are less prominent than usual
  • Reduced words, especially function words.
• Often use 4 classes of prominence:
  • Emphatic accent, pitch accent, unaccented, reduced

Prosody Part III: Intonational phrasing/boundaries

A single intonation phrase
[Figure: F0 contour for "legumes are a good source of vitamins"]
• Broad focus statement consisting of one intonation phrase (that is, one intonation tune spans the whole unit).
(Slide from Jennifer Venditti)

Multiple phrases
[Figure: F0 contour for "legumes are a good source of vitamins"]
• Utterances can be 'chunked' up into smaller phrases in order to signal the importance of information in each unit.
(Slide from Jennifer Venditti)

• I wanted to go to London, but could only get tickets for France

Phrasing can disambiguate
• Global ambiguity:
  • The old men and women stayed home.
  • The old men % and women % stayed home.
  • Sally saw % the man with the binoculars.
  • Sally saw the man % with the binoculars.
  • John doesn't drink because he's unhappy.
  • John doesn't drink % because he's unhappy.
(Slide from Jennifer Venditti)

Phrasing sometimes helps disambiguate
[Figure: F0 contour for "I met Mary and Elena's mother at the mall yesterday"; labels: "Mary & Elena's mother", "mall"]
• One intonation phrase with relatively flat overall pitch range.
(Slide from Jennifer Venditti)

Phrasing sometimes helps disambiguate
[Figure: F0 contour for "I met Mary and Elena's mother at the mall yesterday"; labels: "Mary", "Elena's mother", "mall"]
• Separate phrases, with expanded pitch movements.
(Slide from Jennifer Venditti)

Intonational tunes

Yes-No question tune
[Figure: F0 contour for "are LEGUMES a good source of vitamins"]
• Rise from the main accent to the end of the sentence.
(Slide from Jennifer Venditti)

Yes-No question tune
[Figure: F0 contour for "are legumes a GOOD source of vitamins"]
• Rise from the main accent to the end of the sentence.
(Slide from Jennifer Venditti)

Yes-No question tune
[Figure: F0 contour for "are legumes a good source of VITAMINS"]
• Rise from the main accent to the end of the sentence.
(Slide from Jennifer Venditti)

WH-questions
[I know that many natural foods are healthy, but ...]
[Figure: F0 contour for "WHAT are a good source of vitamins"]
• WH-questions typically have falling contours, like statements.
(Slide from Jennifer Venditti)

Broad focus
"Tell me something about the world."
[Figure: F0 contour for "legumes are a good source of vitamins"]
• In the absence of narrow focus, English tends to mark the first and last 'content' words with perceptually prominent accents.
(Slide from Jennifer Venditti)

Rising statements
"Tell me something I didn't already know."
[Figure: F0 contour for "legumes are a good source of vitamins"]
[... does this statement qualify?]
• High-rising statements can signal that the speaker is seeking approval.
(Slide from Jennifer Venditti)

Yes-No question
[Figure: F0 contour for "are legumes a good source of VITAMINS"]
• Rise from the main accent to the end of the sentence.
(Slide from Jennifer Venditti)

'Surprise-redundancy' tune
[How many times do I have to tell you ...]
[Figure: F0 contour for "legumes are a good source of vitamins"]
• Low beginning followed by a gradual rise to a high at the end.
(Slide from Jennifer Venditti)

'Contradiction' tune
"I've heard that linguini is a good source of vitamins."
[Figure: F0 contour for "linguini isn't a good source of vitamins"]
[... how could you think that?]
• Sharp fall at the beginning, flat and low, then rising at the end.
(Slide from Jennifer Venditti)

4. Pitch Accent
• Prediction from text
• Detection from text and speech
• Advanced linguistic models that capture tune

Study 1: Pitch accent prediction
• Which words in an utterance should bear accent?
• "Accent" in the sense of simplified ToBI (Ostendorf et al. 2001, Shattuck-Hufnagel and Ostendorf 1999)
  • i believe at ibm they make you wear a blue suit.
  • i BELIEVE at IBM they MAKE you WEAR a BLUE SUIT.
  • 2001 was a good movie, if you had read the book.
  • 2001 was a good MOVIE, if you had read the BOOK.
Factors in accent prediction
• Part of speech:
  • Content words are usually accented
  • Function words are rarely accented
    • of, for, in, on, that, the, a, an, no, to, and, but, or, will, may, would, can, her, is, their, its, our, there, is, am, are, was, were, etc.
• But not just function/content: a Broadcast News example from Hirschberg (1993)
  • SUN MICROSYSTEMS INC, the UPSTART COMPANY that HELPED LAUNCH the DESKTOP COMPUTER industry TREND TOWARD HIGH powered WORKSTATIONS, was UNVEILING an ENTIRE OVERHAUL of its PRODUCT LINE TODAY. SOME of the new MACHINES, PRICED from FIVE THOUSAND NINE hundred NINETY five DOLLARS to seventy THREE thousand nine HUNDRED dollars, BOAST SOPHISTICATED new graphics and DIGITAL SOUND TECHNOLOGIES, HIGHER SPEEDS AND a CIRCUIT board that allows FULL motion VIDEO on a COMPUTER SCREEN.

Factors in accent prediction
• Contrast
  • Legumes are a poor source of VITAMINS
  • No, legumes are a GOOD source of vitamins
  • I think JOHN or MARY should go
  • No, I think JOHN AND MARY should go

List intonation
• I went and saw ANNA, LENNY, MARY, and NORA.

Word order
• Preposed items are accented more frequently
  • TODAY we will BEGIN to LOOK at FROG anatomy.
  • We will BEGIN to LOOK at FROG anatomy today.

Information Status
• New versus old information
• Old information is deaccented
  • There are LAWYERS, and there are GOOD lawyers
  • EACH NATION DEFINES its OWN national INTEREST.
  • I LIKE GOLDEN RETRIEVERS, but MOST dogs LEAVE me COLD.

Complex Noun Phrase Structure
• Sproat, R. 1994. English noun-phrase accent prediction for text-to-speech. Computer Speech and Language 8:79-94.
• Proper names: stress on right-most word
  • New York CITY; Paris, FRANCE
• Adjective-noun combinations: stress on noun
  • Large HOUSE, red PEN, new NOTEBOOK
• Noun-noun compounds: stress on left noun
  • HOTdog (food) versus HOT DOG (overheated animal)
  • WHITE house (place) versus WHITE HOUSE (made of stucco)
• Examples:
  • MEDICAL Building, APPLE cake, cherry PIE.
  • What about: Madison avenue, park street?
• Some rules:
  • Furniture + Room -> RIGHT (e.g., kitchen TABLE)
  • Proper-name + Street -> LEFT (e.g., PARK street)

Other features
• POS
• POS of previous word
• POS of next word
• Stress of current, previous, next syllable
• Unigram probability of word
• Bigram probability of word
• Position of word in sentence

Advanced features
• Accent is often deflected away from a word due to focus on a neighboring word.
• Could use syntactic parallelism to detect this kind of contrastive focus:
  • ... driving [FIFTY miles] an hour in a [THIRTY mile] zone
  • [WELD] [APPLAUDS] mandatory recycling. [SILBER] [DISMISSES] recycling goals as meaningless.
  • ... but while Weld may be [LONG] on people skills, he may be [SHORT] on money

Previous state of the art on accent prediction
• Useful features include (starting with Hirschberg 1993):
  • Lexical class (function words, clitics not accented)
  • Word frequency
  • Word identity (promising but problematic)
  • Given/New, Theme/Rheme
  • Focus
  • Word bigram predictability
  • Position in phrase
  • Complex nominal rules (Sproat)
• Combined in a machine learning classifier:
  • Decision trees (Hirschberg 1993)
  • Bagging/boosting (Sun 2002)
  • Hidden Markov models (Hasegawa-Johnson et al 2005)
  • Conditional random fields (Gregory and Altun 2004)

Study 1: Nenkova et al 2007, 2008
• What features are the best predictors of pitch accent?
• How much do sophisticated linguistic features help over simple features?
  • Given/New
  • Focus/contrast
• How can we make use of word identity?
• Can we rely on word identity across genres?

Corpus and approach
• 12 Switchboard conversations
• 14,555 tokens
• Annotated for binary prominence
  • M. Ostendorf, I. Shafran, S. Shattuck-Hufnagel, L. Carmichael, and W. Byrne. 2001. A prosodically labeled database of spontaneous speech. ISCA Workshop on Prosody, pp 119--121.
• Majority class: 58% of words not accented
• Decision tree classifier
  • Supervised machine learning
  • Training on labeled tokens, trying to predict +/- accent
• Exhaustive comparison of predictors with different feature sets
  • Combinations of 1 to 5 features

Features
• Information status: given/new/mediated
  • Nissim, Dingare, Carletta, and Steedman 2004. An annotation scheme for information status in dialogue. LREC 2004.
  • they(old) have all the WATER(new) they(old) WANT. They(old) can ACTUALLY PUMP water(old).
• Kontrast (contrast, focus, focus-sensitive adverb, etc.)
  • Calhoun, Nissim, Steedman, Brenier 2005. A framework for annotating information structure in discourse. Proceedings of the ACL Pie in the Sky Workshop, 45-52.
  • YOU take this subject much more personally than I do.
  • (How much does a nanny cost?) I THINK it's about SIXTY DOLLARS a WEEK for TWO children
• Position in utterance (# of words from beginning/end)
  • Shattuck-Hufnagel, Ostendorf, Ross 1994; Lisa Selkirk this morning
• Animacy (Zaenen et al 2004), dialog act (Jurafsky et al 1998)
• POS, n-gram probability, tf.idf, word length, stopword, distance from pause

Accent ratio feature
• Is prominence a property of the word itself?
• Of all the times this word occurred, what percentage were accented?
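The "what percentage were accented" idea can be sketched as a small dictionary-building step. This is a minimal illustration, not the study's implementation: it assumes that B(k, n, 0.5) in the lecture's accent ratio definition denotes a two-sided binomial test p-value, and that words failing the 0.05 significance check fall back to a neutral ratio of 0.5 (a convention recalled from Nenkova et al. 2007, not stated on these slides). The function names and the toy token list are hypothetical.

```python
from collections import Counter
from math import comb


def binomial_two_sided_p(k, n, p=0.5):
    """Exact two-sided binomial p-value: total probability of outcomes
    no more likely than observing k successes in n trials."""
    probs = [comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(n + 1)]
    cutoff = probs[k] * (1 + 1e-9)  # small tolerance for float ties
    return min(1.0, sum(q for q in probs if q <= cutoff))


def accent_ratios(tokens, alpha=0.05, fallback=0.5):
    """Map each word to k/n (accented count over total count) when the
    binomial test is significant; otherwise use the assumed fallback."""
    seen, accented = Counter(), Counter()
    for word, is_accented in tokens:
        seen[word] += 1
        if is_accented:
            accented[word] += 1
    ratios = {}
    for word, n in seen.items():
        k = accented[word]
        significant = binomial_two_sided_p(k, n) <= alpha
        ratios[word] = k / n if significant else fallback
    return ratios


# Toy labeled tokens (hypothetical): (word, was_accented)
tokens = (
    [("the", False)] * 19 + [("the", True)]
    + [("yeah", True)] * 9 + [("yeah", False)]
    + [("water", True)] * 3 + [("water", False)]
)
ratios = accent_ratios(tokens)
print(ratios)
```

In this toy data "the" and "yeah" occur often enough for their ratios to be trusted, while "water" (4 occurrences) is too rare for the test to be significant, so it keeps the neutral fallback.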
• Memorized from a labeled corpus
  • 60 Switchboard conversations (Ostendorf et al 2001)

Detailed computation of accent ratio
• k: number of times a word is prominent
• n: all occurrences of the word
• $\mathrm{AccentRatio}(w) = \frac{k}{n}$, if $B(k, n, 0.5) \le 0.05$

Single feature prediction accuracy
• Accent ratio significantly outperforms word frequency
  • Accent ratio: 75.59%
  • Word frequency: 73.77%
  • Part of speech: 70.28%
• Accent ratio classifier:
  • A word is labeled not-prominent if AR < 0.38
  • Words not in the accent ratio dictionary are assigned to the "prominent" class
• Linguistic features are not powerful by themselves
  • Focus/contrast (kontrast): 67.57%
  • Given/new: 64.13%

Combining features
• Accent ratio is always in the best classifiers
• Kontrast (focus/contrast) is most helpful in combination with accent ratio
• Small or no effect of givenness, distance
• Best overall result: 76.7% accuracy
  • AR + kontrast + tf.idf + givenness + distance
• 75.6% accuracy with AR alone

Why didn't given/new help?
• Restricted applicability
  • Nouns/pronouns
• Influences the form of the referring expression
  • Most old information is expressed with a pronoun
  • Pronouns are usually not accented
• Mediated category
  • More than half of the annotated nouns
  • Different accents appropriate depending on the semantic relation

| POS | med | new | old |
|---|---|---|---|
| NN | 1189 | 420 | 255 |
| PRO | 64 | 0 | 1495 |

| Information status | Accented | % accented | Not accented |
|---|---|---|---|
| med | 752 | 63% | 437 |
| new | 307 | 73% | 113 |
| old | 156 | 61% | 99 |

• New information is more likely to be accented
• But most nouns are accented anyway
• 1215/1864 = 65% accenting of nouns overall

Why is accent ratio useful?
• Covers all part-of-speech categories
• Low accent ratio words
  • a, uh, thing, some, been, up, out, it's, them, him
  • stayed, supposed, said, say, wanna
  • minutes, little, thing, anything
• High accent ratio words
  • actually, anyway, yeah, wow, gosh, yes, no, exactly (one intonation phrase + exclamatives)
  • too, also, else (focus-inducing words)
  • rather, major, great, poor, especially, definitely (intensifiers)

Accent Ratio as analytic tool
• Yuan, Brenier, Jurafsky. 2005. Pitch Accent Prediction: Effects of Genre and Speaker. Eurospeech.
• Brenier. 2008 ms. The automatic prediction of prosodic prominence from text. PhD Dissertation.
• Genre differences: spontaneous speech (conversation, interview) vs read speech (storybooks, broadcast)
  • Read speech: words don't vary
  • Spontaneous speech: more variation
• Other differences:
  • Disfluencies and their effect on accent in spontaneous speech
    • Christodoulou, Babwah and Arnold (2008)
  • Discourse markers ("and" has higher AR in spontaneous speech)

Is accent ratio robust to genre?
• Cross-genre experiment: broadcast news
• Cross: accent ratio from Switchboard
  • 82% accuracy
• Within: unigram, bigram, backwards bigram probability; trained on broadcasts
  • 83.67% accuracy
• So even across genre, accent ratio is still a very useful predictor of accent

Summary for Study 1
• The best predictor of a word being accented is whether the word tends to be accented in general
• Kontrast is also useful
• Given/New status is not very helpful
  • This information is already carried by NP form (pronoun)
  • We don't have good theoretical predictions about mediated entities

Study 2: Pitch accent detection
• Sridhar, Nenkova, Narayanan, Jurafsky. Speech Prosody 2008
• Nenkova and Jurafsky 2007. ASRU 2007.
• Can we detect pitch accent from speech and text?
• How best to combine acoustic and lexical cues?
• How useful is contextual information (from neighboring words)?
1 3 Our experiment The same 12 Switchboard conversations 14,555 word tokens The task is again predicting whether a word is accented, using Text features (in this experiment, accent ratio+POS) Acoustic features Combined in a CRF (“Conditional Random Field”) classifier CRF is sort of a version of logistic regression that deals well with sequences Evaluated by how well we match human accent labels 1 3 Acoustic features tested Duration of word Pitch Energy F0 mean of word Normalized by side F0 std dev Normalized by side Max F0 in word Min F0 in word F0 slope 1 3 Mean RMS energy in word Energy std dev Energy slope across word RMS energy in first half of word RMS energy in second half of word Final set of acoustic features duration of word std dev of F0 values in word linear regression F0 slope over all points of word ratio of F0 mean in second and first half difference in F0 mean of second and first half of word, normalized by mean and std dev of F0 in convside std dev of rms energy in word 1 3 Log-linear classifier Features Accuracy in % Current word 68.1 Current POS 70.2 Accent Ratio 75.3 Word+POS+AR 75.4 Acoustics only 73.1 All Features 77.4 • Accent ratio by itself better than acoustic features • Combining them helps • But would it help also to use contextual information? 1 3 Better results using context Features in CRF Current word ± 1 word ± 2 words Words 68.1% 75.7% 75.1% POS tags 70.2 72.7 73.2 Accent Ratio 75.3 75.2 75.1 Word+POS+AR 75.4 76.0 75.9 Acoustics only 73.1 74.0 73.9 All Features 77.4 78.3 78.2 • Best results (78.3%) use one previous and following word • Again accent ratio is better than acoustics • But the combination is better still • We think these are the best published numbers on this task How well do humans do? 
Compare human to human Read news (Boston University Radio) Ostendorf, Price, Shattuck-Hufnagel (1995) One identical portion (1662 words) was read by 6 different speakers We computed pairwise agreement between humans Accuracy Average: 82% Min: 79% Max: 85% This suggests that current performance of 78.3% might be approaching human performance Summary from Study 2 Machine Learning Sequence models (CRF) give best performance on accent detection Confirms CRF results of Gregory and Altun (2004) And HMM results of Hasegawa-Johnson et al (2005) Linguistic Features Single preceding and following word most useful Probably captures stress clash/lapse Accent ratio is still best single feature Acoustic features give further improvement Surprisingly, most useful acoustic features: Raw: not normalized by speaker! 1 3 Advanced: Intonational Transcription Theories: ToBI ToBI: Tones and Break Indices Pitch accent tones H* “peak accent” L* “low accent” L+H* “rising peak accent” (contrastive) L*+H ‘scooped accent’ H+!H* downstepped high Boundary tones L-L% (final low; Am Eng. Declarative contour) L-H% (continuation rise) H-H% (yes-no queston) Break indices 0: clitics, 1, word boundaries, 2 short pause 3 intermediate intonation phrase 4 full intonation phrase/final boundary. Examples of the TOBI system •I don’t eat beef. L* L* L*L-L% •Marianna made the marmalade. H* L-L% L* H-H% •“I” means insert. H* H* H*L-L% 1 H*LH*L-L% 3 Slide from Lavoie and Podesva 5. 
Disfluencies

Disfluencies: standard terminology (Levelt)
• Reparandum: thing repaired
• Interruption point (IP): where speaker breaks off
• Editing phase (edit terms): uh, I mean, you know
• Repair: fluent continuation

Counts (from Shriberg, Heeman)
• Sentence disfluency rate
  • ATIS: 6% of sentences disfluent (10% long sentences)
  • Levelt human dialogs: 34% of sentences disfluent
  • Swbd: ~50% of multiword sentences disfluent
  • TRAINS: 10% of words are in reparandum or editing phrase
• Word disfluency rate
  • SWBD: 6%
  • ATIS: 0.4%
  • AMEX: 13% (human-human air travel)

Prosodic characteristics of disfluencies
• Nakatani and Hirschberg 1994
• Fragments are good cues to disfluencies
• Prosody:
  • Pause duration is shorter in disfluent silence than fluent silence
  • F0 increases from end of reparandum to beginning of repair, but only minor change
  • Repair interval offsets have a minor prosodic phrase boundary, even in middle of NP:
    Show me all n- | round-trip flights | from Pittsburgh | to Atlanta

Syntactic Characteristics of Disfluencies
• Hindle (1983)
• The repair often has the same structure as the reparandum
• Both are Noun Phrases (NPs) in this example:
• So if we could automatically find the IP, we could find and correct the reparandum!

Use of different disfluencies
• Clark and Fox Tree
• Looked at “um” and “uh”
  • “uh” includes “er” (“er” is just British non-rhotic dialect spelling for “uh”)
• Different meanings
  • Uh: used to announce minor delays
    • Preceded and followed by shorter pauses
  • Um: used to announce major delays
    • Preceded and followed by longer pauses

Um versus uh: delays (Clark and Fox Tree)

Utterance Planning
• The more difficulty speakers have in planning, the more delays
• Consider 3 locations:
  • I: before intonation phrase: hardest
  • II: after first word of intonation phrase: easier
  • III: later: easiest
And then uh somebody said, . [I] but um -- [II] don’t you think there’s evidence of this, in the twelfth - [III] and thirteenth centuries?
Delays at different points in phrase

More on location of FPs
• Peters: Medical dictation task
  • Monologue rather than dialogue
  • In this data, FPs occurred INSIDE clauses
  • Trigram PP after FP: 367
  • Trigram PP after word: 51
• Stolcke and Shriberg (1996b)
  • w_k FP w_k+1: looked at P(w_k+1 | w_k)
  • Transition probabilities lower for these transitions than normal ones
• Conclusion:
  • People use FPs when they are planning difficult things, so following words likely to be unexpected/rare/difficult

Repeaters and Deleters
• Fast speakers get ahead of themselves and restart
• Slow speakers wait and use filled pauses but don’t restart

Detecting Disfluencies

Recent work: EARS Metadata Evaluation (MDE)
• A recent multiyear DARPA bakeoff
• Sentence-like Unit (SU) detection:
  • find end points of SU
  • Detect subtype (question, statement, backchannel)
• Edit word detection:
  • Find all words in reparandum (words that will be removed)
• Filler word detection
  • Filled pauses (uh, um)
  • Discourse markers (you know, like, so)
  • Editing terms (I mean)
• Interruption point detection
Liu et al 2003

Kinds of disfluencies
• Repetitions
  I * I like it
• Revisions
  We * I like it
• Restarts (false starts)
  It’s also * I like it

MDE transcription
• Conventions:
  • ./ for statement SU boundaries
  • <> for fillers
  • [] for edit words
  • * for IP (interruption point) inside edits
And <uh> <you know> wash your clothes wherever you are ./ and [ you ] * you really get used to the outdoors ./

MDE Labeled Corpora
                          CTS     BN
  Training set (words)    484K    182K
  Test set (words)        35K     45K
  STT WER (%)             14.9    11.7
  SU %                    13.6    8.1
  Edit word %             7.4     1.8
  Filler word %           6.8     1.8

MDE Algorithms
• Use both text and prosodic features
• At each interword boundary
  • Extract prosodic features (pause length, durations, pitch contours, energy contours)
  • Use N-gram language model
  • Combine via HMM, Maxent, CRF, or other classifier

State of the art: Edit word detection
• Multi-stage model
  • HMM combining LM and decision tree finds IP
  • Heuristic rules find onset of reparandum
  • Separate repetition detector for repeated words
• One-stage model
  • CRF jointly finds edit region and IP
  • BIO tagging (each word is tagged as beginning of edit, inside edit, or outside edit)
• Error rates:
  • 43-50% using transcripts
  • 80-90% using ASR

Fragments
• Incomplete or cut-off words:
  • Leaving at seven fif- eight thirty
  • uh, I, I d-, don't feel comfortable
  • You know the fam-, well, the families
  • I need to know, uh, how- how do you feel…
  • Uh yeah, yeah, well, it- it- that’s right. And it-
• SWBD: around 0.7% of words are fragments (Liu 2003)
• ATIS: 60.2% of repairs contain fragments (6% of corpus sentences had at least 1 repair) Bear et al (1992)
• Another ATIS corpus: 74% of all reparanda end in word fragments (Nakatani and Hirschberg 1994)

Fragment glottalization
Uh yeah, yeah, well, it- it- that’s right. And it-

Why fragments are important
• Frequent enough to be a problem:
  • Only 1% of words/3% of sentences
  • But if we miss a fragment, we tend to get surrounding words wrong (word segmentation error).
  • Goldwater et al.: 14% absolute increase in word error rate (from 18% to 32%) for words before fragments!!
• Useful for finding other repairs
  • In 40% of SRI-ATIS sentences containing fragments, fragment occurred at right edge of long repair
  • 74% of ATT-ATIS reparanda ended in fragments
• Sometimes are the only cue to repair
  • “leaving at <seven> <fif-> eight thirty”

Cues for fragment detection
• 49/50 cases examined ended in silence >60 msec; average 282 ms (Bear et al)
• 24 of 25 vowel-final fragments glottalized (Bear et al)
  • Glottalization: increased time between glottal pulses
• 75% don’t even finish the vowel in first syllable (i.e., speaker stopped after first consonant) (O’Shaughnessy)

Cues for fragment detection
• Nakatani and Hirschberg (1994)
• Word fragments tend to be content words:
  Lexical Class    Tokens   Percent
  Content          121      42%
  Function         12       4%
  Untranscribed    155      54%

Cues for fragment detection
• Nakatani and Hirschberg (1994)
• 91% are one syllable or less
  Syllables   Tokens   Percent
  0           113      39%
  1           149      52%
  2           25       9%
  3           1        0.3%

Cues for fragment detection
• Nakatani and Hirschberg (1994)
• Fricative-initial common; not vowel-initial
  Class   % words   % frags   % 1-C frags
  Stop    23%       23%       11%
  Vowel   25%       13%       0%
  Fric    33%       45%       73%

Liu (2003): Acoustic-Prosodic detection of fragments
• Prosodic features
  • Duration (from alignments)
    • Of word, pause, last-rhyme-in-word
    • Normalized in various ways
  • F0 (from pitch tracker)
    • Modified to compute stylized speaker-specific contours
  • Energy
    • Frame-level, modified in various ways

Liu (2003): Acoustic-Prosodic detection of fragments
• Voice Quality Features
  • Jitter
    • A measure of perturbation in pitch period
    • Praat computes this
  • Spectral tilt
    • Overall slope of spectrum
    • Speakers modify this when they stress a word
  • Open Quotient
    • Ratio of times in which vocal folds are open to total length of glottal cycle
    • Can be estimated from first and second harmonics
    • Creaky voice (laryngealization): vocal folds held together, so short open quotient

The larynx
• main function of vocal folds: block objects from falling into trachea
Slide from Ulrike Gut

Inside the larynx
Slide from Ulrike Gut

Phonation
• phonation:
vibration (= opening and closing) of the vocal folds
• vocal folds closed - air from the lungs pushes them apart – sucked back together (Bernoulli effect)
Slide from Ulrike Gut

Voice Quality and the Larynx
• Adductive tension (interarytenoid muscles adduct the arytenoid cartilages)
• Medial compression (adductive force on vocal processes - adjustment of ligamental glottis)
• Longitudinal tension (tension of vocal folds)
Slide from K. Marasek, J. Wilcox

Modulation of vocal fold vibration
• vocal folds are moved (adducted) by muscles
• can be tensed – the shorter the vocal folds the faster they vibrate
[Figure labels: 200 times/sec; 120 times/sec]
Slide from Ulrike Gut

Modes of phonation
• voicelessness = no vocal fold vibration
• modal (normal) voicing
• whisper
• breathy voice
• creaky voice
Slide from Ulrike Gut

Modal voice
• neutral mode
• muscular adjustments are moderate
• vibration of the vocal folds is periodic with full closing of glottis, so no audible friction noises are produced when air flows through the glottis.
• frequency of vibration and loudness are in the low to mid range for conversational speech
Slide from K. Marasek, J. Wilcox

Breathy voice
• arytenoid cartilages remain slightly apart
• continuous airflow during vocal fold vibration
Slide from Ulrike Gut

Creaky voice
• arytenoid cartilages tightly together so that vocal folds can only vibrate at the other end
[Figure labels: normal; creaky voice]
Slide from Ulrike Gut

Creaky voice – voiced phonation
• vocal folds vibrate at a very low frequency – vibration is somewhat irregular, vibrating mass is “heavier” because of low tension (only the ligamental part of glottis vibrates)
• The vocal folds are strongly adducted
• longitudinal tension is weak
• Moderately high medial compression
• Vocal folds “thicken” and create an unusually thick and slack structure.
Slide from K. Marasek, J. Wilcox

Whisper
• in whisper there is no true vibration of the vocal folds;
• adduction of vocal folds while maintaining an opening between the arytenoid cartilages
Slide from Ulrike Gut

Whispery voice – voiceless phonation
• Very low adductive tension
• Medial compression moderately high
• Longitudinal tension moderately high
• Little or no vocal fold vibration (produced through turbulences generated by the friction of the air in and above the larynx, which produces frication)
Slide from K. Marasek, J. Wilcox

Liu (2003)
• Use Switchboard 80%/20%
• Downsampled to 50% frags, 50% words
• Generated forced alignments with gold transcripts
• Extract prosodic and voice quality features
• Train decision tree

Liu (2003) results
• Precision 74.3%, Recall 70.1%
                    hypothesis
  reference     complete   fragment
  complete      109        35
  fragment      43         101

Liu (2003) features
• Features most queried by DT
  Feature                                                           Share of queries
  jitter                                                            0.272
  Energy slope difference between current and following word       0.241
  Ratio between F0 before and after boundary                        0.238
  Average OQ                                                        0.147
  Position of current turn                                          0.084
  Pause duration                                                    0.018
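
As a quick check, the precision and recall reported for Liu (2003) can be recomputed from the four counts in the results table. This sketch assumes that rows are reference labels and columns are hypothesized labels, and that "fragment" is the positive class; that reading reproduces the reported 74.3% and 70.1%. The function name `precision_recall` is illustrative only.

```python
def precision_recall(confusion, positive):
    """Compute precision and recall for one class.

    confusion[ref][hyp] holds the number of items whose reference label is
    `ref` and whose hypothesized label is `hyp`.
    """
    true_pos = confusion[positive][positive]
    # Everything the classifier called `positive`, whatever the reference says.
    hyp_pos = sum(row[positive] for row in confusion.values())
    # Everything that really is `positive` according to the reference.
    ref_pos = sum(confusion[positive].values())
    return true_pos / hyp_pos, true_pos / ref_pos


# Counts from the Liu (2003) results table (assumed layout: reference rows,
# hypothesis columns).
confusion = {
    "complete": {"complete": 109, "fragment": 35},
    "fragment": {"complete": 43, "fragment": 101},
}

precision, recall = precision_recall(confusion, "fragment")
print(f"precision = {precision:.1%}, recall = {recall:.1%}")
# prints: precision = 74.3%, recall = 70.1%
```

Each reference row sums to 144, which is consistent with the test data having been downsampled to 50% fragments and 50% complete words.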