Regression, Nested Models and Diagnostics


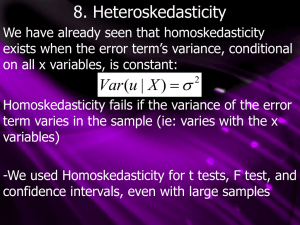

Regression and Diagnostics
I271B QUANTITATIVE METHODS

Regression versus Correlation
• Correlation makes no assumption about whether one variable is dependent on the other; it is only a measure of general association.
• Regression attempts to describe how a single dependent variable depends on one or more explanatory variables. It assumes a one-way causal link between X and Y.
• Thus, correlation is a measure of the strength of a relationship, from -1 to 1, while regression measures the exact nature of that relationship (e.g., the specific slope, which is the change in Y given a change in X).

Basic Linear Model
$Y_i = b_0 + b_1 x_i + e_i$
• X (and the X-axis) is our independent variable(s)
• Y (and the Y-axis) is our dependent variable
• $b_0$ is a constant (y-intercept)
• $b_1$ is the slope (change in Y given a one-unit change in X)
• $e_i$ is the error term (residuals)

Basic Linear Function

Slope
• But... what happens if the slope $b_1$ is negative?

Statistical Inference Using Least Squares
$Y_i = b_0 + b_1 x_i + e_i$
• We obtain a sample statistic, b, which estimates the population parameter.
• We also have the standard error for b.
• Hypothesis testing uses the standard t-distribution with n-2 degrees of freedom.

Why Least Squares?
• For any Y and X, there is one and only one line of best fit.
• The least squares regression equation minimizes the sum of squared errors between our observed values of Y and our predicted values of Y (often called y-hat).
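The least squares fit and the t-statistic for the slope can be sketched in a few lines of plain Python. This is a minimal illustration rather than the course's own code: the function name `fit_simple_ols` and the five data points are made up for the example, and the slope's t-statistic would be compared against a t-distribution with n-2 degrees of freedom, as on the inference slide.

```python
import math


def fit_simple_ols(x, y):
    """Fit y_i = b0 + b1*x_i + e_i by least squares.

    Returns (b0, b1, se_b1, t_b1), where se_b1 is the standard error of the
    slope and t_b1 = b1 / se_b1 has n-2 degrees of freedom.
    """
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least 3 paired observations")
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must vary for a slope to be defined")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))

    b1 = sxy / sxx              # slope: change in y per one-unit change in x
    b0 = y_bar - b1 * x_bar     # intercept: the line passes through the means

    # Residuals are observed y minus predicted y (y-hat).
    residuals = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
    sse = sum(e ** 2 for e in residuals)   # the quantity least squares minimizes
    se_b1 = math.sqrt(sse / (n - 2) / sxx)
    t_b1 = b1 / se_b1 if se_b1 > 0 else math.inf
    return b0, b1, se_b1, t_b1


# Made-up illustrative data, roughly y = 2x.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
b0, b1, se_b1, t_b1 = fit_simple_ols(x, y)
print(f"b0={b0:.3f}  b1={b1:.3f}  se(b1)={se_b1:.3f}  t={t_b1:.2f}  df={len(x) - 2}")
```

A negative `b1` would simply mean the fitted line slopes downward: Y falls as X rises.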
Data points and Regression
http://www.math.csusb.edu/faculty/stanton/m262/regress/regress.html

Multivariate Regression
• Control Variables
• Alternate Predictor Variables
• Nested Models

Nested Models
Model 1
• Control Variable 1
• Control Variable 2
Model 2
• Control Variable 1
• Control Variable 2
• Explanatory Variable 1
Model 3
• Control Variable 1
• Control Variable 2
• Explanatory Variable 1
• Explanatory Variable 2

Regression Diagnostics

Lab #4
• Stating Hypotheses
• Interpreting Hypotheses
• Terminology
• Appropriate statistics and conventions
• Effect Size (revisited)
  • Cohen’s d and the .2, .5, .8 interpretation values
  • See also: http://web.uccs.edu/lbecker/Psy590/es.htm for a very nice lecture and discussion of the different types of effect size calculations

Multicollinearity
• Occurs when an IV is very highly correlated with one or more other IVs.
• Caused by many things (including variables computed from other variables in the same equation, using different operationalizations of the same concept, etc.).
Consequences
• For OLS regression, it does not violate assumptions, but standard errors will be much, much larger than normal when there is multicollinearity (confidence intervals become wider, t-statistics become smaller).
• We often use VIF (variance inflation factor) scores to detect multicollinearity.
• Generally, a VIF of 5-10 is problematic, and higher values are more problematic still.
Solving the problem
• Typically, regressing each IV on the other IVs is a way to find the problem variable(s).

Heteroskedasticity
• OLS regression assumes that the variance of the error term is constant. If the error does not have a constant variance, then it is heteroskedastic.
Where it comes from
• Error may really change as an IV increases
• Measurement error
• Underspecified model

Heteroskedasticity (continued)
Consequences
• We still get unbiased parameter estimates, but our line may not be the best fit. Why?
• Because OLS gives more ‘weight’ to the cases that might actually have the most error from the predicted line.
Detecting it
• We have to look at the residuals (the differences between the observed responses and the predicted responses).
• First, use a residual-versus-fitted values plot (in Stata, rvfplot) or a residual-versus-predictor plot (in Stata, rvpplot), which plots the residuals against one of the independent variables.
• We should see an even band across the 0 point (the line), indicating that our error variance is roughly equal.
• If we are still concerned, we can run a test such as the Breusch-Pagan/Cook-Weisberg Test for Heteroskedasticity. It tests the null hypothesis that the error variances are all EQUAL against the alternative hypothesis that there is some difference. Thus, if the test is significant, we reject the null hypothesis and we have a problem of heteroskedasticity.
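The logic of the test can be sketched in plain Python for the single-predictor case. This is an illustration under stated assumptions, not the slides' or Stata's procedure: it uses the studentized (Koenker) form of the Breusch-Pagan statistic, LM = n·R² from regressing the squared residuals on X, which may not match the number a given package reports. The helper names and both data sets are made up for the example.

```python
import math


def ols(x, y):
    """Least squares intercept and slope for y_i = b0 + b1*x_i + e_i."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    return y_bar - b1 * x_bar, b1


def r_squared(x, y):
    """Share of the variance in y explained by a straight line in x."""
    b0, b1 = ols(x, y)
    y_bar = sum(y) / len(y)
    sst = sum((yi - y_bar) ** 2 for yi in y)
    if sst == 0:
        return 0.0
    sse = sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y))
    return max(0.0, 1.0 - sse / sst)   # clamp tiny negative rounding error


def breusch_pagan_lm(x, y):
    """Studentized Breusch-Pagan statistic and p-value, one predictor.

    Null hypothesis: the error variance is constant. With one predictor the
    statistic is chi-square with 1 degree of freedom, whose upper tail
    probability equals erfc(sqrt(LM / 2)).
    """
    b0, b1 = ols(x, y)
    squared_residuals = [(yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y)]
    lm = len(x) * r_squared(x, squared_residuals)
    return lm, math.erfc(math.sqrt(lm / 2))


# Made-up data: the same line with two different error patterns.
x = [float(i) for i in range(1, 21)]
signs = [1 if i % 2 == 0 else -1 for i in range(20)]
y_constant = [2 + 3 * xi + s * 1.0 for xi, s in zip(x, signs)]        # even band
y_growing = [2 + 3 * xi + s * 0.5 * xi for xi, s in zip(x, signs)]    # fan shape

lm_constant, p_constant = breusch_pagan_lm(x, y_constant)
lm_growing, p_growing = breusch_pagan_lm(x, y_growing)
print(f"constant error spread: LM={lm_constant:.3f}, p={p_constant:.3f}")
print(f"growing error spread:  LM={lm_growing:.3f}, p={p_growing:.5f}")
```

In the second data set the errors fan out as X increases, so the squared residuals are strongly related to X and the test rejects the null of equal variances. In the first they are not, and it does not.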