OrmWeeklyPresentations

advertisement

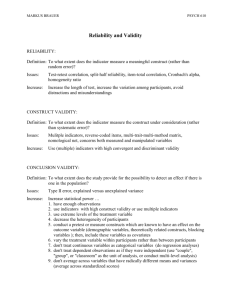

Organizational Research Methods

Week 2: Causality
• Understanding causal process
  – Ultimate goal of science
• Causal description
  – Consequence of treatment
  – Prediction
• Causal explanation
  – Mechanism by which cause works
  – Mediation

Experiment
• Vary something to discover effects
• Limited generalizability
• Good for causal description, not explanation
• Natural science control through precise measurement
  – Sterile test tubes, electronic instruments

Three Elements of Causality
• Cause and effect are related
• Cause preceded effect
• No plausible alternative explanations
• John Stuart Mill

Nonexperimental Research Strategy
1. Determine covariation
2. Test for time sequence
   • Longitudinal design
   • Quasi-experiment
3. Rule out plausible alternatives
   • Based on data/theory
   • Logical

Inus Condition
• Multiple causes/mechanisms for a phenomenon
  – The same thing can occur for different reasons
  – Sufficient but unnecessary conditions
  – Multiple motives
  – People do things for different reasons
• Are social phenomena a hierarchy of inus conditions?
• Should we expect strong relationships between variables if inus conditions exist?
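
The last question on the Inus Condition slide can be made concrete with a small simulation. This is a hypothetical model, not one from the course materials. It assumes an outcome that is the equally weighted sum of k independent, perfectly measured, normally distributed causes. Under those assumptions, any single cause should correlate with the outcome at about √(1/k). The names `pearson_r` and `simulate_inus` are illustrative only.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation computed from scratch (standard library only)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def simulate_inus(k_causes, n_people=20_000, seed=1):
    """Outcome = sum of k independent, equally weighted standard-normal causes.

    Returns the observed correlation of ONE cause with the outcome and the
    value implied by the model, sqrt(1 / k).
    """
    rng = random.Random(seed)
    causes = [[rng.gauss(0, 1) for _ in range(k_causes)] for _ in range(n_people)]
    outcome = [sum(row) for row in causes]
    first_cause = [row[0] for row in causes]
    return pearson_r(first_cause, outcome), math.sqrt(1 / k_causes)


for k in (1, 4, 15):
    observed, expected = simulate_inus(k)
    print(f"k = {k:2d}: observed r = {observed:.2f}, expected r = {expected:.2f}")
```

With k = 15, the model implies r ≈ .26. That is the same figure quoted on the Zapf et al. slide later in the deck. Real causes are neither independent nor equally weighted, so read this as an illustration of the logic rather than an estimate.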

Inus Conditions For Turnover
• Better job offer
• Bullying
• Disability
• Dissatisfaction
• Poor skill-job match
• Pursue other interests (e.g., Olympics)
• Spouse transfer
• Strategic reasons: part of plan

Confirmation/Falsification
• Observation used to
  – Confirm/support theories
  – Falsify/disconfirm theories
• Confirmation: all swans are white
  – Must observe all swans in existence
• Falsification: one black swan
  – Easier to falsify than confirm
  – Null hypothesis testing is disconfirmation
  – Based on construct validity
    • Poor measure might falsely falsify

Scientific Skepticism
• Science not completely based on objective reality
• Observations based on theory of construct
• Construct validity is theoretical interpretation of what numbers represent
• Theories could be wrong: biased measurement
  – NA as bias (Watson, Pennebaker, Folger)
  – Social constructions (Salancik & Pfeffer)
• Science based on trust in methods: faith
  – Experiments
  – SEM
  – Statistical control (Meehl)

Shadish et al. Skepticisms (p. 30)
• “…scientists tend to notice evidence that confirms their preferred hypotheses and to overlook contradictory evidence.”
• “They make routine cognitive errors of judgment”
• “They react to peer pressures to agree with accepted dogma”
• “They are partly motivated by sociological and economic rewards”

Meehl 1971: High School Yearbooks
• What is Meehl’s issue with Schwarz and common practice?
• Schwarz approach
  – Control SES because it relates to schizophrenia and social participation
  – Does not consider plausible alternatives
• Schwarz accepts without skepticism (Shadish)
• Meehl: no automatic inference machine
• Meehl: commonest methodological vice in social science research

Automatic Inference Machine
• Idea that statistics can provide tests for causation
• There is no such thing as a “causal test”
• There is no such thing as a “test” for mediation
• Statistical controls do not provide the “true” relationship between variables
• Statistics are only numbers: they don’t know where they came from
• Inference is in the design
• Inference is in the mind: logical reasoning
• Write “There is no automatic inference machine” 2 times

Commonest Methodological Vice
• What is the commonest methodological vice?
• Assuming certain variables are fixed and therefore must be causal
  – SES
  – Demographics
  – Personality
• But these variables can’t be effects.
• Can they?

Can Job Satisfaction Cause Gender?
• Correlation of gender and satisfaction = group mean differences
• Satisfaction can’t cause someone’s gender
• Satisfaction can be the cause of the gender distribution of a sample
• Suppose females have higher satisfaction than males
• Multiple reasons

Alternative Gender-Job Satisfaction Model
• Females more likely to quit dissatisfying jobs
• Dissatisfaction causes gender distribution
• Gender moderates relation of satisfaction with quitting
[Figure: satisfaction scores plotted for females and males]

More Alternatives
1. Women less likely to take dissatisfying job (better job decisions)
2. Women less likely to be hired into dissatisfying jobs (protected)
3. Women less likely to be bullied/mistreated
4. Women given more realistic previews (lower expectations)
5. Women more socially skilled at getting what they want at work

How To Use Controls
• Controls are great devices to test hypotheses/theory
• Rule in/out plausible alternatives
• Best based on theory
• Sequence of tests
• No automatic/blind use/inference
• Tests with controls not more conclusive, and often less

Control Strategy
1. Test that A and B are related
   • Salary relates to job satisfaction
2. Confirm/disconfirm control variable
   • Gender relates to both
3. Generate/test alternative explanations for control variable
   • Differential expectations
   • Differential hiring rate
   • Differential job experience
   • Differential turnover rate

Week 3: Validity and Threats To Validity
• Validity
  – Interpretation of constructs/results
  – Inference based on purpose
    • Hypothesized causal connections among constructs
    • Nature of constructs
    • Population of interest
      – People
      – Settings
• Not a property of designs or measures themselves

Four Types of Design Validity
• Statistical conclusion
  – Appropriate statistical method to make desired inference
• Internal validity
  – Causal conclusions reasonable based on design
• Construct validity
  – Interpretation of measures
• External validity
  – Generalizability to population of interest

Threats to Validity
• Statistical conclusion
  – Statistics used incorrectly
  – Low power
  – Poor measurement
• Internal validity
  – Confounds of IV with other events, variables
  – Group differences (pre-existing or attrition)
  – Lack of temporal order
  – Instrument changes

Threats To Validity 2
• Construct validity
  – Inadequate specification of theoretical construct
  – Unreliable measurement
  – Biases
  – Poor content validity
• External validity
  – Inadequate specification of population
  – Poor sampling of population
    • Subjects
    • Settings

Points From Shadish et al.
• Evaluation of validity based on subjective judgment
• Scientists conservative about accepting results/conclusions that run counter to belief
• Scientists market their ideas; try to convince colleagues
• Design controls preferable to statistical controls
• Statistical controls based on assumptions
  – Untrue
  – Untested
  – Untestable

Points From Shadish et al. 2
• People in same workplace more similar than people across workplaces
  – When might this be true?
• Multiple tests
  – Probability compounding assumes independent tests
• Molar vs. molecular
  – First determine molar effect
  – Then break down to determine molecular elements

Qualitative Methods
• What are qualitative methods?
  – Collection/analysis of written/spoken text
  – Direct observation of behavior
• Participant observation
• Case study
• Interview
• Written materials
  – Existing documents
  – Open-ended questions

Qualitative Research 2
• Accept subjectivity of science
  – Is this an excuse?
• Less driven by hypothesis
• Assumption that reality is a social construction
  – If no one knows I’ve been shot, am I really dead?
• Interested in subject’s viewpoint
• More open-ended
• More interested in context
• Less interested in general principles
• Focus more on interpretation than quantification

Analysis
• Content analysis
  – Interviews
  – Written materials
  – Open-ended questions
  – Audio or video recordings
  – Quantifying
    • Counts of behaviors/events
    • Categorization of incidents
    • Multiple raters with high agreement
• Nonquantitative
  – Analysis of case
  – Narrative description

The Value of the Qualitative Approach
• What is the value/use of this approach?
• Is this science?
• Must everything be quantified?

Qualitative Organizational Research: Job Stress
• Quantitative surveys dominate
• Role ambiguity (RA) and role conflict (RC) dominated in 1980s & 1990s (Katz & Kahn)
• Dominated by the weak scales of Rizzo et al.
• Studies linked RA & RC to potential consequences and moderators
• Qualitative approach (Keenan & Newton, 1985)
• Stressful incidents

Keenan & Newton’s SIR
• Stress Incident Record
  – Describe event in prior 2 weeks
  – Aroused negative emotion
• Top stressful events for engineers
  – Time/effort wasted
  – Interpersonal conflict
  – Work underload
  – Work overload
  – Conditions of employment
• RA & RC rare (1.2% and 4.3%)

Subsequent SIR Research
• Comparison of occupations
  – Clerical: work overload, lack of control
  – Faculty: interpersonal conflict, time wasters
  – Sales clerks: interpersonal conflict, time wasters
• Informed subsequent quantitative studies
  – Focus on more common stressors
    • Interpersonal conflict
    • Organizational constraints
  – Forget RA & RC

Cross-Cultural SIR Research
• Comparison of university support staff
• India vs. U.S.

Stressor                  India    U.S.
Overload                   0%      25.6%
Lack of control            0%      22.6%
Lack of structure         26.5%     0%
Constraints (equipment)   15.4%     0%
Conflict                  16.5%    12.3%

Research As Craft
• Scholarly research as expertise, not a bag of tricks
• Logical case
• Go beyond sheer technique
  – Research not just formulaic/trends
  – Not just using right design, measures, stats
  – Can’t go wrong with Big Five, SEM, meta-analysis
  – SEM on meta-analytic correlation matrix of Big Five

Developing the Craft
• Experience
• Trying different things
  – Constructs
  – Designs/methods
  – Problems
  – Statistics
• Reading
• Reviewing
• Teaching
• Thinking/discussing
• Courses necessary but not sufficient
• Lifelong learning: you are never done

Developing the Craft 2
• Field values novelty and rigor
• Don’t be afraid of exploratory research
  – Not much contribution if answer known in advance
• Look for surprises
• Don’t be afraid to follow intuition
• Ask interesting questions without a clear answer
• Focus on interesting variables
• Good papers tell stories
  – Variables are characters
  – Relationships among variables

Week 4: Construct & External Validity and Method Variance

Constructs
• Theoretical level
  – Conceptual definitions of variables
  – Basic building blocks of theories
• Measurement level
  – Operationalizations
  – Based on theory of construct

What We Do With Constructs
• Define
• Operationalize/measure
• Establish relations with other constructs
  – Covariation
  – Causation

Construct Validity
• Case based on weight of evidence
• Theory of the construct
  – What is its nature?
  – What does it relate to?
• Strength based on
  – Adequate definition
  – Adequate operationalization
  – Control for confounding

Steps To Building the Case
1. Define construct
2. Operationalize construct: scale development
3. Construct theory: what it relates to
4. Validation evidence
   1. Correlation with other variables
      1. Cross-sectional
      2. Predictive
   2. Known groups
   3. Convergent validity
   4. Discriminant validity
   5. Factorial validity

Points By Shadish et al.
• Construct confounding
  – Assessment of unintended constructs
  – SD (social desirability) and NA (negative affectivity)
  – Race and income
  – Height and gender
• O = T + E + Bias (observed score = true score + error + bias)
  – Bias = extra unintended stuff

Points by Shadish 2
• Mono-operation bias
  – Not clear on what it is
• Advocates converging operations
• Multiple operationalizations
• What is a different operationalization?
  – Different item formats
  – Different raters
  – Different experimenters
  – Different training programs

Points by Shadish 3
• Compensatory equalization: extra to control group
• Compensatory de-equalization: extra to experimental group
• FMHI study
  – Random assignment to FMHI vs. state hospital
  – Staff violated random assignment

Construct Validity: Example of Weak Link
• Deviance: violation of norms
• Theoretical construct weakness
  – Whose norms?
    • Society, organization, workgroup
• Operationalization weakness
  – List of behaviors with no reference to norms
  – Norms assumed from behavior
• Retaliation: response to unfairness
  – Asks behaviors plus motive
  – Retaliation mentioned in instructions

External Validity
• Link between sample and theoretical population
• Define theoretical population
• Identify critical characteristics
• Compare sample to population
  – Employed individuals
  – Do students qualify?

External Validity 2
• Generalizability
  – People
  – Settings
  – Organizations
  – Treatments
  – Outcomes
• Exploring generalizability
  – Compare subgroups

Facts Are the Enemy of Truth When Facts Oppose Belief
• Gender bias in medical studies (Shadish p. 87)
• Women are neglected in medical research
• Treatments not tested on women
• New grant rules require women
• Study of 724 studies (Meinert et al.)
  – 55.2% both genders
  – 12.2% males only
  – 11.2% females only
  – 21.4% not specified
  – 355,000 males, 550,000 females

When Politics Attack Science
• IQ and performance
• Differential validity of IQ tests
• Others?

Method Variance
• Method variance: variance attributable to the method itself rather than the trait
• Campbell & Fiske 1959
• Assumed to be ubiquitous
• $V_{Total} = V_{Trait} + V_{Error} + V_{Method}$

Campbell & Fiske, 1959
• “…features characteristic of the method being employed, features which could also be present in efforts to measure other quite different traits.”

Common Method Variance
• CMV or mono-method bias
• When method component shared
• $V_{Total} = V_{Trait} + V_{Error} + V_{Method}$
• Assumes same method has same $V_{Method}$
• Assumed to inflate correlations
• Only raised with self-reports

Evidence Vs. Truth
• Truth: CMV = everything with same method correlated
• Evidence: Boswell et al., JAP 2004, n = 1601

                             1      2      3      4
1. Leverage seeking
2. Separation seeking      -.04
3. Career satisfaction     -.10   -.14
4. Perceived alternatives   .07   -.09    .20
5. Reward importance        .16    .05   -.03    .02

Potential Universal Biases
• Truth: specific biases widespread
• Evidence
  – Social desirability meta-analysis
    • Moorman & Podsakoff
    • Mean r = .05
    • Highest: -.17 role ambiguity; .17 job satisfaction
    • Lowest: .01 performance
    • Lower with employees (.03) than students (.09)
  – Social desirability control study
    • Role clarity-job satisfaction
    • r = .46 to .45 (when SD controlled)

Single-Source Vs. Multi-Source
• Truth: multisource correlations smaller
• Evidence: Crampton & Wagner meta-analysis
  – Compared single-source vs. multi-source
  – 26.6% single-source higher (job satisfaction and performance)
  – 62.2% no difference (job satisfaction and turnover)
  – 11.2% multi-source higher (job satisfaction and absence)

Evidence-Based Conclusions
• Measures subject to bias
• Depends on construct AND method
• Shared biases can inflate correlations
  – Social desirability
  – Negative affectivity
• Are they really bias?
• Unshared biases can attenuate correlations

Solutions
• Eliminate term “method variance”
• 3rd variables
• Construct validity and potential 3rd variables
• Interpret results cautiously
• Choose methods to control feasible 3rd variables
• Alternate sources
  – Not always accurate
• Converging operations
• Use strategy of first establishing correlation
  – Rule out 3rd variables in series of steps

Week 5: Quasi-Experimental Design
• What is an experiment?
  – Random assignment
  – Creation of conditions?
  – Naturally occurring experiment

Quasi-Experiment
• Design without random assignment
• Comparison of conditions
• Researcher created or existing
• Can characteristics of people be an IV?
  – Gender
  – Personality
• Is a survey a quasi-experiment?

Settings
• Laboratory vs. field
• Laboratory
  – Setting in which phenomenon doesn’t naturally occur
• Field
  – Setting in which phenomenon naturally occurs
• Classroom is field for educational psychologist
• Classroom is lab for us

Lab vs. Field Strengths/Weaknesses
• Lab
  – High level of control
  – Easy to do experiments
  – Limits to what can be studied
  – Limited external validity of population/setting
• Field
  – Limited control
  – Difficult to do experiments
  – Wide range of what can be studied
  – High reliance on self-report
  – High external validity

Lab in I/O Research
• What’s the role of lab in I/O research?
• Stone suggests lab is as generalizable as field. Do you agree?
• Stone says I/O field biased against lab. Is it?
• When should we do lab vs. field studies?

Quasi-Experimental Compromise
• Quasi-experiments
  – Compromise when true experiment isn’t possible
  – Built-in confounds
• Requires more data than experiment to rule out confounds
• Inference complex
• Logic puzzle, not cookbook
• Can’t just assume IV caused DV

Quasi-Experiment and Control
• Use of design AND statistical controls
• “Statistical adjustment only after the best design controls have been used” (Shadish, p. 161)
• Control through comparison groups
• Control through retesting
  – Pretest-posttest
  – Multiple pretests/posttests
  – Long-term follow-ups
  – Trends
• Statistical control: 3rd variables & potential confounds

Single Group Designs
• Posttest only: X O
  – When (if ever) is this useful?
• Pretest-posttest: O X O
  – When is this useful?
  – What are the limitations?

Nonequivalent Groups Design
• Preexisting groups assigned treatment vs. control
    Treatment:  X O
    Control:      O
• Establishes difference between groups
• Limited inference

Nonequivalent Groups Design Limitations
• What are the main limitations?
  – Groups could have been different initially
  – Interaction of group characteristics and treatment
  – Differential history causing differences

Coping With Preexisting Group Differences
1. Assess preexisting differences
   • Pretest
2. Assess trends
   • Multiple pretests and posttests
3. Assign multiple groups
   • Random assignment of groups if possible
4. Replicate
5. Additional control groups
6. Matching
7. Statistical adjustment of potential confounds
8. Switching replications: give treatment to control group

Matching
• Selecting similar participants from each group
  – Choose one or more matching variables
  – Assess variables
  – Choose pairs that are close matches
• Difficult to match on multiple variables
  – Sample size reduction
• Might bias samples
  – High in one sample, low in another
  – Meaning of high/low can vary
  – LOC: a score that is internal for a Chinese sample can be external for a New Zealand sample

Case-Control Design
• Compare sample meeting criterion with sample not meeting it
• Must match to same population
  – Employees who quit vs. all other employees
  – Employees who were promoted vs. other employees
  – CEOs vs. line employees
  – Employees assaulted/bullied vs. others
• Assess other variables to compare

Case-Control
• Typically we have the case sample at hand
• Controls may not be easily accessible
• Often cases compared to a “normal” population
  – Cancer patients vs. norms for general public
• Could compare cases in organization with employees in general
  – E.g., absence in case group vs. absence rate in company

Is Case-Control Useful To Us?
• What might we use this design to study in organizations?
• What is the case group?
• What is the control group?
• What variables do we compare?
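
The Matching slide's steps can be sketched as a greedy one-to-one nearest-neighbour match on a single variable: choose a matching variable, assess it, and pair close matches. The sketch also shows the slide's warning about sample-size reduction. This is one common way to form matched pairs, not a method prescribed in the course. The function name `greedy_match`, the caliper rule, and the tenure values below are hypothetical.

```python
def greedy_match(treated, controls, caliper):
    """Greedy 1:1 nearest-neighbour matching on one matching variable.

    treated, controls: dicts mapping a participant id to that person's score
    on the matching variable. caliper: the largest score difference that
    still counts as a "close match". Each control is used at most once, and
    treated cases with no control inside the caliper are dropped.
    """
    available = dict(controls)  # copy so the caller's dict is not modified
    pairs = []
    # Process treated cases in score order so results are reproducible.
    for t_id, t_score in sorted(treated.items(), key=lambda item: item[1]):
        if not available:
            break  # ran out of controls
        # Closest remaining control on the matching variable.
        c_id = min(available, key=lambda cid: abs(available[cid] - t_score))
        if abs(available[c_id] - t_score) <= caliper:
            pairs.append((t_id, c_id))
            del available[c_id]
    return pairs


# Hypothetical data: years of tenure in a treated and a comparison group.
treated = {"T1": 2.0, "T2": 5.5, "T3": 12.0}
controls = {"C1": 1.8, "C2": 5.0, "C3": 6.1, "C4": 3.0}

pairs = greedy_match(treated, controls, caliper=1.0)
print(pairs)  # → [('T1', 'C1'), ('T2', 'C2')]
print(f"Kept {len(pairs)} of {len(treated)} treated cases")
```

T3 is dropped because no comparison employee has similar tenure. That is the sample-size reduction the slide warns about. Matching on several variables at once would need a combined distance measure. Greedy results also depend on processing order; optimal-matching procedures exist, but they are beyond this sketch.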

Limits To Case-Control Design
• Defining groups from same population
• Effect size uncertain
  – All cases have X
  – Small proportion of people with X are cases
  – Asymmetrical prediction
• Groups may differ on more than case variable
• Retrospective assessment of supposed cause
  – Quitting caused report of lower satisfaction

Week 6: Design
• Experimental design
  – Random assignment
  – Creation of conditions
• Randomized experiment
  – Time sequence built into design
  – Still must rule out plausible alternatives
    • Construct validity of IV and DV
    • External validity for lab studies
• Is “real science” so real?

Random Assignment
• Random sample (external validity)
• Random assignment (internal validity)
  – Probability of assignment equal
  – Expected value of characteristics equal
  – Not all variables equal
• Type 1 errors
• Faith in random assignment
• Differential attrition

Control Groups
• No treatment
• Waiting list
• Placebo treatment
• Currently accepted treatment
• Comparisons to isolate variables

Bias In Experiments
• Construct validity of DV and IV
• Bias in assessment of DV
• Bias/confounding in IV
• Bias affects
  – Subjects
  – Experimenters
  – Samples
  – Conditions (contamination and distortion)
  – Designs
  – Instruments

Humans Used As Instruments
• Self vs. other reports
• Bias in judgments of others
  – Schema & stereotypes
  – Implicit theories
  – Attractiveness
    • Pretty blondes are dumb
  – Physical ability
    • Athletes are dumb
  – Height
    • Tall are better leaders

Demand Characteristics
• Implicit meaning of experimental condition
• IV not accurately perceived
• Subject motivated to do well
• Subject tries to figure out experiment
  – Response not natural for situation

Lie Detection Lab Vs. Field
• Lab study
• Two trials of detection
• Detected on Trial 1: easier to fool on Trial 2
• Fooled on Trial 1: easier to detect on Trial 2
• Opposite to field experience
• Hypothesized that motive is important
  – Want to fool: being detected makes it harder to fool
  – Want to be detected: being detected makes it easier to fool

Lie Detection Study 2
• 2 trials x 2 conditions
  – Told intelligent people can fool
    • Anxious when caught
  – Told sociopaths can fool
    • Relax when caught

Percent caught on Trial 2
Motive                Caught Trial 1   Fooled Trial 1
Fool (intelligent)         94%              19%
Catch (sociopath)          25%              88%

Subject Expectancies
• Hawthorne effects
  – Knowledge of being in an experiment
  – Does this really happen?
• Placebo effects
• Blind procedures

Experimenter Effects
• Observer errors
  – Late 1700s Greenwich Observatory
  – Maskelyne fires Kinnebrook for errors
  – Astronomer Bessel: widespread errors
  – About 1% of observations have errors
  – 75% in direction of hypothesis
• Experimenter expectancy: self-fulfilling prophecy
• Clever Hans
• Dull/bright rat study
• Double-blind procedure

Experimenter Behavior
• Smiling at subjects
  – 12% at males
  – 70% at females
• Mixed-gender subject-experimenter (S-E) pairs take longer to complete
• Videotape of S-E interactions (female E)

Subject           Auditory       Visual
Male subject      Friendly       Nonfriendly
Female subject    Nonfriendly    Friendly

Cross-Sectional Design
• All data at once
• Variables assessed once
• Most common design in I/O & OB/HR
• Often done with questionnaires
• Can establish relationships
• Cannot rule out most threats
• Cheap and efficient
• Good first step

Data Collection Method
• Ways of collecting data
  – Self-report questionnaire
    • Formats
  – Interview
    • Degree of structure
  – Observation
    • Behavior checklist vs. rating
  – Open-ended questionnaire

Data Source
• Incumbent
• Supervisor
• Coworker
• Significant other
• Observer
• Existing materials
  – Job description

Single-Source
• All data from one source
• Usually also mono-method
• Usually survey
• Many areas usually self-report
  – Well-being
• Some areas usually other-report
  – Performance

Multi-Source
• Same variables from different sources
  – Convergent validity
  – Confirmation of results
• Different variables from different sources
  – Rules out some biases and 3rd variables
  – Some biases can be shared
• Not a panacea

Bias Can Affect All Raters
• Self-efficacy: outward signs of confidence
  – Gives impression of effortless performance
  – Coworker perception of employee’s constraints
    • Doesn’t appear to have constraints
  – Supervisor perceptions of performance
    • Looks like a great performer
[Figure: three models linking constraints, job performance, and self-efficacy, differing only in data source: (1) all self-report; (2) constraints rated by coworker, job performance and self-efficacy self-reported; (3) constraints rated by coworker, job performance by supervisor, self-efficacy self-reported]

Week 7: Longitudinal Designs
• Design introducing element of time
• Same variable measured repeatedly
• Different variables separated in time
  – Turnover
• How much time is needed to be longitudinal?
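
The Bias Can Affect All Raters slide (Week 6) argues that getting variables from different sources does not guarantee independence. A small simulation under hypothetical assumptions shows how. Actual constraints and actual performance are generated as unrelated. An employee's self-efficacy then lowers the constraints a coworker reports and raises the performance a supervisor reports. The weights (`bias_weight = 0.6`, `noise_sd = 0.5`) and all function names are illustrative, not estimates from any study.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation computed from scratch (standard library only)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def simulate_shared_bias(n=20_000, bias_weight=0.6, noise_sd=0.5, seed=7):
    """Return (r between actual constraints and actual performance,
    r between coworker-rated constraints and supervisor-rated performance)."""
    rng = random.Random(seed)
    actual_c, actual_p, coworker_c, supervisor_p = [], [], [], []
    for _ in range(n):
        c = rng.gauss(0, 1)   # actual constraints
        p = rng.gauss(0, 1)   # actual performance, unrelated to c
        se = rng.gauss(0, 1)  # employee self-efficacy (shared influence)
        actual_c.append(c)
        actual_p.append(p)
        # Confident employees look unconstrained to coworkers...
        coworker_c.append(c - bias_weight * se + rng.gauss(0, noise_sd))
        # ...and look like great performers to supervisors.
        supervisor_p.append(p + bias_weight * se + rng.gauss(0, noise_sd))
    return pearson_r(actual_c, actual_p), pearson_r(coworker_c, supervisor_p)


r_actual, r_rated = simulate_shared_bias()
# Model-implied value: -w^2 / (1 + w^2 + s^2) with w = 0.6, s = 0.5.
expected = -(0.6 ** 2) / (1 + 0.6 ** 2 + 0.5 ** 2)
print(f"actual constraints vs. actual performance: r = {r_actual:.2f}")
print(f"coworker vs. supervisor ratings:           r = {r_rated:.2f}")
print(f"expected from the model:                   r = {expected:.2f}")
```

Under these assumptions, constraints and performance come from different raters, yet they still correlate at about -.22. The correlation arises only because both raters respond to the same visible confidence. Setting `bias_weight=0.0` makes it vanish.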

Advantages of Longitudinal Design
• Can establish relationships
• Can sometimes establish time sequence
• Can rule out some plausible alternatives
  – Some biases
  – Occasion factors
  – Mood

Proper Time Sequence
• Before and after an event
  – Turnover
    • Precursors assessed prior
  – Job satisfaction
    • Difficult to know when satisfaction occurred
• Arbitrary points in time not helpful
  – Steady state results same as cross-sectional

Predicting Change
• Showing that X predicts change in Y
• Relation of X & Y controlling for prior levels
• Weak evidence for causality
• Regression to mean effects
• Basement/ceiling effects

Attrition Problem
• Attrition between time periods
  – From organization
  – From study
• Attrition not random
• Mean change due to attrition
• Interaction of attrition and variables
  – Those most/least affected quit

Practical Issues
• Tracking subjects
• Matching responses
  – Loss of anonymity
  – Use of secret codes
• Subject might not remember
  – Anonymous identifiers
    • First street lived on
    • Name of first grade teacher
    • Grandmother’s first name
• Participation incentives
• Time to complete study

Pretest-Posttest Design
• Single group
    O1 X O2
• Two group
    O1 X O2
    O1   O2

Trends Over Time
• Single-group time series
    O1 O2 O3 X O4 O5 O6
• Multigroup time series
    O1 O2 O3 X O4 O5 O6
    O1 O2 O3   O4 O5 O6

Discontinuity
• Change in trend around X
• Single group
  – Can’t rule out other causes
• Multigroup
  – Control group to rule out alternatives

Zapf et al.
• Stress area
• Relationships small over time
• Inus conditions
  – Strains caused by 15 factors
  – Each accounts for about 7% of variance
  – .26 correlation if measurement perfect (√.07 ≈ .26)
• Attrition of least healthy
• Relationships not always linear

Curvilinear Stressor-Strain
• Two studies
  – CISMS2: Anglo, n = 1470
  – Spector-Jex, 1991, n = 232
• Stressors
  – Conflict, constraints, role ambiguity, workload
• Strains
  – Anxiety, anger, depression, frustration, intent, job satisfaction, symptoms

Analyses
• Curvilinear regression
• $Strain = Stressor + Stressor^2$
• Plot by substituting values of Stressor
  – Similar to plotting moderated regression

Example
• $Y = 10 - 2X + .2X^2$
• X ranges from 0 to 20
• Substitute values 5 points apart (0, 5, 10, 15, 20)

Computations (b1 = -2, b2 = .2)
X     b1X    X^2    b2X^2    b1X + b2X^2    10 + b1X + b2X^2
0       0      0       0          0               10
5     -10     25       5         -5                5
10    -20    100      20          0               10
15    -30    225      45         15               25
20    -40    400      80         40               50

[Figure: Illustration of curvilinear regression, Ŷ plotted against X]

Results
• Significance for workload
• Limited significance for
  – Conflict
  – Constraints
  – Role ambiguity

Strain             CISMS   Spector-Jex   Direction
Anxiety            ns      *             U
Frustration        --      *             U
Intent             *       *             U
Job satisfaction   *       *             Inverted U
Symptoms           *       ns            U

Week 8: Field Research and Evaluation
• Field research
  – Done in naturalistic settings
    • Experimental
    • Quasi-experimental
    • Observational
• Evaluation
  – Organizational effectiveness
  – Figuring out if things work
    • Organizations
    • Programs
    • Interventions

Challenges To Field Research
• Access to organizations/subjects
• Lack of control
  – Distal contact with subjects (surveys)
  – Who participates
  – Contaminating conditions
    • Participants discussing study
• Lack of full cooperation
• Organizational resistance to change

Creative and Varied Approaches

Accessing Subjects
• Define population needed for your purpose
  – People
  – Jobs
  – Organizations
• List likely locations to access populations
• Consider ways to access locations

Defining Populations: People
• Characteristics of people
  – Demographics
    • Age, education, gender, race
  – KSAOs
  – Occupations
• Do variables of interest vary across occupations?
• Single or multiple occupations
• Single controls variety of factors
• Multiple
  – More variance
  – Tests of occupation differences
  – Greater generalizability

Defining Populations: Organizations
• Characteristics of organizations needed
  – Occupations represented
  – Characteristics of people represented
  – Characteristics of practices
• Single versus multiple organizations
  – Single adds control
  – Multiple adds
    • Variance
    • Tests of organization characteristics
    • Generalizability

Accessing Participants: Students
• Psychology student subject pool
• Employed students in classes, e.g., night
• Advantages/limitations
  – Easily accessed on many campuses
  – Cheap
  – Cooperative
  – Younger and more educated than average
  – Heterogeneous jobs/organizations
  – Often part-time and temporary jobs
  – Potential work-school conflict

Accessing Participants: Nonstudents
• Organizations: access can be a problem
• Association mailing lists: single occupations
• Web search: government employees
• Clubs, churches, nonwork organizations
• Unions
• General surveys
  – Phone, mail, door-to-door, street corner

Approaching Organizations
• Sell to management
• Appeal to value of science not ideal
• What’s in it for them?
• Partnership
  – Free service: employee survey, job analysis
  – Address their problem
  – Piggyback your interest

Modes of Approach
• Personal contact: networking
  – Give talks to local managers, e.g., SHRM
  – Students in class
  – Approach based on known need
    • Hospitals and violence
• Consider the audience
  – Psychologist vs. nonpsychologist
  – HR vs. non-HR
  – Level of sophistication about problem
  – Don’t assume you know more than organization about their problem

Project Prospectus
• One-page nontechnical prospectus
  – Purpose: clear and succinct
  – What you need from them
  – What it will cost (e.g., staff time)
  – What’s in it for them
  – What products you will provide to them
  – Timeline

Example
• Determine factors leading to patient assaults on nurses in hospitals
• Need to survey 200 nurses with questionnaire
• Questionnaire will take 10-15 minutes
  – Can be taken on break or at home
• Will provide report to organization about
  – How many nurses have been assaulted
  – The impact of the assaults on them
  – Factors that might be addressed to reduce the problem
• Would like to conduct study next month and provide report within 60 days of completion

Partnerships
• Academics and nonacademics
• Projects come from mutual interests
• Piggyback onto organizational project
• Internship
  – Johannes Rank’s training evaluation
• Issues
  – Proprietary results
  – Organizational confidentiality

Program Evaluation/Organizational Effectiveness
• Program evaluation
  – Education
  – Human services
  – Determining if program is effective
• Organizational effectiveness
  – More generic
  – Determining effectiveness of organization
  – Determining effectiveness of activity/unit

Formative Approach
• Focus on processes
• Often used in developmental approach
• Can be qualitative
• Can be quantitative
• Action research
  – Identify problem, try solution, evaluate, revise

Summative Approach
• Assess if things work
• Often quantitative
• Experimental or quasi-experimental design
• Compare to control group/s
• Utility
  – Return on investment (ROI)
    • Private sector
    • Profitability
  – Cost/outcome (bang for buck)
    • Military: literally
    • Nonmilitary: cost/unit of outcome

Steps In Determining Effectiveness
1. Define goals/objectives
2. Determine criteria for success
3. Choose design
   1. Single group vs. multigroup
4. Pick measures
5. Collect data
6. Analyze/draw conclusion
7. Report/feedback
8. Program improvement

Week 9: Survey Methods & Constructs
• Survey methods
• Sampling
• Cross-cultural challenges
  – Measurement equivalence/invariance
• Causal vs. effect indicators
• Artifactual constructs

Survey Settings
• Within employer organization
• Within other organization
  – University
  – Professional association
  – Community group
  – Club
• General population
  – Phone book
  – Door-to-door

Methods
• Questionnaire
  – Paper-and-pencil
  – E-mail
  – Web
• Interview
  – Face-to-face
  – Phone
  – Video-phone
  – E-mail
  – Instant message

Population
• Single organization
• Multiple organizations
  – Within industry/sector
• Single occupation
• Multiple occupations
• General population
  – Employed students

Sample Versus Population
• Survey everyone in population vs. sample
  – From single organization or unit of organization
    • Often survey goes to everyone
  – From multiple organizations
    • Kessler: all psychology faculty
  – From other organization
    • Professional association
    • Often survey everyone
  – General population

Sampling Definitions
• Population
  – Aggregate of cases meeting specification
    • All humans
    • All working people
    • All accountants
  – Not always directly measurable
• Sampling frame
  – List of all members of a population to be sampled
  – List of all USF faculty

Sampling Definitions cont.
• Stratum
  – Segment of a population
    • Divided by a characteristic
  – Demographics
    • Male vs. female
  – Job level
    • Manager vs. nonmanager
  – Job title
  – Occupation
  – Department/division of organization

Representativeness of Samples
• Representative
  – Sample characteristics match population
• Nonrepresentative
  – Sample characteristics do not match population
• Some procedures more likely to yield representative samples

Nonprobability Sampling
• Nonprobability sample
  – Not every member of population has an equal chance
• Representativeness not assured
• Types
  – Accidental or convenience
  – Snowball
  – Quota: accidental but stratified
    • Choose half male/female
  – Purposive: handpick cases that meet criteria
    • Pick full-time employees in a class

Probability Sampling
• Random sample from defined population
• Stratified random sample
  – More efficient than random
• Cluster or multistage
  – Random selection of aggregates
    • Select organizations stratified by industry

International Research Methods
• Cross-cultural vs. cross-national (CC/CN)
• Purposes
  – Research within a country/culture (emic)
  – Test finding/theory CC/CN
  – Compare countries/cultures
  – Test culture hypotheses across groups defined by culture

CC/CN Differences
• Within country
• Across countries
• Across regions
  – North America vs. Latin America

Challenges of CC/CN Research
• Equivalence of samples
• Equivalence of measures
  – MEI

Sample Equivalence
• Confounding of country with sample characteristics
  – Occupations
    • Can vary across countries
  – Industry sectors
    • Private sector doesn’t exist universally
  – Organization characteristics
  – People characteristics (e.g., demographics)
    • Gender breakdown differs across countries

Instrument Issues
• Linguistic meaning
  – Translation
  – Back-translation
• Calibration
  – Numerical equivalence
  – Cultural response tendencies
    • Asian modesty
    • Latin expansiveness
• Measurement equivalence
  – Construct validity
  – Factor structure

Measurement Equivalence/Invariance (MEI)
• Construct validity
  – Same interpretation across groups
• SEM and IRT approaches
  – Based on item inter-relationship similarity
  – Factor structure
  – Item characteristic curves

SEM Approach
• Equality of variance/covariance
• Equal corresponding loadings
• Form invariance
  – Equal number of factors
  – Same variables load per factor

IRT Approach
• Equivalent item behavior
• For unidimensional scales
• Better developed for ability tests

Eastern versus Western Control Beliefs at Work
Paul E. Spector, USF
Juan I. Sanchez, Florida International University
Oi Ling Siu, Lingnan University, Hong Kong
Jesus Salgado, University of Santiago, Spain
Jianhong Ma, Zhejiang University, PRC
Applied Psychology: An International Review, 2004

Background
• Cross-cultural study of control beliefs
• Americans vs. Chinese
• Locus of control beliefs vary
  – Chinese very external vs. Americans
  – Suggests Chinese passive view of world
  – Look to others for direction

Primary Vs.
Secondary Control • Primary: Direct control of environment • Secondary: Adapt self to environment – – – – Predictive: Enhance ability to predict events Illusory: Focusing on chance, i.e., gambling Vicarious: Associate with powerful others Interpretive: Looking for meaning • Asians more secondary • Rothbaum, Weisz, & Snyder Socioinstrumental Control • • • • Control through social networks Build social networks Cultivate relationships Juan Sanchez Purpose • Develop/validate new control scales – Secondary control – Socioinstrumental control • Avoid ethnocentricism by using international item writers Pilot Study Method • • • • Develop definitions of constructs International team wrote 87 items Administered 126 Americans Item analysis Sample Items Secondary • I take pride in the accomplishments of my superiors at work (Vicarious control) • In doing my work, I sometimes consider failure in my work as payment for future success (Interpretative control) Socioinstrumental • It is important to cultivate relationship with superiors at work if you want to be effective • You can get your own way at work if you learn how to get along with other people Pilot Study Results • Secondary control scale – 11 items – Alpha = .75 – R = -.44 Work LOC • Socioinstrumental control 24 items – Alpha = .87 – R = .26 Work LOC • Two scales r = .12 (nonsignificant) Main Study Method • Subjects from HK, PRC, US – Ns = 130, 146, 254 – Employed students & university support • Work LOC & New Scales • Stressors – Autonomy, conflict, role ambiguity & conflict • Stains – Job satisfaction, work anxiety, life satisfaction Coefficient Alphas Scale HK PRC US Secondary .87 .70 .76 Socioinstrumental .91 .88 .91 Mean Differences HK PRC US R2 Second 43.8A 46.0B 45.6B .02 Socio 93.4A 97.1B 91.9A .01 Work LOC 51.0B 57.0C 40.2A .38 Variable Correlations With Work LOC Variable HK PRC US Secondary .33 -.55 -.21 Socioinstrumental .51 -.59 .23 Significant Correlations Variable HK PRC US Job sat, Autonomy Job sat All 
Socioinstrumental Role conflict Job sat Autonomy Work LOC Job sat, Autonomy, Conflict None All Secondary Conclusions • • • • Procedure created internally consistent scale Little mean difference China vs. US Work LOC huge mean difference Relationships different across samples Causal Vs. Effect Indicator • • • • Reflective Vs. Formative Nature of indicators Determines meaningful statistics Affects conclusions Effect or Reflective Indicator • Indicator caused by or reflects underlying construct • Change in construct Change in indicators • Classical test theory • Measures of attitudes and personality • Needs internal consistency • Factor analysis meaningful Causal or Formative Indicator • • • • • • • Indicator defines underlying construct Items don’t reflect single construct Items not interchangeable Change in indicator Change in construct Socio-economic status, Behavior checklists Internal consistency irrelevant Factor analysis might not be meaningful How Do You Know? • • • • Theoretical interpretation Are items equivalent forms of construct? Do items correlate? Time sequencing—which changed first? – Does increase in SES affect education and income equally? 
• No statistical test exists
• No automatic inference machine

Artifactual Constructs: Overinterpretation of Factor Analysis
• Tendency to assume factors = constructs
• If items load on different factors they reflect different constructs
• Sometimes item characteristics are confounded with factors
  – Wording direction

General Assumptions About Item Relationships
• Related items reflect same construct
• Unrelated items reflect different constructs
• Clusters of items reflect the same construct
• Factor analysis is magic

General Assumptions About Measurement
• People agree with items in direction of position
  – If I have a favorable attitude, I will agree with all favorable items
• People disagree with items opposite to direction of position
  – If I have a favorable attitude, I will disagree with all unfavorable items
• Responses to oppositely worded items are a mirror image of one another
  – If I moderately agree with positive items, I will moderately disagree with negative items

Ideal Point Principle: Thurstone
• Items vary along a continuum.
• People’s positions vary along a continuum
• People agree only with items near their position
• Oppositely worded items not always mirror image
• Items of same value relate strongly
• Items of different value relate weakly
[Figure: Agree vs. disagree plotted against item value on the construct continuum, relative to the person’s position]

Difficulty Factors
• Ability tests
• Items vary in difficulty
• Items of same difficulty relate well
  – Those who get one easy item right tend to get all easy items right
• Items of varying difficulty relate less well
  – Those who get hard items right tend to get easy items right
  – Those who get easy items right don’t all get hard items right

Example
People          Easy Items      Hard Items
Low Ability     80% Correct     0% Correct
High Ability    100% Correct    80% Correct

Effects On Statistics
• Easy items strongly correlated
• Hard items strongly correlated
• Easy items relate modestly to hard items
• Factor analysis produces factors based on difficulty
• Difficulty factors reflect item characteristics, not people characteristics

Summated Ratings
• Items of same scale value relate strongly
• Items of different value relate modestly
• Scatterplots triangular, not elliptical
  – High-Low, Low-High, and Low-Low common
  – Few High-High
• Often distributions are skewed
• Mixed value items produce factors according to scale value of item
• Might not reflect underlying constructs

Plot of Moderate Positively Vs. Negatively Worded Job Satisfaction Items
[Figure: Scatterplot of moderately worded job satisfaction items; x-axis: Negatively Worded Items, y-axis: Positively Worded Items; both axes 6 to 46]

Plot of Extreme Positively Vs. Negatively Worded Job Satisfaction Items
[Figure: Scatterplot; x-axis: Extreme Negatively Worded Item Score, y-axis: Extreme Positively Worded Item Score; both axes 6 to 46]

Conclusions
• Be wary of factors where content is confounded with item direction
• Be wary when assumption of homoscedasticity is violated
• Be wary when items are extremely worded
• Need more evidence than factor analysis

Week 10: Theory

What Is A Theory?
• Bernstein
  – Set of propositions that account for, predict, and control phenomena
• Muchinsky
  – Statement that explains relationships among phenomena
• Webster
  – General or abstract principles of science
  – Explanation of phenomena

Types of Theories
• Inductive
  – Starts with data
  – Theory explains observations
• Deductive
  – Starts with theory
  – Data used to support/refute theory

Common Usage of Theory
• Conjecture, opinion, speculation or hypothesis
  – Wikipedia

Advantages
• Integrates and summarizes large amounts of data
• Can help predict
• Guides research
• Helps frame good research questions

Disadvantages
• Biases researchers
• “Theory, like mist on eyeglasses, obscures facts” (Charlie Chan in Muchinsky)
• “Facts are the enemy of truth” (Levine’s boss)
• A distraction, as research does not require theory (Skinner)

Hypothesis
• Statement of expected relationships among variables
• Tentative
• More limited than a theory
• Doesn’t deal with process or explanation

Model
• Representation of a phenomenon
• Description of a complex entity or process
  – Webster
• Boxes and arrows showing causal flow

Theoretical Construct
• Abstract representation of a characteristic of people, situation, or thing
• Building blocks of theories

Paradigm
• Accepted scientific practice
• Rules and standards for scientific practice
• Law, theory, application and instrumentation that provide models for research
  – Thomas Kuhn

What Are Our Paradigms?
• Behaviorism?
• Environment-perception-outcome approach
• Surveys

Structure of Scientific Revolutions
Thomas Kuhn
“An apparently arbitrary element, compounded of personal and historical accident, is always a formative ingredient of the beliefs espoused by a given scientific community at a given time.” (p. 4)
“research as a strenuous and devoted attempt to force nature into the conceptual boxes supplied by professional education.” (p. 5)

History of Theory
• Behaviorism: Rejection of theory
  – More consistent with natural science
  – Avoid the unobservable
  – Dustbowl empiricism criticism
• “Cognitive revolution”: Embracing models and theory
  – Unobservables commonly studied
• Organizational research
  – Theory as paramount
• The empiricists strike back?
  – Hambrick and Locke

Current State of Theory
• Almost required in introductions
  – Marginalize importance of data
  – Ideas more important than facts
• Scholarship vs. Science
  – Scholarly writing: making good arguments
  – Scientific writing: describing/explaining phenomena based on data

Misuse of Theory
• Post hoc: Pretending theory drove research
• Citing theories as evidence
• Claiming hypothesis is based on a theory it is not based on
• Sprinkling cites to irrelevant theories
  – (Sutton & Staw)

Example from Stress Research
• Hobfoll’s Conservation of Resources (COR) Theory
  – People are motivated to acquire and conserve resources
  – Demands on resources and threats to resources are stressful
• People routinely cite COR theory in support of stressor-strain hypotheses
  – No measure of resources or threat
  – Using a theory to support a hypothesis that does not derive from the theory

Why Do People Do This?
• Pressure for theory
• Everyone else is doing it
  – Subjective norms
• Think this is real science
• Playing the game

Backlash
• Increasing criticism of the obsession with theory
  – Hambrick & Locke
  – Harry Barrick: Half-life of models in cognitive
  – AOM sessions
  – One unnamed reviewer
  – Informal interactions

Proper Role of Theory in Science
• Goal of science is to understand the world
• Science is evidence-based, not intuition-based
  – Data is the heart of science
  – Theory is current state of understanding how/why things work
• Theory is the tail, not the dog
• There is a place for both empiricism and theory

Natural Science
• More focused on data
• Longer timeframe
  – Decades and centuries of data before theory
• “Social science theory a smokescreen to hide weak data” (USF chemist)

Levels of Explanation
• Atomic or chemical
• Neural
• Individual cognitive
• Social
• Higher the level, looser the connections and constructs

Use Theory Properly
• Hypotheses: Explicitly derive from a theory
• Don’t claim support from a theory
• Often better to mention theories in the discussion
• Only mention multiple theories if your study is a comparative test

Week 11: Levels of Analysis

Level
• Nature of the sampling unit
  – Person
  – Couple
  – Family
  – Group/Team
  – Department
  – Organization
  – Industry sector
  – Country

Individual Vs. Higher Level
• Most psychological constructs person level
  – Attitude
  – Performance
• Some constructs higher (aggregate) level
  – Organizational climate
  – Team performance

Types of Aggregates
• Sum of individuals
  – Sales team performance
• Consensus of individuals
  – Norms
  – Majority votes
• Aggregate level data
  – Job analysis observer ratings for job title
  – Organization profitability
  – Team characteristic (size, gender breakdown)
  – Turnover rates

Aggregate As Sum of Individuals
• Sum individual characteristics
  – Ask individuals about own values
  – Sum values
• Direct assessment of aggregate
  – Ask individuals about people in their unit
  – “How do your team members feel about…?”
  – Sum values

Ecological Fallacy
• Drawing inferences from one level to another
• When measurement and question don’t match
  – Job satisfaction vs. group morale
  – Individual behavior vs. group behavior
• Improper inference
• Can’t draw conclusions across levels
• Empirically, data only reflect their own level
If it works for individuals, why won’t it work for groups?

Correlation and Subgroups
[Figure panels]
• Individual no correlation; Group positive
• Individual no correlation; Group negative
• Individual positive correlation; Group none
• Individual positive correlation; Group negative

Pay and Job Satisfaction
• Question 1: Job level
  – Do better paying jobs have more satisfied people?
• Question 2: Individual level
  – Are the better paid within a job more satisfied?
[Figure: Job satisfaction plotted against salary for nurses and physicians]
• Pay-Job Satisfaction correlation
  – Mixed jobs r = .17 (Spector, 1985)
  – Single job r = .50 (Rice et al. 1990)

Pooled Within-Group Correlation
• Removes effects of group differences in means
Correlation:
$$ r_{xy} = \frac{SP_{xy}}{\sqrt{SS_x \, SS_y}} $$
Pooled within-group (WG) correlation:
$$ r_{xy} = \frac{SP_{x_1 y_1} + SP_{x_2 y_2}}{\sqrt{(SS_{x_1} + SS_{x_2})(SS_{y_1} + SS_{y_2})}} $$
where 1 and 2 refer to groups 1 and 2, SP is the sum of cross-products and SS the sum of squares, each computed from deviations around that group’s own mean

Independence of Observations
• Independence a statistical assumption
• Subjects nested in groups
  – Subjects influence one another
  – Observations non-independent
• Example
  – Effects of supervisory style on OCB
  – Subjects nested in workgroups
  – Style ratings within supervisor nonindependent
  – Subjects influence one another
  – Shared biases

Confounding of Levels
• Individual case per unit
  – One person per organization
• Nonindependence issue resolved
• Confounding of individual vs. organization
  – Is relation due to individual or organization?
• Potential problem for inference
  – Self report of satisfaction and org performance
  – Could be shared bias: happy employee reports greater performance

Hierarchical Linear Modeling
• Statistical technique
• Deals with nonindependence
• Analyze data at two or more levels
  – Individuals in teams
  – Teams in organizations
• Interaction of levels
  – Does team moderate relation between satisfaction and OCB?

Week 12: Veterans Day

Week 13: Literature Reviews

Narrative Review
• Summary of research findings
• Qualitative analysis
• “Expert” analysis
• Based on evidence
• Room for subjectivity
• Classical approach

Meta-analysis
• Quantitative cumulation of findings
• Based on common metric
• Many approaches
• Many decision rules
• Room for subjectivity in decision rules

Meta-Analysis
• Hunter-Schmidt Approach
• There are MANY ways to conduct meta-analysis

Use of Narrative Review
• Used almost exclusively before 1990s
• Psychological Bulletin
• In-depth literature summary
• Brief overview vs. comprehensive
  – Brief overview part of empirical articles
• Can contrast very different studies
  – Constructs
  – Designs
  – Measures
• Small number of studies

Limitations to Narratives
• One person’s subjective impression
• Different reviews, different conclusions
• Lacks decision rules for drawing conclusions
  – What if half the studies are significant?
• Difficulty with conflicting results
• Narratives often hard to read
• Narratives difficult to write

Narrative Review Procedure
• Define domain
• Decide scope (how comprehensive)
• Inclusion rules
• Identify/obtain studies
• Read studies/take notes
• Organize review
  – Outline of topics
  – Assign studies to topic
• Write sections
• Draw conclusions

Meta-Analysis
• NO AUTOMATIC INFERENCE MACHINE
• Does NOT provide absolute truth
• Does NOT provide population parameters
  – Provides parameter estimates, i.e., statistics
  – Samples not always random or representative
• Has not revolutionized research
• Is just another tool that you need

Just Another Tool

Use of Meta-Analysis
• Dominant procedure today for reviews
• Published in most journals
• Often descriptive and superficial
• Allows for hypothesis tests
  – Moderators
• Requires highly similar studies
  – Constructs
  – Designs
  – Measures

Limitations To Meta-Analysis
• Small number of studies meeting criteria
• Convenience sample of convenience samples
• Subjectivity of decision rules
  – Inclusion/exclusion rules
  – Statistics used
  – Procedures to gather studies
    • Journals
    • Dissertations
    • Unpublished
• Different reviewers, different conclusions
• Sometimes data are made up
• Need lots of studies

Meta-Analysis Procedure
• Define domain and scope
• Inclusion rules
• Decide on M-A method
  – Artifact adjustments?
• Identify/obtain studies
• Code data from studies
• Conduct analyses
• Prepare tables
• Write paper/interpret results

Define Domain
• Choose topic
• Specify domain
  – Personality: Big Five vs. Individual traits
• Define populations
  – Employees vs. Students
• Define settings
  – Workplace vs. Home
• Types of studies
  – Group comparisons vs. correlations
• Define variable operationalizations
  – Self-reports vs. other reports

Apples Vs. Oranges
• Quantitative estimate of population parameter
  – What is the population?
• Mean effect size across samples
• Assumes sample statistics assess same thing
• Cumulating results across different constructs not meaningful

Inclusion Rules
• Operationalizations parallel forms
  – Measures of NA, neuroticism, emotional stability, trait anxiety
  – All trait measures
• Samples from same population
  – All full-time working adults
  – Full-time = > 30 hours/week
  – All American samples
• Designs equivalent
  – All cross-sectional self-report
• Journal published studies vs. others

Meta-Analysis Method
• Many to choose from
• Nature of studies
  – Group comparisons
  – Correlations
• Rosenthal
  – Describe distribution of rs
  – Moderators as specific variables to test
• Hunter-Schmidt
  – Adjust for artifacts
  – Moderators as more variance than expected

Effect Size Estimates
• Combine effect sizes
• Correlation as amount of shared variance
• Magnitude of mean differences
$$ d = \frac{M_{\text{Treatment}} - M_{\text{Control}}}{SD} $$
where d is the difference in means in SD units

Identify/Obtain Studies
• Electronic databases (PsycLIT)
• Other reviews
• Reference lists of papers
• Conference programs/proceedings
• Listservs
• Write authors in area

Coding
• Choose variables to code
• Judgments about inclusion rules
• How to handle multiple statistics
  – Independent samples
  – Dependent samples: Average
• Sometimes ratings made, e.g., quality
  – Interrater agreement

Variables To Code
• Effect sizes
• N
• Reliability of measures
• Name of measures
• Sample description
  – Demographics
  – Job types
  – Organization types
  – Country
• Design

Analysis
• Meta-analysis software
• Statistical package
  – Excel, SAS, SPSS
• Organize results
  – Tables by IV or DV
• Analysis of moderators

Interpret
• Often descriptive
  – Little insight other than mean correlations
  – Nothing new if results have been consistent
• Often superficial
• Can test hypotheses
• Effects of moderators
• Can inconsistencies be resolved?
• Suggest new directions or research gaps?

Rosenthal Approach
• Convert statistics to r
  – Chi square from 2x2 table
  – Independent group t-test
  – Two-level between group ANOVA
• Convert r to Fisher’s z
• Compute descriptive statistics
• Describe results in tables
• Meta-analysis as summary of studies

Rosenthal Descriptives
• Mean effect size
• Weighted mean
• Median
• Mode
• Standard deviation
• Confidence interval

Rosenthal Moderators
• Identify moderator and relate to effect sizes
• Correlate characteristic of study with r
• Satisfaction-turnover study
• Unemployment as moderator
• Found studies
• Contacted authors about where/when study was conducted
• Database of unemployment rates
• Correlated unemployment with r of study
• Unemployment –r with satisfaction-turnover
• Carsten-Spector 1987

Schmidt-Hunter
• Convert effect sizes to r
• Compute descriptive statistics on r
• Collect artifact data
  – Theoretical variability
  – Unreliability
  – Restriction of range
  – Quality of study
• Artifact distributions to estimate missing data
• Adjust observed mean r to estimate rho
• Compare observed SD to theoretical after adjustments
• Residual variance = moderators

Estimating Missing Artifacts
• Estimate = Make up data
• “The magic of statistics cannot create information where none exists” (Wainer)
• Existing data used to guess what missing data might have been
• Hall-Brannick, JAP 2000: It is inaccurate
• Science of what might be rather than what is

Value of Artifact Adjustments
• Variability in r is what is/isn’t expected
• Show that variance due to differential reliability, restriction of range, etc.
• Requires you have artifact data

Rosenthal Vs. H-S
• Both identify/code studies
• Both compute descriptive statistics
• r-to-z transformation
  – Rosenthal yes, H-S no
• H-S artifact adjustments
• H-S rho vs. Rosenthal mean r
• H-S advocate estimating unobservables
• Rosenthal deals only with observables
• Begin the same, H-S goes farther

Why I Prefer Rosenthal
• Rho is parameter for undefined population
  – Convenience sample of convenience samples
  – Population = studies that were done/found
• Unavailable artifact data
  – Uncomfortable in estimating missing data
• Prefer to deal with observables
• Don’t believe in automatic inference
• Lots of competing methods

Week 14: Ethics In Research

Ethical Practices
• Conducting Research
  – Treatment of human subjects
  – Treatment of organizational subjects
• Data Analysis/Interpretation
• Disseminating Results
  – Publication
• Peer reviewing

Ethical Codes
• Appropriate moral behavior/practice
• Accepted practices
• Basic Principle: Do no harm
• Protect dignity, health, rights, well-being
• Codes
  – APA??
  – AOM

American Psychological Association Code
• Largely practice oriented
• Five principles
  – Beneficence and Nonmaleficence [Do no harm]
  – Fidelity and Responsibility
  – Integrity
  – Justice
  – Respect for People’s Rights and Dignity
• Standards and practices
• Applies to APA members
• http://www.apa.org/ethics/

Preamble
Psychologists are committed to increasing scientific and professional knowledge of behavior and people's understanding of themselves and others and to the use of such knowledge to improve the condition of individuals, organizations, and society. Psychologists respect and protect civil and human rights and the central importance of freedom of inquiry and expression in research, teaching, and publication. They strive to help the public in developing informed judgments and choices concerning human behavior. In doing so, they perform many roles, such as researcher, educator, diagnostician, therapist, supervisor, consultant, administrator, social interventionist, and expert witness.
APA Conflict Between Profession and Ethical Principles
• Restriction of Advertising
  – Violation of the law
• Maximization of income for members
• Tolerance of torture
  – Convoluted statements
• Other associations manage to avoid such conflicts

Academy of Management Code
• Largely academically oriented
• Three Principles
  – Responsibility
  – Integrity
  – Respect for people’s rights and dignity
• Responsibility to
  – Students
  – Advancement of managerial knowledge
  – AOM and larger profession
  – Managers and practice of management
  – All people in the world
• http://www.aomonline.org/aom.asp?ID=&page_ID=239

Professional Principles
Our professional goals are to enhance the learning of students and colleagues and the effectiveness of organizations through our teaching, research, and practice of management.

Why I Prefer AOM
• Consistent principles
• Simpler
• More directly relevant to organizational practice and research
• No attempt to compromise ethics for profit

Principles Vs. Practice
• Principles clear in theory
• Ethical line not always clear
• Ethical dilemmas
  – Harm can be done no matter what is done
  – Conflicting interests between parties
    • Employee versus organization
    • Whose rights take priority?

Example: Exploitive Relationships
• Principle
  – Psychologists do not exploit persons over whom they have supervisory, evaluative, or other authority
• What does it mean to exploit?
• Professor A hires Student B to be an RA
  – How much pay/compensation is exploitive?
  – How many hours/week demanded?
• What if student gets publication?

Example: Assessing Performance
• In academic and supervisory relationships, psychologists establish a timely and specific process for providing feedback to students and supervisees.
• Not giving an evaluation is unethical?
• How often?
• How detailed?
• What if honest feedback harms the person’s job situation?
Conducting Research
• Privacy
• Informed consent
• Safety
• Debriefing
• Inducements

Privacy
• Anonymity: Best protection
  – Procedures to match data without identities
• Confidentiality
  – Security of identified data
    • Locked computer/cabinet/lab
    • Encoding data
    • Code numbers cross-referenced to names
  – Removing names and identifying information

Informed Consent
• Subject must know what is involved
  – Purpose
  – Disclosure of risk
  – Benefits of research
    • Researcher/society
    • Subject
  – Privacy/confidentiality
    • Who has access to data
    • Who has access to identity
  – Right to withdraw
  – Consequences of withdrawal

Safety
• Minimize exposure to risk
  – Workplace safety study: Control group
• Physical and psychological risk

Debriefing
• Subject right to know
• Educational experience for students
• Written document
• Presentation
• Surveys: Provide contact for follow-up
• Provide results in future upon request

Inducements
• Pure volunteer: no inducement
• Course requirement
  – Is this coercion?
• Extra credit
• Financial payment
  – Is payment coercion?
Institutional Review Board: IRB
• Original Purpose: Protection of human subjects
• Current Purpose: Protection of institution
  – Federal government requirement
• We pay for government atrocities of the past
  – Government sanctions
  – Bureaucratic
  – Often absurd
  – Designed for invasive medical research

IRB Jurisdiction
• Institutions receiving federal research funds
• All research at institution under jurisdiction
• Cross-country differences
• Canada: like US
• China: doesn’t exist

Types of Review
• Full
  – One year
• Expedited: Research with limited risk
  – Data from audio/video recordings
  – Research on individual or group characteristics or behavior (including, but not limited to, research on perception, cognition, motivation, identity, language, communication, cultural beliefs or practices, and social behavior) or research employing survey, interview, oral history, focus group, program evaluation, human factors evaluation, or quality assurance methodologies.
  – One year
• Exempt
  – Five years

Exempt
• Project doesn’t get board review
• Determined by staff member
• You can’t determine own exemption
• Five years
• Surveys, interviews, tests, observations
• Unless: subjects identified AND potential for harm
  – Legal liability
  – Financial standing
  – Employability
  – Reputation

IRB Impact
• Best: Minor bureaucratic inconvenience
  – Protects institution
  – Protects investigator
• Worst: Chilling effect on research
  – Prevents certain projects
  – Ties up investigators for months
• Which is USF?
  – Good as it gets

IRB: What Goes Wrong?
• Inadequate expertise
  – Lack of understanding of research
  – Apply medical model to social science
• Going beyond authority
  – Copyright issues
• Abuse of power
• Refuge of the petty and small-minded tyrant

Research Vs. Practice
• Research = Purpose, not activity
• Dissemination intent = research
  – Presentation
  – Publication
• Class demo not research
• Management project not research
  – Consulting projects as research projects
• Don’t ethics apply to class demos?
  – Not IRB purview

Dealing with Organizations?
• Who needs protection?
  – Employee
  – Organization
• Who owns and can see the data?
  – Researcher
  – Organization
• What if organization won’t play by IRB rules?
  – IRB has no jurisdiction off campus

Anticipate Ethical Conflicts
• Avoid issues
  – Don’t know, can’t tell
• Negotiate issues
  – Confidentiality
  – Nature of report
  – Ownership of data
  – Procedures

Ethical Issues: Analysis
• Honesty in research
• Report what was done
  – Why Hunter-Schmidt aren’t unethical in making up data
• Bad data practice
  – Fabrication
  – Deleting disconfirming cases: Trimming
  – Data mining: Type 1 Error hunt
  – Selective reporting: Only the significant findings

Dissemination
• Authorship credit
• Referencing
• Sharing Data
• Editorial issues
  – Editor
  – Reviewers

Author Credit
• Authors: Substantive contributions
  – What is substantive?
  – People vary in generosity
• Order of authorship
  – Order of contribution
  – Not by academic rank
  – Dissertation/thesis special case
  – Last for senior person
• Authorship agreed to up front
• Potential for student/junior colleague exploitation

Slacker Coauthors
• When do you drop someone from coauthorship?
• Late
• Not at all
• Poor quality
• Less than you expected

Submission
• One journal at a time
• One conference at a time
• Can submit to conference and journal
  – Prior to paper being in press
• Almost all electronic submission
  – Difficult and tedious
  – Break paper into multiple documents
  – Enter each coauthor
• Most reviewing is blind
  – Only editor knows authors/reviewers

Journal Review
• All 1st submissions are rejects
  – Don’t want to see again
  – Revise and resubmit (R&R)
    • Will consider revision if you insist (high risk)
    • Encourages resubmission
• Desk rejections: No review
• Feedback from 1 to 4 reviewers (mode 2)
• Feedback from editor
• Multiple cycles of R&R can be required
  – Can be rejected at any step
• Tentative accept: Needs minor tweaks
• Full accept: Congratulations!
Steps To Publication
• Submit
• R&R
• Revise
  – Include response to feedback
• Provisional acceptance
  – Minor revision
• Acceptance
  – Copyright release
  – Proofs
• In print
  – Entire process 1 year or more

R&R
• More likely accepted than rejected
  – Depends on editor
  – Good editor has few R&R rejects
• Work hard to incorporate feedback
• Argue points of disagreement
  – Additional analyses
  – Prior literature
  – Logical argument
• Don’t be argumentative
  – Choose your battles
• Give high priority

Author Role
• Make good faith effort to revise
• Incorporate feedback
• Be honest in what was done
  – Don’t claim you tried things you didn’t
• Treat editor/reviewers with respect

Editor Role
• Be an impartial judge
• Weigh input from authors and reviewers
• Be decisive
• Keep commitments
  – R&R is promise to publish if things fixed
• Treat everyone with respect

Reviewer Role
• Objective review
• No room for politics
• Reveal biases to editor
• Disclose ghost-reviewer to editor
  – E.g., doctoral student
  – Pre-approval
• Private recommendation to editor
• Feedback to author/s
• Keep commitments
• Treat author with respect

Reviewer As Ghostwriter
• Art Bedeian
• Notes reviewers go too far
  – Dictating question asked, hypotheses, analyses, interpretation
• Review inflation over the years
  – Sometimes feedback longer than papers
• Reviewers subjective
• Poor inter-rater agreement
• Abuse of power?
Reviewer Problems
• Reviewers late
• Reviewers nasty
• Overly picky
• Factually inaccurate
• Overly dogmatic
  – Favorite stats (CFA/SEM)
  – Edit out ideas they disagree with
  – Insist on own theoretical position
  – Assume there’s only one right way
• Not knowledgeable
• Miss the obvious
• Careless

Scientific Progress Through Dispute
• Work is based on prior work
  – Testing theories
  – Integrating findings/theories
• Build a case for an argument or conclusion
• Disseminate
• Colleagues build a case for an alternative
  – Scientific dispute
• Two camps battle, producing progress
  – Dispute motivates work
  – Literature enriched

Crediting Sources
• Must reference anything borrowed
  – Cite findings/ideas
  – Quote direct passages
  – Little quoting done in psychology
• Stealing work
  – Plagiarism: using others’ words without quotation marks
  – Borrowing ideas
    • Papers
    • People
    • Reviewed papers
      – Easy to forget you didn’t have the idea

Strategy For Successful Publication
• Choose a topic the field likes
  – Existing hot topic
  – Tomorrow’s hot topic (hard to predict)
• Conduct a high-quality study
• Craft a good story
  – Make a strong case for conclusions
  – Theoretical arguments in introduction
  – Strong data to test
• Write clearly and concisely
• Pay attention to current practice
• Lead, don’t follow

Dealing With Journals
• Be patient and persistent
• Match paper to journal
  – Journal interests
  – Quality of paper
• Count on extensive revision
• Learn from rejection
  – Consider feedback
  – Only fix things you agree with
  – Look for trends across reviewers

Fragmented Publication
• Multiple submissions from the same project
• Discouraged in theory
• Required in practice
  – Single purpose
  – Tight focus
• Different purposes
  – Minimize overlap
  – Cross-cite
  – Disclose to editor

Example: CISMS
• Four major papers
• Unreliability of Hofstede measure
  – Applied Psychology: An International Review
• Universality of Work LOC and well-being
  – Academy of Management Journal
• Country-level values and well-being
  – Journal of Organizational Behavior
• Work-family pressure and well-being
  – Personnel Psychology

How Much Overlap Is Too Much?
• A: Aquino, K., Grover, S. L., Bradfield, M., & Allen, D. G. (1999). The effects of negative affectivity, hierarchical status, and self-determination on workplace victimization. Academy of Management Journal, 42, 260-272.
• B: Aquino, K. (2000). Structural and individual determinants of workplace victimization: The effects of hierarchical status and conflict management style. Journal of Management, 26, 171-193.

Purpose from Abstract
• A: “Conditions under which employees are likely to become targets of coworkers’ aggressive actions”
• B: “…when employees are more likely to perceive themselves as targets of coworkers’ aggressive actions”

Procedure
• A: “Two surveys were administered to employees of a public utility as part of an organizational assessment. Although the surveys differed in content, both versions contained an identical set of items measuring workplace victimization”
• B: “Two different surveys were administered to employees of a public utility as part of an organizational assessment. Although the surveys differed in content, both versions contained an identical set of items measuring workplace victimization.”

Sample/Measures
• A: n = 371, 76% response, mean age 40.7, tenure 11.5, 65% male, 72% African American
• B: n = 369, 76% response, mean age 40.7, tenure 11.5, 65% male, 72% African American
• A: PANAS, Hierarchical status (Haleblian), self-determination, Victimization (14 items)
• B: Rahim Organizational Conflict Inventory-II, Hierarchical status (Haleblian), Victimization (14 items)

Factor Analysis/Table 1
• A: Exploratory FA of Victimization, CFA of 8 items on holdout sample
• B: Exploratory FA of Victimization, CFA of 8 items on holdout sample
• A: “Factor loadings and lamdas for victimization items^a” [Note misspelling of lambda]
• B: “Factor loadings and lamdas for victimization items^1” [Note misspelling of lambda]

Table 3/Hypothesis Tests
• A: “Results of hierarchical regression analysis”
• B: “Results of hierarchical regression analysis”
• A: “Two regression equations were fitted: one predicting direct victimization and the other predicting indirect victimization.”
• B: “Two regression equations were fitted: one predicting direct victimization and the other predicting indirect victimization.”

Limitations
• A: “This study has several limitations that deserve comment. Perhaps the most serious is its cross-sectional research design. The victim precipitation model is based on the assumption that victims either intentionally or unintentionally instigate some negative acts.”
• B: “This study has several limitations that deserve comment. Perhaps the most serious is its cross-sectional research design. The victim precipitation model is based on the assumption that victims either intentionally or unintentionally instigate some negative acts…”

Research Support: Grants
• Funding needed for many studies
  – Expands what can be done
• Some research very cheap
  – Shoestring because of lack of funding?
  – Lack of funding because research is cheap?
• Universities encourage grants
  – Diminishing state support

Grant Pros
• Covers direct costs of research
  – Equipment/supplies
  – Subject fees/inducements
  – Human resources (research assistants)
  – Faculty time
    • Buyouts and summer support
• Conference travel
  – Dissemination
• Support students
• Prestige
• Administrative admiration (rewards)

Grant Cons
• Tough to get: Competitive
  – Time consuming
• Requires resubmission with long cycle time
• Administrative nightmare
• Takes time from teaching/research
• Can redirect research focus
  – Tail wags dog
• Confuse path with goal
  – Grant is not a research contribution

Sources
• Federal (highest status, greenest money)
• Foundations
• Internal university grants
  – Small
  – Not as competitive
• New investigator grants
• ERC pilot grants
• SIOP grants
• Dissertation grants

Federal Grants Are Challenging
• High rejection rate
• Takes multiple submissions (R&R)
• Must link to priorities: not everything is fundable

Grant Strategy
• Develop grant-writing skill
• Tie to fundable topics
  – Workplace health and safety
    • Musculoskeletal Disorders (MSD) and ….
    • Workplace violence
• Intervention research in demand
• Interdisciplinary
• Use of consultants
• Pilot studies
• Programmatic and strategic

Week 15 Wrap-Up

Successful Research Career
• Conducting good research
  – Lead, don’t follow
• Visibility
  – Good journals
  – Conferences
  – Other outlets
  – Quantity
• First-authored publications
  – More important early in career
• Impact
• Grants

Programmatic
• Program of research
  – More conclusive
  – Multiple tests
  – Boundary conditions
  – More impact through visibility
  – Helps in getting jobs
  – Helps with tenure/promotion
  – Can have more than one focus

Conducting Successful Research
• Develop an interesting question
  – Based on theory
  – Based on literature
  – Based on observation
  – Based on organizational need
• Link question to literature
  – Theoretical perspective
  – Place in context of what’s been done
  – Multiple types of evidence
  – Consider other disciplines

Conducting Successful Research 2
• Design one or more research strategies
  – Lab vs. field
  – Data collection technique
    • Survey, interview, observation, etc.
  – Design
    • Experimental, quasi-experimental, or observational
    • Cross-sectional or longitudinal
    • Single-source or multisource
  – Instrumentation
    • Existing or ad hoc

Conducting Successful Research 3
• Analysis
• Hierarchy of methods, from simple to complex
  – Descriptives
  – Bivariate relationships
  – Test for controls
  – Complex relationships
    • Multiple regression
    • Factor analysis
    • HLM
    • SEM

Conducting Successful Research 4
• Conclusions
  – What’s reasonable based on data
  – Alternative explanations
  – Speculation
  – Theoretical development
  – Suggestions for future research

KSAOs Needed
• Content knowledge
• Methods expertise
• Writing skill
• Presentation skill
• Creativity
• Thick skin

Pipeline
• Body of work at various stages
  – In press
  – Under review
  – Writing/revising
  – In progress
  – Planning
• Set priorities
  – Don’t let revisions sit
  – Get work under review
  – Always work on next project
• Collaboration to multiply productivity
• Time management

Authorship Order
• First author takes the lead on the paper
  – Most of the writing
  – Most input into the project
• Important early in career to be first
• Balance quantity with order
• Sometimes most senior person is last
  – PI on project
  – Senior member of lab

Impact
• Effect of work on field/world
• Citations
  – Sources
    • ISI Thomson
    • Harzing’s Publish or Perish
    • Others
  – Self-citation
  – Citation studies
    • Individuals (e.g., Podsakoff et al., Journal of Management, 2008)
    • Programs (e.g., Oliver et al., TIP, 2005)
• Being attacked

Partnering
• State/local government
• Corporations
• Tying research to consulting
• Partnership with practitioner
  – In-kind

Grants
• Expands what you can do
• Good for career
  – Current employer
  – Potential future employers

Grantsmanship
• Develop grant-writing skill
  – Start as a student
• Small grant at first
• Proposal somewhat different from article
  – Background that establishes need for study
  – Demonstrates ability to conduct the study
    • Expertise of team
    • Letters of agreement/support
  – High likelihood of success
    • Pilot data important
    • Low risk
• Address funding agency priority

Final Advice
• Be a leader, not a follower
  – Address a problem that is not being addressed
  – Find creative ways of doing things
• Be evolutionary, not revolutionary
  – Work that is too different is unlikely to be accepted
  – Most creative work often appears in lesser journals
    • Follow-up studies in better journals
• Critical mass
  – Need multiple publications on a topic to be noticed
  – Programmatic
• Build on the past, don’t tear it down
  – Positive rather than negative citation

Final Advice cont.
• Be flexible in thinking
  – Don’t get prematurely locked into a conclusion, idea, method, or theory
• Use theory inductively
  – A good theory explains findings
• Don’t take yourself too seriously
• Have a thick skin
• Enjoy your work