
Lecture 4

Probability and what it has to do with data analysis

Please read Doug Martinson’s Chapter 2: ‘Probability Theory’ (available on Courseworks).

Abstraction

Random variable, x: it has no set value until you ‘realize’ it. Its properties are described by a distribution, p(x).

Probability density distribution

When you realize x, the probability that the value you get is between x and x+dx is p(x) dx.

The probability, P, that the value you get is between x1 and x2 is

$$P = \int_{x_1}^{x_2} p(x)\,dx$$

Note that it is written with a capital P and represented by a fraction between 0 = never and 1 = always.

[Figure: p(x) versus x. The probability P that x is between x1 and x2 equals the area under p(x) between x1 and x2. The probability that x is between -∞ and +∞ is unity, so the total area = 1.]

The probability that the value you get is something is unity:

$$\int_{-\infty}^{+\infty} p(x)\,dx = 1$$

or whatever the allowable range of x is …
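Both integrals can be approximated numerically. The sketch below assumes NumPy and uses a Gaussian with mean 0 and width 1 purely as an example density; `trapezoid_area` is a hypothetical helper written here (a plain trapezoid rule), not a library function.

```python
import numpy as np

def trapezoid_area(y, x):
    # Trapezoid-rule approximation of the integral of y(x) dx
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))

def p(x):
    # Example density (assumed): Gaussian with mean 0 and sigma 1
    return np.exp(-0.5 * x**2) / np.sqrt(2.0 * np.pi)

# Total probability: a wide range stands in for (-inf, +inf)
x_all = np.linspace(-10.0, 10.0, 20001)
total = trapezoid_area(p(x_all), x_all)

# Probability that a realization falls between x1 = -1 and x2 = +1
x1, x2 = -1.0, 1.0
x_sub = np.linspace(x1, x2, 2001)
P = trapezoid_area(p(x_sub), x_sub)

print(f"total area = {total:.6f}")    # close to 1
print(f"P(-1 < x < 1) = {P:.4f}")     # close to 0.6827
```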

Why all this is relevant …

Any measurement that contains noise is treated as a random variable, x. The distribution p(x) embodies both the ‘true value’ of the quantity being measured and the measurement noise.

All quantities derived from a random variable are themselves random variables, so the algebra of random variables allows you to understand how measurement noise affects inferences made from the data.

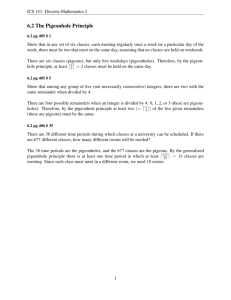

Basic Description of Distributions

Mode

The x at which the distribution has its peak: the most-likely value of x.

[Figure: p(x) versus x, with the peak marked at xmode.]

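But modes can be deceptive …

100 realizations of x:

| x | 0-1 | 1-2 | 2-3 | 3-4 | 4-5 | 5-6 | 6-7 | 7-8 | 8-9 | 9-10 |
|---|---|---|---|---|---|---|---|---|---|---|
| N | 3 | 18 | 11 | 8 | 11 | 14 | 8 | 7 | 11 | 9 |

[Figure: histogram of these counts, with the peak (xmode) in the 1-2 bin.]

Sure, the 1-2 range has the most counts, but most of the measurements (79 of the 100) are bigger than 2!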
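A few lines of plain Python make the same point with the counts from the table: the modal bin is 1-2, yet 79 of the 100 realizations exceed 2. The variable names are illustrative only.

```python
# Counts from the table: 100 realizations of x in unit-width bins 0-1, 1-2, ..., 9-10
counts = [3, 18, 11, 8, 11, 14, 8, 7, 11, 9]
edges = list(range(11))            # bin i covers edges[i] to edges[i + 1]

n_total = sum(counts)
mode_bin = max(range(len(counts)), key=lambda i: counts[i])   # bin with the most counts
n_above_2 = sum(counts[2:])        # bins 2-3 through 9-10

print(f"total = {n_total}")
print(f"modal bin = {edges[mode_bin]}-{edges[mode_bin + 1]}")
print(f"fraction bigger than 2 = {n_above_2 / n_total:.2f}")
```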

Median

50% chance x is smaller than xmedian; 50% chance x is bigger than xmedian.

[Figure: p(x) with the area split 50% / 50% at xmedian.]

There is no special reason the median needs to coincide with the peak.
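To see the median and the peak come apart, here is a sketch assuming NumPy and an exponential distribution, p(x) = exp(-x) for x ≥ 0 (an example chosen here, not from the lecture). Its peak is at x = 0, but its median is ln 2 ≈ 0.693.

```python
import numpy as np

rng = np.random.default_rng(0)
# Example distribution (assumed): p(x) = exp(-x) for x >= 0, whose peak is at x = 0
x = rng.exponential(scale=1.0, size=100_000)

x_mode = 0.0                         # known analytically: the density is largest at x = 0
x_median = np.median(x)              # sample median
frac_below = np.mean(x < x_median)   # fraction of realizations smaller than the median

print(f"mode = {x_mode}, median = {x_median:.3f} (theory: ln 2 = {np.log(2):.3f})")
print(f"fraction below median = {frac_below:.3f}")
```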

Expected value or ‘mean’

The x you would get if you took the mean of lots of realizations of x.

Let’s examine a discrete distribution, for simplicity ...

[Figure: N versus x for a discrete distribution with x = 1, 2, 3.]

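Hypothetical table of 140 realizations of x:

| x | 1 | 2 | 3 | Total |
|---|---|---|---|---|
| N | 20 | 80 | 40 | 140 |

$$\text{mean} = \frac{20\times 1 + 80\times 2 + 40\times 3}{140} = \frac{20}{140}\,1 + \frac{80}{140}\,2 + \frac{40}{140}\,3 = p(1)\,1 + p(2)\,2 + p(3)\,3 = \sum_i p(x_i)\,x_i$$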
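The arithmetic can be checked directly in plain Python: averaging all 140 realizations gives the same number as weighting each value by its probability p(x_i) = N_i/140.

```python
# Hypothetical table of 140 realizations from the lecture
values = [1, 2, 3]
counts = [20, 80, 40]
n = sum(counts)                                              # 140

mean_direct = sum(c * v for c, v in zip(counts, values)) / n # [20*1 + 80*2 + 40*3] / 140
probs = [c / n for c in counts]                              # p(1), p(2), p(3)
mean_weighted = sum(p * v for p, v in zip(probs, values))    # sum_i p(x_i) x_i

print(f"{mean_direct:.6f} {mean_weighted:.6f}")              # both 300/140
```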

By analogy, for a smooth distribution, the expected value of x is

$$E(x) = \int_{-\infty}^{+\infty} x\,p(x)\,dx$$

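By the way … you can compute the expected (“mean”) value of any function of x this way …

$$E(x) = \int_{-\infty}^{+\infty} x\,p(x)\,dx \qquad E(x^2) = \int_{-\infty}^{+\infty} x^2\,p(x)\,dx \qquad E[f(x)] = \int_{-\infty}^{+\infty} f(x)\,p(x)\,dx$$

etc.

Beware: $E(x^2) \neq [E(x)]^2$, $E[f(x)] \neq f[E(x)]$ in general, and so forth …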
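A quick Monte Carlo sketch shows the warning in action. It assumes NumPy and an arbitrary choice of x uniform on [0,1], for which E(x²) = 1/3 while [E(x)]² = 1/4, and E(√x) = 2/3 while √E(x) ≈ 0.707.

```python
import numpy as np

rng = np.random.default_rng(1)
x = rng.uniform(0.0, 1.0, size=200_000)   # realizations of x, uniform on [0, 1] (assumed)

E_x = x.mean()               # estimates E(x) = 1/2
E_x2 = (x**2).mean()         # estimates E(x^2) = 1/3
E_sqrt = np.sqrt(x).mean()   # estimates E(sqrt(x)) = 2/3

print(f"E(x^2)    = {E_x2:.4f}   vs  E(x)^2    = {E_x**2:.4f}")
print(f"E(sqrt x) = {E_sqrt:.4f}   vs  sqrt E(x) = {np.sqrt(E_x):.4f}")
```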

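Width of a distribution

Here’s a perfectly sensible way to define the width of a distribution: W50, the width of the central interval that contains 50% of the probability, with 25% on either side.

[Figure: p(x) split into 25% | 50% | 25%, with W50 spanning the central 50%.]

… it’s not used much, though.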
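For a normal distribution, W50 is the distance between the points with 25% and 75% of the probability below them. A sketch with Python’s standard-library `statistics.NormalDist`, assuming a Gaussian with σ = 1 as the example:

```python
from statistics import NormalDist

dist = NormalDist(mu=0.0, sigma=1.0)   # assumed example distribution

x25 = dist.inv_cdf(0.25)   # 25% of the probability lies below this point
x75 = dist.inv_cdf(0.75)   # 25% of the probability lies above this point
W50 = x75 - x25            # width of the central 50%

print(f"W50 = {W50:.4f} sigma")   # roughly 1.35 sigma for any Gaussian
```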

Width of a distribution

Here’s another way: take the parabola $[x-E(x)]^2$, centered on E(x), multiply it by p(x), and integrate.

The idea is that if the distribution is narrow, then most of the probability lines up with the low spot of the parabola. But if it is wide, then some of the probability lines up with the high parts of the parabola.

[Figure: p(x), the parabola [x-E(x)]², and their product [x-E(x)]² p(x), all versus x.]

Compute this total area …

$$\text{Variance} = \sigma^2 = \int_{-\infty}^{+\infty} [x-E(x)]^2\,p(x)\,dx$$

$\sigma = \sqrt{\text{variance}}$ is a measure of width …

[Figure: p(x) with a bar of width σ centered at E(x).]

… we don’t immediately know its relationship to area, though …
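The recipe (find E(x), multiply p(x) by the parabola, integrate) can be sketched numerically. Assumptions: NumPy, a hypothetical trapezoid-rule helper `trapezoid_area`, and the example density p(x) = 2x on [0,1], for which E(x) = 2/3 and σ² = 1/18 ≈ 0.0556.

```python
import numpy as np

def trapezoid_area(y, x):
    # Trapezoid-rule approximation of the integral of y(x) dx
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))

x = np.linspace(0.0, 1.0, 100_001)
p = 2.0 * x                                  # example density on [0, 1] (assumed)

E_x = trapezoid_area(x * p, x)               # expected value
parabola = (x - E_x) ** 2                    # [x - E(x)]^2
variance = trapezoid_area(parabola * p, x)   # area under [x - E(x)]^2 p(x)
sigma = np.sqrt(variance)                    # width measure

print(f"E(x) = {E_x:.4f}, variance = {variance:.4f}, sigma = {sigma:.4f}")
```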

The Gaussian or normal distribution

$$p(x) = \frac{1}{\sqrt{2\pi}\,\sigma}\exp\left\{-\frac{(x-\bar{x})^2}{2\sigma^2}\right\}$$

where $\bar{x}$ is the expected value and $\sigma^2$ is the variance. Memorize me!
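Transcribing the formula into code is a good way to memorize it. This sketch checks a hand-written version against the standard-library `statistics.NormalDist.pdf`, for the two parameter pairs used in the examples that follow; `normal_pdf` is a name chosen here.

```python
import math
from statistics import NormalDist

def normal_pdf(x, xbar, sigma):
    # p(x) = exp{-(x - xbar)^2 / (2 sigma^2)} / (sqrt(2 pi) sigma)
    return math.exp(-((x - xbar) ** 2) / (2.0 * sigma**2)) / (math.sqrt(2.0 * math.pi) * sigma)

# Compare the hand-written formula with the standard-library implementation
for xbar, sigma in [(1.0, 1.0), (3.0, 0.5)]:
    for x in [0.0, 1.0, 2.5, 3.0]:
        ours = normal_pdf(x, xbar, sigma)
        ref = NormalDist(xbar, sigma).pdf(x)
        print(f"xbar={xbar}, sigma={sigma}, x={x}: {ours:.6f} vs {ref:.6f}")
```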

[Figure: Examples of normal distributions, p(x) versus x, for $\bar{x}=1$, $\sigma=1$ and for $\bar{x}=3$, $\sigma=0.5$.]

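Properties of the normal distribution

Expectation = Median = Mode = $\bar{x}$

About 95% of the probability lies within $2\sigma$ of the expected value, that is, between $\bar{x}-2\sigma$ and $\bar{x}+2\sigma$.

[Figure: p(x) with the 95% region between $\bar{x}-2\sigma$ and $\bar{x}+2\sigma$ shaded.]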
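The “about 95%” can be made precise with the normal cumulative distribution. A sketch using the standard-library `statistics.NormalDist`, with $\bar{x}=3$, $\sigma=0.5$ picked as the example; the exact 95% band turns out to be about ±1.96σ.

```python
from statistics import NormalDist

xbar, sigma = 3.0, 0.5                 # example parameters (assumed)
dist = NormalDist(xbar, sigma)

# Probability between xbar - 2 sigma and xbar + 2 sigma
P_2sigma = dist.cdf(xbar + 2 * sigma) - dist.cdf(xbar - 2 * sigma)
# Half-width of the central interval holding exactly 95%
half_width_95 = dist.inv_cdf(0.975) - xbar

print(f"P(within 2 sigma) = {P_2sigma:.4f}")
print(f"95% half-width = {half_width_95 / sigma:.3f} sigma")
```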

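Functions of a random variable

Any function of a random variable is itself a random variable.

If x has distribution p(x), then y(x) has distribution

$$p(y) = p[x(y)]\,\left|\frac{dx}{dy}\right|$$

This follows from the rule for transforming integrals …

$$1 = \int_{x_1}^{x_2} p(x)\,dx = \int_{y_1}^{y_2} p[x(y)]\,\frac{dx}{dy}\,dy$$

with limits chosen so that $y_1 = y(x_1)$, etc. (The absolute value in p(y) keeps it positive when y decreases as x increases.)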

Example

Let x have a uniform (white) distribution on [0,1]: p(x) = 1 for 0 ≤ x ≤ 1, a uniform probability that x is anywhere between 0 and 1.

Let $y = x^2$. Then

$x = y^{1/2}$, $y(x=0) = 0$, $y(x=1) = 1$, $dx/dy = \tfrac{1}{2}y^{-1/2}$, $p[x(y)] = 1$

So $p(y) = \tfrac{1}{2}y^{-1/2}$ on the interval [0,1].

Numerical test: histograms of 1000 random numbers

Histogram of x, generated with Excel’s rand() function, which claims to be based upon a uniform distribution: plausible that it’s uniform.

Histogram of x², generated by squaring the x’s from above: plausible that it’s proportional to $1/\sqrt{y}$.
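The same numerical test can be sketched in Python, with NumPy’s random generator standing in for Excel’s rand() (an assumption; any uniform generator will do). Instead of eyeballing the histograms, the sketch compares the fraction of realizations in each bin with the prediction from integrating p(y) = ½y^(-½) over the bin [a, b], which is √b - √a.

```python
import numpy as np

rng = np.random.default_rng(2)
x = rng.uniform(0.0, 1.0, size=1000)   # 1000 uniform random numbers
y = x**2                               # squared values

edges = np.linspace(0.0, 1.0, 11)      # ten bins of width 0.1
counts_x, _ = np.histogram(x, bins=edges)
counts_y, _ = np.histogram(y, bins=edges)

# Predicted fraction per bin: bin width for uniform x; sqrt(b) - sqrt(a) for y
pred_x = np.diff(edges)
pred_y = np.diff(np.sqrt(edges))

for a, b, fx, px, fy, py in zip(edges[:-1], edges[1:], counts_x / 1000, pred_x, counts_y / 1000, pred_y):
    print(f"[{a:.1f}, {b:.1f}): x {fx:.3f} (pred {px:.3f})   y {fy:.3f} (pred {py:.3f})")
```

The first bin of y should hold roughly 32% of the values (√0.1 ≈ 0.316), far more than the 10% a uniform distribution would give.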

Multivariate distributions

Example

Liberty Island is inhabited by both pigeons and seagulls. 40% of the birds are pigeons and 60% of the birds are gulls. 50% of pigeons are white and 50% are tan; 100% of gulls are white.

Two variables:

species s takes two values, pigeon p and gull g

color c takes two values, white w and tan t

Of 100 birds, 20 are white pigeons, 20 are tan pigeons, 60 are white gulls, and 0 are tan gulls.

What is the probability that a bird (a random bird, that is) has species s and color c?

| | w | t |
|---|---|---|
| p | 20% | 20% |
| g | 60% | 0% |

Note: the sum of all boxes is 100%.

This is called the Joint Probability and is written P(s,c).

Two continuous variables, say x1 and x2, have a joint probability distribution, written p(x1, x2).

The probability that x1 is between x1 and x1+dx1 and x2 is between x2 and x2+dx2 is p(x1, x2) dx1 dx2, so

$$\iint p(x_1, x_2)\,dx_1\,dx_2 = 1$$

You would contour a joint probability distribution, and it would look something like this:

[Figure: contours of p(x1, x2) in the (x1, x2) plane.]

What is the probability that a bird has color c?

Start with P(s,c) (of 100 birds, 20 are white pigeons, 20 are tan pigeons, 60 are white gulls, and 0 are tan gulls):

| | w | t |
|---|---|---|
| p | 20% | 20% |
| g | 60% | 0% |

and sum the columns to get P(c):

| | w | t |
|---|---|---|
| P(c) | 80% | 20% |

What is the probability that a bird has species s?

Start with P(s,c) (of 100 birds, 20 are white pigeons, 20 are tan pigeons, 60 are white gulls, and 0 are tan gulls):

| | w | t |
|---|---|---|
| p | 20% | 20% |
| g | 60% | 0% |

and sum the rows to get P(s):

| | P(s) |
|---|---|
| p | 40% |
| g | 60% |
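With the joint table stored as a NumPy array (rows = species p, g; columns = color w, t, an ordering chosen here), summing the columns and summing the rows are one-liners along the two axes:

```python
import numpy as np

# Joint probability P(s, c) from the lecture, as fractions.
# Rows: species (pigeon p, gull g); columns: color (white w, tan t).
P_sc = np.array([[0.20, 0.20],
                 [0.60, 0.00]])

total = P_sc.sum()          # all boxes together: 100%
P_c = P_sc.sum(axis=0)      # sum each column -> P(c) for (w, t)
P_s = P_sc.sum(axis=1)      # sum each row    -> P(s) for (p, g)

print("P(c) for (w, t):", P_c)
print("P(s) for (p, g):", P_s)
```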

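These operations make sense with distributions, too:

$$p(x_1) = \int p(x_1,x_2)\,dx_2$$

is the distribution of x1 (irrespective of x2), and

$$p(x_2) = \int p(x_1,x_2)\,dx_1$$

is the distribution of x2 (irrespective of x1).

[Figure: contours of p(x1, x2), with p(x1) plotted along the x1 axis and p(x2) along the x2 axis.]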
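On a grid, integrating out a variable becomes a sum along one array axis times the grid spacing. This sketch assumes NumPy and uses, as an example, a zero-mean bivariate normal with unit variances and correlation ρ = 0.7, whose marginals are known to be standard normal; `bivariate_normal_pdf` is a helper written here.

```python
import numpy as np

def bivariate_normal_pdf(x1, x2, rho):
    # Zero-mean, unit-variance bivariate normal with correlation rho (assumed example)
    norm = 1.0 / (2.0 * np.pi * np.sqrt(1.0 - rho**2))
    q = (x1**2 - 2.0 * rho * x1 * x2 + x2**2) / (1.0 - rho**2)
    return norm * np.exp(-0.5 * q)

x = np.linspace(-8.0, 8.0, 801)
dx = x[1] - x[0]
X1, X2 = np.meshgrid(x, x, indexing="ij")   # X1 varies along axis 0, X2 along axis 1
P = bivariate_normal_pdf(X1, X2, rho=0.7)

total = P.sum() * dx * dx       # approximates the double integral
p_x1 = P.sum(axis=1) * dx       # integrate out x2 -> p(x1)
p_x2 = P.sum(axis=0) * dx       # integrate out x1 -> p(x2)

expected = np.exp(-0.5 * x**2) / np.sqrt(2.0 * np.pi)   # standard normal
print(f"total = {total:.6f}, max |p(x1) - N(0,1)| = {np.abs(p_x1 - expected).max():.2e}")
```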

Given that a bird is species s, what is the probability that it has color c?

(Of 100 birds, 20 are white pigeons, 20 are tan pigeons, 60 are white gulls, and 0 are tan gulls.)

| | w | t |
|---|---|---|
| p | 50% | 50% |
| g | 100% | 0% |

Note: all rows sum to 100%.

This is called the Conditional Probability of c given s and is written P(c|s).

Similarly …

Given that a bird is color c, what is the probability that it has species s?

(Of 100 birds, 20 are white pigeons, 20 are tan pigeons, 60 are white gulls, and 0 are tan gulls.)

| | w | t |
|---|---|---|
| p | 25% | 100% |
| g | 75% | 0% |

So 25% of white birds are pigeons.

Note: all columns sum to 100%.

This is called the Conditional Probability of s given c and is written P(s|c).
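Both conditional tables follow from the joint table by normalizing: divide each row by P(s) to get P(c|s), or each column by P(c) to get P(s|c). A NumPy sketch (row/column ordering p, g and w, t chosen here):

```python
import numpy as np

# Joint probability P(s, c): rows = species (p, g), columns = color (w, t)
P_sc = np.array([[0.20, 0.20],
                 [0.60, 0.00]])
P_s = P_sc.sum(axis=1)   # marginal over color
P_c = P_sc.sum(axis=0)   # marginal over species

# P(c|s): divide each row by P(s), so every row sums to 1
P_c_given_s = P_sc / P_s[:, np.newaxis]
# P(s|c): divide each column by P(c), so every column sums to 1
P_s_given_c = P_sc / P_c[np.newaxis, :]

print("P(c|s):\n", P_c_given_s)
print("P(s|c):\n", P_s_given_c)
```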

Beware! P(c|s) ≠ P(s|c)

P(c|s):

| | w | t |
|---|---|---|
| p | 50% | 50% |
| g | 100% | 0% |

P(s|c):

| | w | t |
|---|---|---|
| p | 25% | 100% |
| g | 75% | 0% |

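Note

P(s,c) = P(s|c) P(c)

Multiplying each column of P(s|c) by the matching entry of P(c) (w: 80%, t: 20%) recovers P(s,c), here as counts out of 100 birds:

| | w | t |
|---|---|---|
| p | 25% of 80 = 20 | 100% of 20 = 20 |
| g | 75% of 80 = 60 | 0% of 20 = 0 |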

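and

P(s,c) = P(c|s) P(s)

Multiplying each row of P(c|s) by the matching entry of P(s) (p: 40%, g: 60%) also recovers P(s,c):

| | w | t |
|---|---|---|
| p | 50% of 40 = 20 | 50% of 40 = 20 |
| g | 100% of 60 = 60 | 0% of 60 = 0 |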

and if

P(s,c) = P(s|c) P(c) = P(c|s) P(s)

then

P(s|c) = P(c|s) P(s) / P(c)

and

P(c|s) = P(s|c) P(c) / P(s)

… which is called Bayes Theorem
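Bayes Theorem can be checked on the bird table: starting from P(c|s) and P(s), it should reproduce the P(s|c) table, and both product rules should give back the joint probability. A NumPy sketch with rows p, g and columns w, t:

```python
import numpy as np

# Joint table P(s, c) and its marginals (rows: p, g; columns: w, t)
P_sc = np.array([[0.20, 0.20],
                 [0.60, 0.00]])
P_s = P_sc.sum(axis=1)
P_c = P_sc.sum(axis=0)
P_c_given_s = P_sc / P_s[:, np.newaxis]

# Bayes Theorem: P(s|c) = P(c|s) P(s) / P(c)
P_s_given_c_bayes = P_c_given_s * P_s[:, np.newaxis] / P_c[np.newaxis, :]

# Both product rules reproduce the joint probability
joint_from_c_given_s = P_c_given_s * P_s[:, np.newaxis]        # P(c|s) P(s)
joint_from_s_given_c = P_s_given_c_bayes * P_c[np.newaxis, :]  # P(s|c) P(c)

print("P(s|c) via Bayes:\n", P_s_given_c_bayes)
```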

Why Bayes Theorem is important

Consider the problem of fitting a straight line to data, d, where the intercept and slope are given by the vector m.

If we guess m and use it to predict d, we are doing something like P(d|m).

But if we observe d and use it to estimate m, then we are doing something like P(m|d).

Bayes Theorem provides a framework for relating what we do to get P(d|m) to what we do to get P(m|d).

Expectation, Variance, and Covariance of a multivariate distribution

The expected values of x1 and x2 are calculated in a fashion analogous to the one-variable case:

$$E(x_1) = \iint x_1\,p(x_1,x_2)\,dx_1\,dx_2 \qquad E(x_2) = \iint x_2\,p(x_1,x_2)\,dx_1\,dx_2$$

Note

$$E(x_1) = \iint x_1\,p(x_1,x_2)\,dx_1\,dx_2 = \int x_1 \left[\int p(x_1,x_2)\,dx_2\right] dx_1 = \int x_1\,p(x_1)\,dx_1$$

So the formula really is just the expectation of a one-variable distribution.

The variances of x1 and x2 are calculated in a fashion analogous to the one-variable case, too:

$$\sigma_{x_1}^2 = \iint (x_1-\bar{x}_1)^2\,p(x_1,x_2)\,dx_1\,dx_2 \quad\text{with}\quad \bar{x}_1 = E(x_1)$$

and similarly for $\sigma_{x_2}^2$.

Note, once again

$$\sigma_{x_1}^2 = \iint (x_1-\bar{x}_1)^2\,p(x_1,x_2)\,dx_1\,dx_2 = \int (x_1-\bar{x}_1)^2 \left[\int p(x_1,x_2)\,dx_2\right] dx_1 = \int (x_1-\bar{x}_1)^2\,p(x_1)\,dx_1$$

So the formula really is just the variance of a one-variable distribution.

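Note that in this distribution, if x1 is bigger than $\bar{x}_1$, then x2 tends to be bigger than $\bar{x}_2$, and if x1 is smaller than $\bar{x}_1$, then x2 tends to be smaller than $\bar{x}_2$.

This is a positive correlation.

[Figure: contours of p(x1, x2) tilted upward to the right, with the expected value ($\bar{x}_1$, $\bar{x}_2$) marked.]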

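Conversely, in this distribution, if x1 is bigger than $\bar{x}_1$, then x2 tends to be smaller than $\bar{x}_2$, and if x1 is smaller than $\bar{x}_1$, then x2 tends to be bigger than $\bar{x}_2$.

This is a negative correlation.

[Figure: contours of p(x1, x2) tilted downward to the right, with the expected value ($\bar{x}_1$, $\bar{x}_2$) marked.]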

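This correlation can be quantified by multiplying the distribution by a four-quadrant function

[Figure: the (x1, x2) plane divided into quadrants about ($\bar{x}_1$, $\bar{x}_2$): + in the upper-right and lower-left quadrants, - in the upper-left and lower-right quadrants.]

and then integrating. The function $(x_1-\bar{x}_1)(x_2-\bar{x}_2)$ works fine:

$$\text{cov}(x_1,x_2) = \iint (x_1-\bar{x}_1)(x_2-\bar{x}_2)\,p(x_1,x_2)\,dx_1\,dx_2$$

Called the “covariance”.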
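Replacing the integral over p(x1,x2) by an average over many realizations gives a Monte Carlo estimate of the covariance. The sketch assumes NumPy and two synthetic examples made up here: x2 = x1 + noise (positive correlation, true covariance +1) and x2 = -x1 + noise (negative correlation, true covariance -1).

```python
import numpy as np

rng = np.random.default_rng(3)
n = 200_000
x1 = rng.normal(0.0, 1.0, n)
noise = rng.normal(0.0, 0.5, n)

def covariance(a, b):
    # Average of the four-quadrant function (a - abar)(b - bbar) over realizations
    return np.mean((a - a.mean()) * (b - b.mean()))

x2_pos = x1 + noise      # bigger x1 tends to go with bigger x2
x2_neg = -x1 + noise     # bigger x1 tends to go with smaller x2

c_pos = covariance(x1, x2_pos)
c_neg = covariance(x1, x2_neg)
print(f"cov, positive case = {c_pos:.3f}")
print(f"cov, negative case = {c_neg:.3f}")
```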

Note that the vector $\bar{\mathbf{x}}$ with elements

$$\bar{x}_i = E(x_i) = \iint x_i\,p(x_1,x_2)\,dx_1\,dx_2$$

is the expectation of x, and the matrix $\mathbf{C}_x$ with elements

$$C^x_{ij} = \iint (x_i-\bar{x}_i)(x_j-\bar{x}_j)\,p(x_1,x_2)\,dx_1\,dx_2$$

has diagonal elements equal to the variance of $x_i$,

$$C^x_{ii} = \sigma_{x_i}^2$$

and off-diagonal elements equal to the covariance of $x_i$ and $x_j$,

$$C^x_{ij} = \text{cov}(x_i,x_j)$$
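The same definitions, written as averages over realizations, build the whole matrix at once. This sketch assumes NumPy and draws samples from an example normal distribution with a covariance matrix `C_true` and mean picked here for illustration; it then confirms that the hand-built matrix matches `np.cov` with `bias=True` (which divides by N rather than N - 1).

```python
import numpy as np

rng = np.random.default_rng(4)
C_true = np.array([[1.0, 0.6],
                   [0.6, 2.0]])                                  # assumed example covariance
x = rng.multivariate_normal(mean=[1.0, -2.0], cov=C_true, size=200_000)  # shape (n, 2)

xbar = x.mean(axis=0)          # vector of expectations E(x_i)
dev = x - xbar                 # x_i - xbar_i for every realization
C = dev.T @ dev / len(x)       # C_ij = average of (x_i - xbar_i)(x_j - xbar_j)

print("xbar =", xbar)
print("C =\n", C)
print("matches np.cov:", np.allclose(C, np.cov(x, rowvar=False, bias=True)))
```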

$\bar{\mathbf{x}}$: the “center” of a multivariate distribution

$\mathbf{C}_x$: the “width” and “correlatedness” of a multivariate distribution

Together they summarize a lot (but not everything) about a multivariate distribution.

Functions of a set of random variables, x

Given a vector x of N random variables and a function y(x), do you remember how to transform the integral

$$\int\!\cdots\!\int p(\mathbf{x})\,d^N x = \int\!\cdots\!\int \;?\; d^N y$$

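Given y(x), then

$$\int\!\cdots\!\int p(\mathbf{x})\,d^N x = \int\!\cdots\!\int p[\mathbf{x}(\mathbf{y})]\,\left|\frac{d\mathbf{x}}{d\mathbf{y}}\right|\,d^N y$$

where $|d\mathbf{x}/d\mathbf{y}|$ is the absolute value of the Jacobian determinant, that is, of the determinant of the matrix J whose elements are $J_{ij} = \partial x_i/\partial y_j$.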
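A numerical check of the Jacobian rule, assuming NumPy and a hypothetical 2×2 matrix M: if x is a pair of independent N(0,1) variables and y = Mx, then x(y) = M⁻¹y and |dx/dy| = |det M⁻¹|. The result should agree with the standard formula for a zero-mean bivariate normal with covariance MMᵀ (a known result, used here only as a cross-check).

```python
import numpy as np

def std_normal_2d(x):
    # p(x) for two independent N(0, 1) variables
    return np.exp(-0.5 * x @ x) / (2.0 * np.pi)

def gaussian_2d(y, C):
    # Zero-mean bivariate normal density with covariance C
    Cinv = np.linalg.inv(C)
    return np.exp(-0.5 * y @ Cinv @ y) / (2.0 * np.pi * np.sqrt(np.linalg.det(C)))

M = np.array([[2.0, 0.5],
              [0.3, 1.0]])              # hypothetical linear map y = M x
Minv = np.linalg.inv(M)
jac = abs(np.linalg.det(Minv))          # |dx/dy| = |det(M^-1)|

y = np.array([0.7, -1.2])               # an arbitrary test point
p_y_jacobian = std_normal_2d(Minv @ y) * jac   # p[x(y)] |dx/dy|
p_y_direct = gaussian_2d(y, M @ M.T)
print(p_y_jacobian, p_y_direct)
```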

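But here’s something that’s EASIER …

Suppose y(x) is a linear function, y = Mx. Then we can easily calculate the expectation of y:

$$\bar{y}_i = E(y_i) = \int\!\cdots\!\int y_i\,p(x_1 \ldots x_N)\,dx_1 \ldots dx_N$$

$$= \int\!\cdots\!\int \sum_j M_{ij} x_j\,p(x_1 \ldots x_N)\,dx_1 \ldots dx_N$$

$$= \sum_j M_{ij} \int\!\cdots\!\int x_j\,p(x_1 \ldots x_N)\,dx_1 \ldots dx_N$$

$$= \sum_j M_{ij}\,E(x_j) = \sum_j M_{ij}\,\bar{x}_j$$

So $\bar{\mathbf{y}} = \mathbf{M}\bar{\mathbf{x}}$.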

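And we can easily calculate the covariance:

$$C^y_{ij} = \int\!\cdots\!\int (y_i-\bar{y}_i)(y_j-\bar{y}_j)\,p(x_1 \ldots x_N)\,dx_1 \ldots dx_N$$

$$= \int\!\cdots\!\int \sum_p M_{ip}(x_p-\bar{x}_p) \sum_q M_{jq}(x_q-\bar{x}_q)\,p(x_1 \ldots x_N)\,dx_1 \ldots dx_N$$

$$= \sum_p M_{ip} \sum_q M_{jq} \int\!\cdots\!\int (x_p-\bar{x}_p)(x_q-\bar{x}_q)\,p(x_1 \ldots x_N)\,dx_1 \ldots dx_N$$

$$= \sum_p M_{ip} \sum_q M_{jq}\,C^x_{pq}$$

So $\mathbf{C}_y = \mathbf{M}\mathbf{C}_x\mathbf{M}^T$. Memorize!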

Note that these rules work regardless of the distribution of x: if y is linearly related to x, y = Mx, then

$\bar{\mathbf{y}} = \mathbf{M}\bar{\mathbf{x}}$ (rule for means)

$\mathbf{C}_y = \mathbf{M}\mathbf{C}_x\mathbf{M}^T$ (rule for propagating error)
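Because the rules hold regardless of the distribution of x, they can be checked with deliberately non-Gaussian inputs. This sketch assumes NumPy, builds x from independent uniform and exponential components (so $\bar{\mathbf{x}}$ and a diagonal $\mathbf{C}_x$ are known exactly), and uses an arbitrary 2×3 matrix M chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(5)
n = 500_000

# x: three independent, non-Gaussian random variables (assumed example)
x = np.column_stack([
    rng.uniform(0.0, 1.0, n),     # mean 1/2, variance 1/12
    rng.exponential(2.0, n),      # mean 2,   variance 4
    rng.uniform(-3.0, 3.0, n),    # mean 0,   variance 3
])
xbar = np.array([0.5, 2.0, 0.0])
Cx = np.diag([1.0 / 12.0, 4.0, 3.0])

M = np.array([[1.0, 2.0, 0.0],
              [0.5, -1.0, 1.0]])  # hypothetical 2x3 linear map

y = x @ M.T                       # each row is y = M x for one realization

ybar_rule = M @ xbar              # rule for means
Cy_rule = M @ Cx @ M.T            # rule for propagating error

ybar_mc = y.mean(axis=0)
Cy_mc = np.cov(y, rowvar=False, bias=True)

print("ybar: rule", ybar_rule, " simulated", ybar_mc)
print("Cy rule:\n", Cy_rule)
print("Cy simulated:\n", Cy_mc)
```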