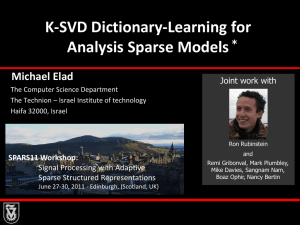

Sparse Representations and the Basis Pursuit Algorithm

advertisement

An Introduction to Sparse

Representation

and the K-SVD Algorithm

Ron Rubinstein

The CS Department

The Technion – Israel Institute of technology

Haifa 32000, Israel

University of Erlangen - Nürnberg

April 2008

D x

Noise Removal ?

Our story begins with image denoising …

?

Remove

Additive

Noise

Practical application

A convenient platform (being the simplest inverse

problem) for testing basic ideas in image processing.

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

2

Denoising By Energy Minimization

Many of the proposed denoising algorithms are related to the minimization

of an energy function of the form

1

2

f x

xy

2

2

y : Given measurements

x : Unknown to be recovered

Sanity (relation to

measurements)

Pr x

Prior or regularization

•

This is in-fact a Bayesian point of view, adopting the

Maximum-Aposteriori Probability (MAP) estimation.

•

Clearly, the wisdom in such an approach is within the

choice of the prior – modeling the images of interest.

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

Thomas Bayes

1702 - 1761

3

The Evolution Of Pr(x)

During the past several decades we have made all sort of guesses

about the prior Pr(x) for images:

2

Pr x x 2

2

Pr x L x 2

Energy

Prx x

Smoothness

1

D x

Adapt+

Smooth

Prx W x 1 Pr x = Bx 1

TotalVariation

2

Pr x L x W

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

Wavelet

Sparsity

Bilateral

Filter

Prx L x

Robust

Statistics

Pr x 0

0

for x D

Sparse &

Redundant

4

Agenda

1. A Visit to

Sparseland

Introducing sparsity & overcompleteness

2. Transforms & Regularizations

How & why should this work?

Welcome

to

Sparseland

3. What about the dictionary?

The quest for the origin of signals

4. Putting it all together

Image filling, denoising, compression, …

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

5

Generating Signals in Sparseland

K

M

N

Fixed Dictionary

Sparse &

random

vector

D α

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

N

x

• Every column in

D (dictionary) is

a prototype

signal (atom).

• The vector is

generated

randomly with

few non-zeros in

random locations

and with random

values.

6

Sparseland Signals Are Special

M

α

Multiply

by D

x Dα

• Simple: Every signal is built

as a linear combination of

a few atoms from the

dictionary D.

• Effective: Recent works

adopt this model and

successfully deploy it to

applications.

• Empirically established:

Neurological studies show

similarity between this model

and early vision processes.

[Olshausen & Field (’96)]

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

7

Transforms in Sparseland ?

• Assume that x is known to emerge from

M.

.

• How about "Given x, find the α that generated it in M " ?

N

x

Dα

M

T

α K

D

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

8

Problem Statement

We need to solve an

under-determined

linear system of equations:

• Among all (infinitely

many) possible solutions

we want the sparsest !!

• We will measure sparsity

using the L0 "norm":

α

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

Dαx

Known

0

9

Measure of Sparsity?

k

x p = xj

p

p

2

f x xp

j=1

2

p

p

As p 0 we

get a count

of the non-zeros

in the vector

α

D x

0

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

1

-1

1

1

p

p

p0

p 1

+1

x

10

Where We Are

A sparse

& random

vector

α

Min α

Multiply

by D

α

x Dα

3 Major

Questions

D x

• Is

αα

ˆ

0

s.t. x Dα

α̂

?

• NP-hard: practical ways to get

α̂ ?

• How do we know D?

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

11

Inverse Problems in Sparseland ?

• Assume that x is known to emerge from

M.

• Suppose we observe y H x v , a degraded and noisy

version of x with v 2 ε. How do we recover x?

• How about "find the α that generated y " ?

y M

M

Dα

Hx

N

x

Q

α K

ˆ

Noise

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

12

Inverse Problem Statement

v

x Dα

A sparse

& random

vector

α

Multiply

by D

Min α

"blur"

by H

α

y HDα

y Hx v

3 Major

Questions

(again!)

D x

• Is

αα

ˆ

0

s.t.

2

ε

α̂

?

• How can we compute

α̂ ?

• What D should we use?

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

13

Agenda

1. A Visit to

Sparseland

Introducing sparsity & overcompleteness

T

2. Transforms & Regularizations

How & why should this work?

3. What about the dictionary?

The quest for the origin of signals

Q

4. Putting it all together

Image filling, denoising, compression, …

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

14

The Sparse Coding Problem

Our dream for now: Find

the sparsest solution to

Dαx

Known

Put formally,

P0 : Min α 0 s.t. x Dα

α

known

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

15

Question 1 – Uniqueness?

M

α

x Dα

Min α

Multiply

by D

α

0

s.t. x Dα

α̂

Suppose we can

solve this exactly

Why should we necessarily get

α α?

ˆ

α 0 α 0.

It might happen that eventually ˆ

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

16

Matrix "Spark"

Definition: Given a matrix D, =Spark{D} is the smallest

and and

number of columns that are linearly dependent.

Donoho & Elad (‘02)

• By definition, if Dv=0 then v 0

• Say I have 1 and you have 2, and the two are

different representations of the same x :

x D 1 D 2

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

D 1 2 0

1 2 0

17

Uniqueness Rule

• Now, what if my 1 satisfies 1

?

0

2

• The rule 1 2 0 implies that 2

0

Uniqueness

Donoho & Elad (‘02)

!

2

If we have a representation that satisfies

σ

α0

2

then necessarily it is the sparsest.

So, if M generates signals using "sparse enough" ,

the solution of

P0 : Min α 0 s.t. x Dα

will find them exactly.

α

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

18

Question 2 – Practical P0 Solver?

M

α

Multiply

by D

x Dα

Min α

α

0

s.t. x Dα

Are there reasonable ways to find

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

α̂

α̂ ?

19

Matching Pursuit (MP) Mallat & Zhang (1993)

• The MP is a greedy

algorithm that finds one

atom at a time.

• Step 1: find the one atom

that best matches the signal.

• Next steps: given the

previously found atoms, find

the next one to best fit …

• The Orthogonal MP (OMP) is an improved

version that re-evaluates the coefficients after

each round.

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

20

Basis Pursuit (BP) Chen, Donoho, & Saunders (1995)

Instead of solving

Min α 0 s.t. x Dα

α

Solve this:

Min α 1 s.t. x Dα

α

• The newly defined problem is convex (linear programming).

• Very efficient solvers can be deployed:

Interior point methods [Chen, Donoho, & Saunders (`95)] ,

Iterated shrinkage

[Figuerido & Nowak (`03), Daubechies, Defrise, & Demole (‘04),

Elad (`05), Elad, Matalon, & Zibulevsky (`06)].

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

21

Question 3 – Approx. Quality?

M

α

Multiply

by D

x Dα

MP/BP

α̂

How effective are MP/BP ?

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

22

BP and MP Performance

Donoho & Elad

Gribonval & Nielsen

Tropp

Temlyakov

(‘02)

(‘03)

(‘03)

(‘03)

Given a signal x with a representation

x Dα , if 0 (somethreshold ) then

BP and MP are guaranteed to find it.

MP and BP are different in general (hard to say which is better).

The above results correspond to the worst-case.

Average performance results available too, showing much better

bounds [Donoho (`04), Candes et.al. (`04), Tanner et.al. (`05), Tropp et.al. (`06)].

• Similar results for general inverse problems

[Donoho, Elad & Temlyakov

(`04), Tropp (`04), Fuchs (`04), Gribonval et. al. (`05)].

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

23

Agenda

1. A Visit to

Sparseland

Introducing sparsity & overcompleteness

2. Transforms & Regularizations

How & why should this work?

3. What about the dictionary?

The quest for the origin of signals

4. Putting it all together

Image filling, denoising, compression, …

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

24

Problem Setting

M

α

Multiply

by D

x Dα

α 0 L

D x

P

Xj

j 1

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

Given these P examples and

a fixed size (NK) dictionary,

how would we find D?

25

The Objective Function

X

DA X F

2

Min

D, A

The examples are

linear combinations

of atoms from D

D

A

s.t. j, j 0 L

Each example has a

sparse representation with

no more than L atoms

(N,K,L are assumed known, D has normalized columns)

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

26

K–SVD – An Overview

D

Initialize

D

Sparse Coding

Use MP or BP

Dictionary

Update

X

T

Column-by-Column by

SVD computation

Aharon, Elad & Bruckstein (‘04)

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

27

K–SVD: Sparse Coding Stage

DA X F

2

Min

A

s.t. j, α j 0 L

For the jth

example

we solve

Min Dα x j

α

2

D

X

T

s.t. α 0 L

2

Ordinary Sparse Coding !

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

28

K–SVD: Dictionary Update Stage

DA X F

2

Min

D

s.t. j, α j 0 L

D

For the kth

atom

we solve

Min

dk

Ek

d a

j k

D x

T

j j

T

k k

d a Ek

2

X

T

F

X (the residual)

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

29

K–SVD Dictionary Update Stage

Min

dk

T

k k

d a Ek

2

D

F

We can do

better than

this

Min

dk ,ak

T

k k

d a Ek

2

F

X

T

But wait! What

about sparsity?

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

30

K–SVD Dictionary Update Stage

We want to solve:

Min

dk ,a k

dk

Only some of

the examples

use column dk!

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

a

T

k

Ek

When updating ak,

only recompute

the coefficients

corresponding to

those examples

2

F

Solve with

SVD!

31

The K–SVD Algorithm – Summary

D

Initialize

D

Sparse Coding

Use MP or BP

Dictionary

Update

X

T

Column-by-Column by

SVD computation

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

32

Agenda

1. A Visit to

Sparseland

Introducing sparsity & overcompleteness

2. Transforms & Regularizations

How & why should this work?

3. What about the dictionary?

The quest for the origin of signals

4. Putting it all together

Image filling, denoising, compression, …

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

33

Image Inpainting: Theory

Assumption: the signal x was created

by x=Dα0 with a very sparse α0.

D α0 x

Missing values in x imply

missing rows in this linear

system.

By removing these rows, we get

.

Dα0 = x

Now solve

Min

0

=

~ ~

s.t. x D

If α0 was sparse enough, it will be the solution of the

above problem! Thus, computing Dα0 recovers x perfectly.

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

34

Inpainting: The Practice

We define a diagonal mask operator W representing the lost

samples, so that

w i,i {0,1}

y = Wx + v

Given y, we try to recover the representation of x, by solving

α̂ = ArgMin α

α

0

s.t. y - WDα

2

x̂ D

ˆ

We use a dictionary that is the sum of two dictionaries, to get an

effective representation of both texture and cartoon contents. This

also leads to image separation [Elad, Starck, & Donoho (’05)]

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

35

Inpainting Results

Dictionary:

Curvelet (cartoon)

+ Global DCT

(texture)

Source

Outcome

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

36

Inpainting Results

Dictionary:

Curvelet (cartoon)

+ Overlapped

DCT (texture)

Source

Outcome

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

37

Inpainting Results

20%

50%

80%

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

38

Denoising: Theory and Practice

Given a noisy image y, we can clean it by solving

α̂ = ArgMin α

α

0

s.t. y - Dα

2

x̂ D

ˆ

Can we use the K-SVD dictionary?

With K-SVD, we cannot train a dictionary for an entire

image. How do we go from local treatment of patches to a

global prior?

Solution: force shift-invariant sparsity – for each NxN

patch of the image, including overlaps.

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

39

From Local to Global Treatment

α̂ = ArgMin α

α

0

s.t. y - Dα

2

x̂ D

ˆ

For patches,

our MAP penalty

becomes

ˆx = ArgMin 1 x - y

x ,{αij }ij

2

2

2

+ μ R ij x - Dαij

ij

s.t. αij 0 L

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

2

Extracts the

2 (i,j)th patch

Our prior

40

What Data to Train On?

Option 1:

Use a database of images: works quite well

(~0.5-1dB below the state-of-the-art)

Option 2:

Use the corrupted image itself !

Simply sweep through all NxN patches

(with overlaps) and use them to train

Image of size 1000x1000 pixels

~106

examples to use – more than enough.

This works much better!

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

41

Image Denoising: The Algorithm

ˆx = ArgMin 1 x - y

x ,{αij }ij ,D 2

2

2

+ μ R ij x - Dαij

ij

x and D known

x and ij known

Compute ij per patch

Compute D to minimize

αij = Min R ij x - Dα

α

2

2

s.t. αij 0 L

using matching pursuit

Min R ij x - Dα

D

ij

2

2

using SVD, updating one

column at a time

2

2

s.t. αij 0 L

D and ij known

Compute x by

x I RijTRij

ij

1

y RijTDij

ij

which is a simple averaging

of shifted patches

K-SVD

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

42

Denoising Results

Source

Result 30.829dB

Noisy image

PSNR 22.1dB

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

Initial dictionary

Obtained

dictionary

(overcomplete

DCT) 64×256

after 10 iterations

43

Denoising Results: 3D

Source:

Vis. Male Head

(Slice #137)

2d-KSVD:

PSNR=27.3dB

3d-KSVD:

PSNR=12dB

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

PSNR=32.4dB

44

Image Compression

Problem: compressing photo-ID images.

General purpose methods (JPEG,

JPEG2000) do not take into account the

specific family.

By adapting to the image-content,

better results can be obtained.

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

45

Compression: The Algorithm

Divide each image to disjoint

15x15 patches, and for each

compute a unique dictionary

Divide to disjoint patches, and

sparse-code each patch

Quantize and entropy-code

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

Compression

Detect features and align

Training set (2500 images)

Training

Detect main features and align

the images to a common

reference (20 parameters)

46

Compression Results

Original

JPEG

JPEG 2000

PCA

K-SVD

11.99

10.49

8.81

5.56

10.83

8.92

7.89

4.82

10.93

8.71

8.61

5.58

Results for

820 bytes

per image

Bottom:

RMSE values

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

47

Compression Results

Original

JPEG

JPEG 2000

PCA

K-SVD

15.81

13.89

10.66

6.60

14.67

12.41

9.44

5.49

15.30

12.57

10.27

6.36

Results for

550 bytes

per image

Bottom:

RMSE values

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

48

Today We Have Discussed

1. A Visit to

Sparseland

Introducing sparsity & overcompleteness

2. Transforms & Regularizations

How & why should this work?

3. What about the dictionary?

The quest for the origin of signals

4. Putting it all together

Image filling, denoising, compression, …

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

49

Summary

Sparsity and overcompleteness are important

ideas for designing better

tools in signal and image

processing

What

next?

Coping with

an NP-hard

problem

We have seen

inpainting, denoising

and compression

algorithms.

(a) Generalizations: multiscale, nonnegative,…

(b) Speed-ups and improved algorithms

Approximation algorithms

can be used, are

theoretically established and

work well in practice

What dictionary

to use?

How is all

Several dictionaries

this used? already exist. We have

shown how to

practically train D

using the K-SVD

(c) Deploy to other applications

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

50

Why Over-Completeness?

0.1

0.05

1

0

-0.05

-0.1

0.1

2

0.05

0

1.0

0

0.3

0.1 10

0 10-2

-0.05

-0.1

+

1

3

0.5

0.5

0

1

4

0.5

00

64

D x

-0.1

-4

10

-0.2

-6

10

-0.3

|T{1+0.32}|

-0.410-8

-0.5 -10

10

0

-0.6

0.05

|T{1+0.32+0.53+0.054}|

20

40

60

80

100

120

DCT Coefficients

128

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

51

Desired Decomposition

10

0

-2

10

10

10

10

-4

-6

-8

-10

10

0

40

80

DCT Coefficients

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

120

160

200

240

Spike (Identity) Coefficients

52

Inpainting Results

70% Missing Samples

DCT (RMSE=0.04)

Haar (RMSE=0.045)

K-SVD (RMSE=0.03)

90% Missing Samples

DCT (RMSE=0.085_

Haar (RMSE=0.07)

K-SVD (RMSE=0.06)

D x

An Introduction to

Sparse Representation

And the K-SVD Algorithm

Ron Rubinstein

53