Peer-Assessment ppt

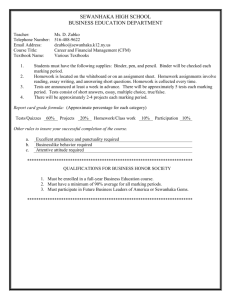

Heriot-Watt University

The Use of Computerized Peer-Assessment
Dr Phil Davies
Division of Computing & Mathematical Sciences
Department of Computing FAT
University of Glamorgan

General View of Peer Assessment
Lecturer or Student? i.e. marking
• Good for developing student reflection – So what? Where’s the marks?
• How can students be expected to mark as ‘good’ as ‘experts’?
• Why should I mark ‘properly’ and waste my time - I get a fixed mark for doing it
• The feedback given by students is not of the same standard that I give.
• I still have to do it again myself to make sure they marked ‘properly’
• Lecturers getting out of doing their jobs

Defining Peer-Assessment
• In describing the teacher ..
  A tall b******, so he was. A tall thin, mean b******, with a baldy head like a light bulb. He’d make us mark each other’s work, then for every wrong mark we got, we’d get a thump. That way – he paused – ‘we were implicated in each other’s pain’
  McCarthy’s Bar (Pete McCarthy, 2000, page 68)

What will make Computerized Peer-Assessment ACCEPTABLE to ALL?
AUTOMATICALLY CREATE A MARK THAT REFLECTS THE QUALITY OF AN ESSAY/PRODUCT VIA PEER MARKING, + A MARK THAT REFLECTS THE QUALITY OF THE PEER MARKING PROCESS i.e.
A FAIR/REFLECTIVE MARK FOR MARKING AND COMMENTING

THE FIRST CAP MARKING INTERFACE

Typical Assignment Process
• Students register to use system CAP
• Create an essay in an area associated with the module
• Provide RTF template of headings
• Submit via Bboard Digital Drop-Box
• Anonymous code given to essay automatically by system
• Use CAP system to mark

Self/Peer Assessment
• Often Self-Assessment stage used
  – Set Personal Criteria
  – Opportunity to identify errors
  – Get used to system
• Normally peer-mark about 5/6
• Raw peer MEDIAN mark produced
• Need for student to receive Comments + Marks

Compensation High and Low Markers
• Need to take this into account
• Each essay has a ‘raw’ peer generated mark - MEDIAN
• Look at each student’s marking and ascertain if ‘on average’ they are an under or over marker
• Offset mark given by this value
• Create a COMPENSATED PEER MARK
• It’s GOOD TO TALK – Tim Nice but Dim

EMAILS THE MARKER .. ANONYMOUS

Below are comments given to students. Select the 3 most Important to YOU
1. I think you’ve missed out a big area of the research
2. You’ve included a ‘big chunk’ - word for word that you haven’t cited properly
3. There aren’t any examples given to help me understand
4. Grammatically it is not what it should be like
5. Your spelling is atroceious
6. You haven’t explained your acronyms to me
7. You’ve directly copied my notes as your answer to the question
8. 50% of what you’ve said isn’t about the question
9. Your answer is not aimed at the correct level of audience
10. All the points you make in the essay lack any references for support

Order of Answers
• Were the results all in the ‘CORRECT’ order – probably not? -> Why not!
• Subject specific?
• Level specific – school, FE, HE
• Teacher/Lecturer specific?
• Peer-Assessment is no different – Objectivity through Subjectivity
• Remember – Feedback Comments as important as marks!
• Students need to be rewarded for marking and commenting WELL -> QUANTIFY COMMENTS

Each Student is using a different set of weighted comments
Comments databases sent to tutor

Comments – Both Positive and Negative in the various categories. Provides a Subjective Framework for Commenting & Marking

First Stage => Self Assess own Work
Second Stage (button on server) => Peer Assess 6 Essays

Feedback Index
• Produce an index that reflects the quality of commenting
• Produce a Weighted Feedback Index
• Compare how a marker has performed against these averages per essay for both Marking + Commenting – Looking for consistency

[Chart/table of marks against a scale running from -5 to +9; the values did not survive extraction]

The Review Element
• Requires the owner of the file to ‘ask’ questions of the marker
• Emphasis ‘should’ be on the marker
• Marker does NOT see comments of other markers who’ve marked the essays that they have marked
• Marker does not really get to reflect on their own marking – get a reflective 2nd chance
• I’ve avoided this in past -> get it right first time

Used on Final Year Degree + MSc
• MSc EL&A
• 13 students
• 76 markings
• 41 replaced markings (54%)
• Average time per marking = 42 minutes
• Range of time taken to do markings 3-72 minutes
• Average number of menu comments/marking = 15.7
• Raw average mark = 61%
• Out of 41 Markings ‘replaced’ –> 26 changed mark 26/76 (34%)
• Number of students who did replacements = 8 (out of 13)
• 2 students ‘Replaced’ ALL his/her markings
• 26 markings actually changed mark: -1, +9, -2, -2, +1, -8, -3, -5, +2, +8, -2, +6, +18 (71-89), 1, -4, -6, -5, -7, +7, -6, -3, +6, -7, -7, -2, -5 (Avge -0.2)

How to work out Mark (& Comment) Consistency
• Marker on average OVER marks by 10%
• Essay worth 60%
• Marker gave it 75%
• Marker is 15% over
• Actual consistency index (Difference) = 5
• This can be done for all marks and comments
• Creates a consistency factor for marking and commenting

Automatically Generate Mark for Marking
• Linear scale 0 -100 mapped directly to consistency … the way in HE?
• Map to Essay Grade Scale achieved (better reflecting ability of group)?
• Expectation of Normalised Results within a particular cohort / subject / institution?

Current ‘Simple’ Method
• Average Marks
  – Essay Mark = 57%
  – Marking Consistency = 5.37
• Ranges
  – Essay 79% <-> 31%
  – Marking Consistency 2.12 <-> 10.77
• Range Above Avge 22% <-> 3.25 (6.76=1)
• Range Below Avge 26% <-> 5.40 (4.81=1)

ALT-J journal entitled ‘Don’t Write, Just Mark; The Validity of Assessing Student Ability via their Computerized Peer-Marking of an Essay rather than their Creation of an Essay’, ALT-J (CALT) Vol. 12 No. 3, pp 263-279.
• Took a Risk
• No necessity to write an essay
• Judged against previous essays from past – knew mark and feedback index
• NO PLAGIARISM opportunity
• Worked really well

Some Points Outstanding or Outstanding Points
• What should students do if they identify plagiarism?
• What about accessibility?
• Is a computerised solution valid for all subject areas?
• At what age / level can we trust the use of peer assessment?
• How do we assess the time required to perform the marking task?
• What split of the marks between creation & marking

Summary
• Research / Meeting Pedagogical Needs / Improving relationship between assessment & learning – Keep asking yourself WHY & WHAT am I assessing?
• DON’T LET THE TECHNOLOGISTS DRIVE THE ASSESSMENT PROCESS!! i.e. Lunatics taking over the asylum
• Don’t let the ‘artificial’ need to adhere to standards & large scale implementation of on-line assessment be detrimental to the assessment and learning needs of you and your students. ‘Suck it and see’.
• By using composite e-assessment methods we are then able to assess the full range of learning + assessment
• Favourite Story to finish

Personal Reference Sources
• CAA Conference Proceedings http://www.caaconference.com/
• ‘Computerized Peer-Assessment’, Innovations in Education and Training International Journal (IETI), 37, 4, pp 346-355, Nov 2000.
• ‘Using Student Reflective Self-Assessment for Awarding Degree Classifications’, Innovations in Education and Training International Journal (IETI), 39, 4, pp 307-319, Nov 2002.
• ‘Closing the Communications Loop on the Computerized Peer Assessment of Essays’, ALT-J, 11, 1, pp 41-54, 2003.
• ‘Peer-Assessment: No marks required, just feedback’, ALT-C 2003 Research stream paper, Sheffield University, Sept 2003.
• ‘Don’t Write, Just Mark; The Validity of Assessing Student Ability via their Computerized Peer-Marking of an Essay rather than their Creation of an Essay’, ALT-J (CALT), Vol. 12 No. 3, pp 263-279.
• ‘Peer-Assessment: Judging the quality of student work by the comments not the marks?’, Innovations in Education and Teaching International (IETI), 43, 1, pp 69-82, 2006.

Contact Information
Phil Davies
pdavies@glam.ac.uk
J317
01443 482247
University of Glamorgan
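
Worked sketch of the marking arithmetic

The slides ‘Compensation High and Low Markers’, ‘How to work out Mark (& Comment) Consistency’ and ‘Current ‘Simple’ Method’ describe arithmetic that may be easier to follow as code. The Python below is only an illustrative sketch, not the CAP implementation. The function names and data layout are hypothetical. Measuring a marker's average bias against the raw median, taking the median of the offset marks, and reading ‘6.76=1’ and ‘4.81=1’ as marks per unit of consistency are all assumptions.

```python
from statistics import mean, median


def raw_peer_marks(markings):
    """markings: list of (marker, essay, mark). Returns {essay: raw MEDIAN mark}."""
    by_essay = {}
    for _marker, essay, mark in markings:
        by_essay.setdefault(essay, []).append(mark)
    return {essay: median(marks) for essay, marks in by_essay.items()}


def marker_bias(markings, raw):
    """Average over-marking (+) or under-marking (-) per marker.

    Assumption: bias is measured against the raw median of each essay marked.
    """
    diffs = {}
    for marker, essay, mark in markings:
        diffs.setdefault(marker, []).append(mark - raw[essay])
    return {marker: mean(d) for marker, d in diffs.items()}


def compensated_peer_marks(markings, bias):
    """Offset every mark by its marker's bias, then take the median again.

    Assumption: the slides only say the mark is offset 'by this value'.
    """
    by_essay = {}
    for marker, essay, mark in markings:
        by_essay.setdefault(essay, []).append(mark - bias[marker])
    return {essay: median(marks) for essay, marks in by_essay.items()}


def consistency_difference(mark_given, essay_mark, average_bias):
    """Distance between this marking's offset and the marker's usual offset."""
    return abs((mark_given - essay_mark) - average_bias)


def mark_for_marking(consistency, avg_mark=57.0, avg_consistency=5.37,
                     best_consistency=2.12, worst_consistency=10.77,
                     top_mark=79.0, bottom_mark=31.0):
    """Piecewise-linear map from consistency to a mark (assumed reading).

    Defaults are the figures on the 'Current Simple Method' slide.
    Values outside the observed consistency range are not clamped.
    """
    if consistency <= avg_consistency:
        per_unit = (top_mark - avg_mark) / (avg_consistency - best_consistency)
        return avg_mark + (avg_consistency - consistency) * per_unit
    per_unit = (avg_mark - bottom_mark) / (worst_consistency - avg_consistency)
    return avg_mark - (consistency - avg_consistency) * per_unit


if __name__ == "__main__":
    # Slide example: marker usually over-marks by 10, essay worth 60, gave 75.
    print(consistency_difference(75, 60, 10))  # 5
```

With the slide's own figures, a marker who usually over-marks by 10 and gives 75 to an essay worth 60 has a consistency difference of 5. Under the assumed piecewise mapping, a consistency of 5.37 maps to the average essay mark of 57%, while the best (2.12) and worst (10.77) values map to roughly 79% and 31%.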