Standardization of phenotyping algorithms and data

advertisement

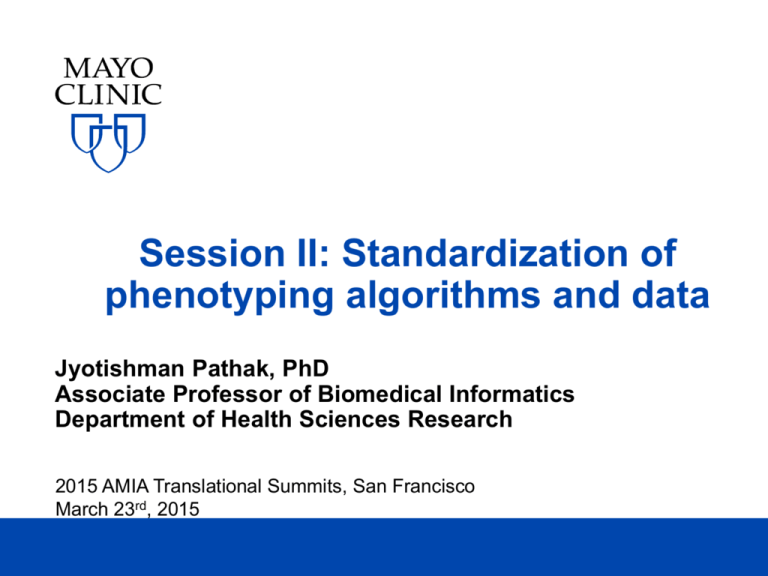

Session II: Standardization of

phenotyping algorithms and data

Jyotishman Pathak, PhD

Associate Professor of Biomedical Informatics

Department of Health Sciences Research

2015 AMIA Translational Summits, San Francisco

March 23rd, 2015

DISCLOSURES

Relevant Financial Relationship(s)

Mayo Clinic and Dr. Pathak have a financial

interest related to a device, product or

technology referenced in this presentation

with Apervita Inc.®

Off Label Usage

None

2015 AMIA Translational Summits

©2015 MFMER | slide-2

Outline

• Standards-based approaches to EHR

phenotyping

• NQF Quality Data Model

• JBoss® Drools business rules environment

• PhenotypePortal.org

• Standards-based approaches to phenotype

data representation

• Biomedical vocabularies and information models

• eleMAP data element harmonization toolkit

2015 AMIA Translational Summits

©2015 MFMER | slide-3

Key lessons learned from eMERGE

• Algorithm design and transportability

•

•

•

•

Non-trivial; requires significant expert involvement

Highly iterative process

Time-consuming manual chart reviews

Representation of “phenotype logic” is critical

• Standardized data access and representation

• Importance of unified vocabularies, data elements, and value sets

• Questionable reliability of ICD & CPT codes (e.g., billing the wrong

code since it is easier to find)

• Natural Language Processing (NLP) is critical

[Kho et al. Sc. Trans. Med 2011; 3(79): 1-7]

2015 AMIA Translational Summits

©2015 MFMER | slide-4

Example algorithm: Hypothyroidism

2015 AMIA Translational Summits

©2012 MFMER | slide-5

EHR-driven Phenotyping Algorithms

Rules

Evaluation

Phenotype

Algorithm

Transform

Mappings

Visualization

Transform

Data

NLP, SQL

2015 AMIA Translational Summits

[eMERGE Network]

©2015 MFMER | slide-6

Porting Phenotype Algorithms

• Initial eMERGE plan: sharing SQL, XML, near

executable pseudo-code

• That didn’t work: the sites were too heterogeneous

• More effective: sharing documents and humanreadable flowcharts

• Downside: requires humans to translate, each

implementation requires interpretation of ambiguity

• Moving forward: executable algorithm and

workflow specifications (back to the intentions of the

initial plan)

• Requirements: portability, standards-based (when

possible), buy-in at different levels

2015 AMIA Translational Summits

©2015 MFMER | slide-7

Algorithm Execution Process (baseline)

Human

Structured

Data

Extract &

Transform

Custom Scripts/Code

Text

NLP

Combine

Execute

Algorithm

Algorithm

Specifications

Custom

Scripts

Text

[eMERGE Network]

2015 AMIA Translational Summits

©2015 MFMER | slide-8

Pros and Cons

• Pros

• Very flexible

• “Portable” (with caveats)

• Cons

• Too flexible (no standard format)

• Requires implementation from scratch for every

•

algorithm

Error prone; can lead to ambiguity

2015 AMIA Translational Summits

©2015 MFMER | slide-9

Algorithm Execution Process

(standardized phenotype definitions)

Human

Structured

Data

Extract &

Transform

Custom Scripts/Code

Text

NLP

Combine

Execute

Algorithm

Algorithm

Specifications

Custom

Scripts

QDM

[eMERGE Network]

2015 AMIA Translational Summits

©2015 MFMER | slide-10

Pros and Cons

• Pros

• Consistent format

• Standards based

• Automatic translation to HTML (etc.) for human

consumption

• Cons

• Not as flexible as text

• May require extensions to cover algorithms not

•

expressible as Boolean rules

Still requires mapping to executable code

2015 AMIA Translational Summits

©2015 MFMER | slide-11

Algorithm Execution Process

(custom execution engine)

Automatic

Structured

Data

Extract &

Transform

Custom Scripts/Code

Text

Combine

NLP

Target

Execute

Algorithm

Algorithm

Specifications

Custom

Engine

QDM

[eMERGE Network]

2015 AMIA Translational Summits

©2015 MFMER | slide-12

Pros and Cons

• Pros

• Same as for previous, plus

• Consistent target for mapping specifications

• Allows possibility of automatic mapping

• Cons

• Need to transform source data into format

•

•

required by execution engine

Not portable to other institutions (may not be a

problem if you don’t care)

Unclear how to integrate NLP scripts/code

2015 AMIA Translational Summits

©2015 MFMER | slide-13

Algorithm Execution Process

(common execution engine)

Automatic

Structured

Data

Extract &

Transform

Custom Scripts/Code

Text

Combine

NLP

Target

Execute

Algorithm

Algorithm

Specifications

Drools,

KNIME

QDM

[eMERGE Network]

2015 AMIA Translational Summits

©2015 MFMER | slide-14

Pros and Cons

• Pros

• Same as for previous, plus

• Can re-use mappings developed externally

• Cons

• Need to transform source data into format

•

•

required by execution engine

Automatic mappings may be sub-optimal

Execution engine may not fit idiosyncratic

features of local data sources

2015 AMIA Translational Summits

©2015 MFMER | slide-15

NQF Quality Data Model (QDM) - I

• Standard of the National Quality Forum (NQF)

• A structure and grammar to represent quality measures

and phenotype definitions in a standardized format

• Groups of codes in a code set (ICD-9, etc.)

• "Diagnosis, Active: steroid induced diabetes" using

"steroid induced diabetes Value Set GROUPING

(2.16.840.1.113883.3.464.0001.113)”

• Supports temporality & sequences

• AND: "Procedure, Performed: eye exam" > 1 year(s)

starts before or during "Measurement end date"

• Implemented as a set of XML schemas

• Links to standardized terminologies (ICD-9, ICD-10,

SNOMED-CT, CPT-4, LOINC, RxNorm etc.)

2015 AMIA Translational Summits

©2015 MFMER | slide-16

NATIONAL QUALITY FORUM

NQF Quality Data Model (QDM) - II

[NQF QDM update, June 2012]

M Composition Diagram—The diagram depicts the definition of a QDM element beginning with defining a category, or the type of info

examples shown in the center blue boxes). The clear boxes on the left hand side of the drawing show the application of a state, or cont

2015 AMIA Translational Summits

©2015 MFMER | slide-17

NATIONAL

QUALITY

FORUM

NQF Quality

Data

Model

(QDM) - III

[NQF QDM update, June 2012]

Element Structure—The components of a QDM element are shown in the figure. The graphic on the left identifies the terms used

e QDM element. The graphic on the right uses each of these components to describe a QDM element indicating “Medication, admin

DM element is composed of a category, the state in which that category is expected to be used, and a value set of codes in a defined

2015 AMIA

Summits

©2015individual

MFMER attributes,

| slide-18

pecify which instance of the category is expected. The boxes

in theTranslational

lower section

of the QDM element specify

2015 AMIA Translational Summits

©2015 MFMER | slide-19

Example: Diabetes & Lipid Mgmt. - I

2015 AMIA Translational Summits

©2012 MFMER | slide-20

Example: Diabetes & Lipid Mgmt. - II

Human readable HTML

2015 AMIA Translational Summits

©2015 MFMER | slide-21

Example: Diabetes & Lipid Mgmt. - III

2015 AMIA Translational Summits

©2015 MFMER | slide-22

Example: Diabetes & Lipid Mgmt. - IV

2015 AMIA Translational Summits

©2015 MFMER | slide-23

Example: Diabetes & Lipid Mgmt. - V

Computer readable HQMF XML

(based on HL7 v3 RIM)

2015 AMIA Translational Summits

©2015 MFMER | slide-24

Modeling NQF criteria using Measure

Authoring Tool (MAT)

2015 AMIA Translational Summits

©2015 MFMER | slide-25

2015 AMIA Translational Summits

©2012 MFMER | slide-26

An Evaluation of the NQF Quality Data Model for Representing Electronic

Health Record Driven Phenotyping Algorithms

William K. Thompson, Ph.D.1, Luke V. Rasmussen1, Jennifer A. Pacheco1,

Peggy L. Peissig, M.B.A.2, Joshua C. Denny, M.D. 3, Abel N. Kho, M.D. 1,

Aaron Miller, Ph.D.2, Jyotishman Pathak, Ph.D.4,

1

Northwestern University, Chicago, IL; 2Marshfield Clinic, Marshfield, WI; 3Vanderbilt

University, Nashville, TN; 4Mayo Clinic, Rochester, MN

Abstract

The development of Electronic Health Record (EHR)-based phenotype selection algorithms is a non-trivial and

highly iterative process involving domain experts and informaticians. To make it easier to port algorithms across

institutions, it is desirable to represent them using an unambiguous formal specification language. For this purpose

we evaluated the recently developed National Quality Forum (NQF) information model designed for EHR-based

quality measures: the Quality Data Model (QDM). We selected 9 phenotyping algorithms that had been previously

developed as part of the eMERGE consortium and translated them into QDM format. Our study concluded that the

QDM contains several core elements that make it a promising format for EHR-driven phenotyping algorithms for

clinical research. However, we also found areas in which the QDM could be usefully extended, such as representing

information extracted from clinical text, and the ability to handle algorithms that do not consist of Boolean

combinations of criteria.

Introduction and Motivation

Identifying subjects for clinical trials and research studies can be a time-consuming and expensive process. For this

reason, there has been much interest in using the electronic health records (EHRs) to automatically identify patients

that match clinical study eligibility criteria, making it possible to leverage existing patient data to inexpensively and

automatically generate lists of patients that possess desired phenotypic traits.1,2 Yet the development of EHR-based

phenotyping algorithms is a non-trivial and highly iterative process involving domain experts and data analysts.3 It

is therefore desirable to make it as easy as possible to re-use such algorithms across institutions in order to minimize

the degree of effort involved, as well as the potential for errors due to ambiguity or under-specification. Part of the

solution to this issue is the adoption of an unambiguous and precise formal specification language for representing

phenotyping algorithms. This step is naturally a pre-condition for achieving the long-term goal of automatically

executable phenotyping algorithm specifications. A key element required to achieve this goal is a formal

representation for modeling the algorithms that can aid portability by enforcing standard syntax on algorithm

specifications, along with providing well-defined mappings to standard semantics of data elements and value sets.

[Thompson

et al., AMIA 2012]

Our experience in the development of phenotyping algorithms stems from work performed as part

of the electronic

4

Medical Records and Genomics (eMERGE) consortium, a network of seven sites originally using data collected in

the EHR as part of routine clinical care to

detect

phenotypes

for use

in genome-wide association studies. The

2015

AMIA

Translational

Summits

©2015 MFMER | slide-27

An Evaluation of the NQF Quality Data Model for Representing Electronic

Health Record Driven Phenotyping Algorithms

William K. Thompson, Ph.D.1, Luke V. Rasmussen1, Jennifer A. Pacheco1,

Peggy L. Peissig, M.B.A.2, Joshua C. Denny, M.D. 3, Abel N. Kho, M.D. 1,

Aaron Miller, Ph.D.2, Jyotishman Pathak, Ph.D.4,

1

Northwestern University, Chicago, IL; 2Marshfield Clinic, Marshfield, WI; 3Vanderbilt

University, Nashville, TN; 4Mayo Clinic, Rochester, MN

Abstract

The development of Electronic Health Record (EHR)-based phenotype selection algorithms is a non-trivial and

highly iterative process involving domain experts and informaticians. To make it easier to port algorithms across

institutions, it is desirable to represent them using an unambiguous formal specification language. For this purpose

we evaluated the recently developed National Quality Forum (NQF) information model designed for EHR-based

quality measures: the Quality Data Model (QDM). We selected 9 phenotyping algorithms that had been previously

developed as part of the eMERGE consortium and translated them into QDM format. Our study concluded that the

QDM contains several core elements that make it a promising format for EHR-driven phenotyping algorithms for

clinical research. However, we also found areas in which the QDM could be usefully extended, such as representing

information extracted from clinical text, and the ability to handle algorithms that do not consist of Boolean

combinations of criteria.

Introduction and Motivation

Identifying subjects for clinical trials and research studies can be a time-consuming and expensive process. For this

reason, there has been much interest in using the electronic health records (EHRs) to automatically identify patients

that match clinical study eligibility criteria, making it possible to leverage existing patient data to inexpensively and

automatically generate lists of patients that possess desired phenotypic traits.1,2 Yet the development of EHR-based

phenotyping algorithms is a non-trivial and highly iterative process involving domain experts and data analysts.3 It

is therefore desirable to make it as easy as possible to re-use such algorithms across institutions in order to minimize

the degree of effort involved, as well as the potential for errors due to ambiguity or under-specification. Part of the

solution to this issue is the adoption of an unambiguous and precise formal specification language for representing

phenotyping algorithms. This step is naturally a pre-condition for achieving the long-term goal of automatically

executable phenotyping algorithm specifications. A key element required to achieve this goal is a formal

representation for modeling the algorithms that can aid portability by enforcing standard syntax on algorithm

[Thompson

et al., AMIA 2012]

specifications, along with providing well-defined mappings to standard semantics of data elements

and value sets.

Our experience in the development of phenotyping algorithms stems from work performed as part of the electronic

Medical Records and Genomics (eMERGE)4 consortium, a network of seven sites originally using data collected in

the EHR as part of routine clinical care to

detect

phenotypes

for use

in genome-wide association

studies.

The | slide-28

2015

AMIA

Translational

Summits

©2015

MFMER

JBoss® Drools rules management system

• Represents knowledge with

declarative production rules

• Origins in artificial intelligence

expert systems

• Simple when <pattern> then

<action> rules specified in

text files

• Separation of data and logic

into separate components

• Forward chaining inference

model (Rete algorithm)

• Domain specific languages

(DSL)

2015 AMIA Translational Summits

©2015 MFMER | slide-29

Example Drools rule

{Rule Name}

rule

when

“Glucose <= 40, Insulin On”

{binding}

{Java Class}

{Class Getter Method}

$msg : GlucoseMsg(glucoseFinding <= 40,

currentInsulinDrip > 0 )

then

{Class Setter Method}

glucoseProtocolResult.setInstruction(GlucoseInstructions.

GLUCOSE_LESS_THAN_40_INSULIN_ON_MSG);

end

Parameter {Java Class}

2015 AMIA Translational Summits

©2015 MFMER | slide-30

Automatic translation from NQF criteria

to Drools

Measure

Authoring

Toolkit

From non-executable to

executable

Drools

Engine

Measures

XML-based

Structured

representation

Data Types

XML-based

structured

representation

Value Sets

Converting measures to

Drools scripts

Mapping data types

and value sets

Drools

scripts

Fact

Models

saved in XLS

files

2015 AMIA Translational Summits

©2015 MFMER | slide-31

[Li et al., AMIA 2012]

2015 AMIA Translational Summits

©2015 MFMER | slide-32

The “executable” Drools workflow

[Li et al., AMIA 2012]

2015 AMIA Translational Summits

©2015 MFMER | slide-33

http://phenotypeportal.org

[Endle et al., AMIA 2012]

1. Converts QDM to

Drools

2. Rule execution by

querying the CEM

database

3. Generate

summary reports

2015 AMIA Translational Summits

©2012 MFMER | slide-36

2015 AMIA Translational Summits

©2012 MFMER | slide-37

[Peterson AMIA 2014]

2015 AMIA Translational Summits

©2015 MFMER | slide-38

Example: Initial Patient Population criteria

for CMS eMeasure (CMS163V1)

2015 AMIA Translational Summits

©2012 MFMER | slide-39

[Peterson AMIA 2014]

2015 AMIA Translational Summits

©2015 MFMER | slide-40

Validation using Project Cypress

[Peterson AMIA 2014]

2015 AMIA Translational Summits

©2015 MFMER | slide-41

Alternative: Execution using KNIME

2015 AMIA Translational Summits

©2015 MFMER | slide-42

Outline

• Standards-based approaches to EHR

phenotyping

• NQF Quality Data Model

• JBoss® Drools business rules environment

• PhenotypePortal.org

• Standards-based approaches to phenotype

data representation

• Biomedical vocabularies and information models

• eleMAP data element harmonization toolkit

2015 AMIA Translational Summits

©2015 MFMER | slide-43

Overall Objective - I

• Without terminology and metadata standards:

• Health data is non-comparable

• Health systems cannot meaningfully

•

interoperate

Secondary uses of data for research and

applications (e.g., clinical decision support) is

not possible

• Our goal: Standardized and consistent

representation of eMERGE phenotype

data submitted to dbGaP (Database of

Genotypes and Phenotypes)

2015 AMIA Translational Summits

©2015 MFMER | slide-44

Overall Objective - II

1.Create/modify

a data

dictionary

2. Harmonize

data

elements to

standards

3. Generate

standardized

phenotype

data

2015 AMIA Translational Summits

Raw/Local Data from

EMR

©2015 MFMER | slide-45

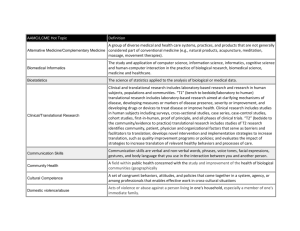

What are Data Dictionaries?

• Data dictionaries

• Collections of variables

• Often constructed for a particular study

• May contain common and study-specific

variables

• Wide spectrum of formality

• Inconsistencies can complicate (or prevent) data

integration

• Differences in format (require transformations)

• Differences in semantic meaning

• Differences in data values

[PHONT RIPS 2011]

2015 AMIA Translational Summits

©2015 MFMER | slide-46

Data Element Standardization

Lab Measurements

trig_bl

Triglycerides (mg/dL)

Fasting triglycerides measured at Clinic

triglycerides Visit 1 in mg/dl units

Calculated variable: natural log of

tTriglycerides triglycerides

• Similar data element with different semantics

• Fasting vs. non-fasting (implied semantics?)

• Specific time is implied (visit number)

• Mathematically transformed (natural log)

[PHONT RIPS 2011]

2015 AMIA Translational Summits

©2015 MFMER | slide-47

Data Element Standardization

Medications

0 = No;

1 = Amiodarone (Cordarone,Pacerone);

2 = Sotalol (Betapace);

3 = Propafenone (Rhythmol);

4 = Quinidine (Quinaglute,Quinalan);

5 = Procainamide (Procanbid);

6 = Flecainide (Tambocor);

7 = Disopyramide (Norpace);

8 = Other;

9 = Mexilitene (Mexitil);

10 = Tikosyn (Dofetilide);

-8 = Not Applicable;

-1 = Unknown

• Different permissible values

1 = ACE Inhibitors;

2 = Amiodarone;

3 = Angiotensin Receptor Blockers;

4 = Beta Blockers;

5 = Calcium Channel Blockers;

6 = Class IA;

7 = Class IB;

8 = Class IC;

9 = Class III;

10 = Class V;

-1 = Unknown;

-1 = No Meds

• Can use standard drug ontologies

• Content and meaning of values

• Local meanings ("other",

"unknown")

• Could also have different

representations (codes vs. text)

(RxNorm)

• Standardize drug names and

classes (NDF-RT)

• Auto-classify agents (more

flexible, less error prone)

2015 AMIA Translational Summits

[PHONT RIPS 2011]

©2015 MFMER | slide-48

eMERGE Data Dictionary Standardization

• Data dictionary standardization effort

• Harmonize core eMERGE data elements

• Leverage standardized ontologies and metadata

• Develop SOPs for data dictionary usage

Standardization

Collect Dictionaries

Library of Standardized

Data Elements

[PHONT RIPS 2011]

2015 AMIA Translational Summits

©2015 MFMER | slide-49

Background: Clinical Terminology Standards

and Resources

• NCI Cancer Data Standards Repository

• Metadata registry based on ISO/IEC 11179 standard

•

•

•

for storing common data elements (CDEs)

Allows creating, editing, deploying, and finding of

CDEs

Provides the backbone for NCI’s semantic-computing

environment, including caBIG (Cancer Biomedical

Informatics Grid)

Approx. 40,000 CDEs

2015 AMIA Translational Summits

©2015 MFMER | slide-50

2015 AMIA Translational Summits

51

2015 AMIA Translational Summits

52

Background: Clinical Terminology Standards

and Resources

• CDISC Terminology

• To define and support terminology needs of the

•

•

CDISC models across the clinical trial continuum

Used as part of the Study Data Tabulation Model: an

international standard for clinical research data,

approved by the FDA as a standard electronic

submission format

Comprises approx. 2300 terms covering

demographics, interventions, findings, events, trial

design, units, frequency, and ECG terminology

2015 AMIA Translational Summits

©2015 MFMER | slide-53

2015 AMIA Translational Summits

54

Background: Clinical Terminology Standards

and Resources

• NCI Thesaurus

• Reference terminology for clinical care,

•

•

translational and basic cancer research

Comprises approx. 70,000 concepts

representing information for nearly 10,000

cancers and related diseases

NCI Enterprise Vocabulary Services

(LexEVS) provides the terminology

infrastructure for caBIG, NCBO etc.

2015 AMIA Translational Summits

©2015 MFMER | slide-55

2015 AMIA Translational Summits

56

2015 AMIA Translational Summits

57

Background: Clinical Terminology Standards

and Resources

• Systematized Nomenclature of Medicine Clinical

Terms is a comprehensive terminology covering

most areas of clinical information including

diseases, findings, procedures, microorganisms,

pharmaceuticals etc.

• Comprises approx. 370,000 concepts

• Acquired by International Health Terminology

Standards Development Organization (IHTSDO)

in 2007

2015 AMIA Translational Summits

©2015 MFMER | slide-58

2015 AMIA Translational Summits

59

Background: Clinical Terminology Standards

and Resources

• LOINC

• Logical Observation Identifiers Names and

•

•

Codes provides a set of universal codes and

names to identify laboratory and other clinical

observations

Over 100,000 terms

RELMA: Regenstreif LOINC Mapping

Assistant Program helps users map their

local terms or lab tests to universal LOINC

codes

2015 AMIA Translational Summits

©2015 MFMER | slide-60

2015 AMIA Translational Summits

61

eleMAP Conceptual Architecture

https://victr.vanderbilt.edu/e

MAP

2015 AMIA Translational Summits

©2015 MFMER | slide-62

eleMAP Data Harmonization Process

• 5 easy steps

1.Create a study

2.Create your data dictionary

3.Harmonize the data elements

4.Harmonize the actual/raw data

5.Iterate if necessary…

• Quick demo or Screen Shots

2015 AMIA Translational Summits

©2015 MFMER | slide-63

eleMAP Data Harmonization Process: 1

Step 1: Select “Harmonize Data” under My Account

2015 AMIA Translational Summits

©2015 MFMER | slide-64

eleMAP Data Harmonization Process: 2

Step 2: Select Study, Source, and Upload raw data file

2015 AMIA Translational Summits

©2015 MFMER | slide-65

eleMAP Data Harmonization Process: 3

Step 3: Click OK to confirm import of data file

2015 AMIA Translational Summits

©2015 MFMER | slide-66

eleMAP Data Harmonization Process: 4

Step 4: Display of harmonized data; Download file

2015 AMIA Translational Summits

©2015 MFMER | slide-67

eleMAP Data Harmonization Process: 5

Step 5: Harmonized data file for dbGaP submission

2015 AMIA Translational Summits

©2015 MFMER | slide-68

eleMAP Statistics (as of 03/09/2015)

• 18 different eMERGE studies

• 407 data elements, across 13 different

categories

•

•

•

•

•

68% mapped to caDSR CDEs

41% mapped to SNOMED CT concepts

41% mapped to NCI Thesaurus concepts

25% mapped to SDTM DEs

30% DEs have no mapping

2015 AMIA Translational Summits

©2015 MFMER | slide-69

Key lessons learned from eMERGE

phenotype data integration

Use case: eMERGE Network Combined Dataset

Studies: RBC, WBC, Height, Lipids, Diabetic Retinopathy, and

Hypothyroidism

1. Issue: Data value inconsistencies were found in common variables among

studies (e.g. race).

Suggestion: Use eleMAP to define study phenotypes/data elements and

disseminate finalized DD to all sites prior to actual data collection.

2. Issue: Same variable name was used for different data element concepts (e.g.

weight, height, and BMI)

Suggestion: Use eleMAP to review concept/description of existing data

elements and define new data elements if necessary.

3. Issue: Inconsistent data values were received (e.g. Sex). Some were original

values (F=Female; M=Male) and some were mapped values

(Female=C46110;Male=C46109).

Suggestion: Best to gather data in original values, combined data sets, and

then harmonize merged data files via eleMAP.

2015 AMIA Translational Summits

©2015 MFMER | slide-70

PheKB Data Dictionary Validation

• Validate data dictionary

• Columns

• Formatting

• Validate data file against data dictionary

• Variable names, order

• Data types, min, max

• Encoded values

2015 AMIA Translational Summits

©2015 MFMER | slide-71

PheKB Data Dictionary Validation

2015 AMIA Translational Summits

©2015 MFMER | slide-72

PheKB Data Dictionary Validation

2015 AMIA Translational Summits

©2015 MFMER | slide-73

PheKB Data Dictionary Validation

2015 AMIA Translational Summits

©2015 MFMER | slide-74

PheKB Data Dictionary Validation

2015 AMIA Translational Summits

©2015 MFMER | slide-75

Future eleMAP/PheKB Integration

• Tightly integrate data dictionary authoring in the

phenotype definition process

• Utilize eleMAP functionality to define the data

dictionary

• Submitted data sets validated against the builtin definition

2015 AMIA Translational Summits

©2015 MFMER | slide-76

Concluding remarks

• Standardization of phenotyping algorithms

and data dictionaries is critical

• Portability of algorithms across multiple

EMR environments

• Consistent and comparable data sets

• To the extent possible, the goal should be

to leverage on-going community-wide and

national standardization efforts

• Join the club!

2015 AMIA Translational Summits

©2015 MFMER | slide-77

Relevant presentations

• Monday (03/23/15)

• Session: TBI03 (Cyril Magnin III) @ 3:30PM

• Computational Phenotyping from Electronic

Health Records across National Networks

• Wednesday (03/25/15)

• Session: CRI02 (Embarcadero) @ 10:30AM

• A Modular Architecture for Electronic Health

Record-Driven Phenotyping

2015 AMIA Translational Summits

©2015 MFMER | slide-78

http://projectphema.org

2015 AMIA Translational Summits

©2015 MFMER | slide-79

Thank You!

Pathak.Jyotishman@mayo.edu

http://jyotishman.info

2015 AMIA Translational Summits

©2015 MFMER | slide-80