chapter3

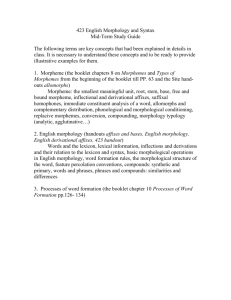

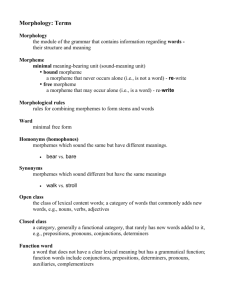

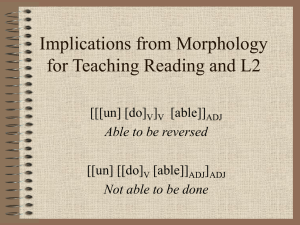

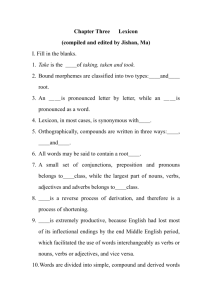

Morphological Analysis
Chapter 3

Morphology
• Morpheme = "minimal meaning-bearing unit in a language"
• Morphology handles the formation of words by using morphemes
  – base form (stem, lemma), e.g., believe
  – affixes (suffixes, prefixes, infixes), e.g., un-, -able, -ly
• Morphological parsing = the task of recognizing the morphemes inside a word
  – e.g., hands, foxes, children
• Important for many tasks
  – machine translation, information retrieval, etc.
  – parsing, text simplification, etc.

Morphemes and Words
• Combine morphemes to create words
• Inflection
  – combination of a word stem with a grammatical morpheme
  – same word class, e.g., clean (verb), clean-ing (verb)
• Derivation
  – combination of a word stem with a grammatical morpheme
  – yields a different word class, e.g., delight (verb), delight-ful (adj)
• Compounding
  – combination of multiple word stems
• Cliticization
  – combination of a word stem with a clitic
  – different words from different syntactic categories, e.g., I’ve = I + have

Inflectional Morphology
• word stem + grammatical morpheme, e.g., cat + s
• only for nouns, verbs, and some adjectives
• Nouns
  – plural: regular: +s, +es; irregular: mouse - mice, ox - oxen
  – many spelling rules, e.g., -y -> -ies, as in butterfly - butterflies
  – possessive: +'s, +'
• Verbs
  – main verbs (sleep, eat, walk)
  – modal verbs (can, will, should)
  – primary verbs (be, have, do)

Inflectional Morphology (verbs)
• Verb inflections for main verbs (sleep, eat, walk) and primary verbs (be, have, do)

| Morphological form | Regularly inflected forms | | | |
|---|---|---|---|---|
| stem | walk | merge | try | map |
| -s form | walks | merges | tries | maps |
| -ing participle | walking | merging | trying | mapping |
| past form or -ed participle | walked | merged | tried | mapped |

| Morphological form | Irregularly inflected forms | | |
|---|---|---|---|
| stem | eat | catch | cut |
| -s form | eats | catches | cuts |
| -ing participle | eating | catching | cutting |
| past form | ate | caught | cut |
| -ed participle | eaten | caught | cut |

Inflectional Morphology (nouns)
• Noun inflections for regular nouns (cat, hand) and irregular nouns (child, ox)

| Morphological form | Regularly inflected forms | |
|---|---|---|
| stem | cat | hand |
| plural form | cats | hands |

| Morphological form | Irregularly inflected forms | |
|---|---|---|
| stem | child | ox |
| plural form | children | oxen |

Inflectional and Derivational Morphology (adjectives)
• Adjective inflections and derivations:

| Affix type | Affix | Example | Result |
|---|---|---|---|
| prefix | un- | unhappy | adjective, negation |
| suffix | -ly | happily | adverb, manner |
| suffix | -ier, -iest | happier, happiest | comparative, superlative |
| suffix | -ness | happiness | noun |

• plus combinations, like unhappiest, unhappiness
• Distinguish different adjective classes, which can or cannot take certain inflectional or derivational forms, e.g., no negation for big

Derivational Morphology (nouns)

Derivational Morphology (adjectives)

Verb Clitics

Morphology and FSAs
• We’d like to use the machinery provided by FSAs to capture these facts about morphology
  – Recognition: accept strings that are in the language, reject strings that are not
  – In a way that doesn’t require us to, in effect, list all the words in the language

Computational Lexicons
• Depending on the purpose, computational lexicons have various types of information
  – Between FrameNet and WordNet, we saw POS, word sense, subcategorization, semantic roles, and lexical semantic relations
  – For our purposes now, we care about stems, irregular forms, and information about affixes

Starting Simply
• Let’s start simply:
  – Regular singular nouns are listed explicitly in the lexicon
  – Regular plural nouns have an -s on the end
  – Irregulars are listed explicitly too

Simple Rules

Now Plug in the Words
• Recognition of valid words
• But “foxs” isn’t right; we’ll see how to fix that

Parsing/Generation vs. Recognition
• We can now run strings through these machines to recognize strings in the language
• But recognition is usually not quite what we need
  – Often, if we find some string in the language, we might like to assign a structure to it (parsing)
  – Or we might have some structure and want to produce a surface form for it (production/generation)
• Example: from “cats” to “cat +N +PL”

Finite State Transducers
• Add another tape
• Add extra symbols to the transitions
• On one tape we read “cats”, on the other we write “cat +N +PL”

FSTs

Applications
• The kind of parsing we’re talking about is normally called morphological analysis
• It can either be
  – an important stand-alone component of many applications (spelling correction, information retrieval)
  – or simply a link in a chain of further linguistic analysis

Transitions
c:c a:a t:t +N:ε +PL:s
• c:c means read a c on one tape and write a c on the other
• +N:ε means read a +N symbol on one tape and write nothing on the other
• +PL:s means read +PL and write an s

Typical Uses
• Typically, we’ll read from one tape using the first symbol on the machine transitions (just as in a simple FSA).
• And we’ll write to the second tape using the other symbols on the transitions.

Ambiguity
• Recall that in non-deterministic recognition, multiple paths through a machine may lead to an accept state.
  – It didn’t matter which path was actually traversed
• In FSTs the path to an accept state does matter, since different paths represent different parses and different outputs will result

Ambiguity
• What’s the right parse (segmentation) for unionizable?
  – union-ize-able
  – un-ion-ize-able
• Each represents a valid path through the derivational morphology machine.
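
The two segmentations of unionizable can be reproduced with a small sketch that enumerates every way of splitting a surface string into known morphemes. The inventory below is a hypothetical toy list, not a real lexicon; it assumes that -ize surfaces as "iz" before -able, and it ignores the ordering constraints a real derivational machine would encode.

```python
# Toy inventory (hypothetical): surface string -> morpheme it realizes.
# "iz" is assumed to be the surface form of -ize before -able.
MORPHEMES = {"un": "un", "union": "union", "ion": "ion", "iz": "ize", "able": "able"}

def segmentations(word, morphemes=MORPHEMES):
    """Return every way to split `word` into a sequence of known morphemes."""
    if not word:
        return [[]]  # exactly one way to segment the empty string
    results = []
    for i in range(1, len(word) + 1):
        prefix = word[:i]
        if prefix in morphemes:
            # Each segmentation of the remainder extends this prefix.
            for rest in segmentations(word[i:], morphemes):
                results.append([morphemes[prefix]] + rest)
    return results

for parse in segmentations("unionizable"):
    print("-".join(parse))
# un-ion-ize-able
# union-ize-able
```

Returning the full list corresponds to the "find all paths" strategy; taking only the first element would correspond to accepting the first output found.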
Ambiguity
• There are a number of ways to deal with this problem
  – Simply take the first output found
  – Find all the possible outputs (all paths) and return them all (without choosing)
  – Bias the search so that only one or a few likely paths are explored

The Gory Details
• Of course, it’s not as easy as “cat +N +PL” <-> “cats”
• As we saw earlier, there are geese, mice, and oxen
• But there are also a whole host of spelling/pronunciation changes that go along with inflectional changes
  – fox and foxes vs. cat and cats

Multi-Tape Machines
• To deal with these complications, we will add more tapes and use the output of one tape machine as the input to the next
• So to handle irregular spelling changes we’ll add intermediate tapes with intermediate symbols

Multi-Level Tape Machines
• We use one machine to transduce between the lexical and the intermediate level, and another to handle the spelling changes to the surface tape

Intermediate to Surface
• The “add an e” rule, as in fox^s# <--> fox^es#

Lexical to Intermediate Level

Foxes

Note
• A key feature of this machine is that it doesn’t do anything to inputs to which it doesn’t apply, meaning that they are written out unchanged to the output tape.
• Also, the transducers may be run in the other direction too (examples in lecture)
  – Caveat: small changes would need to be made, e.g., in what “other” means.
  – You are responsible for understanding them in the direction we covered them, and for the idea of how we can use them in the other direction as well

Overall Scheme

Cascades
• This is an architecture that we’ll see again
• Overall processing is divided up into distinct rewrite steps
• The output of one layer serves as the input to the next
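
The cascade idea can be sketched in the generation direction with two plain Python functions standing in for the two transducers. This is only an illustration under stated assumptions: the lexicon is a hypothetical toy, the `+SG` tag and the function names are invented here, the e-insertion rule is approximated by a regular expression that fires after s, x, or z, and none of it is a real FST implementation.

```python
import re

# Hypothetical toy lexicon: regular noun stems and irregular plurals.
REGULAR_NOUNS = {"cat", "dog", "fox"}
IRREGULAR_PLURALS = {"goose": "geese", "mouse": "mice", "ox": "oxen"}

def lexical_to_intermediate(lexical):
    """Map e.g. 'fox +N +PL' to 'fox^s#' ('^' = morpheme boundary, '#' = word end)."""
    stem, *tags = lexical.split()
    known = stem in REGULAR_NOUNS or stem in IRREGULAR_PLURALS
    if tags == ["+N", "+SG"] and known:  # '+SG' is an assumed tag name
        return stem + "#"
    if tags == ["+N", "+PL"] and stem in IRREGULAR_PLURALS:
        return IRREGULAR_PLURALS[stem] + "#"  # irregulars are listed explicitly
    if tags == ["+N", "+PL"] and stem in REGULAR_NOUNS:
        return stem + "^s#"
    raise ValueError(f"not in the toy lexicon: {lexical!r}")

def intermediate_to_surface(intermediate):
    """Apply the e-insertion spelling rule, then erase the boundary symbols."""
    # Insert 'e' between a stem ending in s, x, or z and the plural 's'.
    # Inputs the rule does not match pass through this step unchanged.
    with_e = re.sub(r"([sxz])\^s#", r"\1^es#", intermediate)
    return with_e.replace("^", "").replace("#", "")

def generate(lexical):
    """Cascade: the output of the first step is the input to the second."""
    return intermediate_to_surface(lexical_to_intermediate(lexical))

print(generate("fox +N +PL"))    # foxes
print(generate("cat +N +PL"))    # cats
print(generate("goose +N +PL"))  # geese
```

Keeping the spelling rule in its own step means the lexicon step never needs to know about e-insertion, which is the point of dividing the processing into distinct rewrite layers.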