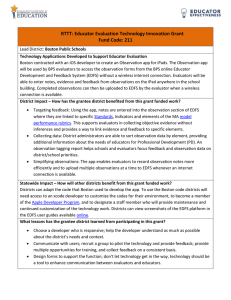

Navigator Evaluator
Penny Hawkins
ANZEA Conference 7 July 2015

An Evaluation Journey
- NZ Government
- US Philanthropy
- UK Government

Checking Evaluation Bearings
- Systematic evaluation of social programmes began in education and health sectors - way back
- 1950s & 60s increased use in government programmes with more expenditure in health, education and rural development
- Evaluation literature began to increase in 1960s
- By 1970s evaluation emerged as a specialist field and the first journal appeared in mid-70s (Evaluation Review, Sage)
- Professional associations emerged through 1980s
- Evaluation developed primarily in the public sector – in recent decades fuelled by NPM

What have we learned along the way?
- Changing tides in evaluation – fads, trends, rips and undercurrents
- Clashing views – gold standard, methods-driven approaches and pragmatic, user-focused evaluation
- Practice change happens at different times in separate fields – national public policy & international development
- Learning enclosed within different evaluation communities and sectors

What’s our direction of travel?
- As evaluation navigators…can we see the horizon?
- In the current climate of fiscal restraints and pressure to make choices about where resources are directed, there is still demand for evaluation and hence it continues to have an important role in public policy
- Evaluation practitioners come from different academic disciplines and professions…this diversity is a strength but can create conflicting views about evaluation practice
- New players entering the field – not identifying as evaluators – evaluation becoming more mainstreamed and part of management

Sailing into the wind
- Catch the wind or get caught in the doldrums – impact evaluation blues
- Can we navigate clear pathways as evaluation professionals to develop evaluation approaches fit for the future?
- Can we harness divergent views on how the field needs to develop or will the scientists and pragmatists never work well together?

The wind is behind us
- Increasing demand for faster turnaround – can no longer wait 1, 2, 3 years for evaluation findings – change is becoming more rapid and evaluative information is needed more quickly
- A shift towards monitoring? Yes and No, there’s continuing demand for robust information from evaluations to inform understanding of how change happens

Charting the course
- How can evaluation better influence government policy and decision-making?

Compass points
- Clarity about evaluation demand: who? what? when?
- Align evaluation with design and implementation
- Strong connections with decision-makers and users before, during and after
- Compelling demonstrations of use leading to positive changes – mind shifts resulting from evaluation and beneficial changes made as a result of new insights

Does practice lead theory?
1. Merging of monitoring and evaluation
2. Continuous data generation and continuous analysis for adapting policy/programme delivery
3. Virtual analysis, modelling of trends and conditions
4. Visual data displays not long narrative reports
5. Data collected and analysed by non-evaluators
6. Systems and partnerships in evaluation rather than individuals or teams
7. Increased transparency of evaluations – open data

Adaptive interventions
- Uncertainty about how to achieve results is leading to more flexible interventions
- Evaluation practice changing to respond to emerging demand
- Simple linear logic models being replaced by more complicated theories of change and systems thinking
- Need for new evaluation skills and techniques

Evaluation in a digital world
- From data poor to data rich in a few short years!

Let’s get digital, digital…
- Big data, Small data
- Mobile phones and other hand-held electronic devices
- Geo-spatial data, mapping and tracking
- Real time data e.g. participant feedback
- Social media
- New skills, new approaches, new methods – decisions made with real time data analysis
- Role of evaluation professionals (as navigators)?

Example of how digital data can be used for evaluation (Bamberger and Raftree)
Increase in poverty levels in rural areas:
- Reduced purchase of mobile phone top ups
- Withdrawal of savings from on-line accounts
- Reduced on-line orders of seeds and fertilisers (phone/internet)
- Increase in use of words like ‘sick’ and ‘hungry’ on social media
- Reduction in the number of vehicles travelling to the local market (satellite data)

Navigating the way forward
- Commissioners need to change the way ToRs are framed – less prescriptive and express interest and leave space for the evaluators to use different types of data and analysis
- Evaluators need to acquire new skills in using digital data – its retrieval and analysis
- Potential for attracting young evaluators into the profession?
- Data scientists and evaluators working together

Avoiding the rocks under the surface
- “Apophenia” – seeing meaningful patterns and connections where none exist
- Selection bias – who has access to and uses digital tools/media
- Correlation is not causation (the Starbucks leads to Evaluation problem)
- Technology-driven evaluation approaches
- Potential loss of insights from person-to-person direct interaction
- Ethical issues – privacy and unintended effects

New methods, old standards…
- The move to develop new approaches seems inevitable – 70s methods now outdated
- Moving out of our comfort zone into wide uncharted territories
- What about professional principles and standards – can be forgotten in the rush to use new methods
- Are the ones we have still fit for purpose?

Building a new boat on an old hull
- Traditional methods can be time intensive, expensive and intrusive
- Potential benefits of digital data:
  - faster analysis including real time visual data
  - cross-referencing data sets for accuracy
  - efficiency gains
- Under construction… coming towards you…

Drop anchor - value evaluation
- Can we demonstrate the value of evaluation?

Making the case for evaluation’s unique offer
- Ex-ante and ex-post assessments of potential and actual effects of evaluation
- Demonstrating return on evaluation investments
- Use of the evaluation findings: start, stop, improve policy/programme – efficient use of resources
- Effects resulting from use of the evaluation e.g. scale up of a successful initiative benefitting more people

Message in a bottle
- Evaluation journey - past and present
- Evaluator as Navigator
- Rough waters and uncharted courses
- Work to be done – and fast!
- Exploring new approaches
- Collaborating with other players
- An exciting time to be involved in evaluation!