DA encodes reward prediction error

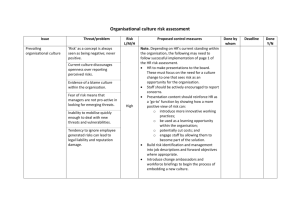

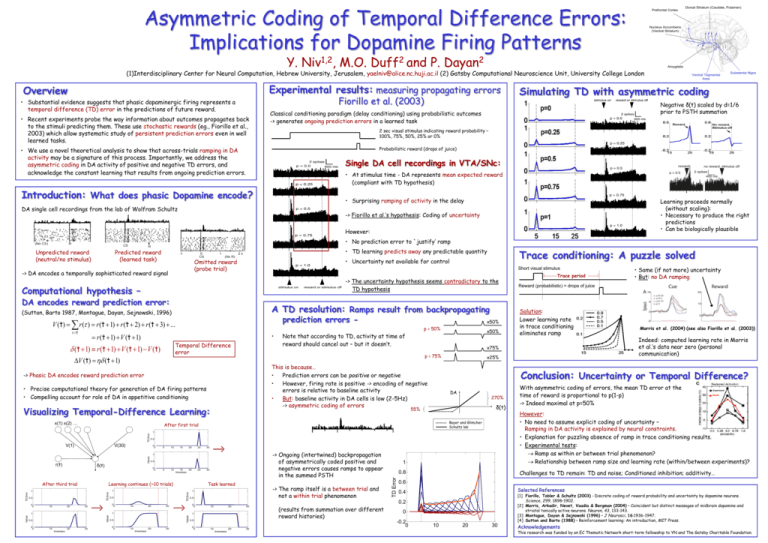

Asymmetric Coding of Temporal Difference Errors: Implications for Dopamine Firing Patterns

Y. Niv (1,2), M.O. Duff (2) and P. Dayan (2)

(1) Interdisciplinary Center for Neural Computation, Hebrew University, Jerusalem, yaelniv@alice.nc.huji.ac.il
(2) Gatsby Computational Neuroscience Unit, University College London

[Figure: brain regions labeled Prefrontal Cortex, Nucleus Accumbens (Ventral Striatum), Amygdala, Dorsal Striatum (Caudate, Putamen), Ventral Tegmental Area, Substantia Nigra]

Overview

• Substantial evidence suggests that phasic dopaminergic firing represents a temporal difference (TD) error in the predictions of future reward.
• Recent experiments probe the way information about outcomes propagates back to the stimuli predicting them. These use stochastic rewards (e.g., Fiorillo et al., 2003), which allow systematic study of persistent prediction errors even in well learned tasks.
• We use a novel theoretical analysis to show that across-trials ramping in DA activity may be a signature of this process. Importantly, we address the asymmetric coding in DA activity of positive and negative TD errors, and acknowledge the constant learning that results from ongoing prediction errors.

Introduction: What does phasic Dopamine encode?

DA single cell recordings from the lab of Wolfram Schultz:
• Unpredicted reward (neutral/no stimulus)
• Predicted reward (learned task)
• Omitted reward (probe trial)
-> DA encodes a temporally sophisticated reward signal

Computational hypothesis: DA encodes reward prediction error (Sutton, Barto 1987, Montague, Dayan, Sejnowski, 1996)

$$V(t) = \sum_{\tau > t} r(\tau) = r(t+1) + r(t+2) + r(t+3) + \dots = r(t+1) + V(t+1)$$

Temporal Difference error:

$$\delta(t+1) = r(t+1) + V(t+1) - V(t)$$

$$\Delta V(t) \propto \delta(t+1)$$

[Figure: Visualizing Temporal-Difference Learning. Inputs x(1), x(2), … with values V(1) … V(30), reward r(t), TD error δ(t) and DA. Panels plot Values and TD Error against timesteps (0 to 30): after first trial, after third trial, learning continues (~10 trials), task learned.]

• Precise computational theory for generation of DA firing patterns
• Compelling account for role of DA in appetitive conditioning
-> Phasic DA encodes reward prediction error

Experimental results: measuring propagating errors

Fiorillo et al. (2003):
• Classical conditioning paradigm (delay conditioning) using probabilistic outcomes -> generates ongoing prediction errors in a learned task
• 2 sec visual stimulus indicating reward probability: 100%, 75%, 50%, 25% or 0%
• Probabilistic reward (drops of juice)

Single DA cell recordings in VTA/SNc:
• At stimulus time, DA represents mean expected reward (compliant with TD hypothesis)
• Surprising ramping of activity in the delay
-> Fiorillo et al.'s hypothesis: Coding of uncertainty

However:
• No prediction error to 'justify' ramp
• TD learning predicts away any predictable quantity
• Uncertainty not available for control
-> The uncertainty hypothesis seems contradictory to the TD hypothesis

A TD resolution: Ramps result from backpropagating prediction errors

Note that according to TD, activity at time of reward should cancel out, but it doesn't. This is because:
• Prediction errors can be positive or negative
• However, firing rate is positive -> encoding of negative errors is relative to baseline activity
• But: baseline activity in DA cells is low (2-5 Hz) -> asymmetric coding of errors

[Figure labels: 270%, 55%; Bayer and Glimcher, Schultz lab]

[Figure labels: p = 75%, p = 50%; x75%, x25%, x50%, x50%]

Simulating TD with asymmetric coding

• Negative δ(t) scaled by d = 1/6 prior to PSTH summation

Learning proceeds normally (without scaling):
• Necessary to produce the right predictions
• Can be biologically plausible

-> Ongoing (intertwined) backpropagation of asymmetrically coded positive and negative errors causes ramps to appear in the summed PSTH
-> The ramp itself is a between trial and not a within trial phenomenon (results from summation over different reward histories)

Trace conditioning: A puzzle solved

Morris et al. (2004) (see also Fiorillo et al. (2003)):
• Short visual stimulus
• Trace period
• Reward (probabilistic) = drops of juice
• Same (if not more) uncertainty
• But: no DA ramping

Solution: Lower learning rate in trace conditioning eliminates ramp
Indeed: computed learning rate in Morris et al.'s data near zero (personal communication)

Conclusion: Uncertainty or Temporal Difference?

With asymmetric coding of errors, the mean TD error at the time of reward is proportional to p(1-p) -> indeed maximal at p = 50%

[Figure: Experiment vs. Model]

However:
• No need to assume explicit coding of uncertainty: ramping in DA activity is explained by neural constraints.
• Explanation for puzzling absence of ramp in trace conditioning results.
• Experimental tests: Ramp as within or between trial phenomenon? Relationship between ramp size and learning rate (within/between experiments)?
• Challenges to TD remain: TD and noise; conditioned inhibition; additivity…

Selected References

[1] Fiorillo, Tobler & Schultz (2003). Discrete coding of reward probability and uncertainty by dopamine neurons. Science, 299, 1898-1902.
[2] Morris, Arkadir, Nevet, Vaadia & Bergman (2004). Coincident but distinct messages of midbrain dopamine and striatal tonically active neurons. Neuron, 43, 133-143.
[3] Montague, Dayan & Sejnowski (1996). J Neurosci, 16, 1936-1947.
[4] Sutton and Barto (1988). Reinforcement learning: An introduction. MIT Press.

Acknowledgements

This research was funded by an EC Thematic Network short-term fellowship to YN and The Gatsby Charitable Foundation.
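
Worked example: the claim that the mean TD error at the time of reward is proportional to p(1-p) can be checked with a short calculation. This is a sketch under assumptions not stated on the poster: the reward has magnitude 1, the learned value just before reward time equals the reward probability p, and the value after reward is 0. The scaling factor d for negative errors is the poster's.

```latex
\begin{align*}
\delta_{\text{reward}} &= 1 - p \quad \text{with probability } p, \\
\delta_{\text{no reward}} &= -p \quad \text{with probability } 1 - p, \\
\text{symmetric coding:}\quad \mathbb{E}[\delta] &= p(1-p) - (1-p)\,p = 0, \\
\text{asymmetric coding:}\quad \mathbb{E}[\tilde{\delta}] &= p(1-p) - d\,(1-p)\,p = (1-d)\,p(1-p).
\end{align*}
```

Under these assumptions the averaged signal is zero for symmetric coding and positive for any d < 1, with its maximum at p = 1/2. For d = 1/6 and p = 1/2 it equals (5/6)(1/4) ≈ 0.21.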
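
The simulation described under "Simulating TD with asymmetric coding" can be sketched roughly as follows. This is a minimal illustration, not the authors' code: the function name `simulate_td`, the one-state-per-timestep representation, the reward magnitude of 1, the learning rate, the trial counts and the burn-in period are all assumptions. Only the scaling of negative errors by d = 1/6 before averaging, with learning driven by the unscaled error, is taken from the poster.

```python
import numpy as np


def simulate_td(p=0.5, n_steps=20, n_trials=5000, lr=0.1, d=1/6,
                burn_in=1000, seed=0):
    """TD(0) on a chain of n_steps states with a probabilistic final reward.

    Returns the across-trial mean of the raw TD error and of the
    asymmetrically scaled TD error at each timestep (after burn-in).
    All parameter names and defaults except d are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    V = np.zeros(n_steps + 1)      # V[n_steps] is the post-reward state, fixed at 0
    raw_sum = np.zeros(n_steps)
    scaled_sum = np.zeros(n_steps)

    for trial in range(n_trials):
        rewarded = rng.random() < p
        delta = np.empty(n_steps)
        for t in range(n_steps):
            # Reward of magnitude 1 arrives on the last transition only.
            r = 1.0 if (rewarded and t == n_steps - 1) else 0.0
            delta[t] = r + V[t + 1] - V[t]   # TD error for this transition
            V[t] += lr * delta[t]            # learning uses the unscaled error
        if trial >= burn_in:
            raw_sum += delta
            # Negative errors are scaled by d only when averaging (PSTH-like sum).
            scaled_sum += np.where(delta < 0, d * delta, delta)

    n = n_trials - burn_in
    return raw_sum / n, scaled_sum / n


if __name__ == "__main__":
    raw, scaled = simulate_td(p=0.5)
    print("mean raw TD error at reward time:   ", raw[-1])
    print("mean scaled TD error at reward time:", scaled[-1])
```

In this sketch the raw errors average out across trials, while the scaled average stays positive and is largest at the time of reward, because each trial contributes a different reward history. Changing `lr` or `p` is one way to explore how the size of the averaged signal depends on learning rate and reward probability; a smaller `lr` needs a longer `burn_in` for the values to settle.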