A closer Look inside Oracle ASM

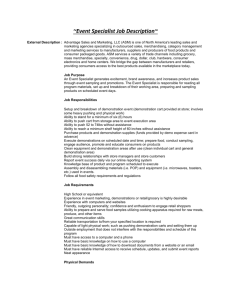

advertisement

A Closer Look inside Oracle ASM UKOUG Conference 2007 Luca Canali, CERN IT Outline Oracle ASM for DBAs Introduction and motivations ASM is not a black box Investigation of ASM internals Focus on practical methods and troubleshooting ASM and VLDB Metadata, rebalancing and performance Lessons learned from CERN’s production DB services ASM Oracle Automatic Storage Management Provides the functionality of a volume manager and filesystem for Oracle (DB) files Works with RAC Oracle 10g feature aimed at simplifying storage management Together with Oracle Managed Files and the Flash Recovery Area An implementation of S.A.M.E. methodology Goal of increasing performance and reducing cost ASM for a Clustered Architecture • Oracle architecture of redundant low-cost components Servers SAN Storage ASM Disk Groups • Example: HW = 4 disk arrays with 8 disks each • An ASM diskgroup is created using all available disks – – – – The end result is similar to a file system on RAID 1+0 ASM allows to mirror across storage arrays Oracle RDBMS processes directly access the storage RAW disk access ASM Diskgroup Mirroring Striping Failgroup1 Striping Failgroup2 Files, Extents, and Failure Groups Files and extent pointers Failgroups and ASM mirroring ASM Is not a Black Box ASM is implemented as an Oracle instance Familiar operations for the DBA Configured with SQL commands Info in V$ views Logs in udump and bdump Some ‘secret’ details hidden in X$TABLES and ‘underscore’ parameters Selected V$ Views and X$ Tables View Name X$ Table Description V$ASM_DISKGROUP X$KFGRP performs disk discovery and lists diskgroups V$ASM_DISK X$KFDSK, X$KFKID performs disk discovery, lists disks and their usage metrics V$ASM_FILE X$KFFIL lists ASM files, including metadata V$ASM_ALIAS X$KFALS lists ASM aliases, files and directories V$ASM_TEMPLATE X$KFTMTA ASM templates and their properties V$ASM_CLIENT X$KFNCL lists DB instances connected to ASM V$ASM_OPERATION X$KFGMG lists current rebalancing operations N.A. X$KFKLIB available libraries, includes asmlib N.A. X$KFDPARTNER lists disk-to-partner relationships N.A. X$KFFXP extent map table for all ASM files N.A. X$KFDAT allocation table for all ASM disks ASM Parameters Notable ASM instance parameters: *.asm_diskgroups='TEST1_DATADG1','TEST1_RECODG1' *.asm_diskstring='/dev/mpath/itstor*p*' *.asm_power_limit=5 *.shared_pool_size=70M *.db_cache_size=50M *.large_pool_size=50M *.processes=100 More ASM Parameters Underscore parameters Several undocumented parameters Typically don’t need tuning Exception: _asm_ausize and _asm_stripesize May need tuning for VLDB in 10g New in 11g, diskgroup attributes V$ASM_ATTRIBUTE, most notable disk_repair_time au_size X$KFENV shows ‘underscore’ attributes ASM Storage Internals ASM Disks are divided in Allocation Units (AU) Default size 1 MB (_asm_ausize) Tunable diskgroup attribute in 11g ASM files are built as a series of extents Extents are mapped to AUs using a file extent map When using ‘normal redundancy’, 2 mirrored extents are allocated, each on a different failgroup RDBMS read operations access only the primary extent of a mirrored couple (unless there is an IO error) In 10g the ASM extent size = AU size ASM Metadata Walkthrough Three examples follow of how to read data directly from ASM. Motivations: Build confidence in the technology, i.e. ‘get a feeling’ of how ASM works It may turn out useful one day to troubleshoot a production issue. Example 1: Direct File Access 1/2 Goal: Reading ASM files with OS tools, using metadata information from X$ tables 1. Example: find the 2 mirrored extents of the RDBMS spfile 2. sys@+ASM1> select GROUP_KFFXP Group#, DISK_KFFXP Disk#, AU_KFFXP AU#, XNUM_KFFXP Extent# from X$KFFXP where number_kffxp=(select file_number from v$asm_alias where name='spfiletest1.ora'); GROUP# DISK# AU# EXTENT# ---------- ---------- ---------- ---------1 16 17528 0 1 4 14838 0 Example 1: Direct File Access 2/2 3. Find the disk path sys@+ASM1> select disk_number,path from v$asm_disk where GROUP_NUMBER=1 and disk_number in (16,4); DISK_NUMBER PATH ----------- -----------------------------------4 /dev/mpath/itstor417_1p1 16 /dev/mpath/itstor419_6p1 Read data from disk using ‘dd’ dd if=/dev/mpath/itstor419_6p1 bs=1024k count=1 skip=17528 |strings 4. X$KFFXP Column Name Description NUMBER_KFFXP ASM file number. Join with v$asm_file and v$asm_alias COMPOUND_KFFXP File identifier. Join with compound_index in v$asm_file INCARN_KFFXP File incarnation id. Join with incarnation in v$asm_file XNUM_KFFXP ASM file extent number (mirrored extent pairs have the same extent value) PXN_KFFXP Progressive file extent number GROUP_KFFXP ASM disk group number. Join with v$asm_disk and v$asm_diskgroup DISK_KFFXP ASM disk number. Join with v$asm_disk AU_KFFXP Relative position of the allocation unit from the beginning of the disk. LXN_KFFXP 0->primary extent,1->mirror extent, 2->2nd mirror copy (high redundancy and metadata) Example 2: A Different Way A different metadata table to reach the same goal of reading ASM files directly from OS: sys@+ASM1> select GROUP_KFDAT Group# ,NUMBER_KFDAT Disk#, AUNUM_KFDAT AU# from X$KFDAT where fnum_kfdat=(select file_number from v$asm_alias where name='spfiletest1.ora'); GROUP# DISK# AU# ---------- ---------- ---------1 4 14838 1 16 17528 X$KFDAT Column Name (subset) Description GROUP_KFDAT Diskgroup number, join with v$asm_diskgroup NUMBER_KFDAT Disk number, join with v$asm_disk COMPOUND_KFDAT Disk compund_index, join with v$asm_disk AUNUM_KFDAT Disk allocation unit (relative position from the beginning of the disk), join with x$kffxp.au_kffxp V_KFDAT Flag: V=this Allocation Unit is used; F=AU is free FNUM_KFDAT File number, join with v$asm_file XNUM_KFDAT Progressive file extent number join with x$kffxp.pxn_kffxp Example 3: Yet Another Way Using the internal package dbms_diskgroup declare fileType varchar2(50); fileName varchar2(50); fileSz number; blkSz number; hdl number; plkSz number; data_buf raw(4096); begin fileName := '+TEST1_DATADG1/TEST1/spfiletest1.ora'; dbms_diskgroup.getfileattr(fileName,fileType,fileSz, blkSz); dbms_diskgroup.open(fileName,'r',fileType,blkSz, hdl,plkSz, fileSz); dbms_diskgroup.read(hdl,1,blkSz,data_buf); dbms_output.put_line(data_buf); end; / DBMS_DISKGROUP Can be used to read/write ASM files directly It’s an Oracle internal package Does not require a RDBMS instance 11g’s asmcmd cp command uses dbms_diskgroup Procedure Name Parameters dbms_diskgroup.open (:fileName, :openMode, :fileType, :blkSz, :hdl,:plkSz, :fileSz) dbms_diskgroup.read (:hdl, :offset, :blkSz, :data_buf) dbms_diskgroup.createfile (:fileName, :fileType, :blkSz, :fileSz, :hdl, :plkSz, :fileGenName) dbms_diskgroup.close (:hdl) dbms_diskgroup.commitfile (:handle) dbms_diskgroup.resizefile (:handle,:fsz) dbms_diskgroup.remap (:gnum, :fnum, :virt_extent_num) dbms_diskgroup.getfileattr (:fileName, :fileType, :fileSz, :blkSz) File Transfer Between OS and ASM The supported tools (10g) RMAN DBMS_FILE_TRANSFER FTP (XDB) WebDAV (XDB) They all require a RDBMS instance In 11g, all the above plus asmcmd cp command Works directly with the ASM instance Strace and ASM 1/3 Goal: understand strace output when using ASM storage Example: read64(15,"#33\0@\"..., 8192, 473128960)=8192 This is a read operation of 8KB from FD 15 at offset 473128960 What is the segment name, type, file# and block# ? Strace and ASM 2/3 From /proc/<pid>/fd I find that FD=15 is 1. /dev/mpath/itstor420_1p1 This is disk 20 of D.G.=1 (from v$asm_disk) From x$kffxp I find the ASM file# and extent#: 2. 3. • Note: offset 473128960 = 451 MB + 27 *8KB sys@+ASM1>select number_kffxp, xnum_kffxp from x$kffxp where group_kffxp=1 and disk_kffxp=20 and au_kffxp=451; NUMBER_KFFXP XNUM_KFFXP ------------ ---------268 17 Strace and ASM 3/3 4. 5. 6. From v$asm_alias I find the file alias for file 268: USERS.268.612033477 From v$datafile view I find the RDBMS file#: 9 From dba extents finally find the owner and segment name relative to the original IO operation: sys@TEST1>select owner,segment_name,segment_type from dba_extents where FILE_ID=9 and 27+17*1024*1024 between block_id and block_id+blocks; OWNER SEGMENT_NAME SEGMENT_TYPE ----- ------------ -----------SCOTT EMP TABLE Investigation of Fine Striping An application: finding the layout of fine-striped files Explored using strace of an oracle session executing ‘alter B7 … … A6 … … Fine striping size = 128KB (1MB/8) B6 … … A5 … … B5 … … A4 … … B4 … … A3 … … B3 … … A2 … … A1 B2 … … … … … … A0 B1 … … B0 … … … … AU = 1MB system dump logfile ..’ Result: round robin distribution over 8 x 1MB extents A7 Metadata Files ASM diskgroups contain ‘hidden files’ Not listed in V$ASM_FILE (file# <256) Details are available in X$KFFIL In addition the first 2 AUs of each disk are marked as file#=0 in X$KFDAT Example (10g): GROUP# FILE# FILESIZE_AFTER_MIRR RAW_FILE_SIZE ---------- ---------- ------------------- ------------1 1 2097152 6291456 1 2 1048576 3145728 1 3 264241152 795869184 1 4 1392640 6291456 1 5 1048576 3145728 1 6 1048576 3145728 ASM Metadata 1/2 File#0, AU=0: disk header (disk name, etc), Allocation Table (AT) and Free Space Table (FST) File#0, AU=1: Partner Status Table (PST) File#1: File Directory (files and their extent pointers) File#2: Disk Directory File#3: Active Change Directory (ACD) The ACD is analogous to a redo log, where changes to the metadata are logged. Size=42MB * number of instances Source: Oracle Automatic Storage Management, Oracle Press Nov 2007, N. Vengurlekar, M. Vallath, R.Long ASM Metadata 2/2 File#4: Continuing Operation Directory (COD). The COD is analogous to an undo tablespace. It maintains the state of active ASM operations such as disk or datafile drop/add. The COD log record is either committed or rolled back based on the success of the operation. File#5: Template directory File#6: Alias directory 11g, File#9: Attribute Directory 11g, File#12: Staleness registry, created when needed to track offline disks ASM Rebalancing Rebalancing is performed (and mandatory) after space management operations Goal: balanced space allocation across disks Not based on performance or utilization ASM spreads every file across all disks in a diskgroup ASM instances are in charge of rebalancing Extent pointers changes are communicated to the RDBMS RDBMS’ ASMB process keeps an open connection to ASM This can be observed by running strace against ASMB In RAC, extra messages are passed between the cluster ASM instances LMD0 of the ASM instances are very active during rebalance ASM Rebalancing and VLDB Performance of Rebalancing is important for VLDB An ASM instance can use parallel slaves RBAL coordinates the rebalancing operations ARBx processes pick up ‘chunks’ of work. By default they log their activity in udump Does it scale? In 10g serialization wait events can limit scalability Even at maximum speed rebalancing is not always I/O bound ASM Rebalancing Performance Tracing ASM rebalancing operations 10046 trace of the +arbx processes Oradebug setospid … oradebug event 10046 trace name context forever, level 12 Process log files (in bdump) with orasrp (tkprof will not work) Main wait events from my tests with RAC (6 nodes) DFS lock handle Waiting for CI level 5 (cross instance lock) Buffer busy wait ‘unaccounted for’ enq: AD - allocate AU enq: AD - deallocate AU log write(even) log write(odd) ASM Single Instance Rebalancing Single instance rebalance Faster in RAC if you can rebalance with only 1 node up (I have observed: 20% to 100% speed improvement) Buffer busy wait can be the main event It seems to depend on the number of files in the diskgroup. Diskgroups with a small number of (large) files have more contention (+arbx processes operate concurrently on the same file) Only seen in tests with 10g 11g has improvements regarding rebalancing contention Rebalancing, an Example ASM Rebalancing Performance (RAC) Rate, MB/min 7000 6000 Oracle 11g 5000 Oracle 10g 4000 3000 2000 1000 0 0 2 4 6 8 10 Diskgroup Rebalance Parallelism Data: D.Wojcik, CERN IT 12 Rebalancing Workload When ASM mirroring is used (e.g. with normal redundancy) Rebalancing operations can move more data than expected Example: 5 TB (allocated): ~100 disks, 200 GB each A disk is replaced (diskgroup rebalance) The total IO workload is 1.6 TB (8x the disk size!) How to see this: query v$asm_operation, the column EST_WORK keeps growing during rebalance The issue: excessive repartnering ASM Disk Partners ASM diskgroup with normal redundancy Two copies of each extents are written to different ‘failgroups’ Two ASM disks are partners: When they have at least one extent set in common (they are the 2 sides of a mirror for some data) Each ASM disk has a limited number of partners Typically 10 disk partners: X$KFDPARTNER Helps to reduce the risk associated with 2 simultaneous disk failures Free and Usable Space When ‘ASM mirroring’ is used not all the free space should be occupied V$ASM_DISKGROUP.USABLE_FILE_MB: Amount of free space that can be safely utilized taking mirroring into account, and yet be able to restore redundancy after a disk failure it’s calculated for the case of the worst scenario, anyway it is a best practice not to have it go negative (it can) This can be a problem when deploying a small number of large LUNs and/or failgroups Fast Mirror Resync ASM 10g with normal redundancy does not allow to offline part of the storage A transient error in a storage array can cause several hours of rebalancing to drop and add disks It is a limiting factor for scheduled maintenances 11g has new feature ‘fast mirror resync’ Redundant storage can be put offline for maintenance Changes are accumulated in the staleness registry (file#12) Changes are applied when the storage is back online Read Performance, Random I/O IOPS measured with SQL (synthetic test) Small Random I/O (8KB block, 32GB probe table) 64 SATA HDs (4 arrays, 1 instance) 10000 9000 7000 8675 IOPS 6000 5000 ~130 IOPS per disk 4000 3000 Destroking, only the external part of the disks is used 2000 1000 Workload, number of oracle sessions 0 35 0 33 0 31 0 29 0 27 0 25 0 23 0 21 0 19 0 17 0 15 0 13 0 11 90 70 50 30 10 0 2 I/O small read per sec (IOPS) 8000 Read Performance, Sequential I/O Sequential I/O Throughput, 80GB probe table 64 SATA HDs (4 arrays and 4 RAC nodes) 800 700 600 MB/sec 500 400 Limited by HBAs -> 4 x 2 Gb 300 200 (measured with parallel query) 100 0 1 2 4 8 10 12 14 16 18 20 22 24 26 28 30 32 34 36 38 40 Workload, number of parallel query slaves Implementation Details Multipathing Linux Device Mapper (2.6 kernel) Block devices RHEL4 and 10gR2 allow to skip raw devices mapping External half of the disk for data disk groups JBOD config No HW RAID ASM used to mirror across disk arrays HW: Storage arrays (Infortrend): FC controller, SATA disks FC (Qlogic): 4Gb switch and HBAs (2Gb in older HW) Servers are 2x CPUs, 4GB RAM, 10.2.0.3 on RHEL4, RAC of 4 to 8 nodes Q&A Q&A Links: http://cern.ch/phydb http://twiki.cern.ch/twiki/bin/view/PSSGroup/ASM_Internals http://www.cern.ch/canali