NCAR_Nienhouse_final - Pacific Research Platform


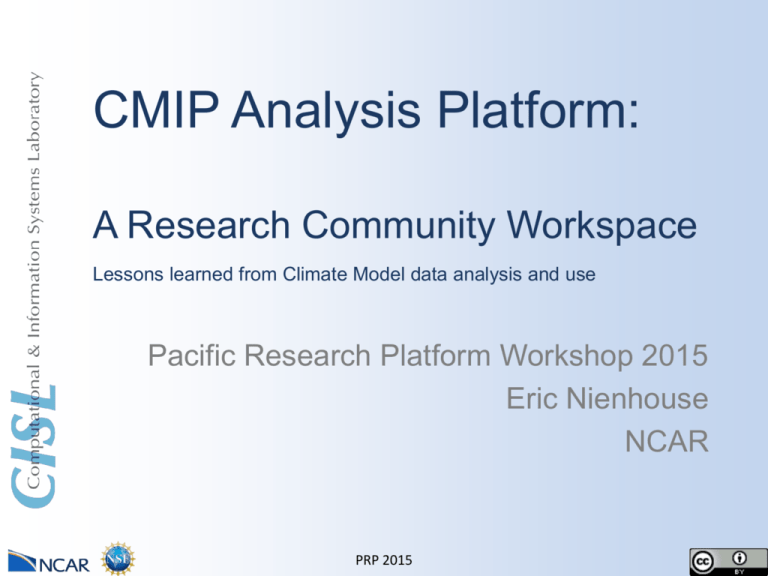

CMIP Analysis Platform: A Research Community Workspace
Lessons learned from climate model data analysis and use
Pacific Research Platform Workshop 2015
Eric Nienhouse, NCAR
PRP 2015

Sample of NCAR Data Archives
We build data archives and curate data for diverse communities: 20K annual users, 300 data providers, 20K collections.
• ACADIS (Advanced Collaborative Arctic Data Information Service): NSF Arctic projects; self-publishing tools; many disciplines; long-term preservation
• ESGF & ESG-NCAR (Climate Data at NCAR): climate models (CESM); regional climate models (NARCCAP); large data volume; heavily accessed
• RDA (Research Data Archive): actively curated reference data; reanalysis and observational products; subset and re-format services; ECMWF, ICOADS, JRA-55

CMIP5: Coupled Model Intercomparison Project Phase 5
Research requires use of highly distributed data by 1000+ users worldwide.
Goal: study simulated future climate from a suite of global models.
CMIP5 Data Organization and Distribution
• 30 contributing modeling centers worldwide
• 2 PB total, kept in situ at the modeling centers
• Data accessed via the ESGF software stack
Scientific User Challenges
• Research requires transfer of tens to hundreds of TB from many locations
• Many organizations lack the resources to transfer, store, and analyze the data
• Preparing data for analysis was a significant barrier to scientific productivity
Successes
• Distributed search index for the entire federation
• Standard file naming and "Data Reference Syntax" (DRS) support discovery
• Common, self-describing data format (NetCDF + CF conventions)

CMIP Analysis Platform
A community resource accelerating large-scale climate research
• Shared resource funded by NSF for 500+ users, hosted at NCAR
• Dedicated storage, analysis clusters, and workflows for CMIP research
• Allows scientists to do science (rather than data preparation and management)
• Offloads IT from science projects to dedicated data curators and infrastructure
• Suite of human and software services layered on the infrastructure
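The CMIP5 slide credits standard file naming and the Data Reference Syntax with supporting discovery: a file name alone identifies the variable, model, experiment, and ensemble member. The sketch below splits such a name into its fields. It assumes the CMIP5 template `<variable>_<mip_table>_<model>_<experiment>_<ensemble>[_<start>-<end>].nc` and does not handle the optional geographical-subset suffix; `parse_cmip5_filename` and `DrsName` are hypothetical names, not part of Synda or the ESGF software stack.

```python
"""Sketch: split a CMIP5 file name into its Data Reference Syntax (DRS) fields.

Assumes the template <variable>_<mip_table>_<model>_<experiment>_<ensemble>
optionally followed by _<start>-<end>, with a .nc extension. The optional
geographical-subset suffix is NOT handled. Names here are hypothetical.
"""
import re
from typing import NamedTuple, Optional

# Ensemble member such as r1i1p1 (realization, initialization, physics).
_ENSEMBLE = re.compile(r"^r\d+i\d+p\d+$")
# Optional time range such as 185001-200512.
_TIME_RANGE = re.compile(r"^(\d{4,14})-(\d{4,14})$")


class DrsName(NamedTuple):
    variable: str
    mip_table: str
    model: str
    experiment: str
    ensemble: str
    start: Optional[str]
    end: Optional[str]


def parse_cmip5_filename(name: str) -> DrsName:
    """Return the DRS fields of a CMIP5 NetCDF file name, or raise ValueError."""
    if not name.endswith(".nc"):
        raise ValueError(f"not a NetCDF file name: {name!r}")
    parts = name[: -len(".nc")].split("_")
    start = end = None
    # The trailing time range is absent for fixed fields (MIP table 'fx').
    if len(parts) == 6:
        match = _TIME_RANGE.match(parts[5])
        if match is None:
            raise ValueError(f"bad time range in {name!r}")
        start, end = match.groups()
        parts = parts[:5]
    if len(parts) != 5:
        raise ValueError(f"unexpected number of DRS fields in {name!r}")
    variable, mip_table, model, experiment, ensemble = parts
    if not _ENSEMBLE.match(ensemble):
        raise ValueError(f"bad ensemble member in {name!r}")
    return DrsName(variable, mip_table, model, experiment, ensemble, start, end)


if __name__ == "__main__":
    print(parse_cmip5_filename("tas_Amon_CCSM4_historical_r1i1p1_185001-200512.nc"))
```

Because every field comes from the name, a sketch like this can group or filter millions of files (for example, all `tas` files from CCSM4 historical runs) without opening any NetCDF headers; the self-describing NetCDF + CF metadata remains the authoritative source when the two disagree.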
Pilot service with a subset of the 1.8 PB of CMIP5 products in ESGF, early 2016.

Figure: Comparison of four decadal averages of temperature anomalies and ice area fraction. Data from the ensemble average of the CCSM4 monthly surface temperature anomaly (relative to the 1850-1899 average for each month) from Jan 1850 to Dec 2100, from CMIP5 historical + RCP8.5 scenario runs. Data provided by Gary Strand. Visualization by Tim Scheitlin and Mary Haley.

CMIP AP: Yellowstone Environment
Figure: diagram of the NCAR environment hosting the CMIP Analysis Platform.
• Yellowstone: HPC resource, 1.5 PFLOPS peak
• GLADE: central disk resource, 16.4 PB
• Geyser and Caldera: data analysis and visualization (DAV) clusters
• High-bandwidth, low-latency HPC and I/O networks: FDR InfiniBand and 10Gb Ethernet
• NCAR HPSS archive: 160 PB capacity, ~11 PB/yr growth
• 1Gb/10Gb Ethernet (40Gb+ future)
• Connected services and sites: science gateways (RDA, ESG), data transfer services, remote visualization, partner sites, XSEDE sites

CMIP Analysis Platform
Goal
• Enable collaborative climate science by reducing IT barriers
Challenge
• Data transfer to NCAR from up to 30 data nodes
• CMIP6 data volume: ~16x increase over CMIP5 (~30 PB)
Tools/Environment
• Synda download tool, designed for ESGF/DRS, for data synchronization
• HTTP as the primary transfer protocol, with PKI-based authentication and checksums
• Transfer rates varying from hundreds of Kb/s up to 1 Gb/s
• Ad hoc transfers initiated by community requests
Wishes / Improvements
• Intelligent network with data reduction capabilities
• "Invisible" security model
• Rich data use metrics for application and workflow development
• Data compression that retains scientific content

Thank you!
ejn@ucar.edu
Funding: NSF

Challenges of obtaining data for analysis
Big data challenges include increasing the efficiency of obtaining data and information.
Figure: diagram linking Published Data with the stages Discover, Evaluate, Access, and Analysis.
Discovery is improving
• Metadata federation
• Search engines
• Schema.org
Evaluation and access are challenging
• Download required to evaluate
• Little guidance in workflow
• Human experts fill in gaps

Yellowstone environment @ NCAR
• Yellowstone (high-performance computing)
  – IBM iDataPlex cluster with Intel Xeon E5-2670† processors
  – 1.5 PetaFLOPS: 4,536 nodes, 72,576 cores
  – 145 TB total memory
  – Mellanox FDR InfiniBand full fat-tree interconnect
• GLADE (centralized file systems and data storage)
  – 16.4 PB of GPFS file systems, 90 GB/s aggregate I/O bandwidth
• Geyser & Caldera (data analysis and visualization)
  – Large-memory system with Intel Westmere-EX processors: 16 nodes, 640 Westmere-EX cores, 1 TB memory per node
  – GPU computation/vis system with Intel Xeon E5-2670 processors: 16 nodes, 256 E5-2670 cores, 64 GB/node, 32 NVIDIA GPUs
• Network and data transfer
  – Connected to XSEDEnet and the Front Range GigaPOP
  – 2x 20-Gbps WAN routes into the NCAR-Wyoming Supercomputing Center
  – Globus Transfer endpoints into GLADE (ncar# and xsede#glade)
† Sandy Bridge EP
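The slides quote tens to hundreds of TB per research project, transfer rates from hundreds of Kb/s up to 1 Gb/s, a projected ~30 PB for CMIP6, and a planned 40Gb+ link. A back-of-envelope sketch turns these figures into wall-clock days. It assumes decimal units (1 TB = 10^12 bytes, 1 Gb/s = 10^9 bit/s) and a constant sustained rate with no protocol overhead, retries, or parallel streams; `transfer_days` is a hypothetical helper, and the 500 Kb/s case is an illustrative pick from the quoted range.

```python
"""Back-of-envelope transfer times for the data volumes quoted in the slides.

Assumptions: decimal units, a constant sustained rate, and no protocol
overhead, retries, or parallel streams. transfer_days is hypothetical.
"""

SECONDS_PER_DAY = 86_400
TB = 10**12  # bytes
PB = 10**15  # bytes


def transfer_days(volume_bytes: float, rate_bits_per_s: float) -> float:
    """Days needed to move volume_bytes at a sustained rate_bits_per_s."""
    if rate_bits_per_s <= 0:
        raise ValueError("rate must be positive")
    # 8 bits per byte; divide by the rate for seconds, then convert to days.
    return volume_bytes * 8 / rate_bits_per_s / SECONDS_PER_DAY


if __name__ == "__main__":
    cases = [
        ("100 TB at 1 Gb/s", 100 * TB, 1e9),
        ("100 TB at 500 Kb/s", 100 * TB, 500e3),
        ("30 PB (CMIP6 estimate) at 1 Gb/s", 30 * PB, 1e9),
        ("30 PB at 40 Gb/s (planned 40Gb+ link)", 30 * PB, 40e9),
    ]
    for label, volume, rate in cases:
        print(f"{label}: {transfer_days(volume, rate):,.1f} days")
```

Under these assumptions, 100 TB at a sustained 1 Gb/s takes about 9.3 days, while the same volume at 500 Kb/s takes more than 50 years; the ~30 PB CMIP6 estimate needs roughly 2,778 days at 1 Gb/s and about 69 days at 40 Gb/s.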