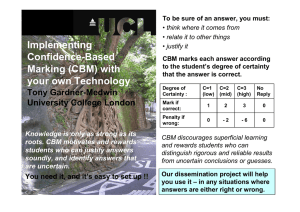

Confidence-Based Marking scheme

Abstract: OXFORD Shock of the Old, 7/4/05

Tony Gardner-Medwin, Dept. Physiology, UCL, London WC1E 6BT

a.gardner-medwin@ucl.ac.uk

Why is your institution (probably) not using confidence-based marking (CBM) in place of right-wrong marking for objective tests? Decades of research and a decade of large-scale implementation at UCL have shown it to be theoretically sound, pedagogically beneficial, popular with students and easy to implement with both on-line and optical mark reader technologies. If the answer is ignorance, then you should look at our FDTL-funded dissemination website (www.ucl.ac.uk/lapt). Maybe the answer is inertia and the imagined constraints of an institutional VLE. But if you think that CBM must somehow be subjective, arbitrary, irrelevant to assessment of knowledge and understanding, discipline-specific, time-wasting, requiring new types of assessment material, or favouring particular personalities, then almost certainly you need to think or read more deeply about it. Within instructional material and formative or summative tests it helps reduce some of the very sensible regrets that we all have when we are forced to replace part of our paper-based assessments and small group teaching with automated tests and material. If you worry that your students simply repeat what they have learned, whether in essays or computer tests, without understanding why it is true, then CBM can help you discriminate between well-justified knowledge, tentative hunches, lucky guesses, simple ignorance and seriously confident errors. The presentation will explain what CBM is all about, give you experience based on questions about the Highway Code, seek audience feedback about what you perceive as potential + and - features, and cover evidence about many of the issues raised above. The take-away message is that you fail in your duty to your students if you treat lucky guesses as equivalent to knowledge, or serious misconceptions as no worse than acknowledged ignorance.
Your assessments should be something in which you have confidence.

Gaining Confidence in Confidence-Based Marking

Tony Gardner-Medwin, Physiology, UCL

www.ucl.ac.uk/lapt

What is CBM ? …. Why ? …. When ?
What’s it like to experience CBM?
What are possible pros and cons?
….. DISCUSSION …..
Issues, Data, implementation

Why is your institution (probably) not using confidence-based marking (CBM) in place of right-wrong marking for objective tests?

Oxford 4/05

What is CBM ?

The LAPT (UCL) Confidence-Based Marking scheme
… applied to each answer that will be marked right/wrong … e.g. T/F, MCQ, EMQs, Numerical, Simple text

| Confidence Level | Score if Correct | Score if Incorrect | Best marks obtained if: Probability correct | Odds |
|---|---|---|---|---|
| 1 | 1 | 0 | < 67% | < 2:1 |
| 2 | 2 | -2 | 67-80% | > 2:1 |
| 3 | 3 | -6 | > 80% | > 4:1 |

Why CBM ?

(1) Knowledge is degree of belief, or confidence:

knowledge, uncertainty, ignorance, misconception, delusion
(decreasing confidence in what is true, increasing confidence in what is false)

(2) Students must be able to justify knowledge – relate it to other things, check it and argue with rigour. Rote learning is the bane of education.

Knowledge is justified true belief.
In teaching we need to emphasise justification.
In assessment we need to measure degrees of belief.

With CBM you must think about justification

You gain: EITHER if you find justifications for high confidence OR if you see justifications for reservation.

[Figure: mark expected on average (-6 to 3) against confidence (estimated probability correct, 0% to 100%), with lines for C=1, C=2, C=3 and no reply; 67% and 80% are marked.]

When & How do we use CBM ?
……… potentially whenever answers are marked right/wrong

• Student study: self-assessment, revision & learning materials … stand-alone (PC) or on the web, at home or in College
• Formative tests (once-off or repeat-till-pass, with randomised Qs or values) e.g. End of Module tests, Maths Practice/Assessment … access portal e.g. via WebCT, and grades returned e.g.
to WebCT
• Open access for other universities, schools, etc. … BMAT practice & tips, GCSE maths, Biol AL, Physics, etc.
• Exams – summative assessment (at UCL) … T/F or MCQ, EMQ etc. using Optical Mark Reader … OMR (Speedwell) cards & processing available through UCL

The UCL Confidence-Based Marking scheme
… applied to each answer that will be marked right/wrong … e.g. T/F, MCQ, EMQs, Numerical, Simple text

| Confidence Level | Score if Correct | Score if Incorrect | Best marks obtained if: Probability correct | Odds |
|---|---|---|---|---|
| 1 | 1 | 0 | < 67% | < 2:1 |
| 2 | 2 | -2 | 67-80% | > 2:1 |
| 3 | 3 | -6 | > 80% | > 4:1 |

What seem possible benefits (+) or drawbacks (-) to such a scheme? (a) In formative work (b) in exams ?

Personality, gender issues: real or imagined?

Does confidence-based marking favour certain personality types?

• Both underconfidence and overconfidence are undesirable
• ‘Correct’ calibration is well defined, desirable and achievable
• No significant gender differences are evident (at least after practice)
• Students with confidence problems: this is the way to deal with it!
• In exams, we can adjust to compensate for poor calibration, so students still benefit from distinguishing more/less reliable answers

How well do students discriminate confidence?

[Figure: % correct (50% to 100%) at C=1, C=2 and C=3, for F and M students, (i-c) and (ex). Mean +/- 95% confidence limits, 331 students.]

[Figure: confidence score (0 to 100) against simple score (chance=0%, 0 to 100), year 1 by ethnicity.]

Reliability and Validity of Confidence-based exam marks

Exam marks are determined by: (1) the student’s knowledge and skills in the subject area; (2) the level of difficulty of the questions; (3)
chance factors - how questions relate to details of the student’s knowledge and how uncertainties resolve (luck)

(1) = “signal” (its measurement is the object of the exam)
(3) = “noise” (random factors obscuring the “signal”)

Confidence-based marks improve the “signal-to-noise ratio”

A simple & convincing test of this is to compare marks on one set of questions with marks for the same student on a different set (e.g. odd & even Q nos.). High correlation means the data are measuring something about the student, not just “noise”.

[Figure, panels B and C. Marks scaled: 0%=chance, 100%=max. B: set 2 (simple) against set 1 (simple), R² = 0.735. C: set 2 (confidence) against set 1 (confidence), R² = 0.814.]

The correlation, across students, between scores on one set of questions and another is higher for CBM than for simple scores.

But perhaps they are just measuring ability to handle confidence ? No. CBM scores are better than simple scores at predicting even the simple scores (ignoring confidence) on a different set of questions. This can only be because CBM is statistically a more efficient measure of knowledge.

[Figure, panel D: set 2 (simple) against set 1 (conf 0.6), R² = 0.776.]

[Figure, for whole class, bottom 1/3 and top 1/3: Coef. of Determination (r²) between odd & even numbered Qs in 6 exams (m±sem), for conventional and adj. conf-based scores and their difference (* P<0.05, ** P<0.01); and relative efficiency (adjusted conf-based scores / conventional), m±sem, differences all P<0.01.]

Improvements in reliability and efficiency, comparing CBM to conventional scores, in 6 medical student exams (each 250-300 T/F Qs, >300 students).
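
The odd/even comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not the analysis used for the slides: the function names and the shape of the `responses` data are assumptions made here, and the scaling of marks (0% = chance, 100% = max) and any adjustment of confidence-based scores mentioned in the figures are left out.

```python
from math import sqrt

# Marks from the scheme in the slides: level -> (mark if correct, mark if incorrect)
CBM_MARKS = {1: (1, 0), 2: (2, -2), 3: (3, -6)}


def cbm_mark(correct, level):
    """Confidence-based mark for one answer."""
    right, wrong = CBM_MARKS[level]
    return right if correct else wrong


def simple_mark(correct, level):
    """Conventional right/wrong mark; the confidence level is ignored."""
    return 1 if correct else 0


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def split_half_r2(responses, mark):
    """r^2 across students between totals on odd- and even-numbered questions.

    responses: one list per student of (correct, level) pairs in question order
               (a hypothetical data layout chosen for this sketch).
    mark:      a marking function such as cbm_mark or simple_mark.
    """
    odd_totals, even_totals = [], []
    for answers in responses:
        marks = [mark(correct, level) for correct, level in answers]
        odd_totals.append(sum(marks[0::2]))   # Q1, Q3, Q5, ...
        even_totals.append(sum(marks[1::2]))  # Q2, Q4, Q6, ...
    return pearson_r(odd_totals, even_totals) ** 2
```

Calling `split_half_r2(responses, simple_mark)` and `split_half_r2(responses, cbm_mark)` on the same set of responses gives the two coefficients of determination being compared. The comparison is only meaningful with many students and many questions, as in the exams reported here.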
Cronbach Alpha (standard psychometric measure of ‘reliability’)

On six exams (mean ± SEM, n=6):
α = 0.925 ± 0.007 using CBM
α = 0.873 ± 0.012 using number of items correct

• The improvement (P<0.001, paired t-test) corresponds to a reduction of the random element in the variance of exam scores from 14.6% of the student variance to 8.1%.

Arriving at a conclusion through probabilistic inference

[Diagram labels: Nuggets of knowledge; Networks of Understanding; EVIDENCE; Inference; Confidence (Degree of Belief); Choice.]

Confidence-based marking places greater demands on justification, stimulating understanding

To understand = to link correctly the facts that bear on an issue.

We fail if we mark a lucky guess as if it were knowledge.
We fail if we mark delusion as no worse than ignorance.

www.ucl.ac.uk/lapt
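
Two sets of numbers quoted in these slides can be checked with a little arithmetic, sketched below in Python. The first part takes the marks from the scheme (1/0, 2/-2, 3/-6) and finds the probabilities at which the expected mark is the same for adjacent confidence levels; these come out at 2/3 and 4/5, matching the 67% and 80% boundaries. The second part assumes that the "random element" quoted alongside Cronbach's α is the ratio of error variance to student variance, (1 − α)/α. That reading is an assumption made here, not something the slides state. All function names are illustrative.

```python
from fractions import Fraction

# Marks from the scheme in the slides: level -> (mark if correct, mark if incorrect)
CBM_MARKS = {1: (1, 0), 2: (2, -2), 3: (3, -6)}


def expected_mark(level, p):
    """Average mark at a confidence level when the answer is correct with probability p."""
    right, wrong = CBM_MARKS[level]
    return p * right + (1 - p) * wrong


def best_level(p):
    """Confidence level with the highest expected mark (the lower level wins ties)."""
    return max(sorted(CBM_MARKS), key=lambda level: expected_mark(level, p))


def break_even(lower, upper):
    """Probability at which two confidence levels give the same expected mark.

    Solving p*r1 + (1-p)*w1 = p*r2 + (1-p)*w2 for p gives
    p = (w1 - w2) / ((w1 - w2) + (r2 - r1)).
    """
    r1, w1 = CBM_MARKS[lower]
    r2, w2 = CBM_MARKS[upper]
    return Fraction(w1 - w2, (w1 - w2) + (r2 - r1))


def noise_to_signal(alpha):
    """Assumed reading of the 'random element': error variance / student variance."""
    return (1 - alpha) / alpha


print(break_even(1, 2), break_even(2, 3))      # thresholds between levels
print(round(100 * noise_to_signal(0.925), 1))  # CBM
print(round(100 * noise_to_signal(0.873), 1))  # number of items correct
```

With the rounded α values this reading gives about 8.1% and 14.5%, close to the 8.1% and 14.6% quoted above; the small difference would be consistent with the original figures having been computed from unrounded values.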