Imperial

advertisement

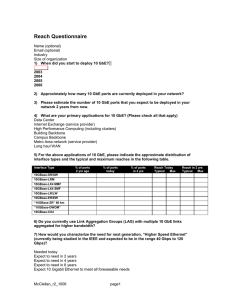

IC HEP Computing Review
Geoff. Fayers, G.J.Fayers@ic.ac.uk

Current Experiment Programme
- Aleph
- BaBar
- CMS
- Dark Matter (Boulby)
- Diamond-based applied research
- D0
- LHCb
- Silicon-based detector development (esp. CMS)
- Zeus

Current Computing Hardware
- DEC4610 AXP
- 5 DEC AXP W/stns (4 more?)
- 2 SUN Enterprise Svrs
- 2 SUN W/stns
- 1 NT Server + BDC
- ~37 PCs
- ~18 X-terms
- Ageing Macs, plus some new G3s and later models
- 2 RIOS PPCs (lynx)
- ~150 assigned IP addrs
- ~1.25 TB storage, excluding some local PC disks
- Printers, mostly HP
- 1 VAXStn 4000/60

Current Software
- DUX 4.0D on all AXP
- Solaris 2.6 on Enterprises (BaBar), Solaris 2.7 otherwise
- SAMBA
- NT4 + Office97/2000 + eXceed at the desktop
- NT4 Server
- Linux 5.2 (D0 FNAL variety)
- AFS on all UNIX
- Europractice selection, incl. Synopsys, HSPICE, Xilinx, et al.
- AutoCAD2000 ++
- Ranger PCB design
- MS and Adobe packages for NT
- (Optivity)
- Dr. Solomon's AVtk

Some Features
- Homogeneous systems; NIS, NIS+, DNS
- Automounted distributed file systems
- Quotas on home dirs.
- User-based scratch areas, 7-day file lifetime
- User-based stage areas
- Per-experiment data areas with rwx rwx r-x permissions
- Recent news headlines at login
- Optional news via e-mail
- Process priority monitor

Concerns - 1
- Non-convergence of Linux versions for LHC, US?
- Potential fragmentation of resources
- Non-scalability of current storage deployment
- Management effort for NT: SMS, other?
- No NT YP map capability (except Solaris6/NT4)
- Vagaries of A/V software
- Possible management of all HTTP traffic by ICCS
- Costs of MS licences and network management tools
- Will 64-bit Linux (e.g. IA64) supplant the PC architecture?
- Possible withdrawal of ULVC Europractice support

Concerns - 2
- Poor US connectivity, esp. to FNAL for D0
- Network management tools?
- Short investment cycles for PCs
- Metaframe: an expensive thin-client solution
- Uncertain future PCI replacement
- Security, security, security, ...
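The scratch and data-area policies listed under Some Features (7-day file lifetime, rwx rwx r-x on per-experiment data areas) lend themselves to a small nightly housekeeping job. The slides do not say how these policies are enforced, so what follows is only a sketch under stated assumptions: Python 3 on the UNIX hosts, file age judged by modification time, and hypothetical function names `purge_old_scratch` and `set_data_area_mode`.

```python
import os
import stat
import time
from pathlib import Path
from typing import List, Optional

# Assumption: lifetime measured from last modification time (mtime).
SCRATCH_LIFETIME_DAYS = 7
# rwx rwx r-x: owner and group get full access, others read/execute only.
DATA_AREA_MODE = 0o775


def purge_old_scratch(root: Path,
                      max_age_days: float = SCRATCH_LIFETIME_DAYS,
                      now: Optional[float] = None) -> List[Path]:
    """Hypothetical helper: delete regular files under `root` older than max_age_days.

    Symlinks and directories are left alone. Returns the paths removed.
    """
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400  # seconds per day
    removed: List[Path] = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            try:
                st = path.lstat()  # lstat: do not follow symlinks
            except FileNotFoundError:
                continue  # file vanished between listing and stat
            if stat.S_ISREG(st.st_mode) and st.st_mtime < cutoff:
                path.unlink()
                removed.append(path)
    return removed


def set_data_area_mode(area: Path) -> None:
    """Hypothetical helper: apply rwx rwx r-x to a per-experiment data directory."""
    os.chmod(area, DATA_AREA_MODE)
```

Passing `now` explicitly keeps the purge deterministic for testing; a cron entry would simply call `purge_old_scratch` on each user scratch area with the default.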
Networking before Summer 1999
- 10Base5, 10Base2
- 16-port 10BaseT switch, half duplex only
- NFS traffic via 2nd NICs through the switch
- 5/10/20/80 m non-standard custom braided fly leads only
- Limited division of the 10Base2 collision domain to ease congestion
- Tolerable response (mostly)
- WAN via DECnis600 router: 10Base5 to AGS4+ on Campus FDDI (IP routing only)

Networking Upgrade
Upgrade Summer 1999. Decisions:
- Expected lifetime
- Likely technologies and services required
- Density of service ports
- Future campus connectivity
- Supporting hardware

Expected Lifetime
- Existing 10Base5/2: 10 years old; new system: at least 10 years
- DECnis600: ~6 years old; new network hardware: at least 5 years

Future Technologies & Services - 1
Summer 1999:
- 802.1D bridging standard updated and fully ratified as 802.1D-1998 (all 802.* technologies embraced)
- GBE adaptors at commodity prices, but...
  - PCs too slow
  - PCI-X an interim solution
- GBE as 1000BaseSX and 1000BaseLX only
- GBE over Class D/E UTP expected Mar. 2000
- Proprietary Class E solutions emerging for 4 x 250 MHz
- Class F as 4 x 300 MHz on the horizon (but irrelevant for HEP?)

Future Technologies & Services - 2
- IEEE HSSG already convened (appendix)
- LANE still complicated
- DWDM very likely in SJ4; ATM will wane

The Other Issues
- Current aggregated b/w > 3.7 GB/sec, so Catalyst 5000 and some competitors useless (received wisdom: switching capacity of 2 x aggregate)
- Increasing use of laptops
- Outlet density to ISO standards: high
- No ATM adaptors for CoreBuilder 3500s, so...
  - ICCS ATM spine strategy ditched...
  - Campus CellPlex7000s dumped in favour of 9300s (GBE)
  - Stuck with CoreBuilder 3500 100BaseFX uplinks (poor performance, 802.3q implementation lagging)
- Possible need for ISDN
- DECnis600 replacement routing capability

Structured Cabling Decision
- Full Cat5e compatibility and compliance mandatory
- Mixture of Class D and Class E proprietary UTP
- MMF and SMF unterminated in corridor ceiling voids
- Insist on Class E performance testing to 250 MHz
- Insist on full compliance with the draft Class E standard
- Cat6 guarantee underpinned by manufacturer indemnity
- Only tied certified installers considered
- Leverage early take-up and prestige potential

Implementation
- 8 'Cat6' passive component manufacturers evaluated
- Only one tested fully in the 200-300 MHz envelope
- Got flood Cat6 at 10% more than Cat5
- 17.5 GB/sec non-blocking Extreme Summit48: layer 2 and layer 3 switching, 4 levels of QoS (upgradable), 2 x 1000BaseSX ports, optional 1000BaseLX transceivers
- HP ProCurve 2424M 10/100 switches
- HP ProCurve 24 10/100 hubs
- 100BaseFX uplinks to Campus spine

Problems
- Completed Dec 1999
- Test results presented on CD
- OK but...
  - Manufacturing fault on some ports; resolution in hand
  - HP 2424M fault; HP to replace (lifetime on-site warranty)

Future
- RAID-based storage, possibly a home-grown solution
- Non-proprietary SAN based on a dual-CPU 64-bit server, GBE to the Extreme
- Linux farm(s) with 100BaseT CPUs
- DHCP
- WAN connectivity resilience via FOIL?
- Possible Campus Spine bypass?
- Managed bandwidth pilot (BaBar)
- H.323/320 VC