Transformations - Crop and Soil Science


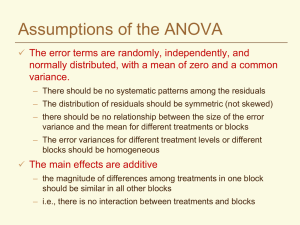

Assumptions of the ANOVA

The error terms are randomly, independently, and normally distributed, with a mean of zero and a common variance.
- There should be no systematic patterns among the residuals.
- The distribution of residuals should be symmetric (not skewed).
- There should be no relationship between the size of the error variance and the mean for different treatments or blocks.
- The error variances for different treatment levels or different blocks should be homogeneous (of similar magnitude).

The main effects are additive.
- The magnitude of differences among treatments in one block should be similar in all other blocks.
- That is, there is no interaction between treatments and blocks.

If the ANOVA assumptions are violated:
- The sensitivity of the F test is affected.
- The significance level of mean comparisons may be much different than it appears to be.
- The conclusions may be invalid.

Diagnostics

Use descriptive statistics to check the assumptions before you analyze the data:
- Means, medians, and quartiles for each group (histograms, box plots)
- Tests for normality and additivity
- Comparison of the variances for each group

Examine the residuals after fitting the model in your analysis:
- Descriptive statistics of residuals
- Normal plot of residuals
- Plots of residuals in order of observation
- Relationship between residuals and predicted values (fitted values)

SAS Box Plots

[Figure: annotated SAS box plot marking the mean, median, 25% quartile, IQR, minimum, and outliers (> 1.5 × IQR)]

Look for:
- Outliers (observations more than 1.5 × IQR beyond the box)
- Skewness
- Common variance

Caution: box plots are of limited value when there are not many observations per group. IQR = interquartile range (25th to 75th percentile).

Additivity

Linear additive model for each experimental design:

$$Y_{ij} = \mu + \tau_i + \varepsilon_{ij} \quad \text{(CRD)}$$

$$Y_{ij} = \mu + \beta_i + \tau_j + \varepsilon_{ij} \quad \text{(RBD)}$$

The RBD model implies that a treatment effect is the same for all blocks and that the block effect is the same for all treatments.

When the assumption would not be correct...
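The box-plot checks above (median vs. mean for skewness, IQR for spread, the 1.5 × IQR rule for outliers) can be computed per group before plotting. This is a minimal sketch using only the Python standard library; the function name and the replicate values are hypothetical, and the quartiles use Python's "exclusive" method, which may not match the quartile definition SAS uses, so values can differ slightly.

```python
from statistics import mean, median, quantiles


def box_plot_summary(values, k=1.5):
    """Summarize one treatment group the way a box plot does.

    Quartiles use Python's 'exclusive' method (an assumption); other
    packages, including SAS, may define quartiles differently.
    """
    q1, _, q3 = quantiles(values, n=4, method="exclusive")
    iqr = q3 - q1
    low_fence, high_fence = q1 - k * iqr, q3 + k * iqr
    return {
        "mean": mean(values),
        "median": median(values),
        "q1": q1,
        "q3": q3,
        "iqr": iqr,
        "min": min(values),
        "max": max(values),
        # observations more than k * IQR beyond the box are flagged
        "outliers": [v for v in values if v < low_fence or v > high_fence],
    }


if __name__ == "__main__":
    # Hypothetical replicate values for two treatment groups
    groups = {"T1": [10, 12, 11, 13, 12, 30], "T2": [3, 6, 9, 12, 15]}
    for name, vals in groups.items():
        s = box_plot_summary(vals)
        # a mean well above the median hints at right skew
        print(name, s["median"], round(s["mean"], 2), s["iqr"], s["outliers"])
```

With so few observations per group, a single extreme value (30 in T1) both pulls the mean above the median and is flagged as an outlier, which is why the caution above matters.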
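[Figure: two nitrogen treatments applied to three blocks (1, 2, 3) along a water-table gradient]

Differences between treatments might be greater in block 3. When there is an interaction between blocks and treatments, the model is no longer additive. It may be multiplicative; for example, when one treatment always exceeds another by a certain percentage.

[Figure: SAS interaction plot]

Testing Additivity: Tukey's Test

The test is applicable to any two-way classification, such as an RBD classified by blocks and treatments.
- Compute a table with the raw data, treatment means, treatment effects $(\bar{Y}_{.j} - \bar{Y}_{..})$, block means, and block effects $(\bar{Y}_{i.} - \bar{Y}_{..})$.
- Compute $Q = \sum_i \sum_j Y_{ij}\,(\bar{Y}_{i.} - \bar{Y}_{..})(\bar{Y}_{.j} - \bar{Y}_{..})$.
- Compute the SS for nonadditivity, with 1 df: $SS_N = \dfrac{Q^2 N}{SST \cdot SSB}$, where $N = tr$ and SST and SSB are the treatment and block sums of squares.
- The error term is partitioned into nonadditivity (1 df) and residual ($(r-1)(t-1) - 1$ df), and nonadditivity is tested against the residual with an F test.

The test can also be done with SAS.

Residuals

Residuals are the error terms: what is left over after accounting for all of the effects in the model.

$$Y_{ij} = \mu + \tau_i + \varepsilon_{ij}, \qquad e_{ij} = Y_{ij} - \bar{Y}_{..} - T_i \quad \text{(CRD)}$$

$$Y_{ij} = \mu + \beta_i + \tau_j + \varepsilon_{ij}, \qquad e_{ij} = Y_{ij} - \bar{Y}_{..} - B_i - T_j \quad \text{(RBD)}$$

where, for the CRD, $T_i = \bar{Y}_{i.} - \bar{Y}_{..}$ (so $e_{ij} = Y_{ij} - \bar{Y}_{i.}$), and, for the RBD, $B_i = \bar{Y}_{i.} - \bar{Y}_{..}$ and $T_j = \bar{Y}_{.j} - \bar{Y}_{..}$ are the estimated block and treatment effects.

Independence

Independence implies that the error (residual) for one observation is unrelated to the error for another.
- Adjacent plots are more similar than randomly scattered plots, so the best insurance is randomization.
- In some cases it may be better to throw out a randomization that could lead to biased estimates of treatment effects.
- Observations in a time series may be correlated (and randomization may not be possible).

Normality

- Look at stem-and-leaf plots and box plots of the residuals.
- Look at normal probability plots.
- Minor deviations from normality are not generally a problem for the ANOVA.

[Figure: normal probability plot of residuals from the original data; x-axis: quantiles of standard normal, y-axis: residuals]

[Figure: normal probability plot of residuals from the same data after transformation; x-axis: quantiles of standard normal, y-axis: residuals]

Homogeneity of Variances

| Treatment | Rep 1 | Rep 2 | Rep 3 | Rep 4 | Rep 5 | Total | Mean | s² |
|---|---|---|---|---|---|---|---|---|
| A | 3 | 1 | 5 | 4 | 2 | 15 | 3 | 2.5 |
| B | 6 | 8 | 7 | 4 | 5 | 30 | 6 | 2.5 |
| C | 12 | 6 | 9 | 3 | 15 | 45 | 9 | 22.5 |
| D | 20 | 14 | 11 | 17 | 8 | 70 | 14 | 22.5 |

Logic would tell us that the differences required for significance would be greater for the two highly variable treatments (C and D).

If we analyzed all four treatments together:

| Source | df | SS | MS | F |
|---|---|---|---|---|
| Treatments | 3 | 330 | 110 | 8.8** |
| Error | 16 | 200 | 12.5 | |

LSD = 4.74

Conclusions would be different if we analyzed the two groups separately:

Analysis for A and B

| Source | df | SS | MS | F |
|---|---|---|---|---|
| Treatments | 1 | 22.5 | 22.5 | 9.0* |
| Error | 8 | 20.0 | 2.5 | |

Analysis for C and D

| Source | df | SS | MS | F |
|---|---|---|---|---|
| Treatments | 1 | 62.5 | 62.5 | 2.78 |
| Error | 8 | 180 | 22.5 | |

(* and ** denote significance at the 5% and 1% levels.) With the pooled LSD of 4.74, A and B (difference of 3) would not differ while C and D (difference of 5) would; the separate analyses reach the opposite conclusions.

Relationships of Means and Variances

A relationship between means and variances is the most common cause of heterogeneity of variance. Example: testing the effect of a new vitamin on the weights of animals.

[Figure: what you see vs. what the ANOVA assumes]

Examining the error terms

Take each observation and remove the general mean, the treatment effects, and the block effects; what is left is the error term for that observation.

The model:

$$Y_{ij} = \bar{Y}_{..} + \beta_i + \tau_j + e_{ij}$$

Block effect $\beta_i = \bar{Y}_{i.} - \bar{Y}_{..}$; treatment effect $\tau_j = \bar{Y}_{.j} - \bar{Y}_{..}$, so

$$Y_{ij} = \bar{Y}_{..} + (\bar{Y}_{i.} - \bar{Y}_{..}) + (\bar{Y}_{.j} - \bar{Y}_{..}) + e_{ij}$$

then

$$Y_{ij} = \bar{Y}_{i.} + \bar{Y}_{.j} - \bar{Y}_{..} + e_{ij}$$

Finally,

$$e_{ij} = Y_{ij} - \bar{Y}_{i.} - \bar{Y}_{.j} + \bar{Y}_{..}$$

Looking at the error components

| Block | A | B | C | D | Mean |
|---|---|---|---|---|---|
| I | 47 | 50 | 57 | 54 | 52 |
| II | 52 | 54 | 53 | 65 | 56 |
| III | 62 | 67 | 69 | 74 | 68 |
| IV | 51 | 57 | 57 | 59 | 56 |
| Mean | 53 | 57 | 59 | 63 | 58 |

$e_{11} = 47 - 52 - 53 + 58 = 0$

Residuals:

| Block | A | B | C | D | Mean |
|---|---|---|---|---|---|
| I | 0 | -1 | 4 | -3 | 0 |
| II | 1 | -1 | -4 | 4 | 0 |
| III | -1 | 0 | 0 | 1 | 0 |
| IV | 0 | 2 | 0 | -2 | 0 |
| Mean | 0 | 0 | 0 | 0 | 0 |

Looking at the error components

| Block | A | B | C | D | E | F | Mean |
|---|---|---|---|---|---|---|---|
| I | 0.18 | 0.32 | 2.0 | 2.5 | 108 | 127 | 40 |
| II | 0.30 | 0.40 | 3.0 | 3.3 | 140 | 153 | 50 |
| III | 0.28 | 0.42 | 1.8 | 2.5 | 135 | 148 | 48 |
| IV | 0.44 | 0.46 | 2.8 | 3.3 | 165 | 176 | 58 |
| Mean | 0.3 | 0.4 | 2.4 | 2.9 | 137 | 151 | 49 |

$e_{11} = 0.18 - 40 - 0.3 + 49 = 8.88$

Residuals:

| Trt | I | II | III | IV |
|---|---|---|---|---|
| A | 8.88 | -1.00 | 0.98 | -8.86 |
| B | 8.92 | -1.00 | 1.02 | -8.94 |
| C | 8.60 | -0.40 | 0.40 | -8.60 |
| D | 8.60 | -0.60 | 0.60 | -8.60 |
| E | -20.00 | 2.00 | -1.00 | 19.00 |
| F | -15.00 | 1.00 | -2.00 | 16.00 |

Predicted values

Remember

$$Y_{ij} = \bar{Y}_{..} + \beta_i + \tau_j + e_{ij} = \bar{Y}_{i.} + \bar{Y}_{.j} - \bar{Y}_{..} + e_{ij}$$

Predicted value:

$$\hat{Y}_{ij} = \bar{Y}_{..} + \beta_i + \tau_j = \bar{Y}_{i.} + \bar{Y}_{.j} - \bar{Y}_{..}$$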
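The decomposition above (fitted value = block mean + treatment mean − grand mean; residual = observation − fitted value) and Tukey's one-df nonadditivity SS can be checked with a short script. This is a minimal sketch in plain Python, assuming a complete RBD table with blocks as rows and treatments as columns; the function names are hypothetical. The SS formula is the one given in the Tukey's test slide, $SS_N = Q^2 N/(SST \cdot SSB)$.

```python
def rbd_residuals(y):
    """Fitted values and residuals for an additive RBD model.

    y[i][j] is the observation in block i (rows) for treatment j (columns).
    Fitted:   Yhat_ij = Ybar_i. + Ybar_.j - Ybar_..
    Residual: e_ij    = Y_ij - Ybar_i. - Ybar_.j + Ybar_..
    """
    r, t = len(y), len(y[0])
    grand = sum(map(sum, y)) / (r * t)
    block_means = [sum(row) / t for row in y]
    trt_means = [sum(row[j] for row in y) / r for j in range(t)]
    fitted = [[block_means[i] + trt_means[j] - grand for j in range(t)]
              for i in range(r)]
    resid = [[y[i][j] - fitted[i][j] for j in range(t)] for i in range(r)]
    return grand, block_means, trt_means, fitted, resid


def tukey_nonadditivity(y):
    """Tukey's one-degree-of-freedom test for nonadditivity in an RBD.

    Returns (SS_N, SS_residual, df_residual, F).
    """
    r, t = len(y), len(y[0])
    grand, block_means, trt_means, _, resid = rbd_residuals(y)
    b = [m - grand for m in block_means]   # block effects
    d = [m - grand for m in trt_means]     # treatment effects
    q = sum(y[i][j] * b[i] * d[j] for i in range(r) for j in range(t))
    ssb = t * sum(x * x for x in b)        # block SS
    sst = r * sum(x * x for x in d)        # treatment SS
    ss_n = q * q * (r * t) / (sst * ssb)   # 1 df
    sse = sum(e * e for row in resid for e in row)  # (r-1)(t-1) df
    df_res = (r - 1) * (t - 1) - 1
    ss_res = sse - ss_n
    return ss_n, ss_res, df_res, ss_n / (ss_res / df_res)


if __name__ == "__main__":
    # First error-components example: blocks I-IV, treatments A-D
    y = [[47, 50, 57, 54], [52, 54, 53, 65], [62, 67, 69, 74], [51, 57, 57, 59]]
    *_, resid = rbd_residuals(y)
    for row in resid:
        print(row)
    print(tukey_nonadditivity(y))
```

Run on the four-treatment table, the residuals reproduce the residual table exactly. Applied to the six-treatment table, it gives -2.00 for treatment F in block III, which is the value that makes that row of residuals sum to zero.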
Plots of $e_{ij}$ vs. $\hat{Y}_{ij}$ should be random. Plots of $e_{ij}$ vs. $Y_{ij}$ will show a correlation, because $e_{ij}$ is computed from $Y_{ij}$.

Residual Plots

A valuable tool for examining the validity of the assumptions for ANOVA: you should see a random scattering of points on the plot.
- For simple models, there may be a limited number of groups on the predicted axis.
- Look for random dispersion of residuals above and below zero.

Residual Plots: Outlier Detection

- Recheck the data input and correct obvious errors.
- If an outlier is suspected, look at the studentized residuals $e^*_{ij}$. For a CRD:

$$s_{e_{ij}} = \sqrt{MSE\,\frac{r_i - 1}{r_i}}, \qquad e^*_{ij} = \frac{e_{ij}}{s_{e_{ij}}}$$

- Treat the observation as a missing plot if it is too extreme (e.g., $|e^*_{ij}| > 3$ or 4).

[Figure: residuals ($e_{ij}$) vs. predicted values, with outliers marked]

Visual Scores

Values are discrete:
- They do not follow a normal distribution.
- The range of possible values is limited.

Alternatives?

[Figure: residual plot of stand ratings vs. predicted values, and frequency histogram of plant stand rating scores (3 to 8)]

Are the errors randomly distributed?

Here the residuals are not randomly distributed around zero; they follow a pattern. The model may not be adequate, e.g., fitting a straight regression line when the response is curvilinear.

[Figure: "Model not adequate": residuals vs. predicted values]

Are variances homogeneous?

In this example the variance of the errors increases with the mean (note the fan shape), so we cannot assume a common variance for all treatments.

[Figure: residuals vs. predicted values showing a fan shape]

Homogeneity Quick Test (F Max Test)

Compute the ratio of the largest variance to the smallest and compare it with a probability table of ratios for a quick test. The null hypothesis is that the variances are equal, so if your computed ratio is greater than the table value (Kuehl, Table VIII), you reject the null hypothesis.
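The quick test amounts to one division plus a table lookup. A minimal sketch, assuming you supply the critical value yourself from an F max table such as Kuehl's Table VIII (the code does not compute it); the function names are hypothetical, and the numbers are the sorghum variances and table value used in the example that follows, plus the A-D replicates from the homogeneity example.

```python
from statistics import variance


def f_max(variances):
    """Ratio of the largest to the smallest variance (the F max statistic)."""
    if len(variances) < 2 or min(variances) <= 0:
        raise ValueError("need two or more positive variances")
    return max(variances) / min(variances)


def f_max_quick_test(variances, table_value):
    """Return (ratio, reject_h0).

    table_value is NOT computed here: look it up for t = len(variances)
    and v = df of each variance (e.g., Kuehl, Table VIII).
    """
    ratio = f_max(variances)
    return ratio, ratio > table_value


if __name__ == "__main__":
    # Treatment variances from the sorghum example (t = 7, v = 3)
    sorghum = [19.54, 1492.27, 98.21, 5437.99, 9.03, 496.58, 22.94]
    ratio, reject = f_max_quick_test(sorghum, table_value=72.9)
    print(round(ratio, 2), reject)  # 602.21 True

    # Variances can also be computed from raw replicates (A-D example)
    reps = [[3, 1, 5, 4, 2], [6, 8, 7, 4, 5], [12, 6, 9, 3, 15], [20, 14, 11, 17, 8]]
    print(f_max([variance(r) for r in reps]))  # 9.0
```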
$$F_{max} = \frac{s^2_{max}}{s^2_{min}}$$

where t = number of independent variances (mean squares) that you are comparing, and v = degrees of freedom associated with each mean square.

An Example

An RBD experiment with four blocks to determine the effect of salinity on the application of N and P on sorghum:

| Treatment | N | P | N+P | S | N+S | P+S | N+P+S |
|---|---|---|---|---|---|---|---|
| Variance | 19.54 | 1492.27 | 98.21 | 5437.99 | 9.03 | 496.58 | 22.94 |

- $F_{max} = 5437.99 / 9.03 = 602.21$
- Table value ($t = 7$, $v = r - 1 = 3$) = 72.9
- $602.21 > 72.9$, so reject the null hypothesis and conclude that the variances are NOT homogeneous (equal).

Other HOV tests are more sensitive

If the quick test indicates that the variances are not equal (homogeneous), there is no need to test further. But if the quick test indicates that the variances ARE homogeneous, you may want to go further with a Levene (Med) test or Bartlett's test, which are more sensitive. This is especially true for relatively small values of t and v.

F max, Levene (Med), and Bartlett's tests can be adapted to evaluate the homogeneity of error variances from different sites in multilocational trials.

Homogeneity of Variances: Tests

Johnson (1981) compared 56 tests for homogeneity of variance and found the Levene (Med) test to be one of the best.
- It is based on deviations of observations from the median for each treatment group.
- The test statistic is compared to a critical $F_{\alpha,\,t-1,\,N-t}$ value.
- In SAS, this test (Brown and Forsythe's variant of Levene's test) is requested with the HOVTEST=BF option of the MEANS statement in PROC GLM.

Bartlett's test is also common.
- It is based on a chi-square test with $t - 1$ df.
- If the calculated value is greater than the tabular value, the variances are heterogeneous.

What to do if assumptions are violated?
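Both statistics described above can be computed in a few lines. This is a minimal sketch in plain Python using the A-D replicate data from the homogeneity example; the function names are hypothetical, and the critical F and chi-square values still have to come from tables. Levene (Med) is implemented as a one-way ANOVA F on the absolute deviations from each group's median; Bartlett's statistic uses the usual correction factor $C = 1 + \frac{1}{3(t-1)}\left(\sum \frac{1}{n_i - 1} - \frac{1}{N - t}\right)$.

```python
from math import log
from statistics import median, variance


def levene_med(groups):
    """Levene (Med) statistic: one-way ANOVA F on |y - group median|.

    Compare with a critical F value on (t - 1, N - t) df.
    """
    z = [[abs(v - median(g)) for v in g] for g in groups]
    t = len(z)
    n = sum(len(g) for g in z)
    zbar = [sum(g) / len(g) for g in z]
    grand = sum(map(sum, z)) / n
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(z, zbar))
    ss_within = sum((v - m) ** 2 for g, m in zip(z, zbar) for v in g)
    return (ss_between / (t - 1)) / (ss_within / (n - t))


def bartlett(groups):
    """Bartlett's statistic; compare with chi-square on t - 1 df."""
    t = len(groups)
    dfs = [len(g) - 1 for g in groups]
    s2 = [variance(g) for g in groups]
    df_tot = sum(dfs)
    pooled = sum(d * s for d, s in zip(dfs, s2)) / df_tot  # pooled variance
    m = df_tot * log(pooled) - sum(d * log(s) for d, s in zip(dfs, s2))
    c = 1 + (sum(1 / d for d in dfs) - 1 / df_tot) / (3 * (t - 1))
    return m / c


if __name__ == "__main__":
    # Replicates for treatments A-D from the homogeneity-of-variances table
    groups = [[3, 1, 5, 4, 2], [6, 8, 7, 4, 5],
              [12, 6, 9, 3, 15], [20, 14, 11, 17, 8]]
    print(round(levene_med(groups), 2), round(bartlett(groups), 2))
```

For these data the sketch gives F of about 2.74 (3 and 16 df) and a chi-square of about 7.40 (3 df). If SciPy is available, `scipy.stats.levene(*groups, center="median")` and `scipy.stats.bartlett(*groups)` should give matching statistics and also report p-values.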
- Divide your experiment into subsets of blocks or treatments that meet the assumptions and conduct separate analyses.
- Transform the data and repeat the analysis. This is appropriate when the residuals follow another distribution (e.g., binomial, Poisson) or when there is a specific relationship between means and variances. The residuals of the transformed data must meet the ANOVA assumptions.
- Use a nonparametric test:
  - no assumptions are made about the distribution of the residuals
  - most are based on ranks
  - some information is lost
  - they are generally less powerful than parametric tests
- Use a Generalized Linear Model (PROC GLIMMIX in SAS): make the model fit the data, rather than changing the data to fit the model.

Relationships between means and variances...

You can usually tell just by looking: do the variances increase as the means increase? If so, construct a table of the ratios of variance to mean and of standard deviation to mean. Determine which is more nearly proportional: the ratio that remains more constant is the one that is more nearly proportional. This information is necessary to know which transformation to use; the idea is to convert a known probability distribution to a normal distribution.

Comparing Ratios: Which Transformation?

| Trt | Mean | Var | SDev | Var/M | SDev/M |
|---|---|---|---|---|---|
| M-C | 0.3 | 0.01147 | 0.107 | 0.04 | 0.36 |
| M-V | 0.4 | 0.00347 | 0.059 | 0.01 | 0.15 |
| C-C | 2.4 | 0.3467 | 0.589 | 0.14 | 0.24 |
| C-V | 2.9 | 0.2133 | 0.462 | 0.07 | 0.16 |
| S-C | 137.0 | 546.0 | 23.367 | 3.98 | 0.17 |
| S-V | 151.0 | 425.3 | 20.624 | 2.82 | 0.14 |

The SDev is roughly proportional to the mean: SDev/M stays between 0.14 and 0.36, whereas Var/M ranges from 0.01 to 3.98.

The Log Transformation

When the standard deviations (not the variances) of samples are roughly proportional to the means, the log transformation is most effective.
- It is common for counts that vary across a wide range of values, such as numbers of insects or the number of diseased plants per plot.
- It is also applicable if there is evidence of multiplicative rather than additive main effects; e.g., an insecticide reduces numbers of insects by 50%, or early growth of seedlings may be proportional to the current size of the plants.

General remarks...
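The ratio comparison above can be scripted so that the choice between the log and square-root transformations is explicit. This sketch recomputes Var/M and SDev/M from the Mean and Var columns of the table; judging "more constant" by the max/min ratio of each column is an assumption of this example rather than a rule from the source, and the function names are hypothetical.

```python
from math import sqrt

# (mean, variance) for each treatment, copied from the comparison table
TABLE = {
    "M-C": (0.3, 0.01147),
    "M-V": (0.4, 0.00347),
    "C-C": (2.4, 0.3467),
    "C-V": (2.9, 0.2133),
    "S-C": (137.0, 546.0),
    "S-V": (151.0, 425.3),
}


def ratio_columns(table):
    """Return {trt: (var / mean, sd / mean)}."""
    return {k: (v / m, sqrt(v) / m) for k, (m, v) in table.items()}


def spread(values):
    """Max/min of a column; 1.0 would mean a perfectly constant ratio."""
    values = list(values)
    return max(values) / min(values)


def suggest_transformation(table):
    """SD/mean more constant -> log; var/mean more constant -> square root."""
    cols = ratio_columns(table)
    var_spread = spread(r[0] for r in cols.values())
    sd_spread = spread(r[1] for r in cols.values())
    return "log" if sd_spread < var_spread else "square root"


if __name__ == "__main__":
    for trt, (vm, sm) in ratio_columns(TABLE).items():
        print(trt, round(vm, 2), round(sm, 2))
    print(suggest_transformation(TABLE))
```

For the table above, the SDev/M column varies by a factor of under 3 while Var/M varies by a factor of several hundred, so the sketch points to the log transformation.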
- Data with negative values cannot be transformed with logs, and zeros present a special problem.
- If zeros or negative values are present, add a constant (commonly 1) to all data points before transforming, so that every value is positive.
- You can multiply all data points by a constant without violating any rules. Do this if any of the data points are less than 1 (to avoid negative logs).

Recheck...

- After transformation, rerun the ANOVA on the transformed data.
- Recheck the transformed data against the assumptions for the ANOVA:
  - Look at residual plots and normal plots.
  - Carry out Levene's test or Bartlett's test for homogeneity of variance.
  - Apply Tukey's test for additivity.
- Beware that a transformation that corrects one violation of the assumptions may introduce another.

Square Root Transformation

One of a family of power transformations.
- Use it when the variance tends to be proportional to the mean; e.g., if leaf length is normally distributed, then leaf area may require a square root transformation.
- Use it when you have counts of rare events in time or space, such as the number of insects caught in a trap. These may follow a Poisson distribution (for discrete variables).
- If there are counts under 10, it is best to use $\sqrt{Y + 0.5}$.
- It will be easier to declare significant differences in mean separation.
- When reporting, "detransform" the means: present summary mean tables on the original scale.

Arcsin or Angular Transformation: $\arcsin\sqrt{Y_{ij}}$

- Counts expressed as percentages or proportions of the total sample may require transformation.
- They follow a binomial distribution: variances tend to be small at both ends of the range of values (close to 0 and 100%).
- Not all percentage data are binomial in nature; e.g., grain protein is a continuous, quantitative variable that would tend to follow a normal distribution.
- If appropriate, the transformation usually helps in mean separation.
- Data should be transformed if the range of percentages is greater than 40.
- It may not be necessary for percentages in the range of 30-70%.
- If percentages are in the range of 0-30% or 70-100%, a square root transformation may be better.
- Do not include treatments that are fixed at 0% or at 100%.
- Percentages are converted to an angle expressed in degrees or in radians. Express $Y_{ij}$ as a decimal fraction; the result is in radians (1 radian = 57.296 degrees).

Summary of Transformations

| Type of data | Issue | Transformation |
|---|---|---|
| Quantitative variable where treatment effects are proportional | Natural scale is not normal (often right-skewed); lognormal distribution | Log |
| Positive integers that cover a wide range of values | Standard deviation proportional to the mean and/or nonadditivity | Log or log(Y+1) |
| Counts of rare events | Variance = mean; Poisson distribution | Square root or sqrt(Y+0.5) |
| Percentages, wide range of values including extremes | Variances are smaller near 0 and 100%; binomial distribution | ArcSin |

Reasons for Transformation

- We don't use transformation just to give us results more to our liking.
- We transform data so that the analysis will be valid and the conclusions correct.

Remember:
- All tests of significance and mean separation should be carried out on the transformed data.
- Calculate the means of the transformed data before “detransforming”.
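The transformations in the summary table, and the rule of averaging on the transformed scale before detransforming, can be sketched as follows. Assumptions: base-10 logs (logs in different bases differ only by a constant factor, so the ANOVA results are the same), percentages passed in on a 0-100 scale, and hypothetical function names.

```python
from math import asin, degrees, log10, radians, sin, sqrt
from statistics import mean


def log_t(y, c=1.0):
    """log10(Y + c); c = 1 handles zeros in counts."""
    return log10(y + c)


def log_back(z, c=1.0):
    return 10 ** z - c


def sqrt_t(y, c=0.5):
    """sqrt(Y + 0.5), suggested when there are counts under 10."""
    return sqrt(y + c)


def sqrt_back(z, c=0.5):
    return z * z - c


def arcsin_t(pct):
    """Angular transformation of a percentage (0-100), returned in degrees."""
    return degrees(asin(sqrt(pct / 100)))  # asin itself returns radians


def arcsin_back(angle):
    """Degrees back to a percentage."""
    return 100 * sin(radians(angle)) ** 2


def detransformed_mean(values, forward, back):
    """Average on the transformed scale, then convert the mean back."""
    return back(mean(forward(v) for v in values))


if __name__ == "__main__":
    counts = [0, 3, 8]  # hypothetical counts of a rare event
    print(mean(counts), detransformed_mean(counts, sqrt_t, sqrt_back))
    print(arcsin_t(50))  # 50% corresponds to an angle of 45 degrees
```

For right-skewed data the detransformed mean comes out below the arithmetic mean of the raw data, which is why the means reported on the original scale should be computed this way rather than taken from the untransformed analysis.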