Risk Management - Software Engineering II

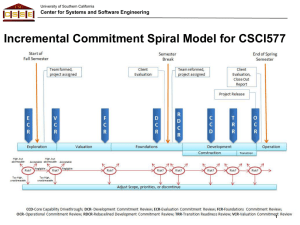

University of Southern California
Center for Systems and Software Engineering
©USC-CSSE

Risk Management

Risk vs Issue

• A Risk is an uncertain event that could impact your chosen path should it be realised.
• Risks are events that are not currently affecting you – they haven’t happened yet.
• Once a risk is realised, it has the potential to become an Issue.

Source: http://agile101.net/2009/07/26/agile-risk-management-the-difference-between-risks-and-issues/

Risk Management

• What is risk?
• What about problem, concern, issue?

Reactively Managing a Software Development Problem

System Integration Time:
• We just started integrating the various software components to be used in our project, and we found out that COTS* products A and B can’t talk to one another
• This is a problem, caused by a previously unrecognized risk, that materialized:
  – The risk that two COTS products might not be able to talk to one another; specifically, that A and B might not be able to talk to one another
• We’ve got too much tied up in A and B to change
• Our best solution is to build wrappers around A and B to get them to talk via CORBA**
• This will result in:
  – a 3 month schedule overrun = $100K contract penalty
  – a $300K cost overrun

*COTS: Commercial off-the-shelf
**CORBA: Common Object Request Broker Architecture

Proactively Managing a Risk (assessment)

System Design Time:
– A and B are our strongest COTS choices
– But there is some chance that they can’t talk to one another
  • Probability that A and B can’t talk to one another = probability of loss: P(L)
  • From previous experience with COTS like A and B, we assess P(L) at 50%
– If we commit to using A and B, and we find out at integration time that they can’t talk to one another
  • Size of loss S(L) = $300K + $100K = $400K
  • We have a risk exposure of RE = P(L) * S(L) = (0.5) * ($400K) = $200K

Risk Management Strategy 1: Buying Information

System Design Time:
– Let’s spend $30K and 2 weeks prototyping the integration of A and B
– This will buy information on the magnitudes of P(L) and S(L)
– If RE = P(L) * S(L) is small, we’ll accept and monitor the risk
– If RE is large, we’ll use one/some of the other strategies

Other Risk Management Strategies

• Risk Avoidance
  – COTS product C is almost as good as B, and we know, from having used A and C, that C can talk to A
  – Delivering on time is worth more to the customer than the small performance loss
• Risk Transfer
  – If the customer insists on using A and B, have them establish a risk reserve, to be used in case A and B can’t talk to each other
• Risk Reduction
  – If we build the wrappers and the CORBA connections right now, we add cost but minimize the schedule delay
• Risk Acceptance
  – If we can solve the A and B interoperability problem, we’ll have a big competitive edge on future procurements
  – Let’s do this on our own money, and patent the solution

Software Risk Management

Risk Management
• Risk Assessment
  – Risk Identification: checklists; decision driver analysis; assumption analysis; decomposition
  – Risk Analysis: performance models; cost models; network analysis; decision analysis; quality factor analysis
  – Risk Prioritization: risk exposure; risk leverage; compound risk reduction
• Risk Control
  – Risk Mgmt Planning: buying information; risk avoidance; risk transfer; risk reduction; risk element planning; risk plan integration
  – Risk Resolution: prototypes; simulations; benchmarks; analyses; staffing
  – Risk Monitoring: milestone tracking; Top-10 tracking; risk reassessment; corrective action

Top 10 Risk Categories: 1989 and 1995

| Rank | 1989 | 1995 |
|---|---|---|
| 1 | Personnel shortfalls | Personnel shortfalls |
| 2 | Schedules and budgets | Schedules, budgets, process |
| 3 | Wrong software functions | COTS, external components |
| 4 | Wrong user interface | Requirements mismatch |
| 5 | Gold plating | User interface mismatch |
| 6 | Requirements changes | Architecture, performance, quality |
| 7 | Externally-furnished components | Requirements changes |
| 8 | Externally-performed tasks | Legacy software |
| 9 | Real-time performance | Externally-performed tasks |
| 10 | Straining computer science | Straining computer science |

Primary CS577 Risk Categories (all on 1995 list) and Examples

• Personnel shortfalls: commitment (This is team member’s last course; only needs C to graduate); compatibility; communication problems; skill deficiencies (management, Web design, Java, Perl, CGI, data compression, …)
• Schedule: project scope too large for 24 weeks; IOC content; critical-path items (COTS, platforms, reviews, …)
• COTS: see next slide re multi-COTS
• Rqts, UI: mismatch to user needs (recall overdue book notices)
• Performance: #bits; #bits/sec; overhead sources
• Externally-performed tasks: Client/Operator preparation; commitment for transition effort

COTS and External Component Risks

• COTS risks: immaturity; inexperience; COTS incompatibility with application, platform, other COTS; controllability
• Non-commercial off-the-shelf components: reuse libraries, government, universities, etc.
  – Qualification testing; benchmarking; inspections; reference checking; compatibility analysis

Risk Exposure Factors (Satellite Experiment Software)

| Unsatisfactory Outcome (UO) | Prob (UO) | Loss (UO) | Risk Exposure |
|---|---|---|---|
| A. S/W error kills experiment | 3-5 | 10 | 30-50 |
| B. S/W error loses key data | 3-5 | 8 | 24-40 |
| C. Fault tolerance features cause unacceptable performance | 4-8 | 7 | 28-56 |
| D. Monitoring software reports unsafe condition as safe | 5 | 9 | 45 |
| E. Monitoring software reports safe condition as unsafe | 5 | 3 | 15 |
| F. Hardware delay causes schedule overrun | 6 | 4 | 24 |
| G. Data reduction software errors cause extra work | 8 | 1 | 8 |
| H. Poor user interface causes inefficient operation | 6 | 5 | 30 |
| I. Processor memory insufficient | 1 | 7 | 7 |
| J. DBMS software loses derived data | 2 | 2 | 4 |

Risk Reduction Leverage (RRL)

Change in Risk Exposure / cost to implement avoidance method

RRL = (RE_BEFORE - RE_AFTER) / RISK REDUCTION COST

• Spacecraft Example

| | Long Duration Test | Failure Mode Tests |
|---|---|---|
| Loss (UO) | $20M | $20M |
| Prob (UO) before | 0.2 | 0.2 |
| RE before | $4M | $4M |
| Prob (UO) after | 0.05 | 0.07 |
| RE after | $1M | $1.4M |
| Cost | $2M | $0.26M |
| RRL | (4 - 1) / 2 = 1.5 | (4 - 1.4) / 0.26 = 10 |

Risk Management Plans

For Each Risk Item, Answer the Following Questions:
1. Why? Risk Item Importance, Relation to Project Objectives
2. What, When? Risk Resolution Deliverables, Milestones, Activity Nets
3. Who, Where? Responsibilities, Organization
4. How? Approach (Prototypes, Surveys, Models, …)
5. How Much? Resources (Budget, Schedule, Key Personnel)

Risk Management Plan: Fault Tolerance Prototyping

1. Objectives (The “Why”)
  – Determine, reduce level of risk of the software fault tolerance features causing unacceptable performance
  – Create a description of and a development plan for a set of low-risk fault tolerance features
2. Deliverables and Milestones (The “What” and “When”)
  – By week 3
    1. Evaluation of fault tolerance option
    2. Assessment of reusable components
    3. Draft workload characterization
    4. Evaluation plan for prototype exercise
    5. Description of prototype
  – By week 7
    6. Operational prototype with key fault tolerance features
    7. Workload simulation
    8. Instrumentation and data reduction capabilities
    9. Draft description, plan for fault tolerance features
  – By week 10
    10. Evaluation and iteration of prototype
    11. Revised description, plan for fault tolerance features

Risk Management Plan: Fault Tolerance Prototyping (concluded)

• Responsibilities (The “Who” and “Where”)
  – System Engineer: G. Smith
    • Tasks 1, 3, 4, 9, 11; support of tasks 5, 10
  – Lead Programmer: C. Lee
    • Tasks 5, 6, 7, 10; support of tasks 1, 3
  – Programmer: J. Wilson
    • Tasks 2, 8; support of tasks 5, 6, 7, 10
• Approach (The “How”)
  – Design-to-Schedule prototyping effort
  – Driven by hypotheses about fault tolerance-performance effects
  – Use real-time OS, add prototype fault tolerance features
  – Evaluate performance with respect to representative workload
  – Refine prototype based on results observed
• Resources (The “How Much”)

| Cost | Resource |
|---|---|
| $60K | Full-time system engineer, lead programmer, programmer: (10 weeks) * (3 staff) * ($2K/staff-week) |
| $0K | 3 dedicated workstations (from project pool) |
| $0K | 2 target processors (from project pool) |
| $0K | 1 test co-processor (from project pool) |
| $10K | Contingencies |
| $70K | Total |

Risk Monitoring

• Milestone Tracking
  – Monitoring of Risk Management Plan milestones
• Top-10 Risk Item Tracking
  – Identify Top-10 risk items
  – Highlight these in monthly project reviews
  – Focus on new entries, slow-progress items
  – Focus review on manager-priority items
• Risk Reassessment
• Corrective Action

Project Top 10 Risk Item List: Satellite Experiment Software

| Risk Item | Mo. Ranking: This | Mo. Ranking: Last | #Mo. | Risk Resolution Progress |
|---|---|---|---|---|
| Replacing Sensor-Control Software Developer | 1 | 4 | 2 | Top Replacement Candidate Unavailable |
| Target Hardware Delivery Delays | 2 | 5 | 2 | Procurement Procedural Delays |
| Sensor Data Formats Undefined | 3 | 3 | 3 | Action Items to Software, Sensor Teams; Due Next Month |
| Staffing of Design V&V Team | 4 | 2 | 3 | Key Reviewers Committed; Need Fault-Tolerance Reviewer |
| Software Fault-Tolerance May Compromise Performance | 5 | 1 | 3 | Fault Tolerance Prototype Successful |
| Accommodate Changes in Data Bus Design | 6 | - | 1 | Meeting Scheduled With Data Bus Designers |
| Testbed Interface Definitions | 7 | 8 | 3 | Some Delays in Action Items; Review Meeting Scheduled |
| User Interface Uncertainties | 8 | 6 | 3 | User Interface Prototype Successful |
| TBDs In Experiment Operational Concept | - | 7 | 3 | TBDs Resolved |
| Uncertainties In Reusable Monitoring Software | - | 9 | 3 | Required Design Changes Small, Successfully Made |

Early Risk Management in 577

| Project Tasks | Risk Management Skills | Skill-building activities |
|---|---|---|
| Select projects; form teams | Project risk identification; staffing risk assessment and resolution | Readings, lectures, homework, case study, guidelines |
| Plan early phases | Schedule/budget risk assessment, planning; risk-driven processes (ICSM) | Readings, lectures, homework, guidelines, planning and estimating tools |
| Formulate, validate concept of operation | Risk-driven level of detail | Readings, lecture, guidelines, project |
| Manage to plans | Risk monitoring and control | Readings, lecture, guidelines, project |
| Develop, validate FC package | Risk assessment and prioritization | Readings, lecture, guidelines, project |
| FC Architecture Review | Risk-driven review process; review of top-N project risks | Readings, lecture, case studies, review |

Software Risk Management Techniques

| Source of Risk | Risk Management Techniques |
|---|---|
| 1. Personnel shortfalls | Staffing with top talent; key personnel agreements; team building; training; tailoring process to skill mix; walkthroughs |
| 2. Schedules, budgets, process | Detailed, multi-source cost and schedule estimation; design to cost; incremental development; software reuse; requirements descoping; adding more budget and schedule; outside reviews |
| 3. COTS, external components | Benchmarking; inspections; reference checking; compatibility prototyping and analysis |
| 4. Requirements mismatch | Requirements scrubbing; prototyping; cost-benefit analysis; design to cost; user surveys |
| 5. User interface mismatch | Prototyping; scenarios; user characterization (functionality, style, workload); identifying the real users |
| 6. Architecture, performance, quality | Simulation; benchmarking; modeling; prototyping; instrumentation; tuning |
| 7. Requirements changes | High change threshold; information hiding; incremental development (defer changes to later increments) |
| 8. Legacy software | Reengineering; code analysis; interviewing; wrappers; incremental deconstruction |
| 9. COTS, externally-performed tasks | Pre-award audits; award-fee contracts; competitive design or prototyping |
| 10. Straining computer science | Technical analysis; cost-benefit analysis; prototyping; reference checking |

Validation Results on Process Adoption

• Incidents of Process Selection and Direction Changes

| # of teams | Results on Project Process Selection |
|---|---|
| 8/14 | Selected the right process pattern from the beginning |
| 3/14 | Unclear project scope; re-selected the right pattern at the end of the Exploration phase |
| 1/14 | Minor changes in project scope; right at the end of the Valuation phase |
| 1/14 | Major change in Foundations phase |
| 1/14 | Infeasible project scope |

Top 10 Risk Categories: 1995 and 2010

| Rank | 1995 | 2010 |
|---|---|---|
| 1 | Personnel shortfalls | Customer-developer-user team cohesion |
| 2 | Schedules, budgets, process | Personnel shortfalls |
| 3 | COTS, external components | Architecture complexity; quality tradeoffs |
| 4 | Requirements mismatch | Budget and schedule constraints |
| 5 | User interface mismatch | COTS and other independently evolving systems |
| 6 | Architecture, performance, quality | Lack of domain knowledge |
| 7 | Requirements changes | Process Quality Assurance |
| 8 | Legacy software | Requirements volatility; rapid change |
| 9 | Externally-performed tasks | User interface mismatch |
| 10 | Straining computer science | Requirements mismatch |

Top 11 - Risk distribution in CSCI577

[Figure: bar chart of risk distribution; vertical axis 0 to 12; bar labels not recoverable]

Comparing between risks in Fall and Spring

[Figure: bar chart with series "Fall" and "Spring"; vertical axis 0 to 6; bar labels not recoverable]

Conclusions

• Risk management starts on Day One
  – Delay and denial are serious career risks
  – Data provided to support early investment
• Win Win spiral model provides process framework for early risk resolution
  – Stakeholder identification and win condition reconciliation
  – Anchor point milestones
• Risk analysis helps determine “how much is enough”
  – Testing, planning, specifying, prototyping, …
  – Buying information to reduce risk

Quality Management

Outline

• Quality Management
  – In CMMI 1.3
  – In ISO 15288
  – In CSCI577ab

Objectives of QM

• To ensure a high quality process
• in order to deliver high quality products

Quality Management in CMMI 1.3

Process Areas
• Configuration Management (CM)
• Causal Analysis and Resolution (CAR)
• Decision Analysis and Resolution (DAR)
• Integrated Project Management (IPM)
• Measurement and Analysis (MA)
• Organizational Performance Management (OPM)
• Organizational Process Definition (OPD)
• Organizational Process Focus (OPF)
• Organizational Process Performance (OPP)
• Organizational Training (OT)
• Process and Product Quality Assurance (PPQA)
• Product Integration (PI)
• Project Monitoring and Control (PMC)
• Project Planning (PP)
• Quantitative Project Management (QPM)
• Requirements Development (RD)
• Requirements Management (REQM)
• Risk Management (RSKM)
• Supplier Agreement Management (SAM)
• Technical Solution (TS)
• Validation (VAL)
• Verification (VER)

The following process-area slides contain figures only; their content is not recoverable from this transcript:
• PPQA - Process and Product Quality Assurance
• PPQA for Agile development
• CM – Configuration Management
• MA – Measurement and Analysis
• VER - Verification
• VAL - Validation

Quality Management in ISO 15288

Activities
a) Plan quality management.
  1. Establish quality management policies.
  2. Establish organization quality management objectives.
  3. Define responsibilities and authority for implementation of quality management.
b) Assess quality management.
  1. Assess customer satisfaction and report.
  2. Conduct periodic reviews of project quality plans.
  3. Monitor the status of quality improvements on products and services.
c) Perform quality management corrective action.
  1. Plan corrective actions when quality management goals are not achieved.
  2. Implement corrective actions and communicate results through the organization.

Configuration Management in ISO 15288

Activities
a) Plan configuration management.
  1) Define a configuration management strategy.
  2) Identify items that are subject to configuration control.
b) Perform configuration management.
  1) Maintain information on configurations with an appropriate level of integrity and security.
  2) Ensure that changes to configuration baselines are properly identified, recorded, evaluated, approved, incorporated, and verified.

Quality Management in 577ab

• IIV&V
• Configuration Management
• Defect Reporting and Tracking
• Testing
• Buddy Review
• Architecture Review Board
• Core Capability Drive through
• Design Code Review
• Document template
• Sample artifacts

Quality Guidelines

• Design Guidelines
  – Describe design guidelines on how to improve or maintain modularity, reuse and maintenance
  – How the design will map to the implementation
• Coding Guidelines
  – Describe how to document the code in such a way that it can easily be communicated to others

Coding Guidelines

• C: http://www.gnu.org/prep/standards/standards.html
• C++: http://geosoft.no/development/cppstyle.html
• Java: http://geosoft.no/development/javastyle.html
• Visual Basic: http://msdn.microsoft.com/en-us/library/h63fsef3.aspx

Quality Guidelines

• Version Control and History
  – Chronological log of the changes introduced to this unit
• Implementation Considerations
  – Detailed design and implementation for as-built considerations
• Unit Verification
  – Unit / integration test
  – Code walkthrough / review / inspection

Quality Assessment Methods

• Methods, tools, techniques, processes that can identify the problems
  – Detect and report the problem
  – Measure the quality of the software system
• Three methods of early defect identification
  – Peer review, IIV&V, automated analysis

Peer Review

• Reviews performed by peers in the development team
  – Can range from Fagan’s inspections to simple buddy checks
  – Peer Review Items
  – Participants / Roles
  – Schedule

Defect Removal Profiles

[Figure: defect removal profiles; content not recoverable]
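
Worked example: risk exposure and risk reduction leverage

Returning to the two formulas from the risk management slides, RE = P(L) * S(L) and RRL = (RE before - RE after) / risk reduction cost, the arithmetic can be sketched in a few lines of Python. This is a minimal illustration, not part of the original deck: the `Risk` class and the `risk_reduction_leverage` function are hypothetical names chosen for this sketch, and the numbers are the ones quoted in the COTS and spacecraft examples.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    """One risk item (hypothetical helper type for this sketch)."""
    name: str
    p_loss: float      # P(L): probability of loss, between 0 and 1
    size_loss: float   # S(L): size of loss, in dollars

    @property
    def exposure(self) -> float:
        """Risk exposure RE = P(L) * S(L)."""
        return self.p_loss * self.size_loss


def risk_reduction_leverage(re_before: float, re_after: float, cost: float) -> float:
    """RRL = (RE before - RE after) / risk reduction cost."""
    if cost <= 0:
        raise ValueError("risk reduction cost must be positive")
    return (re_before - re_after) / cost


# COTS example: P(L) = 50%, S(L) = $300K cost overrun + $100K contract penalty.
cots = Risk("A and B cannot talk to one another", p_loss=0.5,
            size_loss=300_000 + 100_000)

# Spacecraft example: loss of $20M, Prob (UO) of 0.2 before either test option.
loss = 20_000_000
re_before = 0.2 * loss
long_duration = risk_reduction_leverage(re_before, 0.05 * loss, 2_000_000)
failure_mode = risk_reduction_leverage(re_before, 0.07 * loss, 260_000)

if __name__ == "__main__":
    print(f"COTS risk exposure: ${cots.exposure:,.0f}")
    print(f"RRL, long duration test: {long_duration:.1f}")
    print(f"RRL, failure mode tests: {failure_mode:.1f}")
```

With the slide values, the failure mode tests have the higher leverage (about 10 versus 1.5), which is the comparison the RRL slide is making: the cheaper option buys more risk reduction per dollar even though it leaves a slightly higher residual exposure.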