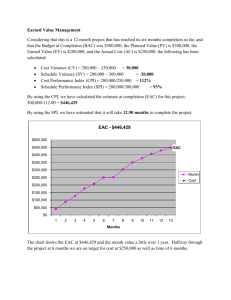

2nd EAC-MCED Dialogue
Megat Johari Megat Mohd Noor
Universiti Teknologi Malaysia International Campus, Kuala Lumpur
22nd February 2010

Topics
- Introduction
- Best Practices
- Concerns
- Causes
- Development
- Involvement
- Feedback
- Conclusion

Introduction

Objectives of Accreditation
- Ensure programmes attain a standard comparable to global practice (pg 1 Sec 1.0 EAC Manual)
- Ensure a CQI culture (pg 1 Sec 1.0 EAC Manual)
- Ensure graduates can register with BEM (pg 1 Sec 2.0 EAC Manual)
- Ensure CQI is practised (pg 1 Sec 2.0 EAC Manual)
- Benchmark engineering programmes (pg 1 Sec 2.0 EAC Manual)

Accreditation Policy
- Focus on outcomes and a developed internal system (pg 4 Sec 5.1 EAC Manual)
- Determine the effectiveness of the quality assurance system (pg 4 Sec 5.1 EAC Manual)
- Compliance with criteria (pg 5 Sec 5.5 EAC Manual)
- Minor shortcoming(s): less than 5 years' accreditation (pg 4 Sec 5.6 EAC Manual)

EAC Focus
- Breadth and depth of curriculum
- Outcome-based approach
- Continual quality improvement
- Quality management system

EAC Criteria
- Program Objectives
- Program Outcomes
- Academic Curriculum
- Students
- Academic & Support Staff
- Facilities
- Quality Management System

Universities' Best Practices

Best Practices – Curriculum
- Extensive stakeholder involvement
- External examiner with adequate TOR
- Balanced curriculum, including assessment: cognitive, psychomotor & affective
- Comprehensive benchmarking (including against WA attributes)
- Serious consideration of students' workload distribution
- Various delivery methods

Best Practices – System
- Systematic approach to demonstrating attainment of program outcomes
- Staff training (awareness) on the outcome-based approach
- Moderation of examination questions to ensure an appropriate level
- Course CQI implemented

Best Practices – System (continued)
- System integrity ensured by committed and dedicated staff
- Constructive leadership
- Comprehensive self-assessment report
- Planned and monitored activities (PDCA)
- Well-documented policies/procedures and traceable evidence
- Certification to ISO 9001/17025, OHSAS 18001

Best Practices – Staff
- Highly qualified academic staff (PhD/PE) with research and industry experience
- Staff professional development and involvement
- Staff training (awareness) on the outcome-based approach
- Research/industry experience that enhances undergraduate teaching
- Academic staff in a related discipline
- Ideal staff:student ratio (1:10 or better)

Best Practices – Students & Facilities
- Awareness programs for students on outcomes
- Remedial classes to bridge basic knowledge gaps
- Current (not obsolete) laboratory equipment in appropriate numbers
- High-end laboratory equipment
- Emphasis on safety

Accreditation Concerns

PEO & PO
- Specialisation at undergraduate level (e.g. BEng [Nanotechnology])
- Stakeholder involvement (e.g. IAP): minimal and/or inappropriate
- Program objectives (PEO): a restatement of program outcomes
- Program outcomes (PO): only cognitive assessment

Curriculum
- Benchmarking: limited to curriculum (virtual)
- No link between engineering courses and specialisation
- Mapping of course outcomes to PEO/PO: not well understood by academic staff
- Delivery method: traditional, not embracing project/problem-based (open-ended) learning

Curriculum (continued)
- Courses devoid of higher cognitive levels
- Team teaching not visible (not involved in planning or summative evaluation)
- Industrial training (exposure): taking up a semester of teaching time and/or conducted last

Assessment & Evaluation
- Assessment types and weightage: favour high grades or facilitate a pass
- Depth (level) of assessment: not visible/appropriate (lack of philosophy)
- Examination questions: not challenging
- Lack of summative evaluation
- Mostly indirect assessment (simplistic direct assessment; grade = outcome)

Staff & Facilities
- Varied understanding of the system (OBE)
- Academic staff: limited professional qualifications/experience (mostly young academics), an issue of planning and recruitment policy
- Inadequate laboratory equipment/space/technicians
- Laboratory safety
- Ergonomics

Quality Management System
- Follow-up actions: slow or not visible
- No monitoring
- Grading system (low passing marks)
- Ad hoc procedures (reactive)
- Financial sustainability
- Incomplete cycle (infancy)

Causes & Development

Causes
- Top management not the driving force (delegation & accountability)
- Academic leadership
- Inadequate staff training or exposure
- Awareness of EAC requirements
- Unclear policy, procedures and/or philosophy
- Understanding of the distinction between engineering & technology

Latest Development
- 3 PEs (or equivalent) per program
- Industrial training during vacation (not taking up the regular semester)
- WA graduate attribute profile: Project Management & Finance
- WA: typically 4-5 years of study, depending on the level of students at entry
- WA (knowledge aspect): engagement with research literature
- Potential merger of European and WA attributes, leading to a requirement for more advanced courses

EAC Professional Development
- Submission to EAC (1-2 days): March 2010
- Outcome-based education (2-3 days): April 2010
- Panel evaluators (3-4 days): May 2010
- Evaluator refresher (1/2-1 day): May 2010
- On-the-job training (accreditation visit)
- Customised workshops/courses
- EAC 1st Summit & Forum, Aug 2010, Kuching

Improvements
- Rejection of an Application for Approval is deferred, and the IHL will be called to discuss resubmission
- The response to the evaluators’ report will require the IHL’s corrective actions in addition to corrections of factual inaccuracies, and will be tabled at the EAC meeting

Involvement

EAC Involvement
- Accreditation
- Recognition
- Mentoring
- Mutual recognition – CTI France
- NABEEA
- IEA (Washington Accord)
- FEIIC (EQAPS)

Universities
- Evaluation Panel
- Joint Committee on Standard
- Local Benchmarking
- Knowledge Sharing (systems)
- Local & International Observers
- EAC/Professional activities
- Interpreting WA graduate attributes
- Industry Sabbatical
- International collaboration (research + academic)

Feedback

Feedback from Universities
Universities: UNIM, UTAR, UTM, IIUM, UNIMAS, UMS, USM, UiTM

Rated Poor (2/5)
- Explanation by Panel chair (UNIM)
- Interview session with lecturers (UNIM, UTM)
- Interview session with students (UNIM)
- Time keeping (UTM, USM)
- Asking relevant questions according to EAC Criteria (IIUM, USM)
- Checking records (USM)
- Commitment and cooperation during visit (IIUM)

Recapitulation from 1st Dialogue
- Not fault finding (need to highlight strengths)
- Sampling may not be representative
- Give adequate time to adjust to changes in the Manual
- Time frame to obtain results
- PE definition to be opened to other professional bodies
- No clear justification for requiring PE (nice to have)
- Appoint suitable and “related discipline” evaluators
- Appoint non-PE academics
- Usurping the power of the senate
- MCED should be given the mandate to nominate academics to EAC
- Spell out the Manual clearly (e.g. benchmarking)
- Assessment of EAC evaluators
- Flexibility of Appendix B
- Local benchmarking
- Response at exit meeting
- Engineering technology vs Engineering

Conclusion
- Great potential in leading engineering education
- Quality & competitive engineering education
- Contributing to greater goals
- Sharing of knowledge and practice
- Systems approach to outcome-based education
- Participative and engaging rather than adversarial
- Professional development
- Facilitating and developmental

Thank you