What do we know about exploratory testing?


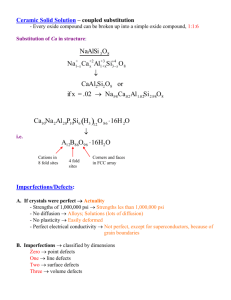

Implications for Practice
kai.petersen@bth.se

Exploratory testing
• Definition
• How it is used in industry
• How it performs in comparison with scripted testing
• Implications for practice

Definitions

Definition
“Exploratory software testing is a style of software testing that emphasizes the personal freedom and responsibility of the individual tester to continually optimize the value of her work by treating test-related learning, test design, test execution, and test result interpretation as mutually supportive activities that run in parallel throughout the project.” (Cem Kaner)

Further definitions
• Simultaneous learning, test design, and test execution (James Bach)
• Any testing to the extent that the tester actively controls the design of the tests as those tests are performed and uses information gained while testing to design new and better tests
• Purposeful wandering: navigation through a space with a general mission, but without a pre-scripted route. Exploration involves continuous learning and experimenting. (Kaner, Bach and Pettichord)

Exploratory testing is not a testing technique; it is a way of thinking about testing. Techniques may be used in an exploratory way or may support it.

Misconceptions
• Common misconception: ET is unstructured and ad hoc, provides no tracking, and has no procedure for reporting defects
• Ways of giving it structure:
  – Test heuristics (guided by project environment, quality criteria, and product elements)
  – Charters (goal, coverage, time frame)
  – Time-boxing
  – Mission

There is a spectrum (cf. Jon Bach)
Figure: a spectrum from freestyle exploratory testing to pure scripted testing, with intermediate points labelled charters, roles, vague scripts, and fragmented test cases.

Let yourself be distracted…
• You never know what you’ll find: explore interesting areas of the “search space”.
• The exploration space is given, for example, by the mission.
• Make sure to go back to your mission periodically.
(Cf. Kaner and Bach)

Industry practice

Survey
• Practitioners in Estonia, Finland, and other countries (61 responses in total)
• The sample is evenly distributed with regard to company size (number of employees)
• Testers are experienced: over 90% have two or more years of experience, and over 40% have five or more
• Primary roles were testers and test managers
• The software tested was mostly usability-critical, performance-critical, and safety-critical

State of practice (1/3)
• 88% of respondents used exploratory testing (a figure likely inflated by self-selection bias)
• Only a few respondents (25%) use a tool; mind-map tools are the most commonly used
• The low tool usage may indicate that (a) tool support is not sufficient or accessible, or (b) practitioners do not feel a need for tool support

State of practice (2/3)
• ET is primarily used for system, integration, acceptance, and usability testing, but also for security testing
• The most frequently implemented practices supporting ET are time-boxing, sessions, mission statements or charters, defect logs, and test logs

State of practice (3/3)
• The most significant benefits: it supports creativity, it makes testing interesting, and it is …
• Only a few negative experiences were reported

ET vs ST (scripted testing) … and the need to experiment

Comparing ET and ST
• Experimental set-up
  – Two treatments: ET and ST
  – Subjects were 24 industry practitioners and 46 M.Sc. students
• Instrumentation
  – Subjects tested an open-source text editor with seeded defects
  – The ST group received a test design document and a test reporting document that restricted their exploration space; they first designed the tests and then executed them
  – The ET group received a test charter and a logging document (Bach’s session-based testing)
• Experimental data
  – True defects (considering difficulty of finding, type, and severity)
  – False defects
• A survey was also conducted

Comparison results (1/2)
• True defects
  – ET detected significantly more defects (mean defect count of 8.342, compared with 1.828 for ST)
  – Within the same time frame, ET was more effective
• Difficulty of defects
  – ET finds a significantly larger number of defects at every difficulty level
  – Relative to its other defects, ET found a higher percentage of hard-to-find defects than ST did

| Difficulty mode | ET | ST |
|---|---|---|
| 0 (easiest) | 25% | 35% |
| 1 | 40% | 44% |
| 2 | 25% | 18% |
| 3 (hardest) | 10% | 3% |

Comparison results (2/2)
• Type of defects
  – Overall, ET found more defects across the defect categories
  – ST revealed a higher percentage of GUI and usability defects, which one would have expected of ET
• Severity of defects
  – ET detects more defects at every severity level
  – Proportionally, more of ET’s defects are severe or critical, while more of ST’s defects are negligible, minor, or normal

| Severity | ET (% distr.) | ST (% distr.) |
|---|---|---|
| Negligible | 4.45 | 14.52 |
| Minor | 16.78 | 19.35 |
| Normal | 33.90 | 40.32 |
| Severe | 36.99 | 22.58 |
| Critical | 7.88 | 3.22 |

Implications
• ET provides interesting results and is powerful on larger parts of the system (integration, acceptance)
• ET is not widely used at the unit testing level
• ET finds different defects, so scripted testing may complement it
• Overall, the empirical results indicate positive outcomes for ET

Proposition: the effectiveness of testing could be increased by combining exploratory and scripted testing.

Hybrid testing (HT) processes
• The advantages of one approach are often the disadvantages of the other
• A hybrid process combining exploratory and scripted testing was developed
• Very early results indicate that:
  – For defect detection efficiency, we should do ET
  – For high functional coverage, we should do HT
  – To get the best of both approaches, we should do HT
  – ET performs best for experienced testers, but worse for less experienced ones
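The ET/ST comparison figures reported above can be cross-checked with a few lines of arithmetic. This is only an illustrative sketch. The names `MEAN_DEFECTS`, `DIFFICULTY`, `SEVERITY`, `defect_ratio`, `hard_share` and `high_severity_share` are hypothetical. The values are copied from the comparison slides. The sketch also assumes that both tables are percentage distributions within each treatment, not absolute defect counts.

```python
# Hypothetical helper data: values copied from the ET vs ST comparison slides.
MEAN_DEFECTS = {"ET": 8.342, "ST": 1.828}

# Share (%) of each treatment's true defects per difficulty mode
# (0 = easiest, 3 = hardest); assumed to be a within-treatment distribution.
DIFFICULTY = {
    "ET": {0: 25, 1: 40, 2: 25, 3: 10},
    "ST": {0: 35, 1: 44, 2: 18, 3: 3},
}

# Share (%) of each treatment's true defects per severity level.
SEVERITY = {
    "ET": {"negligible": 4.45, "minor": 16.78, "normal": 33.90,
           "severe": 36.99, "critical": 7.88},
    "ST": {"negligible": 14.52, "minor": 19.35, "normal": 40.32,
           "severe": 22.58, "critical": 3.22},
}


def defect_ratio(a: str, b: str) -> float:
    """How many times more true defects treatment `a` found on average than `b`."""
    return MEAN_DEFECTS[a] / MEAN_DEFECTS[b]


def hard_share(group: str, min_mode: int = 2) -> int:
    """Percentage of a treatment's defects at difficulty mode `min_mode` or harder."""
    return sum(pct for mode, pct in DIFFICULTY[group].items() if mode >= min_mode)


def high_severity_share(group: str) -> float:
    """Percentage of a treatment's defects rated severe or critical."""
    levels = SEVERITY[group]
    return round(levels["severe"] + levels["critical"], 2)


if __name__ == "__main__":
    print(f"ET/ST mean defect ratio: {defect_ratio('ET', 'ST'):.2f}")
    for group in ("ET", "ST"):
        print(f"{group}: {hard_share(group)}% at modes 2-3, "
              f"{high_severity_share(group):.2f}% severe or critical")
```

Run as a script, it reports the following:

- ET found on average about 4.56 times as many true defects as ST.
- Difficulty modes 2 and 3 account for 35% of ET's defects, versus 21% of ST's.
- Severe and critical defects make up 44.87% of ET's defects, versus 25.80% of ST's.

Because the tables are shares within each treatment, these percentages describe how each group's findings are distributed, not how many defects each group found.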