Question Answering

SIMS 290-2:

Applied Natural Language Processing

Marti Hearst

November 15, 2004

1

Question Answering

Today:

Introduction to QA

A typical full-fledged QA system

A very simple system, in response to this

An intermediate approach

Wednesday:

Using external resources

– WordNet

– Encyclopedias, Gazetteers

Incorporating a reasoning system

Machine Learning of mappings

Other question types (e.g., biography, definitions)

2

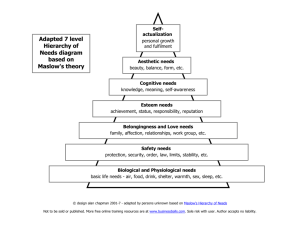

A

of Search Types

Question/Answer

What is the typical height of a giraffe?

Browse and Build

What are some good ideas for landscaping my

client’s yard?

Text Data Mining

What are some promising untried treatments

for Raynaud’s disease?

3

Beyond Document Retrieval

Document Retrieval

Users submit queries corresponding to their information

needs.

System returns (voluminous) list of full-length documents.

It is the responsibility of the users to find information of

interest within the returned documents.

Open-Domain Question Answering (QA)

Users ask questions in natural language.

What is the highest volcano in Europe?

System returns list of short answers.

… Under Mount Etna, the highest volcano in Europe,

perches the fabulous town …

A real use for NLP

Adapted from slide by Surdeanu and Pasca

4

Questions and Answers

What is the height of a typical giraffe?

The result can be a simple answer, extracted from

existing web pages.

Can specify with keywords or a natural language

query

– However, most web search engines are not set up to

handle questions properly.

– Get different results using a question vs. keywords

5

The Problem of Question Answering

When was the San Francisco fire?

… were driven over it. After the ceremonial tie was removed - it burned in the San

Francisco fire of 1906 – historians believe an unknown Chinese worker probably drove

the last steel spike into a wooden tie. If so, it was only…

What is the nationality of Pope John Paul II?

… stabilize the country with its help, the Catholic hierarchy stoutly held out for pluralism,

in large part at the urging of Polish-born Pope John Paul II. When the Pope

emphatically defended the Solidarity trade union during a 1987 tour of the…

Where is the Taj Mahal?

… list of more than 360 cities around the world includes the Great Reef in

Australia, the Taj Mahal in India, Chartre’s Cathedral in France, and

Serengeti National Park in Tanzania. The four sites Japan has listed include…

Adapted from slide by Surdeanu and Pasca

10

The Problem of Question Answering

Natural language question,

not keyword queries

What is the nationality of Pope John Paul II?

… stabilize the country with its help, the Catholic hierarchy stoutly held out for pluralism,

in large part at the urging of Polish-born Pope John Paul II. When the Pope

emphatically defended the Solidarity trade union during a 1987 tour of the…

Short text fragment,

not URL list

Adapted from slide by Surdeanu and Pasca

11

Question Answering from text

With massive collections of full-text documents,

simply finding relevant documents is of limited

use: we want answers

QA: give the user a (short) answer to their

question, perhaps supported by evidence.

An alternative to standard IR

The first problem area in IR where NLP is really

making a difference.

Adapted from slides by Manning, Harabagiu, Kushmerick, and ISI

12

People want to ask questions…

Examples from AltaVista query log

who invented surf music?

how to make stink bombs

where are the snowdens of yesteryear?

which english translation of the bible is used in official catholic

liturgies?

how to do clayart

how to copy psx

how tall is the sears tower?

Examples from Excite query log (12/1999)

how can i find someone in texas

where can i find information on puritan religion?

what are the 7 wonders of the world

how can i eliminate stress

What vacuum cleaner does Consumers Guide recommend

Adapted from slides by Manning, Harabagiu, Kushmerick, and ISI

13

A Brief (Academic) History

In some sense question answering is not a new research area

Question answering systems can be found in many areas of NLP

research, including:

Natural language database systems

– A lot of early NLP work on these

Problem-solving systems

– STUDENT (Bobrow ’64)

– LUNAR (Woods & Kaplan ’77)

Spoken dialog systems

– Currently very active and commercially relevant

The focus on open-domain QA is new

First modern system: MURAX (Kupiec, SIGIR’93):

– Trivial Pursuit questions

– Encyclopedia answers

FAQFinder (Burke et al. ’97)

TREC QA competition (NIST, 1999–present)

Adapted from slides by Manning, Harabagiu, Kushmerick, and ISI

14

AskJeeves

AskJeeves is probably the most hyped example of

“Question answering”

How it used to work:

Do pattern matching to match a question to their

own knowledge base of questions

If a match is found, returns a human-curated answer

to that known question

If that fails, it falls back to regular web search

(Seems to be more of a meta-search engine now)

A potentially interesting middle ground, but a fairly

weak shadow of real QA

Adapted from slides by Manning, Harabagiu, Kushmerick, and ISI

15

Question Answering at TREC

Question answering competition at TREC consists of answering

a set of 500 fact-based questions, e.g.,

“When was Mozart born?”.

Has really pushed the field forward.

The document set

Newswire textual documents from LA Times, San Jose Mercury

News, Wall Street Journal, NY Times etcetera: over 1M documents

now.

Well-formed lexically, syntactically and semantically (were

reviewed by professional editors).

The questions

Hundreds of new questions every year; the total is ~2400

Task

Initially extract at most 5 answers: long (250 bytes) and short (50 bytes).

Now extract only one exact answer.

Several other sub-tasks added later: definition, list, biography.

Adapted from slides by Manning, Harabagiu, Kushmerick, and ISI

16

Sample TREC questions

1. Who is the author of the book, "The Iron Lady: A Biography of

Margaret Thatcher"?

2. What was the monetary value of the Nobel Peace Prize in 1989?

3. What does the Peugeot company manufacture?

4. How much did Mercury spend on advertising in 1993?

5. What is the name of the managing director of Apricot Computer?

6. Why did David Koresh ask the FBI for a word processor?

7. What is the name of the rare neurological disease with

symptoms such as: involuntary movements (tics), swearing,

and incoherent vocalizations (grunts, shouts, etc.)?

17

TREC Scoring

For the first three years systems were allowed to return 5

ranked answer snippets (50/250 bytes) to each question.

Mean Reciprocal Rank Scoring (MRR):

– Each question assigned the reciprocal rank of the first correct

answer. If correct answer at position k, the score is 1/k.

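That is, the score is 1, 0.5, 0.33, 0.25, or 0.2 when the first correct answer is at position 1, 2, 3, 4, or 5, and 0 when no correct answer appears in the top 5.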

Mainly Named Entity answers (person, place, date, …)

From 2002 on, the systems are only allowed to return a single

exact answer and the notion of confidence has been introduced.
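The MRR definition above can be sketched in a few lines of Python. This is only an illustration: the function names and the input format (one list of correct/incorrect judgments per question, in rank order) are assumptions, not part of the TREC tooling.

```python
def reciprocal_rank(judgments, max_rank=5):
    """Return 1/k for the first correct answer at rank k, or 0 if none is in the top max_rank."""
    for k, correct in enumerate(judgments[:max_rank], start=1):
        if correct:
            return 1.0 / k
    return 0.0


def mean_reciprocal_rank(all_judgments, max_rank=5):
    """Average the per-question reciprocal ranks over all questions."""
    if not all_judgments:
        return 0.0
    total = sum(reciprocal_rank(j, max_rank) for j in all_judgments)
    return total / len(all_judgments)


# Three questions: correct at rank 1, correct at rank 2, never correct.
runs = [[True], [False, True], [False, False, False, False, False]]
mrr = mean_reciprocal_rank(runs)
```

A system that always puts a correct answer second would score 0.5, the same as a system that is right at rank 1 on half the questions and never right on the rest.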

18

Top Performing Systems

In 2003, the best performing systems at TREC can answer

approximately 60-70% of the questions

Approaches and successes have varied a fair deal

Knowledge-rich approaches, using a vast array of NLP

techniques stole the show in 2000-2003

– Notably Harabagiu, Moldovan et al. ( SMU/UTD/LCC )

Statistical systems starting to catch up

AskMSR system stressed how much could be achieved by very

simple methods with enough text (and now various copycats)

People are experimenting with machine learning methods

Middle ground is to use large collection of surface matching

patterns (ISI)

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI

19

Example QA System

This system contains many components used by other systems, but is more complex in some ways

Most work completed in 2001; there have been advances by this

group and others since then.

Next slides based mainly on:

Paşca and Harabagiu, High-Performance Question Answering

from Large Text Collections, SIGIR’01.

Paşca and Harabagiu, Answer Mining from Online

Documents, ACL’01.

Harabagiu, Paşca, Maiorano: Experiments with Open-Domain

Textual Question Answering. COLING’00

20

QA Block Architecture

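[Figure: QA block architecture]

Pipeline: Q → Question Processing → Passage Retrieval → Answer Extraction → A

Question Processing: captures the semantics of the question and selects keywords for passage retrieval (PR). Uses WordNet, a parser, and NER. Outputs: question semantics and keywords.

Passage Retrieval: extracts and ranks passages using surface-text techniques. Built on Document Retrieval. Output: passages.

Answer Extraction: extracts and ranks answers using NL techniques. Uses WordNet, a parser, and NER.

Adapted from slide by Surdeanu and Pasca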

21

Question Processing Flow

[Figure: question processing flow]

Input: Q

Steps: question parsing, construction of the question representation, answer type detection, keyword selection

Outputs: question semantic representation, AT category, keywords

Adapted from slide by Surdeanu and Pasca

22

Question Stems and Answer Types

Identify the semantic category of expected answers

| Question | Question stem | Answer type |
|---|---|---|
| Q555: What was the name of Titanic’s captain? | What | Person |
| Q654: What U.S. Government agency registers trademarks? | What | Organization |
| Q162: What is the capital of Kosovo? | What | City |
| Q661: How much does one ton of cement cost? | How much | Quantity |

Other question stems: Who, Which, Name, How hot...

Other answer types: Country, Number, Product...

Adapted from slide by Surdeanu and Pasca

23

Detecting the Expected Answer Type

In some cases, the question stem is sufficient to indicate the

answer type (AT)

Why → REASON

When → DATE

In many cases, the question stem is ambiguous

Examples

– What was the name of Titanic’s captain ?

– What U.S. Government agency registers trademarks?

– What is the capital of Kosovo?

Solution: select additional question concepts (AT words)

that help disambiguate the expected answer type

Examples

– captain

– agency

– capital

Adapted from slide by Surdeanu and Pasca

24

Answer Type Taxonomy

Encodes 8707 English concepts to help recognize expected answer type

Mapping to parts of WordNet done by hand

Can connect to Noun, Adj, and/or Verb subhierarchies

25

Answer Type Detection Algorithm

Select the answer type word from the question representation.

Select the word(s) connected to the question. Some

“content-free” words are skipped (e.g. “name”).

From the previous set select the word with the highest

connectivity in the question representation.

Map the AT word into a previously built AT hierarchy.

The AT hierarchy is based on WordNet, with some concepts associated with semantic categories, e.g. “writer” → PERSON.

Select the AT(s) from the first hypernym(s) associated with a

semantic category.

Adapted from slide by Surdeanu and Pasca
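A minimal sketch of the last two steps (mapping the AT word into the hierarchy and taking the first hypernym that carries a semantic category). The small `HYPERNYM` and `CATEGORY` dictionaries are hand-made stand-ins for the WordNet-based hierarchy, and the function name is hypothetical; choosing the AT word by connectivity in the question representation is not modeled here.

```python
# Toy stand-in for the AT hierarchy: word -> its hypernym (parent concept).
HYPERNYM = {
    "oceanographer": "scientist",
    "chemist": "scientist",
    "researcher": "scientist",
    "scientist": "person",
    "actress": "actor",
    "actor": "performer",
    "performer": "person",
}
# Concepts that were associated by hand with a semantic category.
CATEGORY = {"person": "PERSON"}
# Question stems that determine the answer type on their own.
STEM_TYPES = {"why": "REASON", "when": "DATE"}
# "Content-free" words that are skipped when looking for the AT word.
CONTENT_FREE = {"name"}


def answer_type(stem, at_word_candidates):
    """Return the expected answer type, or None if it cannot be determined."""
    if stem.lower() in STEM_TYPES:
        return STEM_TYPES[stem.lower()]
    for word in at_word_candidates:
        if word in CONTENT_FREE:
            continue
        concept, seen = word, set()
        # Walk up the hypernym chain until a categorized concept is found.
        while concept is not None and concept not in seen:
            if concept in CATEGORY:
                return CATEGORY[concept]
            seen.add(concept)
            concept = HYPERNYM.get(concept)
    return None
```

For “What is the name of the French oceanographer who owned Calypso?”, calling `answer_type("What", ["name", "oceanographer"])` skips “name” and climbs from “oceanographer” to PERSON.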

26

Answer Type Hierarchy

[Figure: fragment of the answer type hierarchy under PERSON]

PERSON

– inhabitant, dweller, denizen: American, westerner, islander (island-dweller)

– scientist, man of science: chemist, researcher, oceanographer

– performer, performing artist: dancer (ballet dancer), actor (tragedian, actress)

Example: “What researcher discovered the vaccine against Hepatitis-B?” The AT word “researcher” maps to PERSON.

Example: “What is the name of the French oceanographer who owned Calypso?” The word “name” is skipped; the AT word “oceanographer” maps to PERSON.

Adapted from slide by Surdeanu and Pasca

27

Evaluation of Answer Type Hierarchy

This evaluation was done in 2001

Controlled the variation of the number of WordNet synsets

included in the answer type hierarchy.

Test on 800 TREC questions.

| Hierarchy coverage | Precision score (50-byte answers) |
|---|---|
| 0% | 0.296 |
| 3% | 0.404 |
| 10% | 0.437 |
| 25% | 0.451 |
| 50% | 0.461 |

The derivation of the answer type is the main source of

unrecoverable errors in the QA system

Adapted from slide by Surdeanu and Pasca

28

Keyword Selection

Answer Type indicates what the question is looking

for, but provides insufficient context to locate the

answer in very large document collection

Lexical terms (keywords) from the question, possibly

expanded with lexical/semantic variations provide the

required context.

Adapted from slide by Surdeanu and Pasca

29

Lexical Terms Extraction

Questions approximated by sets of unrelated words

(lexical terms)

Similar to bag-of-words IR models

| Question (from TREC QA track) | Lexical terms |
|---|---|
| Q002: What was the monetary value of the Nobel Peace Prize in 1989? | monetary, value, Nobel, Peace, Prize |
| Q003: What does the Peugeot company manufacture? | Peugeot, company, manufacture |
| Q004: How much did Mercury spend on advertising in 1993? | Mercury, spend, advertising, 1993 |
| Q005: What is the name of the managing director of Apricot Computer? | name, managing, director, Apricot, Computer |

Adapted from slide by Surdeanu and Pasca

30

Keyword Selection Algorithm

1. Select all non-stopwords in quotations

2. Select all NNP words in recognized named entities

3. Select all complex nominals with their adjectival modifiers

4. Select all other complex nominals

5. Select all nouns with adjectival modifiers

6. Select all other nouns

7. Select all verbs

8. Select the AT word (which was skipped in all previous steps)

Adapted from slide by Surdeanu and Pasca

31

Keyword Selection Examples

What researcher discovered the vaccine against Hepatitis-B?

Hepatitis-B, vaccine, discover, researcher

What is the name of the French oceanographer who owned

Calypso?

Calypso, French, own, oceanographer

What U.S. government agency registers trademarks?

U.S., government, trademarks, register, agency

What is the capital of Kosovo?

Kosovo, capital

Adapted from slide by Surdeanu and Pasca
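The ordering in these examples can be reproduced with a small sketch of the eight-heuristic selection. It assumes each question word has already been tagged, by hand here, with the heuristic that would pick it up; the tag names and the `select_keywords` function are hypothetical, and a real system would derive the tags from a parser and a named entity recognizer.

```python
# Heuristic number for each (hypothetical) tag, following the eight-step algorithm.
PRIORITY = {
    "quoted": 1,
    "named_entity": 2,
    "complex_nominal_adj": 3,
    "complex_nominal": 4,
    "noun_adj": 5,
    "noun": 6,
    "verb": 7,
    "at_word": 8,
}


def select_keywords(tagged_words, max_heuristic=8):
    """Return keywords ordered by heuristic number, then by position in the question."""
    chosen = [
        (PRIORITY[tag], position, word)
        for position, (word, tag) in enumerate(tagged_words)
        if tag in PRIORITY and PRIORITY[tag] <= max_heuristic
    ]
    return [word for _, _, word in sorted(chosen)]


# "What researcher discovered the vaccine against Hepatitis-B?" with hand-assigned tags.
question = [
    ("researcher", "at_word"),
    ("discover", "verb"),
    ("vaccine", "noun"),
    ("Hepatitis-B", "named_entity"),
]
keywords = select_keywords(question)
```

Passing `max_heuristic=6` keeps only the keywords from the first six heuristics, which is the starting point of the passage extraction loop.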

32

Passage Retrieval

[Figure: QA block architecture diagram, repeated as a section marker]

Adapted from slide by Surdeanu and Pasca

33

Passage Extraction Loop

Passage Extraction Component

Extracts passages that contain all selected keywords

Passage size dynamic

Start position dynamic

Passage quality and keyword adjustment

In the first iteration use the first 6 keyword selection

heuristics

If the number of passages is lower than a threshold → the query is too strict → drop a keyword

If the number of passages is higher than a threshold → the query is too relaxed → add a keyword

Adapted from slide by Surdeanu and Pasca
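The loop above can be sketched as follows. This is a simplified illustration, not the original implementation: passages are plain strings, a keyword “matches” by case-insensitive substring test, and the thresholds `low` and `high` and the function name are assumptions.

```python
def passage_loop(keywords, passages, n_initial, low=1, high=3):
    """Adjust how many of the priority-ordered keywords are required until the
    number of matching passages falls between the two thresholds."""
    n = n_initial
    tried = set()
    while True:
        tried.add(n)
        active = keywords[:n]
        hits = [p for p in passages if all(k.lower() in p.lower() for k in active)]
        if len(hits) < low and n > 1 and n - 1 not in tried:
            n -= 1  # query too strict: drop the lowest-priority keyword
        elif len(hits) > high and n < len(keywords) and n + 1 not in tried:
            n += 1  # query too relaxed: add the next keyword
        else:
            return active, hits


passages = [
    "Pristina is the capital of Kosovo.",
    "Kosovo borders Albania.",
    "The capital markets rallied.",
]
active, hits = passage_loop(["Kosovo", "capital", "city"], passages, n_initial=3)
```

The `tried` set is there only to stop the loop from oscillating between two keyword counts when no setting satisfies both thresholds.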

34

Passage Retrieval Architecture

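[Figure: passage retrieval architecture]

Flow: Keywords → Document Retrieval → Documents → Passage Extraction → Passages → Passage Quality check

If the quality check fails (No): Keyword Adjustment changes the keyword set and retrieval is repeated.

If the quality check passes (Yes): Passage Scoring → Passage Ordering → Ranked Passages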

Adapted from slide by Surdeanu and Pasca

35

Passage Scoring

Passages are scored based on keyword windows

For example, if a question has a set of keywords: {k1, k2, k3, k4}, and

in a passage k1 and k2 are matched twice, k3 is matched once, and k4

is not matched, the following windows are built:

[Figure: the keyword matches occur in the passage in the order k1, k2, k2, k3, k1; Windows 1 to 4 each cover a different span of these matches]

Adapted from slide by Surdeanu and Pasca

36

Passage Scoring

Passage ordering is performed using a radix sort that

involves three scores:

SameWordSequenceScore (largest)

– Computes the number of words from the question that

are recognized in the same sequence in the window

DistanceScore (largest)

– The number of words that separate the most distant

keywords in the window

MissingKeywordScore (smallest)

– The number of unmatched keywords in the window

Adapted from slide by Surdeanu and Pasca
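A sketch of the ordering step, under two assumptions: the three scores are already computed per passage, and they are applied in the order listed above (SameWordSequenceScore most significant). A radix sort over three keys yields the same order as a single sort on a tuple key, which is what the sketch uses; the dictionary field names are hypothetical.

```python
def order_passages(windows):
    """Sort so that larger SameWordSequenceScore and DistanceScore come first,
    and a smaller MissingKeywordScore breaks remaining ties."""
    return sorted(
        windows,
        key=lambda w: (
            -w["same_word_sequence"],
            -w["distance"],
            w["missing_keywords"],
        ),
    )


windows = [
    {"id": "p1", "same_word_sequence": 2, "distance": 5, "missing_keywords": 1},
    {"id": "p2", "same_word_sequence": 3, "distance": 4, "missing_keywords": 2},
    {"id": "p3", "same_word_sequence": 3, "distance": 4, "missing_keywords": 0},
]
ranked = [w["id"] for w in order_passages(windows)]
```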

37

Answer Extraction

[Figure: QA block architecture diagram, repeated as a section marker]

Adapted from slide by Surdeanu and Pasca

38

Ranking Candidate Answers

Q066: Name the first private citizen to fly in space.

Answer type: Person

Text passage:

“Among them was Christa McAuliffe, the first private

citizen to fly in space. Karen Allen, best known for her

starring role in “Raiders of the Lost Ark”, plays McAuliffe.

Brian Kerwin is featured as shuttle pilot Mike Smith...”

Best candidate answer: Christa McAuliffe

Adapted from slide by Surdeanu and Pasca

39

Features for Answer Ranking

relNMW: number of question terms matched in the answer passage

relSP: number of question terms matched in the same phrase as the candidate answer

relSS: number of question terms matched in the same sentence as the candidate answer

relFP: flag set to 1 if the candidate answer is followed by a punctuation sign

relOCTW: number of question terms matched, separated from the candidate answer by at most three words and one comma

relSWS: number of terms occurring in the same order in the answer passage as in the question

relDTW: average distance from candidate answer to question term matches

SIGIR ’01

Adapted from slide by Surdeanu and Pasca

40

Answer Ranking based on

Machine Learning

Relative relevance score computed for each pair of

candidates (answer windows)

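$$
\mathrm{rel}_{PAIR} = w_{SWS}\,\mathrm{rel}_{SWS} + w_{FP}\,\mathrm{rel}_{FP} + w_{OCTW}\,\mathrm{rel}_{OCTW} + w_{SP}\,\mathrm{rel}_{SP} + w_{SS}\,\mathrm{rel}_{SS} + w_{NMW}\,\mathrm{rel}_{NMW} + w_{DTW}\,\mathrm{rel}_{DTW} + \mathrm{threshold}
$$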

If relPAIR positive, then first candidate from pair is more

relevant

Perceptron model used to learn the weights

Scores in the 50% MRR range for short answers and in the 60% MRR range for long answers

Adapted from slide by Surdeanu and Pasca
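A rough sketch of pairwise scoring with a perceptron. It assumes that each pair feature is the difference between the two candidates' feature values, which the slide does not state; the function names, the training data, and the learning rate are likewise illustrative.

```python
FEATURES = ["SWS", "FP", "OCTW", "SP", "SS", "NMW", "DTW"]


def rel_pair(first, second, weights, threshold):
    """Linear score for a candidate pair; positive means `first` is preferred."""
    return sum(weights[f] * (first[f] - second[f]) for f in FEATURES) + threshold


def train_perceptron(pairs, epochs=10, lr=1.0):
    """pairs: (first, second, label) with label +1 if first is better, else -1."""
    weights = {f: 0.0 for f in FEATURES}
    threshold = 0.0
    for _ in range(epochs):
        for first, second, label in pairs:
            # Update only on mistakes (standard perceptron rule).
            if label * rel_pair(first, second, weights, threshold) <= 0:
                for f in FEATURES:
                    weights[f] += lr * label * (first[f] - second[f])
                threshold += lr * label
    return weights, threshold


good = {"SWS": 3, "FP": 1, "OCTW": 2, "SP": 1, "SS": 2, "NMW": 3, "DTW": 2.0}
bad = {"SWS": 1, "FP": 0, "OCTW": 0, "SP": 0, "SS": 1, "NMW": 2, "DTW": 6.0}
weights, threshold = train_perceptron([(good, bad, +1), (bad, good, -1)])
```

With learned weights, candidates can be ranked by comparing them pairwise and preferring the first of a pair whenever `rel_pair` is positive.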

41

Evaluation on the Web

- test on 350 questions from TREC (Q250-Q600)

- extract 250-byte answers

| | Google | Answer extraction from Google | AltaVista | Answer extraction from AltaVista |
|---|---|---|---|---|
| Precision score | 0.29 | 0.44 | 0.15 | 0.37 |
| Questions with a correct answer among top 5 returned answers | 0.44 | 0.57 | 0.27 | 0.45 |

Adapted from slide by Surdeanu and Pasca

42

Can we make this simpler?

One reason systems became so complex is that they have to

pick out one sentence within a small collection

The answer is likely to be stated in a hard-to-recognize

manner.

Alternative Idea:

What happens with a much larger collection?

The web is so huge that you’re likely to see the answer

stated in a form similar to the question

Goal: make the simplest possible QA system by exploiting this

redundancy in the web

Use this as a baseline against which to compare more

elaborate systems.

The next slides based on:

– Web Question Answering: Is More Always Better? Dumais, Banko, Brill, Lin,

Ng, SIGIR’02

– An Analysis of the AskMSR Question-Answering System, Brill, Dumais,

and Banko, EMNLP’02.

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI

43

AskMSR System Architecture

[Figure: AskMSR system architecture, with five numbered steps]

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI

44

Step 1: Rewrite the questions

Intuition: The user’s question is often syntactically

quite close to sentences that contain the answer

Where is the Louvre Museum located?

The Louvre Museum is located in Paris

Who created the character of Scrooge?

Charles Dickens created the character of Scrooge.

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI

45

Query rewriting

Classify question into seven categories

Who is/was/are/were…?

When is/did/will/are/were …?

Where is/are/were …?

a. Hand-crafted category-specific transformation rules

e.g.: For where questions, move ‘is’ to all possible locations

Look to the right of the query terms for the answer.

“Where is the Louvre Museum located?” →

“is the Louvre Museum located”

“the is Louvre Museum located”

“the Louvre is Museum located”

“the Louvre Museum is located”

“the Louvre Museum located is”

Some of these are nonsense, but that is OK: it is only a few more queries to the search engine.

b. Expected answer “Datatype” (e.g., Date, Person, Location, …)

When was the French Revolution? → DATE

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI

46

Query Rewriting - weighting

Some query rewrites are more reliable than others.

Where is the Louvre Museum located?

+“the Louvre Museum is located”: Weight 5 (if a match, probably right)

+Louvre +Museum +located: Weight 1 (lots of non-answers could come back too)

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI
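A small sketch of rewriting with weights for a “where” question. It assumes the second word of the question is the verb to move, gives every quoted rewrite the weight 5 and the bag-of-words backoff the weight 1 (the two weights shown in the example), and uses a tiny hypothetical stopword list; the real rule set is hand-crafted per question category.

```python
STOPWORDS = {"the", "a", "an", "of"}


def rewrite_where_question(question):
    """Return (query, weight) pairs: the verb moved to every position, plus a backoff."""
    words = question.rstrip("?").split()
    verb, rest = words[1], words[2:]  # drop the wh-word, keep the verb aside
    rewrites = []
    for i in range(len(rest) + 1):
        phrase = " ".join(rest[:i] + [verb] + rest[i:])
        rewrites.append(('"%s"' % phrase, 5))  # exact-phrase query, more reliable
    backoff = " ".join("+" + w for w in rest if w.lower() not in STOPWORDS)
    rewrites.append((backoff, 1))  # conjunction of content words, less reliable
    return rewrites


rewrites = rewrite_where_question("Where is the Louvre Museum located?")
```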

47

Step 2: Query search engine

Send all rewrites to a Web search engine

Retrieve top N answers (100-200)

For speed, rely just on search engine’s “snippets”, not

the full text of the actual document

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI

48

Step 3: Gathering N-Grams

Enumerate all N-grams (N=1,2,3) in all retrieved snippets

Weight of an n-gram: occurrence count, each weighted by

“reliability” (weight) of rewrite rule that fetched the document

Example: “Who created the character of Scrooge?”

| N-gram | Weight |
|---|---|
| Dickens | 117 |
| Christmas Carol | 78 |
| Charles Dickens | 75 |
| Disney | 72 |
| Carl Banks | 54 |
| A Christmas | 41 |
| Christmas Carol | 45 |
| Uncle | 31 |

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI
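The n-gram gathering step can be sketched as a weighted count. Whitespace tokenization and the `(snippet, rewrite_weight)` input format are simplifying assumptions, and the function name is hypothetical.

```python
from collections import Counter


def gather_ngrams(snippets, max_n=3):
    """snippets: iterable of (text, weight of the rewrite that fetched it).
    Each occurrence of an n-gram adds that weight to the n-gram's score."""
    scores = Counter()
    for text, weight in snippets:
        tokens = text.split()
        for n in range(1, max_n + 1):
            for i in range(len(tokens) - n + 1):
                scores[" ".join(tokens[i:i + n])] += weight
    return scores


snippets = [
    ("Charles Dickens created Scrooge", 5),
    ("Dickens wrote it", 1),
]
scores = gather_ngrams(snippets)
```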

49

Step 4: Filtering N-Grams

Each question type is associated with one or more

“data-type filters” = regular expression

When… → Date

Where… → Location

What …

Who … → Person

Boost score of n-grams that match regexp

Lower score of n-grams that don’t match regexp

Details omitted from paper….

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI
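Since the paper omits the details, the following is only a guess at what a data-type filter could look like. The two regular expressions, the boost and penalty factors, and the function name are all assumptions made for illustration.

```python
import re

# Hypothetical filters: a four-digit year for dates, capitalized words for persons.
DATE_RE = re.compile(r"\b(1[0-9]{3}|20[0-9]{2})\b")
PERSON_RE = re.compile(r"^(?:[A-Z][a-z]+)(?: [A-Z][a-z]+)*$")
FILTERS = {"when": DATE_RE, "who": PERSON_RE}


def filter_ngrams(scores, question_word, boost=2.0, penalty=0.5):
    """Boost n-grams that match the expected data type and lower the others."""
    pattern = FILTERS.get(question_word.lower())
    if pattern is None:
        return dict(scores)  # no filter known for this question type
    return {
        gram: score * (boost if pattern.search(gram) else penalty)
        for gram, score in scores.items()
    }


dates = filter_ngrams({"1789": 10, "the revolution": 10}, "When")
people = filter_ngrams({"Charles Dickens": 4, "created the": 4}, "Who")
```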

50

Step 5: Tiling the Answers

[Figure: tiling example]

N-grams and scores: “Charles Dickens” (20), “Dickens” (15), “Mr Charles” (10)

Tile the highest-scoring n-gram with the n-grams that overlap it: the result is “Mr Charles Dickens” with score 45. The merged old n-grams are discarded.

Repeat, until no more overlap

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI
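A sketch of greedy tiling, assuming that scores are positive, that scores of merged n-grams are summed, and that two n-grams overlap when one contains the other or when the end of one equals the start of the other. The function names are hypothetical, and only the current top candidate is tiled.

```python
def tile(a, b):
    """Merge token lists if b is contained in a, or a's suffix equals b's prefix."""
    if len(b) <= len(a) and any(a[i:i + len(b)] == b for i in range(len(a) - len(b) + 1)):
        return a
    for k in range(min(len(a), len(b)), 0, -1):
        if a[-k:] == b[:k]:
            return a + b[k:]
    return None


def tile_answers(scores):
    """Repeatedly merge the best n-gram with an overlapping one until none overlaps."""
    items = {tuple(g.split()): s for g, s in scores.items()}
    while len(items) > 1:
        best = max(items, key=items.get)
        merged = None
        for other in items:
            if other == best:
                continue
            t = tile(list(best), list(other)) or tile(list(other), list(best))
            if t is not None:
                merged = (tuple(t), other)
                break
        if merged is None:
            break
        new, other = merged
        total = items.pop(best) + items.pop(other)  # discard the old n-grams
        items[new] = items.get(new, 0) + total
    return {" ".join(k): v for k, v in items.items()}


tiled = tile_answers({"Charles Dickens": 20, "Dickens": 15, "Mr Charles": 10})
```

On the three n-grams from the slide, the first pass absorbs “Dickens” into “Charles Dickens” (score 35) and the second pass joins “Mr Charles” in front of it.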

51

Results

Standard TREC contest test-bed (TREC 2001):

~1M documents; 900 questions

Technique doesn’t do too well (though would have

placed in top 9 of ~30 participants)

– MRR: strict: .34

– MRR: lenient: .43

– 9th place

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI

52

Results

From EMNLP’02 paper

MRR of .577; answers 61% of questions correctly

Would be near the top of TREC-9 runs

Breakdown of feature contribution:

53

Issues

Works best/only for “Trivial Pursuit”-style fact-based

questions

Limited/brittle repertoire of

question categories

answer data types/filters

query rewriting rules

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI

54

Intermediate Approach:

Surface pattern discovery

Based on:

Ravichandran, D. and Hovy E.H. Learning Surface Text Patterns for a

Question Answering System, ACL’02

Hovy, et al., Question Answering in Webclopedia, TREC-9, 2000.

Use of Characteristic Phrases

“When was <person> born”

Typical answers

– “Mozart was born in 1756.”

– “Gandhi (1869-1948)...”

Suggests regular expressions to help locate correct answer

– “<NAME> was born in <BIRTHDATE>”

– “<NAME> ( <BIRTHDATE>-”

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI
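The two characteristic phrases translate directly into regular expressions. In this sketch the birth date is assumed to be a four-digit year, and the helper names are hypothetical.

```python
import re


def birthdate_patterns(name):
    """Build regexes for '<NAME> was born in <BIRTHDATE>' and '<NAME> ( <BIRTHDATE>-'."""
    n = re.escape(name)
    return [
        re.compile(n + r" was born in (\d{4})"),
        re.compile(n + r" \(\s*(\d{4})\s*-"),
    ]


def find_birth_year(name, text):
    """Return the first year captured by any pattern, or None."""
    for pattern in birthdate_patterns(name):
        match = pattern.search(text)
        if match:
            return match.group(1)
    return None
```

For example, `find_birth_year("Gandhi", "Gandhi (1869-1948)...")` captures the year through the second pattern.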

55

Use Pattern Learning

Examples:

“The great composer Mozart (1756-1791) achieved

fame at a young age”

“Mozart (1756-1791) was a genius”

“The whole world would always be indebted to the

great music of Mozart (1756-1791)”

The longest substring common to all 3 sentences is “Mozart (1756-1791)”

A suffix tree would extract “Mozart (1756-1791)” as an output, with a score of 3

Reminiscent of IE pattern learning

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI
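A sketch of the idea on the three Mozart sentences. It swaps the suffix tree for a brute-force longest-common-substring search, which is fine for a handful of short sentences but does not scale; the function names and the placeholder substitution step are assumptions.

```python
def longest_common_substring(strings):
    """Brute-force search, longest candidates first, over the shortest string."""
    shortest = min(strings, key=len)
    for length in range(len(shortest), 0, -1):
        for start in range(len(shortest) - length + 1):
            candidate = shortest[start:start + length]
            if all(candidate in s for s in strings):
                return candidate
    return ""


def to_pattern(substring, name, answer):
    """Generalize a matched substring by replacing the known name and answer."""
    return substring.replace(name, "<NAME>").replace(answer, "<ANSWER>")


sentences = [
    "The great composer Mozart (1756-1791) achieved fame at a young age",
    "Mozart (1756-1791) was a genius",
    "The whole world would always be indebted to the great music of Mozart (1756-1791)",
]
common = longest_common_substring(sentences)
pattern = to_pattern(common, "Mozart", "1756")
```

The score of 3 mentioned above corresponds to the number of sentences that contain the extracted substring.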

56

Pattern Learning (cont.)

Repeat with different examples of same question type

“Gandhi 1869”, “Newton 1642”, etc.

Some patterns learned for BIRTHDATE

a. born in <ANSWER>, <NAME>

b. <NAME> was born on <ANSWER> ,

c. <NAME> ( <ANSWER>

d. <NAME> ( <ANSWER> - )

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI

57

QA Typology from ISI

Typology of typical question forms—94 nodes (47 leaf nodes)

Analyzed 17,384 questions (from answers.com)

(THING

((AGENT

(NAME (FEMALE-FIRST-NAME (EVE MARY ...))

(MALE-FIRST-NAME (LAWRENCE SAM ...))))

(COMPANY-NAME (BOEING AMERICAN-EXPRESS))

JESUS ROMANOFF ...)

(ANIMAL-HUMAN (ANIMAL (WOODCHUCK YAK ...))

PERSON)

(ORGANIZATION (SQUADRON DICTATORSHIP ...))

(GROUP-OF-PEOPLE (POSSE CHOIR ...))

(STATE-DISTRICT (TIROL MISSISSIPPI ...))

(CITY (ULAN-BATOR VIENNA ...))

(COUNTRY (SULTANATE ZIMBABWE ...))))

(PLACE

(STATE-DISTRICT (CITY COUNTRY...))

(GEOLOGICAL-FORMATION (STAR CANYON...))

AIRPORT COLLEGE CAPITOL ...)

(ABSTRACT

(LANGUAGE (LETTER-CHARACTER (A B ...)))

(QUANTITY

(NUMERICAL-QUANTITY INFORMATION-QUANTITY

MASS-QUANTITY MONETARY-QUANTITY

TEMPORAL-QUANTITY ENERGY-QUANTITY

TEMPERATURE-QUANTITY ILLUMINATION-QUANTITY

(SPATIAL-QUANTITY

(VOLUME-QUANTITY AREA-QUANTITY DISTANCE-QUANTITY)) ... PERCENTAGE)))

(UNIT

((INFORMATION-UNIT (BIT BYTE ... EXABYTE))

(MASS-UNIT (OUNCE ...)) (ENERGY-UNIT (BTU ...))

(CURRENCY-UNIT (ZLOTY PESO ...))

(TEMPORAL-UNIT (ATTOSECOND ... MILLENNIUM))

(TEMPERATURE-UNIT (FAHRENHEIT KELVIN CELSIUS))

(ILLUMINATION-UNIT (LUX CANDELA))

(SPATIAL-UNIT

((VOLUME-UNIT (DECILITER ...))

(DISTANCE-UNIT (NANOMETER ...))))

(AREA-UNIT (ACRE)) ... PERCENT))

(TANGIBLE-OBJECT

((FOOD (HUMAN-FOOD (FISH CHEESE ...)))

(SUBSTANCE

((LIQUID (LEMONADE GASOLINE BLOOD ...))

(SOLID-SUBSTANCE (MARBLE PAPER ...))

(GAS-FORM-SUBSTANCE (GAS AIR)) ...))

(INSTRUMENT (DRUM DRILL (WEAPON (ARM GUN)) ...)

(BODY-PART (ARM HEART ...))

(MUSICAL-INSTRUMENT (PIANO)))

... *GARMENT *PLANT DISEASE)

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI

58

Experiments

6 different question types from Webclopedia QA Typology

– BIRTHDATE

– LOCATION

– INVENTOR

– DISCOVERER

– DEFINITION

– WHY-FAMOUS

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI

59

Experiments: pattern precision

BIRTHDATE (precision, pattern):

1.0    <NAME> ( <ANSWER> - )

0.85   <NAME> was born on <ANSWER>,

0.6    <NAME> was born in <ANSWER>

0.59   <NAME> was born <ANSWER>

0.53   <ANSWER> <NAME> was born

0.50   - <NAME> ( <ANSWER>

0.36   <NAME> ( <ANSWER> -

INVENTOR (precision, pattern):

1.0    <ANSWER> invents <NAME>

1.0    the <NAME> was invented by <ANSWER>

1.0    <ANSWER> invented the <NAME> in

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI

60

Experiments (cont.)

DISCOVERER (precision, pattern):

1.0    when <ANSWER> discovered <NAME>

1.0    <ANSWER>'s discovery of <NAME>

0.9    <NAME> was discovered by <ANSWER> in

DEFINITION (precision, pattern):

1.0    <NAME> and related <ANSWER>

1.0    form of <ANSWER>, <NAME>

0.94   as <NAME>, <ANSWER> and

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI

61

Experiments (cont.)

WHY-FAMOUS (precision, pattern):

1.0    <ANSWER> <NAME> called

1.0    laureate <ANSWER> <NAME>

0.71   <NAME> is the <ANSWER> of

LOCATION (precision, pattern):

1.0    <ANSWER>'s <NAME>

1.0    regional : <ANSWER> : <NAME>

0.92   near <NAME> in <ANSWER>

Depending on question type, get high MRR (0.6–0.9), with higher results from use of the Web than from the TREC QA collection

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI

62

Shortcomings & Extensions

Need for POS &/or semantic types

– "Where are the Rocky Mountains?”

– "Denver's new airport, topped with white fiberglass

cones in imitation of the Rocky Mountains in the

background , continues to lie empty”

– <NAME> in <ANSWER>

NE tagger &/or ontology could enable system to

determine "background" is not a location

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI

63

Shortcomings... (cont.)

Long distance dependencies

"Where is London?”

"London, which has one of the busiest airports in the

world, lies on the banks of the river Thames”

would require pattern like:

<QUESTION>, (<any_word>)*, lies on <ANSWER>

Abundance & variety of Web data helps system to

find an instance of patterns w/o losing answers to

long distance dependencies

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI

64

Shortcomings... (cont.)

System currently has only one anchor word

Doesn't work for Q types requiring multiple words

from question to be in answer

– "In which county does the city of Long Beach lie?”

– "Long Beach is situated in Los Angeles County”

– required pattern:

<Q_TERM_1> is situated in <ANSWER> <Q_TERM_2>

Does not use case

– "What is a micron?”

– "...a spokesman for Micron, a maker of

semiconductors, said SIMMs are..."

If Micron had been capitalized in the question, this would be a perfect answer

Adapted from slides by Manning, Harabagiu, Kushmerick, ISI

65

Question Answering

Today:

Introduction to QA

A typical full-fledged QA system

A very simple system, in response to this

An intermediate approach

Wednesday:

Using external resources

– WordNet

– Encyclopedias, Gazetteers

Incorporating a reasoning system

Machine Learning of mappings

Alternative question types

66