presentation_Vedran_cmd

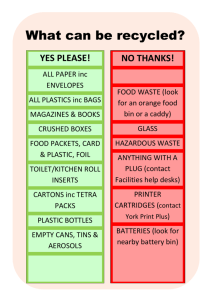

advertisement

Linux / UNIX command examples

(hopefully useful! Warning: I am a biologist!)

Vienna meeting poster session

22 / 10 / 2013

Vedran Bozicevic

Contents

1. Tar command tutorial with 10 practical examples

2. Get a grip on the grep!

3. Mommy, I found it! The Linux find command

4. Advanced sed substitution examples

5. Some powerful awk built-in variables: FS, OFS, RS, ORS, NR, NF, FILENAME, FNR

6. How to record and play in the Vim editor

7. Unix ls command

8. Awesome cd command hacks

9. Unix less command – tips for effective navigation

A short tar command tutorial

$ tar cvzf archive_name.tar.gz dirname/ – create a new (-c) tar gzipped (-z) archive verbosely (-v) with the following (-f) filename

$ tar cvfj archive_name.tar.bz2 dirname/ – do the same, but filter the archive through bzip2 (-j) instead; this takes more time but gives better compression

$ tar xvf archive_name.tar – extract (-x) the files from an archive; add -z for .gz or -j for .bz2 (recent GNU tar versions detect the compression automatically when extracting)

$ tar tvf archive_name.tar – list (-t) the archive contents without extracting; add -z or -j in the same way for .gz and .bz2

$ tar xvf archive_file.tar /path/to/file – extract only a specific file from a large archive (give the name exactly as tar tvf lists it; GNU tar strips a leading “/” when creating archives)

$ tar xvf archive_file.tar /path/to/dir/ – extract a specific directory

$ tar xvf archive_file.tar /path/to/dir1/ /path/to/dir2/ – extract multiple directories

$ tar xvf archive_file.tar --wildcards '*.pl' – extract a group of files using a wildcard (glob) pattern, not a regular expression

$ tar rvf archive_name.tar newfile – append (-r) a file to an existing tar archive; this only works on uncompressed archives

$ tar -cf - /directory/to/archive/ | wc -c – estimate the size of the tar file in bytes before creating it (wc -c counts bytes; divide by 1024 for KB)
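The commands above can be tried safely in a scratch directory. The following is a minimal sketch, assuming GNU tar and gzip are installed; the directory and file names (demo/, notes.txt, script.pl) are made up for illustration.

```shell
#!/bin/bash
# Work in a throwaway directory so no real files are touched.
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
cd "$workdir" || exit 1

# Hypothetical sample data: a text file and a Perl script in a sub-directory.
mkdir -p demo/sub
echo "hello" > demo/notes.txt
printf '%s\n' 'print "hi";' > demo/sub/script.pl

# c = create, z = gzip, f = archive file name.
tar czf demo.tar.gz demo/

# t = list the members without extracting them.
members=$(tar tzf demo.tar.gz | LC_ALL=C sort)
printf '%s\n' "$members"

# Extract a single member, named exactly as it is stored in the archive.
mkdir out
(cd out && tar xzf ../demo.tar.gz demo/notes.txt)
extracted=$(cat out/demo/notes.txt)

# Extract only the members matching a shell wildcard (GNU tar option).
mkdir out2
(cd out2 && tar xzf ../demo.tar.gz --wildcards '*.pl')

# Size in bytes of the uncompressed tar stream, without writing an archive.
size_bytes=$(( $(tar cf - demo/ | wc -c) ))
echo "uncompressed size: $size_bytes bytes"
```

The tar stream is padded to whole 512-byte blocks, so the size estimate is always a multiple of 512 and slightly larger than the data itself.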

Get a grip on the grep!

First, create the following demo_file for the examples below:

$ cat demo_file

THIS LINE IS THE 1ST UPPER CASE LINE IN THIS FILE.

this line is the 1st lower case line in this file.

This Line Has All Its First Character Of The Word With Upper Case.

Two lines above this line is empty.

And this is the last line.

Basic usage: search for lines containing a specific string:

$ grep "this" demo_file

this line is the 1st lower case line in this file.

Two lines above this line is empty.

And this is the last line.


Copy demo_file to demo_file1, then search both files at once using a shell wildcard pattern:

$ cp demo_file demo_file1

$ grep "this" demo_*

demo_file:this line is the 1st lower case line in this file.

demo_file:Two lines above this line is empty.

demo_file:And this is the last line.

demo_file1:this line is the 1st lower case line in this file.

demo_file1:Two lines above this line is empty.

demo_file1:And this is the last line.

Do a case-insensitive (-i) search:

$ grep -i "the" demo_file

THIS LINE IS THE 1ST UPPER CASE LINE IN THIS FILE.

this line is the 1st lower case line in this file.

This Line Has All Its First Character Of The Word With Upper Case.

And this is the last line.


Match a regular expression in files:

$ grep "lines.*empty" demo_file

Two lines above this line is empty.

Check for full words, not for sub-strings (“is” in “This” will NOT match):

$ grep -iw "is" demo_file

THIS LINE IS THE 1ST UPPER CASE LINE IN THIS FILE.

this line is the 1st lower case line in this file.

Two lines above this line is empty.

And this is the last line.

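Highlight the matches in color. Older GNU grep versions read default options from the GREP_OPTIONS variable (and the highlight color from GREP_COLOR):

$ export GREP_OPTIONS='--color=auto' GREP_COLOR='100;8'

Recent GNU grep versions ignore GREP_OPTIONS, so there an alias is the reliable way to turn coloring on:

$ alias grep='grep --color=auto'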

$ grep this demo_file

this line is the 1st lower case line in this file.

Two lines above this line is empty.

And this is the last line.


Search recursively (-r) for the string “vedran” in all files under the current directory and its sub-directories (giving “.” rather than “*” also covers hidden files at the top level):

$ grep -r "vedran" .

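Display the lines that do not contain a given string / pattern (-v). The match is case-sensitive, so the first line, which contains only “FILE”, is printed as well:

$ grep -v "file" demo_file

THIS LINE IS THE 1ST UPPER CASE LINE IN THIS FILE.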

This Line Has All Its First Character Of The Word With Upper Case.

Two lines above this line is empty.

And this is the last line.

The same, only for multiple patterns:

$ cat test-file.txt

a

b

c

d

$ grep -v -e "a" -e "b" -e "c" test-file.txt

d


Count how many lines do NOT match the given pattern / string:

$ grep -v -c "file" demo_file1

4

Use a regular expression as a pattern, but show only the matched string, and not the entire line:

$ grep -o "is.*line" demo_file

is line is the 1st lower case line

is line

is is the last line

Show the byte offset (-b) at which grep matched the pattern in the file; combined with -o, the offset is that of the match itself:

$ cat temp-file.txt

12345

12345

$ grep -o -b "3" temp-file.txt

2:3

8:3
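The grep examples above can be checked in one go with a small script. This is a sketch that recreates demo_file exactly as listed (without the blank line the file's text hints at) in a scratch directory and counts matches with -c instead of printing them.

```shell
#!/bin/bash
# Recreate the demo_file from the examples in a scratch directory.
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
cd "$workdir" || exit 1

printf '%s\n' \
  'THIS LINE IS THE 1ST UPPER CASE LINE IN THIS FILE.' \
  'this line is the 1st lower case line in this file.' \
  'This Line Has All Its First Character Of The Word With Upper Case.' \
  'Two lines above this line is empty.' \
  'And this is the last line.' > demo_file

# Case-sensitive: only lines containing lower-case "this".
plain=$(grep -c "this" demo_file)

# Case-insensitive (-i) search for "the".
ignore_case=$(grep -ic "the" demo_file)

# Whole words only (-w): the "is" inside "This" does not count.
whole_word=$(grep -iwc "is" demo_file)

# Inverted match (-v), counted with -c; "FILE" in upper case does not match "file".
not_file=$(grep -vc "file" demo_file)

# Only the matched text (-o) with its byte offset (-b).
printf '12345\n12345\n' > temp-file.txt
offsets=$(grep -ob "3" temp-file.txt | tr '\n' ' ')

echo "$plain $ignore_case $whole_word $not_file / $offsets"
```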

Mommy, I found it! The Linux find command

As before, let's create some sample files to try the find command examples on:

$ vim create_sample_files.sh

#!/bin/sh

touch MybashProgram.sh

touch mycprogram.c

touch MyCProgram.c

touch Program.c

mkdir backup

cd backup

touch MybashProgram.sh

touch mycprogram.c

touch MyCProgram.c

touch Program.c

$ chmod +x create_sample_files.sh

$ ./create_sample_files.sh

$ ls -R

.:

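backup  create_sample_files.sh  MybashProgram.sh  mycprogram.c  MyCProgram.c  Program.c

./backup:

MybashProgram.sh  mycprogram.c  MyCProgram.c  Program.c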


The basic usage: find all files named “MyCProgram.c”, ignoring case (-iname instead of -name), in the current dir and all of its sub-dirs:

$ find -iname "MyCProgram.c"

./mycprogram.c

./backup/mycprogram.c

./backup/MyCProgram.c

./MyCProgram.c

Limit the search to particular directory levels using the options -mindepth and -maxdepth. The starting directory is depth 0 and its direct entries are depth 1, so the following searches / and at most three levels below it, finding both /etc/passwd (depth 2) and /usr/bin/passwd (depth 3):

$ find / -maxdepth 3 -name passwd

/usr/bin/passwd

/etc/pam.d/passwd

/etc/passwd


Or, find the passwd file only between depths 3 and 5, which skips /etc/passwd (depth 2):

$ find / -mindepth 3 -maxdepth 5 -name passwd

/usr/bin/passwd

/etc/pam.d/passwd

Invert the match with -not: the following shows all the files and dirs in the current dir only (-maxdepth 1) whose names do not match “MyCProgram.c”, ignoring case:

$ find -maxdepth 1 -not -iname "MyCProgram.c"

.

./MybashProgram.sh

./create_sample_files.sh

./backup

./Program.c


Execute a command on each file found using -exec, e.g. calculate the md5sums of all the files named MyCProgram.c, ignoring case. {} is replaced by the current file name, and \; marks the end of the command:

$ find -iname "MyCProgram.c" -exec md5sum {} \;

d41d8cd98f00b204e9800998ecf8427e ./mycprogram.c

d41d8cd98f00b204e9800998ecf8427e ./backup/mycprogram.c

d41d8cd98f00b204e9800998ecf8427e ./backup/MyCProgram.c

d41d8cd98f00b204e9800998ecf8427e ./MyCProgram.c
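The name, depth and inversion examples can be reproduced on the same sample tree. This sketch assumes GNU find and coreutils (md5sum); it builds the tree in a scratch directory instead of running create_sample_files.sh.

```shell
#!/bin/bash
# Recreate the sample tree in a scratch directory.
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
cd "$workdir" || exit 1

touch MybashProgram.sh mycprogram.c MyCProgram.c Program.c
mkdir backup
touch backup/MybashProgram.sh backup/mycprogram.c backup/MyCProgram.c backup/Program.c

# Case-insensitive name match in the whole tree: 2 hits here, 2 in backup/.
iname_hits=$(( $(find . -iname "MyCProgram.c" | wc -l) ))

# The same match limited to the current directory (depth 1).
shallow_hits=$(( $(find . -maxdepth 1 -iname "MyCProgram.c" | wc -l) ))

# Inverted match at depth 1, sorted in byte order for a stable result.
others=$(find . -maxdepth 1 -not -iname "MyCProgram.c" | LC_ALL=C sort | tr '\n' ' ')

# -exec runs md5sum once per matching file; {} is replaced by the file name.
find . -iname "MyCProgram.c" -exec md5sum {} \;

echo "$iname_hits $shallow_hits / $others"
```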

Find the 5 biggest or the 5 smallest files (ls -s prints each file's size in blocks, which sort -n then orders numerically):

$ find . -type f -exec ls -s {} \; | sort -n -r | head -5

$ find . -type f -exec ls -s {} \; | sort -n | head -5

Or files bigger than or smaller than the given size:

$ find ~ -size +100M

$ find ~ -size -100M

Find all non-hidden (-not -name “.*”) empty (-empty) files and directories in the current directory only (-maxdepth 1):

$ find . -maxdepth 1 -empty -not -name ".*"


Use the option -type to restrict the search by file type, e.g. to find all the hidden regular files (-type f):

$ find . -type f -name ".*"

Or all the hidden directories (-type d):

$ find -type d -name ".*"

Or only the regular (-type f) files:

$ find . -type f

Slightly more useful: find all the files modified more recently than the file “ordinary_file” (-newer compares modification times):

$ ls -lrt

total 0

-rw-r----- 1 root root 0 2009-02-19 20:27 others_can_also_read

----r----- 1 root root 0 2009-02-19 20:27 others_can_only_read

-rw------- 1 root root 0 2009-02-19 20:29 ordinary_file

-rw-r--r-- 1 root root 0 2009-02-19 20:30 everybody_read

-rwxrwxrwx 1 root root 0 2009-02-19 20:31 all_for_all

$ find -newer ordinary_file
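Since the slide does not show the output of -newer, here is a sketch that reproduces the timestamps from the listing above with touch -t (POSIX format [[CC]YY]MMDDhhmm) in a scratch directory and checks which files find reports.

```shell
#!/bin/bash
# Scratch directory with files whose modification times are set explicitly.
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
cd "$workdir" || exit 1

# Timestamps mirror the ls -lrt listing (2009-02-19, 20:27 to 20:31).
touch -t 200902192027 others_can_also_read others_can_only_read
touch -t 200902192029 ordinary_file
touch -t 200902192030 everybody_read
touch -t 200902192031 all_for_all

# Regular files (-type f) modified strictly later than ordinary_file.
# -type f also keeps the just-modified directory "." out of the result.
newer=$(find . -type f -newer ordinary_file | LC_ALL=C sort | tr '\n' ' ')
echo "$newer"
```

Without -type f, the current directory "." would usually appear too, because creating the files updated its own modification time.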


You can also create an alias for frequent find operations. For instance, let's create a command called “rmao” to remove the files named “a.out”:

$ alias rmao="find . -iname a.out -exec rm {} \;"

$ rmao

Another example: remove the core dump files (named “core”) left behind by a crashed C program, with the alias “rmc”:

$ alias rmc="find . -iname core -exec rm {} \;"

$ rmc

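Remove all “.zip” files bigger than 100 MB, asking for confirmation (-i) before each deletion; quote the pattern so that the shell passes it to find unexpanded:

$ find / -type f -name "*.zip" -size +100M -exec rm -i {} \;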

Putting it together, let's remove all “.tar” files over 100 MB with the alias “rm100m”; single quotes around the alias definition keep the inner quotes and the \; intact:

$ alias rm100m='find / -type f -name "*.tar" -size +100M -exec rm -i {} \;'

$ rm100m

Or, similarly, remove all the “.zip” files over 2 GB with the alias “rm2g”:

$ alias rm2g='find / -type f -name "*.zip" -size +2G -exec rm -i {} \;'

$ rm2g
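The reason the “*.zip” pattern should be quoted in find commands can be seen safely by printing matches instead of deleting them. In this sketch the files a.zip and sub/b.zip are made up; the unquoted pattern is expanded by the shell before find ever runs.

```shell
#!/bin/bash
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
cd "$workdir" || exit 1

# Hypothetical files: one .zip here, one in a sub-directory.
mkdir sub
touch a.zip sub/b.zip

# Unquoted: the shell expands *.zip to "a.zip" before find runs,
# so find only looks for files named exactly a.zip.
unquoted=$(find . -name *.zip | LC_ALL=C sort | tr '\n' ' ')

# Quoted: find receives the pattern itself and matches both files.
quoted=$(find . -name "*.zip" | LC_ALL=C sort | tr '\n' ' ')

echo "unquoted: $unquoted"
echo "quoted:   $quoted"
```

With two or more .zip files in the current directory, the unquoted form fails outright, since find then receives several words after -name.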


Advanced sed substitution examples

Again, let us create an example file first:

$ cat path.txt

/usr/kbos/bin:/usr/local/bin:/usr/jbin:/usr/bin:/usr/sas/bin

/usr/local/sbin:/sbin:/bin/:/usr/sbin:/usr/bin:/opt/omni/bin:

/opt/omni/lbin:/opt/omni/sbin:/root/bin

People typically use sed for regular-expression substitutions, e.g. to replace path names. However, path names contain a lot of slashes, and the slash is the default delimiter of sed's s command, so every slash in the pattern has to be “escaped” with a backslash. The result is a hard-to-read “picket fence” (\/\/\/), for instance:

$ sed 's/\/opt\/omni\/lbin/\/opt\/tools\/bin/g' path.txt

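Luckily, almost any other character, such as “@”, “%”, “|” or “;”, can be used as the delimiter instead; pick one that does not occur in the pattern (“:” would be a poor choice here, since the paths are separated by colons):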

$ sed 's@/opt/omni/lbin@/opt/tools/bin@g' path.txt

/usr/kbos/bin:/usr/local/bin:/usr/jbin:/usr/bin:/usr/sas/bin

/usr/local/sbin:/sbin:/bin/:/usr/sbin:/usr/bin:/opt/omni/bin:

/opt/tools/bin:/opt/omni/sbin:/root/bin


Grouping can also be used with sed: a group is opened with “(” and closed with “)”. In sed's default basic regular expressions the parentheses must be escaped as “\(” and “\)”; with the -E option (extended regular expressions, supported by both GNU and BSD sed) they need not be. Grouping is usually combined with back-references (\1, \2 and so on), which re-use the text matched by each group. For example, the following reverses the order of the three fields in the last line of the file “path.txt”:

$ sed -E '$s@([^:]*):([^:]*):([^:]*)@\3:\2:\1@g' path.txt

Here, “$” restricts the substitution to the last line, and “@” is the delimiter. The output shows that the order of the path values in the last line has been reversed:

/usr/kbos/bin:/usr/local/bin:/usr/jbin:/usr/bin:/usr/sas/bin

/usr/local/sbin:/sbin:/bin/:/usr/sbin:/usr/bin:/opt/omni/bin:

/root/bin:/opt/omni/sbin:/opt/omni/lbin

This example displays only the first field from the /etc/passwd file:

$ sed 's/\([^:]*\).*/\1/' /etc/passwd

root

bin

daemon

adm

lp


For the following file:

$ cat numbers

1234

12121

3434

123

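This expression (which uses the GNU sed extensions \| and \+) inserts a comma before the last three digits of each number, separating the thousands from the rest. It adds only one comma per number, so a seven-digit number such as 1234567 would become 1234,567: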

$ sed 's/\(^\|[^0-9.]\)\([0-9]\+\)\([0-9]\{3\}\)/\1\2,\3/g' numbers

1,234

12,121

3,434

123
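The single-pass command above adds at most one comma per number. A common way to handle longer numbers is to loop with a label (:a) and a conditional branch (t), repeating the substitution until it no longer matches. This sketch assumes one number per line, as in the “numbers” file; the function name commafy is made up, and the regex uses only basic POSIX syntax.

```shell
#!/bin/bash
# Insert thousands separators into a number of any length, one number per line.
# ":a" defines a label; "ta" jumps back to it whenever the s command succeeded.
# The greedy \(.*[0-9]\) makes each pass add the right-most missing comma.
commafy() {
  sed -e ':a' -e 's/\(.*[0-9]\)\([0-9]\{3\}\)/\1,\2/' -e 'ta'
}

result=$(printf '%s\n' 1234 12121 3434 123 1234567 | commafy | tr '\n' ' ')
echo "$result"
```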

The following example wraps the first letter of every capitalized word in parentheses (\b, a word boundary, is a GNU sed extension):

$ echo "Welcome To The Geek Stuff" | sed 's/\(\b[A-Z]\)/(\1)/g'

(W)elcome (T)o (T)he (G)eek (S)tuff
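The two path.txt substitutions can be checked in a scratch directory with the following sketch. It assumes a sed that supports -E (GNU or BSD sed) and shows the basic-regex form with escaped parentheses next to the extended form.

```shell
#!/bin/bash
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
cd "$workdir" || exit 1

# The path.txt file from the examples.
printf '%s\n' \
  '/usr/kbos/bin:/usr/local/bin:/usr/jbin:/usr/bin:/usr/sas/bin' \
  '/usr/local/sbin:/sbin:/bin/:/usr/sbin:/usr/bin:/opt/omni/bin:' \
  '/opt/omni/lbin:/opt/omni/sbin:/root/bin' > path.txt

# "@" as the delimiter: the slashes in the paths need no escaping.
replaced=$(sed 's@/opt/omni/lbin@/opt/tools/bin@g' path.txt | tail -n 1)

# Extended regex (-E): groups are written as ( ) without backslashes.
# The "$" address limits the substitution to the last line.
reversed=$(sed -E '$s@([^:]*):([^:]*):([^:]*)@\3:\2:\1@' path.txt | tail -n 1)

# Basic regex: the same command with escaped parentheses.
reversed_bre=$(sed '$s@\([^:]*\):\([^:]*\):\([^:]*\)@\3:\2:\1@' path.txt | tail -n 1)

echo "$replaced"
echo "$reversed"
```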

Some powerful awk built-in variables

There are two types of built-in variables in awk: (1) those that define values which can be changed, such as the field and record separators, and (2) those that can be used for processing and reports, such as the number of records or the number of fields.

Awk reads each input line, splits it into fields (by default at runs of whitespace) and stores the fields in the variables $1, $2, $3, etc.

Awk FS is the input field separator: a single character or a regular expression to use instead of the default whitespace. It can be changed any number of times and keeps its value until it is explicitly changed again; a new value takes effect from the next input record.

Here is an awk FS example to read the /etc/passwd file which has “:” as a field delimiter:

$ cat etc_passwd.awk

BEGIN{

FS=":";

print "Name\tUserID\tGroupID\tHomeDirectory";

}

{

print $1"\t"$3"\t"$4"\t"$6;

}

END {

print NR,"Records Processed";

}


If we run the script (-f reads the awk program from a file):

$ awk -f etc_passwd.awk /etc/passwd

...the output should look something like this (the entries depend on your system):

Name     UserID  GroupID  HomeDirectory

gnats    41      41       /var/lib/gnats

libuuid  100     101      /var/lib/libuuid

syslog   101     102      /home/syslog

hplip    103     7        /var/run/hplip

avahi    105     111      /var/run/avahi-daemon

saned    110     116      /home/saned

pulse    111     117      /var/run/pulse

gdm      112     119      /var/lib/gdm

8 Records Processed


Awk OFS is the output equivalent of FS; by default it is a single space character.

In a print statement, each comma between the arguments is replaced in the output by the value of OFS. In the following example, FS is set to “:” with the -F option and OFS to “=”, so the third and fourth fields of /etc/passwd (the user and group IDs) are printed separated by “=” instead of a space:

$ awk -F':' 'BEGIN{OFS="=";} {print $3,$4;}' /etc/passwd

41=41

100=101

101=102

103=7

105=111

110=116

111=117

112=119
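FS, OFS and NR can be tried together on a small, made-up sample in /etc/passwd format, so that the output is predictable. This is a sketch; the two sample lines are hypothetical.

```shell
#!/bin/bash
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
cd "$workdir" || exit 1

# Two made-up lines in /etc/passwd format (name:password:UID:GID:comment:home:shell).
printf '%s\n' \
  'gnats:x:41:41:Gnats Bug-Reporting System:/var/lib/gnats:/bin/sh' \
  'syslog:x:101:102::/home/syslog:/bin/false' > passwd.sample

# FS splits each input line on ":"; OFS replaces every comma in print.
# In the END block, NR holds the total number of records read.
table=$(awk 'BEGIN { FS = ":"; OFS = "\t" }
             { print $1, $3, $4, $6 }
             END { print NR, "Records Processed" }' passwd.sample)
printf '%s\n' "$table"

# The -F option is shorthand for setting FS.
ids=$(awk -F':' 'BEGIN { OFS = "=" } { print $3, $4 }' passwd.sample)
printf '%s\n' "$ids"
```

Note that the empty comment field of the syslog line still counts as a field, so $6 is the home directory on both lines.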


Awk RS is the record separator, i.e. it defines what counts as one record; by default it is a newline, which is why awk reads line by line. As an example, let's take students' marks stored in a file where records are separated by a blank line (two newlines) and the fields of each record by single newlines:

$ cat student.txt

Jones

2143

78

84

Gondrol

2321

56

58

RinRao

2122

38

37


The awk script below prints the student name and roll number from the input file above:

$ cat student.awk

BEGIN {

RS="\n\n";

FS="\n";

}

{

print $1,$2;

}

$ awk -f student.awk student.txt

Jones 2143

Gondrol 2321

RinRao 2122
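A multi-character RS such as "\n\n" works in gawk and mawk, but POSIX leaves its effect unspecified. A portable sketch of the same task uses RS="" (“paragraph mode”), in which blank lines separate records and a newline always separates fields in addition to FS.

```shell
#!/bin/bash
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
cd "$workdir" || exit 1

# student.txt: one field per line, records separated by a blank line.
printf 'Jones\n2143\n78\n84\n\nGondrol\n2321\n56\n58\n\nRinRao\n2122\n38\n37\n' > student.txt

# RS="" selects paragraph mode, so each blank-line-separated block is one record
# and each of its lines is one field.
names=$(awk 'BEGIN { RS = "" } { print $1, $2 }' student.txt)
printf '%s\n' "$names"
```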


Awk ORS is the output equivalent of RS: every record printed is terminated with it (a newline by default). In the following script, each output record from the student-marks file is terminated by the character “=” instead:

$ awk 'BEGIN{ORS="=";} {print;}' student-marks

Jones 2143 78 84 77=Gondrol 2321 56 58 45=RinRao 2122 38 37=Edwin 2537 78 67 45=Dayan 2415 30 47=

Awk NR holds the number of the record currently being processed (by default, the line number). In the END section it therefore holds the total number of records read:

$ awk '{print "Processing Record - ",NR;}END {print NR, "Students Records are processed";}' student-marks

Processing Record - 1

Processing Record - 2

Processing Record - 3

Processing Record - 4

Processing Record - 5

5 Students Records are processed


Awk NF holds the number of fields in the current record, which makes it useful for checking whether all the fields exist in a record. In the following student-marks file, the Test3 score is missing for 2 students, RinRao and Dayan:

$ cat student-marks

Jones 2143 78 84 77

Gondrol 2321 56 58 45

RinRao 2122 38 37

Edwin 2537 78 67 45

Dayan 2415 30 47

The following awk script prints the record (line) number and the number of fields in that record, which makes it easy to spot the records where the Test3 score is missing:

$ awk '{print NR,"->",NF}' student-marks

1 -> 5

2 -> 5

3 -> 4

4 -> 5

5 -> 4
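Instead of printing NF for every record, a pattern can select only the incomplete ones. A minimal sketch, assuming (as in the file above) that a complete record has exactly 5 fields:

```shell
#!/bin/bash
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
cd "$workdir" || exit 1

printf '%s\n' 'Jones 2143 78 84 77' 'Gondrol 2321 56 58 45' 'RinRao 2122 38 37' \
  'Edwin 2537 78 67 45' 'Dayan 2415 30 47' > student-marks

# The pattern NF < 5 selects only records with a missing score.
incomplete=$(awk 'NF < 5 { print NR ": " $1 " has only " NF " fields" }' student-marks)
printf '%s\n' "$incomplete"
```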


When awk reads from multiple input files, NR keeps counting across all of them, while awk FNR gives the record number within the current file, restarting at 1 for each file:

$ awk '{print FILENAME, FNR;}' student-marks bookdetails

student-marks 1

student-marks 2

student-marks 3

student-marks 4

student-marks 5

bookdetails 1

bookdetails 2

bookdetails 3

bookdetails 4

bookdetails 5

Had we printed NR instead, the five records of bookdetails would have been numbered 6 to 10.
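Printing NR and FNR side by side makes the difference visible. This sketch uses two made-up files of different lengths in a scratch directory.

```shell
#!/bin/bash
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
cd "$workdir" || exit 1

# Two hypothetical input files of different lengths.
printf 'a\nb\nc\n' > first.txt
printf 'x\ny\n' > second.txt

# NR counts records across all files; FNR restarts at 1 for each new file.
counts=$(awk '{ print FILENAME, NR, FNR }' first.txt second.txt)
printf '%s\n' "$counts"
```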


The FILENAME variable gives the name of the file being read. In this example, it prints the FILENAME, i.e. student-marks, for each record of the input file:

$ awk '{print FILENAME}' student-marks

student-marks

student-marks

student-marks

student-marks

student-marks

How to record and play in Vim editor

Using the Vim macro feature, you can record a sequence of actions inside the editor and play it back. For example, you can use it to generate a sequence of numbers.

First, open a new file, sequence-test.txt, in which to generate the sequence:

$ vim sequence-test.txt

Next, press Esc and then i to enter insert mode, and type “1.”:

$ vim sequence-test.txt

1.

Now press Esc, then type “q” followed by “a”. Here, “q” starts the recording, and “a” stores it in register “a”. At the bottom of the screen, vim displays the message “recording”.

Next, type “yy” followed by “p”. Here “yy” yanks (copies) the current line, and “p” pastes it below the cursor:

$ vim sequence-test.txt

1.

1.

Now increment the number on the new 2nd line (after “p” the cursor is already on it) by typing “Ctrl-a”, which increments the number under or after the cursor:

$ vim sequence-test.txt

1.

2.


Press “q” to stop the recording. You’ll notice that the recording message at the bottom of the vim editor is now gone.

Now type “98@a” (without spaces). This repeats the macro stored in register “a” 98 times, so the file ends up containing the numbers 1. to 100., one per line.

The mighty UNIX ls command

Unix users and sysadmins cannot live without this two-letter command. Whether you use it 10 or 100 times a day, knowing the power of the ls command can make your command line journey much more enjoyable.

For instance, suppose these are the two text files we edited most recently:

$ vi first-long-file.txt

$ vi second-long-file.txt

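Then the following opens the most recently modified file in the current directory (here “second-long-file.txt”, as long as nothing else has been modified since), because ls -t sorts by modification time, newest first:

$ vi "$(ls -t | head -1)"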
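To see what ls -t | head -1 picks without opening an editor, this sketch gives the two files explicit modification times with touch -t (POSIX format [[CC]YY]MMDDhhmm) in a scratch directory; the timestamps are arbitrary.

```shell
#!/bin/bash
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
cd "$workdir" || exit 1

# Arbitrary, distinct modification times: the second file is one hour newer.
touch -t 202001010900 first-long-file.txt
touch -t 202001011000 second-long-file.txt

# ls -t sorts newest first, so head -n 1 picks the most recently modified entry.
latest=$(ls -t | head -n 1)
echo "$latest"
# In an interactive shell you would then run:  vi "$latest"
```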

To show a single entry per line, use the “-1” option:

$ ls -1

bin

boot

cdrom

dev

etc

home

initrd

initrd.img


The ls -l command shows long listing information about a file/directory. Use ls -lh (“h” stands for “human readable”) to display the file sizes in an easy-to-read format, with suffixes such as K, M and G:

$ ls -l

-rw-r----- 1 ramesh team-dev 9275204 Jun 12 15:27 arch-linux.txt.gz

$ ls -lh

-rw-r----- 1 ramesh team-dev 8.9M Jun 12 15:27 arch-linux.txt.gz

To see the details of a directory itself rather than of its contents, use the “-ld” option:

$ ls -ld /etc

drwxr-xr-x 21 root root 4096 Jun 15 07:02 /etc

To sort the files by modification time, newest first, use “-lt”:

$ ls -lt

total 76

drwxrwxrwt 14 root root 4096 Jun 22 07:36 tmp

drwxr-xr-x 121 root root 4096 Jun 22 07:05 etc

drwxr-xr-x 13 root root 13780 Jun 22 07:04 dev

drwxr-xr-x 13 root root 4096 Jun 20 23:12 root

drwxr-xr-x 12 root root 4096 Jun 18 08:31 home


The “-ltr” option sorts the files in reverse order of modification time, i.e. oldest first:

$ ls -ltr

total 76

drwxr-xr-x 15 root root 4096 Jul 2 2008 var

drwx------ 2 root root 16384 May 17 20:29 lost+found

lrwxrwxrwx 1 root root 11 May 17 20:29 cdrom -> media/cdrom

drwxr-xr-x 2 root root 4096 May 17 21:21 sbin

drwxr-xr-x 12 root root 4096 Jun 18 08:31 home

To show all files, including the hidden ones, use the ‘-a’ option. Hidden files in Unix are those whose names start with ‘.’:

$ ls -a

.

Debian-Info.txt

..

CentOS-Info.txt

.bash_history

Fedora-Info.txt

.bash_logout

.lftp

.bash_profile

libiconv-1.11.tar.tar


To list the files recursively, use the “-R” option (run from /, it lists all the non-hidden files in the whole file system):

$ ls -R /etc/sysconfig/networking

/etc/sysconfig/networking:

devices profiles

/etc/sysconfig/networking/devices:

/etc/sysconfig/networking/profiles:

default

/etc/sysconfig/networking/profiles/default:

Instead of running ‘ls -l’ and checking the first character to determine the type of each file, you can use -F, which appends a different special character for each kind of file:

$ ls -F

Desktop/ Documents/ Ubuntu-App@ firstfile Music/ Public/ Templates/

In the above, “/” marks a directory, “@” a symbolic link, and “*” an executable file.
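All three -F markers can be seen at once in a scratch directory. The names below (Documents, firstfile, runme, link-to-first) are made up for this sketch; LC_ALL=C fixes the sort order so the output is predictable.

```shell
#!/bin/bash
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
cd "$workdir" || exit 1

mkdir Documents                   # directory   -> "/"
touch firstfile                   # plain file  -> no marker
touch runme && chmod +x runme     # executable  -> "*"
ln -s firstfile link-to-first     # symlink     -> "@"

# When the output is piped, ls prints one entry per line.
classified=$(LC_ALL=C ls -F | tr '\n' ' ')
echo "$classified"
```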


Recognizing the file type by the color in which it is displayed is another way of classifying files. With the default GNU ls color scheme, directories are shown in blue, symbolic links in cyan, executables in green, and ordinary files in the default color (the colors can be changed through the LS_COLORS variable):

$ ls --color=auto

Desktop Documents Examples firstfile Music Pictures Public Templates Videos

You can combine frequently used ls options into aliases for easier use, for example:

Long listing with file sizes in human-readable form:

$ alias ll="ls -lh"

Classify the file type by appending special characters.

$ alias lv="ls -F"

Classify the file type by both color and special character.

$ alias ls="ls -F --color=auto"

Some awesome cd command hacks

Hack #1: Use CDPATH to define the base directory for cd command

If you are frequently doing cd to subdirectories of a specific parent directory, you can set the CDPATH to the parent directory and perform cd to the subdirectories without giving the parent directory path:

[ramesh@dev-db ~]$ pwd

/home/ramesh

[ramesh@dev-db ~]$ cd mail

-bash: cd: mail: No such file or directory

[Note: This looks for a mail directory under the current directory]

[ramesh@dev-db ~]$ export CDPATH=/etc

[ramesh@dev-db ~]$ cd mail

[Note: This looks for mail under /etc, not under the current directory]

[ramesh@dev-db /etc/mail]$ pwd

/etc/mail
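CDPATH can also be tried without touching /etc. In this sketch the directory names (projects, alpha) are made up; note that CDPATH is set here without export, so it only affects the current shell.

```shell
#!/bin/bash
base=$(mktemp -d)
trap 'rm -rf "$base"' EXIT

# Hypothetical layout: a parent directory with one project sub-directory.
mkdir -p "$base/projects/alpha"
cd "$base" || exit 1

# Without CDPATH, "cd alpha" only looks in the current directory and fails.
unset CDPATH
if cd alpha 2>/dev/null; then echo "unexpected"; else echo "alpha not found here"; fi

# With CDPATH set, cd also searches $base/projects.
CDPATH="$base/projects"
cd alpha > /dev/null   # cd prints the new directory when it was found via CDPATH
here=$(pwd)
echo "$here"
```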


Hack #2: Use cd alias to navigate up the directory effectively

When you are navigating up a very long directory structure, you may be using cd ../../ with as many ../ as the number of directories you want to go up. Instead of executing cd ../../../.. to navigate four levels up, define aliases:

alias ..="cd .."

alias ..2="cd ../.."

alias ..3="cd ../../.."

alias ..4="cd ../../../.."

alias ..5="cd ../../../../.."

$ cd /tmp/very/long/directory/structure/that/is/too/deep

$ ..4

[Note: use ..4 to go up 4 directory levels]

$ pwd

/tmp/very/long/directory/structure


Hack #3: Perform mkdir and cd using a single command

Sometimes, after creating a new directory, you cd into it immediately to do some work, as shown below:

$ mkdir -p /tmp/subdir1/subdir2/subdir3

$ cd /tmp/subdir1/subdir2/subdir3

$ pwd

/tmp/subdir1/subdir2/subdir3

Wouldn’t it be nice to combine both mkdir and cd in a single command? Add the following function to your ~/.bash_profile and log in again:

$ function mkdircd () { mkdir -p "$@" && eval cd "\"\$$#\""; }

Now, perform both mkdir and cd at the same time using a single command as shown below:

$ mkdircd /tmp/subdir1/subdir2/subdir3

[Note: This creates the directory and cds to it automatically]

$ pwd

/tmp/subdir1/subdir2/subdir3
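The function can be tested in a script before adding it to a profile. This sketch defines the same helper (without the optional function keyword) and passes two made-up directory names, one containing a space: all arguments are created, and the shell ends up in the last one, because eval runs cd on the quoted last positional parameter.

```shell
#!/bin/bash
# Same helper as above: mkdir -p all arguments, then cd into the last one.
# $# expands to the argument count, so eval sees e.g.  cd "$2"
mkdircd () { mkdir -p "$@" && eval cd "\"\$$#\""; }

base=$(mktemp -d)
trap 'rm -rf "$base"' EXIT

# Hypothetical directory names, one with a space in it.
mkdircd "$base/sub one/deeper" "$base/sub two"
here=$(pwd)
echo "$here"
```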


Hack #4: Use “cd -” to toggle between the last two directories

You can toggle between the last two current directories using “cd -”:

$ cd /tmp/very/long/directory/structure/that/is/too/deep

$ cd /tmp/subdir1/subdir2/subdir3

$ cd -

$ pwd

/tmp/very/long/directory/structure/that/is/too/deep

$ cd -

$ pwd

/tmp/subdir1/subdir2/subdir3

$ cd -

$ pwd

/tmp/very/long/directory/structure/that/is/too/deep
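A sketch of the same toggle with two scratch directories: “cd -” changes to the directory stored in $OLDPWD and also prints it, which is why its output is discarded here.

```shell
#!/bin/bash
a=$(mktemp -d)
b=$(mktemp -d)
trap 'rm -rf "$a" "$b"' EXIT

cd "$a" || exit 1
cd "$b" || exit 1

# First toggle: back to $a (the previous directory, stored in $OLDPWD).
cd - > /dev/null
first_toggle=$(pwd)

# Second toggle: forward to $b again.
cd - > /dev/null
second_toggle=$(pwd)

echo "$first_toggle"
echo "$second_toggle"
```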


Hack #5: Use “shopt -s cdspell” to automatically correct mistyped directory names on cd

Use shopt -s cdspell to have bash correct minor typos in the directory names given to cd, as shown below (the option only takes effect in interactive shells). If you are not good at typing and make a lot of mistakes, this will be very helpful:

$ cd /etc/mall

-bash: cd: /etc/mall: No such file or directory

$ shopt -s cdspell

$ cd /etc/mall

$ pwd

/etc/mail

[Note: when I typed mall instead of mail by mistake, cd corrected it automatically]

UNIX less command – tips for effective navigation

I personally prefer to use the “less” command to view files (instead of opening them in an editor). Less is similar to “more”, but it allows both forward and backward movement. Moreover, less doesn't need to load the whole file before displaying it. Try opening a large log file in Vim and in less, and you’ll see the speed difference.

The navigation keys in less are similar to the Vim editor. These few less command navigation and other operations will make you a better command line warrior:

Forward Search

/ – search for a pattern which will take you to the next occurrence.

n – go to the next match (forward)

N – go to the previous match (backward)

Backward Search

? – search for a pattern which will take you to the previous occurrence.

n – go to the next match in the backward direction

N – go to the next match in the forward direction

Tip: If you don't care in which direction the search goes and you want to look for a file path or URL, such as “/home/ramesh/”, use the backward search (?pattern), which saves you from escaping the slashes each time.

Search Path

In forward: /\/home\/ramesh\/

In backward: ?/home/ramesh/


Use the following screen navigation commands while viewing large log files.

CTRL+F – forward one window

CTRL+B – backward one window

CTRL+D – forward half window

CTRL+U – backward half window

In a smaller chunk of data, where you want to locate a particular error, you may want to navigate line by line using these keys:

j – navigate forward by one line

k – navigate backward by one line

The following are other navigation operations that you can use inside the less pager.

G – go to the end of file

g – go to the start of file

q or ZZ – exit the less pager


Once you’ve opened a file with less, any content appended to the file afterwards is not displayed automatically. However, you can press F to make less keep reading new data as it arrives (the status line shows ‘Waiting for data’), similar to ‘tail -f’; press Ctrl-C to stop following.

As with Vim's navigation commands, you can enter 10j to scroll 10 lines down, or 10k to go up by 10 lines. Ctrl-G shows the current file name along with line, byte and percentage statistics.

Other useful Less Command Operations

v – edit the current file in the configured editor (taken from VISUAL or EDITOR)

h – summary of less commands

&pattern – display only the lines matching the pattern

When you are viewing a large log file with less, you can mark a particular position and later return to it using that mark:

ma – mark the current position with the letter ‘a’

'a – go to the position marked ‘a’


Less Command – Multiple file paging

Method 1: You can open multiple files by passing the file names as arguments.

$ less file1 file2

Method 2: While you are viewing file1, use :e to open file2, as shown below:

$ less file1

:e file2

Navigation across files: when you have opened more than one file (e.g. less *), use the following keys to move between them:

:n – go to the next file.

:p – go to the previous file.