Sampling and experimental design

School of Information

University of Michigan


Exercise 4.63 (to help out with problem 1 on PS3)

Pr(domestic abuse) = 1/3, or maybe 1/10

Sample of 15 women; 4 have been abused

If p=1/3, what is Pr(X>=4)?

If p=1/10, what is Pr(X>=4)?

Given evidence from the sample, which abuse rate seems more plausible?

Note: this is a preview of thinking about sampling distributions

Exercise 4.63 (answers)

p = 0.33 (approximately 1/3) or p = 0.1

n = 15 women, x=4

If p=1/3, what is Pr(X>=4)?

> pbinom(3, 15, 0.33, lower.tail = FALSE)  # upper tail: Pr(X > 3) = Pr(X >= 4)

[1] 0.7828694

If p=1/10, what is Pr(X>=4)?

> pbinom(3, 15, 0.1, lower.tail = FALSE)  # upper tail: Pr(X > 3) = Pr(X >= 4)

[1] 0.05555563

Given evidence from the sample, which abuse rate seems more plausible?
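The two R results can be cross-checked by summing the binomial probabilities Pr(X = k) for k = 4, ..., 15 directly. This is a minimal sketch in Python using only the standard library (`math.comb`). `upper_tail` is a hypothetical helper name, not part of any library. It computes the same upper tail as R's `pbinom(3, 15, p, lower.tail = FALSE)`.

```python
from math import comb

def upper_tail(n: int, p: float, x: int) -> float:
    """Pr(X >= x) for X ~ Binomial(n, p), summed term by term."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(x, n + 1))

# Same inputs as the R calls: n = 15 women, at least x = 4 abused
for p in (0.33, 0.1):
    print(p, upper_tail(15, p, 4))
```

The printed values should agree with the R output to the precision R displays. A sample like this one is quite likely when p is about 1/3, but it would happen only about 5.6% of the time if p were 1/10.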

Types of statistical studies

Surveys

Experiments

Observational

Surveys

Subjects fill out questionnaires

Choices:

Random sample

Tends to have the same characteristics as the population

list the whole population

draw random numbers to select a subset (sampling without replacement)

Stratified sampling (quota sampling is a related, non-random variant)

Each subgroup (stratum) of the population has a specified chance of being selected
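The two sampling choices above can be sketched with Python's standard `random` module. This is a minimal illustration, not a survey package. The population of 80 "urban" and 20 "rural" residents is hypothetical, and so are the helper names `simple_random_sample` and `stratified_sample`. The simple random sample draws without replacement from the full list. The stratified sample draws a fixed, specified number of units at random from each subgroup.

```python
import random
from collections import defaultdict

def simple_random_sample(population, n, seed=None):
    """Draw n units without replacement; every subset of size n is equally likely."""
    rng = random.Random(seed)
    return rng.sample(population, n)

def stratified_sample(population, stratum_of, per_stratum, seed=None):
    """Draw a specified number of units at random from each stratum (subgroup)."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for unit in population:
        strata[stratum_of(unit)].append(unit)
    sample = []
    for name, units in strata.items():
        sample.extend(rng.sample(units, per_stratum[name]))
    return sample

# Hypothetical population: 80 urban and 20 rural residents
population = [("urban", i) for i in range(80)] + [("rural", i) for i in range(20)]

srs = simple_random_sample(population, 10, seed=1)
strat = stratified_sample(population, lambda u: u[0], {"urban": 8, "rural": 2}, seed=1)
print(srs)
print(strat)
```

The number of rural residents in a simple random sample varies from draw to draw. The stratified sample always contains exactly the specified 2.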

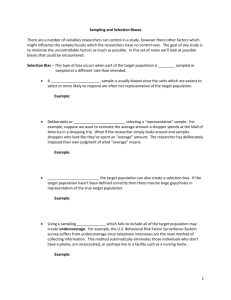

Figure: sampling frame and target population (regions: target population, sampling frame, sampled population, nonresponse, ineligible)

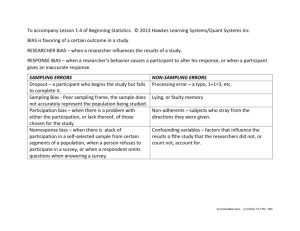

Figure: errors in the survey process (target population → coverage error → sampling frame → sampling error → sample → nonresponse error → respondents)

definitions of non-sampling errors

selection bias – the sampling frame does not correspond to the target population

nonresponse bias – respondents with a certain characteristic are more likely not to fill out a survey (e.g. the US Census undercounts Black Americans relative to other groups)

self-selection – if a survey is mailed out or made available to a wide audience online, those who choose to fill it out may not be representative

e.g. NRA survey

ABC’s online survey of ‘addiction to the internet’
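Nonresponse and self-selection bias both work the same way: the chance of ending up among the respondents depends on the characteristic being measured. A minimal Python sketch makes this concrete. The shares and response rates are hypothetical, chosen only for illustration, and `respondent_share` is a hypothetical helper name.

```python
def respondent_share(trait_share: float, resp_with: float, resp_without: float) -> float:
    """Share of respondents who have the trait when response rates differ by trait."""
    with_trait = trait_share * resp_with            # population share that has the trait and responds
    without_trait = (1 - trait_share) * resp_without  # population share without the trait that responds
    return with_trait / (with_trait + without_trait)

# Hypothetical: 30% have the trait; they respond 60% of the time, everyone else 20%
print(respondent_share(0.30, 0.60, 0.20))  # about 0.56, far above the true 0.30

# With equal response rates there is no bias: the respondent share equals the true share
print(respondent_share(0.30, 0.50, 0.50))
```

Surveying more people does not fix this. With these response rates, the respondent share stays near 0.56 however large the mailing is.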

Kinsey reports

studies of human sexuality

widely used to support the claim that 10% of the male population is homosexual (also an example of a persistent statistic; Kinsey never explicitly made this claim)

criticism:

over-representation of some groups in the sample:

25% were, or had been, prison inmates

5% were male prostitutes.

non-response bias

definitions of non-sampling errors (cont’d)

question effects – differently posed questions can yield measurably different results

e.g. “Poll finds 1 out of 3 Americans Open to Doubt There is a Holocaust”, Los Angeles Times, April 20, 1993

Roper poll: “Does it seem possible or does it seem impossible to you that the Nazi extermination of the Jews never happened?”

22% - possible

12% didn’t know

A year later, a second Roper poll: “Does it seem possible to you that the Nazi extermination of the Jews never happened, or do you feel certain that it happened?”

1% - possible

8% didn’t know

definitions of non-sampling errors (cont’d)

survey format

in-person interview, mail, phone, online

sensitive questions are especially affected

position of a question on the survey

survey length

placement of instructions

interviewer effects

behavioral considerations

subjects may want to give the “right” answer

Family size sampling

How many children are there in your family including you?

data for this class

national average number of kids per family, among families with kids, about 20 years ago: 2.0

difficulty in sampling: what is a family?
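Asking students (children) about their families is one likely reason the class average can differ from the family-level figure. A family with k children can be reached through any of its k children, so large families are over-represented. Below is a minimal Python sketch of this size-biased sampling effect. The distribution of family sizes is hypothetical, chosen so that the family-level mean is 2.0. Exact fractions are used so the arithmetic is visible.

```python
from fractions import Fraction

# Hypothetical share of families (with kids) having k children; shares sum to 1
share = {1: Fraction(30, 100), 2: Fraction(45, 100), 3: Fraction(20, 100), 4: Fraction(5, 100)}

# Average over families: each family counted once
per_family = sum(k * s for k, s in share.items())

# Average over children: a family with k kids is counted once per child, i.e. weighted by k
per_child = sum(k * k * s for k, s in share.items()) / sum(k * s for k, s in share.items())

print(per_family, per_child)  # 2 and 47/20 (2.35)
```

Even with no other error, a class survey from this hypothetical population would average about 2.35 rather than 2.0.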

Telephone survey example

target population: inhabitants of a town

sampling frame: people listed in the phonebook

nonresponse: people who could not be reached or declined to take the survey

sampled population: eligible people in the phonebook who were reached and agreed to take the survey

undersampled: households without a landline

ineligible: people who have moved away

telephone survey sampling strategy

Call a random telephone number

Ask to speak to the person in the household whose birthday falls next

What kind of sampling bias occurs with this strategy?

people in large households underrepresented

young people underrepresented
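The large-household bias can be worked out directly. A person is interviewed only if their household's number is dialed and they are the one member chosen. This minimal Python sketch makes several assumptions: each household has exactly one number, every number is equally likely to be dialed (the 1-in-1000 call probability is hypothetical), and the next-birthday rule acts like a uniform random pick within the household. `person_selection_prob` is a hypothetical helper name.

```python
from fractions import Fraction

def person_selection_prob(household_size: int, call_prob: Fraction) -> Fraction:
    """Chance a given person is interviewed: their household must be called,
    then they must be the one whose birthday falls next (1 in household_size)."""
    return call_prob * Fraction(1, household_size)

call_prob = Fraction(1, 1000)  # hypothetical: each household equally likely to be called
for size in (1, 2, 4):
    print(size, person_selection_prob(size, call_prob))
```

Under these assumptions, a person living alone is four times as likely to be interviewed as a person in a four-person household.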

Phone book experiment

sampling by last name

is it really random?

what are the sources of non-randomness?

two people with the same last name sharing the same phone number

one person having multiple phone lines

combination of the two

worst statistic ever (from Joel Best's Damned Lies and Statistics)

"Every year since 1950, the number of American children gunned down has doubled."

from a 1995 journal article

how do we know this statistic is bad?

exponential growth

assume the # of children gunned down in 1950 is 1

the number of children gunned down in

1951 : 2

1952 :

1953 :

1960 :

1980 :

2000 :
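The blanks follow from the slide's assumption: 1 child in 1950, doubling every year, so the count in a given year is 2^(year − 1950). A minimal Python sketch follows. `doubled_count` is a hypothetical helper name.

```python
# Assumption from the slide: 1 child gunned down in 1950, doubling every year after that
def doubled_count(year: int, base_year: int = 1950, base_count: int = 1) -> int:
    return base_count * 2 ** (year - base_year)

for year in (1951, 1952, 1953, 1960, 1980, 2000):
    print(year, f"{doubled_count(year):,}")
```

By 1980 the count would already pass one billion, far more than the entire US population at that time.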

Figure: number of children gunned down over time, assuming doubling every year since 1950 (x-axis: year, 1950 to 1958)

Figure: number of children gunned down over the first 20 years, assuming doubling every year since 1950 (x-axis: year, from 1950)

Figure: number of kids gunned down under 45 years of exponential growth (x-axis: year, 1950 to 2000)

what the study actually said

The Children's Defense Fund's The State of America's Children Yearbook (1994) does state: "The number of American children killed each year by guns has doubled since 1950."[1]

How does the difference in wording change things?
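Moving "each year" changes the claim from one doubling to dozens of doublings. This minimal Python sketch compares the two readings over the yearbook's period, 1950 to 1994. It assumes steady compound growth for the "doubled once" reading.

```python
years = 1994 - 1950  # period covered by the yearbook's claim

# Reading 1 (what the yearbook said): the annual count doubled once over the whole period,
# which needs only a modest steady growth rate r with (1 + r) ** years == 2
annual_growth_once = 2 ** (1 / years) - 1

# Reading 2 (the misquote): the count doubled every single year
growth_factor_every_year = 2 ** years

print(f"doubled once since 1950: about {annual_growth_once:.1%} growth per year")
print(f"doubled every year: count multiplied by {growth_factor_every_year:,}")
```

The first reading needs roughly 1.6% growth per year. The second multiplies the count by more than 17 trillion.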

Are there phenomena that truly are exponential?

world population and production (source: Federal Reserve Bank of Minneapolis)

the growth of the internet

growth of wikipedia

population projection bias: are we most likely to be alive during the population peak?

a bit of philosophy: anthropic principle

Why are the parameters of the universe fine-tuned exactly the way they are (out of all possible parameters)?

How did everything balance out exactly right to allow for life to exist?

(weak) anthropic principle, a truism:

conditions that are observed in the universe must allow the observer to exist

http://en.wikipedia.org/wiki/Anthropic_principle

Experimentation

Control one variable – measure effect of another

Randomization

taste tester tastes soda in random order

survey questions appear in random order

Blindness

subject does not know which treatment they are receiving

(placebo vs. new medication, or diet vs. regular)

double-blind: experimenter making observation does not know which treatment the subject received

e.g. ESP research
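Randomization and blinding can be sketched with Python's standard `random` module. This is a minimal illustration under stated assumptions, not a trial-management tool. The subjects, treatment labels, soda names, and "kit" codes are all hypothetical. A fixed seed makes the assignment reproducible.

```python
import random

rng = random.Random(42)  # fixed seed so the assignment can be reproduced and audited

# Hypothetical subjects and a balanced set of treatment labels
subjects = [f"subject_{i}" for i in range(1, 9)]
treatments = ["new medication"] * 4 + ["placebo"] * 4

# Random assignment: shuffle the labels, then pair them with subjects
rng.shuffle(treatments)
assignment = dict(zip(subjects, treatments))

# Blinding sketch: each subject's kit carries only a random code;
# the assignment key stays with someone who does not make observations
codes = {s: f"kit_{n:02d}" for n, s in enumerate(rng.sample(subjects, len(subjects)), start=1)}

# Randomized order, e.g. the order in which a taste tester tries the sodas
sodas = ["A", "B", "C", "D"]
tasting_order = rng.sample(sodas, len(sodas))

print(assignment)
print(codes)
print(tasting_order)
```

Shuffling a balanced list of labels keeps the groups the same size. Drawing each subject's treatment independently would let the group sizes drift.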

UN Survey

Please complete the survey individually without consulting your neighbors

Experimentation vs. observation

In an experiment,

the experimenter determines which experimental units receive which treatments

when carefully designed, can be used to establish causation

e.g. the stomach ulcer Nobel Prize (Physiology or Medicine, 2005)

Barry Marshall and Robin Warren conducted a 100-patient study with 65 suffering from gastritis directly due to the presence of the bacteria.

In all the patients with duodenal ulcer and 80 percent of patients with gastric ulcer, the spiral organism H. pylori was present.

Marshall then took the unusual step of using himself as a guinea pig and drank a solution containing the newly discovered bacteria. “I planned to give myself an ulcer, then treat myself, to prove that H. pylori can be a pathogen in normal people,” he explained in one interview. He did not develop an ulcer, but the resulting stomach inflammation was clearly surrounded by the distinctive curved bacteria.

Experimentation vs. observation

In an observational study,

we compare units that happen to have received each of the treatments

useful for identifying possible causes but cannot reliably establish causation

Useful when it would be impractical or unethical to conduct an experiment

e.g. smoking causes lung cancer

observational studies

claims made by test-prep organizations: students taking a test-prep class increase their SAT scores by 100 points on average

is this proof that test-prep classes are the cause of the improvement?

What are possible lurking variables?

Design: compare Before and After scores for a Control group and a Treated group
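The Before/After layout with a control group can be turned into a simple calculation. Subtracting the control group's gain removes effects that would have happened anyway, such as practice from simply retaking the test. This comparison is often called difference-in-differences. All scores in this minimal Python sketch are hypothetical, not real data.

```python
# Hypothetical mean SAT scores (illustration only, not real data)
control = {"before": 1000, "after": 1060}  # retook the test without a prep class
treated = {"before": 1000, "after": 1100}  # took a prep class, then retook the test

treated_gain = treated["after"] - treated["before"]  # 100: the advertised gain
control_gain = control["after"] - control["before"]  # 60: gain from retaking alone
effect_estimate = treated_gain - control_gain         # 40: gain beyond the control group

print(treated_gain, control_gain, effect_estimate)
```

If students choose for themselves whether to take the class, the groups may still differ in motivation or resources. So even this comparison is observational and does not by itself establish causation.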

Freakonomics

which studies were observational?

which studies were experiments?

how did the studies minimize sampling bias?

Freakonomics: studies

bagels

crime stats

parenting

school choice

cheating teachers

sumo wrestlers

real estate agents

summary

3 types of studies

surveys

observational

experiments

in all of them, we have to consider

population

sampling frame

non-response

bias resulting from survey/experimental design and sampling