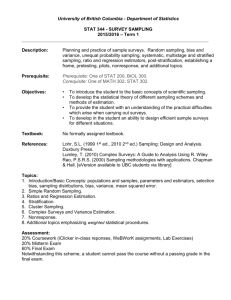

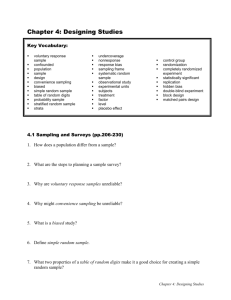

Do We Still Need Probability Sampling in Surveys?
Robert M. Groves
University of Michigan and Joint Program in Survey Methodology, USA

Outline
• The total survey error paradigm in scientific surveys
• The decline in survey participation
• The rise of internet panels
• The “second era” of internet panels
• So... do we need probability sampling?

The Ingredients of Scientific Surveys
• A target population
• A sampling frame
• A sample design and selection
• A set of target constructs
• A measurement process
• Statistical estimation

Deming (1944) “On Errors in Surveys”
• American Sociological Review!
• First listing of sources of problems, beyond sampling, facing surveys

Comments on Deming (1944)
• Includes nonresponse, sampling, interviewer effects, mode effects, various other measurement errors, and processing errors
• Includes nonstatistical notions (auspices)
• Includes estimation step errors (wrong weighting)
• Omits coverage errors
• “total survey error” not used as a term

Sampling Text Treatment of Total Survey Error
• Kish, Survey Sampling, 1965
– 65 of 643 pages on various errors, with specified relationship among errors
– Graphic on biases

[Diagram: Kish's classification of biases]
• Sampling Biases
– Frame biases
– “Consistent” Sampling Bias
– Constant Statistical Bias
• Nonsampling Biases
– Nonobservation: Noncoverage; Nonresponse
– Observation: Field: data collection; Office: processing

Total Survey Error (1979), Anderson, Kasper, Frankel, and Associates
• Empirical studies on nonresponse, measurement, and processing errors for health survey data
• Initial total survey error framework in more elaborated nested structure

[Diagram: Total Error]
• Variable Error
– Sampling
– Nonsampling: Field; Processing
• Bias
– Sampling: Frame; Consistent
– Nonsampling, Nonobservation: Noncoverage; Nonresponse
– Nonsampling, Observation: Field; Processing

Survey Errors and Survey Costs (1989), Groves
• Attempts conceptual linkages between total survey error framework and
– psychometric true score theories
– econometric measurement error and selection bias notions
• Ignores processing error
• Highest conceptual break on variance vs. bias
• Second conceptual break on errors of nonobservation vs. errors of observation

[Diagram: Mean Square Error]
• Variance
– Errors of Nonobservation: Coverage; Nonresponse; Sampling
– Observational Errors: Interviewer; Respondent; Instrument; Mode
• Bias
– Errors of Nonobservation: Coverage; Nonresponse; Sampling
– Observational Errors: Interviewer; Respondent; Instrument; Mode
• Psychometric terms annotated on the diagram: construct validity; theoretical validity; empirical validity; reliability; criterion validity (predictive validity, concurrent validity)

Nonsampling Error in Surveys (1992), Lessler and Kalsbeek
• Evokes “total survey design” more than total survey error
• Omits processing error

[Table: Components of Error, with Topics]
• Frame errors
– Missing elements
– Nonpopulation elements
– Unrecognized multiplicities
– Improper use of clustered frames
• Sampling errors
• Nonresponse errors
– Deterministic vs. stochastic view of nonresponse
– Unit nonresponse
– Item nonresponse
• Measurement errors
– Error models of numeric and categorical data
– Studies with and without special data collections

Introduction to Survey Quality (2003), Biemer and Lyberg
• Major division of sampling and nonsampling error
• Adds “specification error” (a la “construct validity”)
• Formally discusses process quality
• Discusses “fitness for use” as quality definition

[Table: Sources of Error, with Types of Error]
• Specification error: Concepts; Objectives; Data element
• Frame error: Omissions; Erroneous inclusions; Duplications
• Nonresponse error: Whole unit; Within unit; Item; Incomplete information
• Measurement error: Information system; Setting; Mode of data collection; Respondent; Interview; Instrument
• Processing error: Editing; Data entry; Coding; Weighting; Tabulation

Survey Methodology (2004), Groves, Fowler, Couper, Lepkowski, Singer, Tourangeau
• Notes twin inferential processes in surveys
– from a datum reported to the given construct of a sampled unit
– from estimate based on respondents to the target population parameter
• Links inferential steps to error sources

The Total Survey Error Paradigm
[Diagram: two inferential chains that meet at the survey statistic, with the error source attached to each step]
• Measurement: Construct → (Validity) → Measurement → (Measurement Error) → Response → (Processing Error) → Edited Data → Survey Statistic
• Representation: Inferential Population → Target Population → (Coverage Error) → Sampling Frame → (Sampling Error) → Sample → (Nonresponse Error) → Respondents → Survey Statistic

Summary of the Evolution of “Total Survey Error”
• Roots in cautioning against sole attention to sampling error
• Framework contains statistical and nonstatistical notions
• Most statistical attention on variance components, most on measurement error variance
• Late 1970s attention to “total survey design”
• 1980s-1990s attempt to import psychometric notions
• Key omissions in research

5 Myths of Survey Practice that TSE Debunks
1. “Nonresponse rates are everything”
2. “Nonresponse rates don’t matter”
3. Give as many cases to the good interviewers as they can work
4. Postsurvey adjustments eliminate nonresponse error
5. Usual standard errors reflect all sources of instability in estimates (measurement error variance, interviewer variance, etc.)

Response Rates
• In most rich countries response rates on household and organizational surveys are declining
• de Leeuw and de Heer (2002) model a 2 percentage point decline per year
• With a 100% response rate, probability sampling inference is free of nonresponse bias
• Recent studies challenge a simple link between response rates and nonresponse error
• Reading Keeter et al. (2000), Curtin et al. (2000), Merkle and Edelman (2002) suggests response rates don’t matter
• Standard practice urges maximizing response rates
What’s a practitioner to do?

Mismatches between Statistical Expressions for Nonresponse Error and Practice
$$\bar{y}_r = \bar{y}_n + \frac{m}{n}\left(\bar{y}_r - \bar{y}_m\right) \approx \bar{y}_n + \frac{\sigma_{yp}}{\bar{p}}$$
where $\sigma_{yp}$ is the covariance between the survey variable, $y$, and the response propensity, $p$; $\bar{y}_r$, $\bar{y}_m$, and $\bar{y}_n$ are the respondent, nonrespondent, and full sample means; $m$ is the number of nonrespondents; $n$ is the full sample size; and $\bar{p}$ is the mean response propensity

What does the Stochastic View of Response Propensity Imply?
• Key issue is whether the influences on survey participation are shared with the influences on the survey variables
• Increased nonresponse rates do not necessarily imply increased nonresponse error
• Hence, investigations are necessary to discover whether the estimates of interest might be subject to nonresponse errors

Assembly of Prior Studies of Nonresponse Bias
• Search of peer-reviewed and other publications
• 47 articles reporting 59 studies
• About 959 separate estimates (566 percentages)
– mean nonresponse rate is 36%
– mean bias is 8% of the full sample estimate
• We treat this as 959 observations, weighted by sample sizes, multiply-imputed for item missing data, with standard errors reflecting clustering into 59 studies and imputation variance

Percentage Absolute Relative Bias
$$100 \times \left| \frac{\bar{y}_r - \bar{y}_n}{\bar{y}_n} \right|$$
where $\bar{y}_r$ is the unadjusted respondent mean and $\bar{y}_n$ is the unadjusted full sample mean

Percentage Absolute Relative Nonresponse Bias by Nonresponse Rate for 959 Estimates from 59 Studies
[Figure: scatterplot; y-axis: Percentage Absolute Relative Bias (0 to 100); x-axis: Nonresponse Rate (0 to 80)]

1. Nonresponse Bias Happens
[Figure: same scatterplot of Percentage Absolute Relative Bias against Nonresponse Rate]

2. Large Variation in Nonresponse Bias Across Estimates Within the Same Survey, or
[Figure: same scatterplot of Percentage Absolute Relative Bias against Nonresponse Rate]

3. The Nonresponse Rate of a Survey is a Poor Predictor of the Bias of its Various Estimates (Naïve OLS, R² = .04)
[Figure: scatterplot; y-axis: Percentage Absolute Relative Bias of Respondent Mean (0 to 100); x-axis: Nonresponse Rate (0 to 80)]

Conclusions
• It’s not that nonresponse error doesn’t exist
• It’s that nonresponse rates aren’t good predictors of nonresponse error
• We need auxiliary variables to help us gauge nonresponse error

A Practical Question
“What attraction does a probability sample have for representing a target population if its nonresponse rate is very high and its respondent count is lower than equally costly nonprobability surveys?”

A “Solution” to Response Rate Woes
• Web surveys offer a very different cost structure than telephone and face-to-face surveys
– Almost all fixed costs
– Very fast data collection
• But there is no sampling frame
– Often probability sampling from large volunteer groups
• Internet access varies across and within countries

Access/Volunteer Internet Panels
• Massive change in US commercial survey practice, moving from telephone and mail paper questionnaires to web surveys
• Survey Sampling, a major supplier of telephone samples over the past two decades, now reports that 80% of their business is web panel samples
• Some businesses do only web survey measurement

The Method
• Recruitment of email IDs from internet users
– At survey organization’s web site
– Through pop-ups or banners on others’ sites
– Through third party vendors
• A June 15, 2008, Google search of “make money doing surveys” yields 19,300 hits
– “make $10 in 5 minutes” www.SurveyMonster.com

There is a new industry
– Greenfield Online
– Survey Sampling
– e-Rewards
– Lightspeed
– ePocrates
– Knowledge Networks
– Private company panels
– Proprietary panels
[Figure: “U.S. Online MR Spending”; y-axis: Millions ($0 to $1,800); x-axis: years 1997 to 2007E. Source: Inside Research, 2007, in Baker, 2008]

Reward Systems Vary
• Payment per survey
• Points per survey, yielding eligibility for rewards
• Points for sweepstakes

Adjustment in Estimation
• Estimation usually involves adjustment to some population totals
• Some firms have propensity model-based adjustments
– “proprietary estimation systems” abound

September, 2007, Respondent Quality Summit
• Head of Procter & Gamble market research
1. Cites Comscore: 0.25% of internet users responsible for 30% of responses to internet panels
2. Cites average number of panel memberships of respondents of 5-8
3. Presents examples of failure to predict behaviors

The number of surveys taken matters.
[Figure: bar chart of Like Product, Intend to Buy, and Expected Purchase Frequency by number of surveys taken (1-3 Surveys, 4-19 Surveys, 20+ Surveys); y-axis: 0% to 90%; data labels, whose assignment to bars is not recoverable: 78%, 73%, 66%, 60%, 51%, 46%, 43%, 38%, 33%. Source: Coen et al., 2005 in Baker, 2008]

The Practical Indicators of “Quality”
• Cheating on qualifying questions
• Internal inconsistencies
• Overly fast completion
• “Straightlining” in grids
• Gibberish or duplicated open end responses
• Failure of “verification” items in grids
• Selection of bogus or low-probability answers
• Non-comparability of results with non-panel sample
Baker, 2008

Panel response rates are in decline as panelists do more surveys.
[Figure: bar chart for panels Web1 to Web4 with two series, More than 15 Surveys and Response Rate; y-axis: 0% to 80%; data labels, whose assignment to bars is not recoverable: 69%, 59%, 61%, 54%, 18%, 20%, 11%, 5%. Source: MSI, 2005 in Baker, 2008]

Where are we now?
• An industry in turmoil
• Active study of correlates of low quality conducted by sophisticated clients
• Professional associations attempting to define quality indicators

Access Panels and Inference
• Access panels have conjoined frame development and sample selection
• Without documentation of the frame development, assessment of coverage properties is not tractable
• Many use probability sampling from the volunteer set, but ignore this in estimation

A Better Question
• Not “do we still need probability sampling?” but “can we develop good sampling frames with rich auxiliary variables?”
[Diagram: two parallel inference chains, each running Target Population → Sampling Frame → Sample → Respondents, with the links labeled “Model-assisted”, “Randomization theory”, or “?”]

The Value of Probability Sampling From Well-defined Frames
• Randomization theory is the powerful linking tool between the sample and the frame
• Models of nonresponse adjustment are enhanced by auxiliary variables measured on respondents and nonrespondents

The Role of Probability Sampling in this Context
• Probability sampling has low marginal costs within a defined sampling frame
• Probability sampling offers stratification benefits
• A sampling frame with rich auxiliary variables can improve stratification effects
Access panels should strive for well-defined frame development

Speculation
• As adjustment for nonresponse becomes more important,
– Richness of auxiliary variables is primary
– Coverage of population becomes relatively less important
• Hence, frame data and field observations on nonrespondents and respondents are valued
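
A numerical illustration
The stochastic view of response propensity discussed earlier in the talk can be checked on a toy population. The sketch below is not from the slides: the data values and function names are hypothetical, and it assumes the respondent mean can be approximated by the propensity-weighted mean of y. Under that assumption the bias equals the covariance between y and p divided by the mean propensity, so two surveys with the same nonresponse rate can carry very different bias.

```python
from statistics import fmean


def expected_respondent_mean(y, p):
    """Propensity-weighted mean of y (assumed approximation of the respondent mean)."""
    return sum(yi * pi for yi, pi in zip(y, p)) / sum(p)


def propensity_bias(y, p):
    """Population covariance of y and p, divided by the mean propensity."""
    y_bar, p_bar = fmean(y), fmean(p)
    cov_yp = fmean([(yi - y_bar) * (pi - p_bar) for yi, pi in zip(y, p)])
    return cov_yp / p_bar


def pct_abs_relative_bias(y_r, y_n):
    """100 * |(respondent mean - full sample mean) / full sample mean|."""
    return 100 * abs((y_r - y_n) / y_n)


# Hypothetical population of four units; both propensity vectors average 0.5,
# i.e. the same expected nonresponse rate of 50%.
y = [10.0, 20.0, 30.0, 40.0]
p_related = [0.2, 0.4, 0.6, 0.8]    # propensity rises with y
p_unrelated = [0.5, 0.5, 0.5, 0.5]  # propensity unrelated to y

for label, p in [("related", p_related), ("unrelated", p_unrelated)]:
    y_r = expected_respondent_mean(y, p)
    print(label,
          round(y_r - fmean(y), 6),                    # bias computed directly
          round(propensity_bias(y, p), 6),             # bias via covariance form
          round(pct_abs_relative_bias(y_r, fmean(y)), 6))
```

In the first case the propensity-weighted mean overstates the full mean; in the second, with identical mean propensity, the bias vanishes, which is the sense in which the nonresponse rate alone is a poor predictor of bias.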