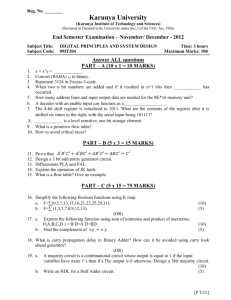

Optimizing Matrix Multiply

advertisement

CS294, Lecture #3

Fall, 2011

Communication-Avoiding Algorithms

www.cs.berkeley.edu/~odedsc/CS294

How to Compute and Prove

Lower and Upper Bounds

on the

Communication Costs

of Your Algorithm

Part II: Geometric embedding

Oded Schwartz

Based on:

D. Irony, S. Toledo, and A. Tiskin:

Communication lower bounds for distributed-memory matrix multiplication.

G. Ballard, J. Demmel, O. Holtz, and O. Schwartz:

Minimizing communication in linear algebra.

Last time: the models

Two kinds of costs:

Arithmetic (FLOPs)

Communication: moving data between

•

•

levels of a memory hierarchy (sequential case)

over a network connecting processors (parallel case)

Sequential

Hierarchy

CPU

Cache

M1

M2

Parallel

CPU

RAM

CPU

RAM

CPU

RAM

CPU

RAM

M3

RAM

Mk =

2

Last time: Communication Lower Bounds

Proving that your algorithm/implementation is as good as it gets.

Approaches:

1. Reduction

[Ballard, Demmel, Holtz, S. 2009]

2. Geometric Embedding

[Irony,Toledo,Tiskin 04],

[Ballard, Demmel, Holtz, S. 2011a]

3. Graph Analysis

[Hong & Kung 81],

[Ballard, Demmel, Holtz, S. 2011b]

3

Last time: Lower bounds for matrix multiplication

Bandwidth:

[Hong & Kung 81]

• Sequential

n 3

M

M

[Irony,Toledo,Tiskin 04]

• Sequential and parallel

n 3 M

M P

Latency:

Divide by M.

4

Last time: Reduction

(1st approach)

[Ballard, Demmel, Holtz, S. 2009a]

Thm:

Cholesky and LU decompositions are

(communication-wise) as hard as matrix-multiplication

Proof:

By a reduction (from matrix-multiplication) that

preserves communication bandwidth, latency, and arithmetic.

Cor:

Any classical O(n3) algorithm for Cholesky and LU decomposition

requires:

Bandwidth: (n3 / M1/2)

Latency:

(n3 / M3/2)

(similar cor. for the parallel model).

5

Today: Communication Lower Bounds

Proving that your algorithm/implementation is as good as it gets.

Approaches:

1. Reduction

[Ballard, Demmel, Holtz, S. 2009]

2. Geometric Embedding

[Irony,Toledo,Tiskin 04],

[Ballard, Demmel, Holtz, S. 2011a]

3. Graph Analysis

[Hong & Kung 81],

[Ballard, Demmel, Holtz, S. 2011b]

6

Lower bounds: for matrix multiplication

using geometric embedding

[Hong & Kung 81]

• Sequential

n 3

M

M

[Irony,Toledo,Tiskin 04]

• Sequential and parallel

n 3 M

M P

Now: prove both, using the geometric embedding approach

of [Irony,Toledo,Tiskin 04].

7

Geometric Embedding (2nd approach)

[Irony,Toledo,Tiskin 04], based on [Loomis & Whitney 49]

Matrix multiplication form:

(i,j) n x n,

C(i,j) = k A(i,k) B(k,j),

Thm: If an algorithm agrees with this form

(regardless of the order of computation) then

BW = (n3/ M1/2)

BW = (n3/ PM1/2)

in P-parallel model.

8

Geometric Embedding (2nd approach)

[Irony,Toledo,Tiskin 04], based on [Loomis & Whitney 49]

Read

Read

S1

Read

FLOP

For a given run (algorithm, machine, input)

1. Partition computations into segments

of M reads / writes

M

Write

FLOP

S2

2. Any segment S has 3M inputs/outputs.

Read

Read

3. Show that #multiplications in S k

FLOP

FLOP

FLOP

S3

Write

4. The total communication BW is

BW = BW of one segment #segments

M #mults / k

FLOP

...

Write

...

Time

Read

Example of a partition,

M=3

9

Geometric Embedding (2nd approach)

[Irony,Toledo,Tiskin 04], based on [Loomis & Whitney 49]

Matrix multiplication form:

(i,j) n x n, C(i,j) = k A(i,k)B(k,j),

“C shadow”

x

y

C

A B

C

A B

z

V

V

y

z

x

“A shadow”

Volume of box

V = x·y·z

= ( xz · zy · yx)1/2

Thm: (Loomis & Whitney, 1949)

Volume of 3D set

V ≤ (area(A shadow)

· area(B shadow)

· area(C shadow) ) 1/2

10

Geometric Embedding (2nd approach)

[Irony,Toledo,Tiskin 04], based on [Loomis & Whitney 49]

Read

Read

S1

Read

FLOP

For a given run (algorithm, machine, input)

1. Partition computations into segments

of M reads / writes

M

Write

FLOP

S2

2. Any segment S has 3M inputs/outputs.

Read

Read

3. Show that #multiplications in S k

FLOP

FLOP

FLOP

S3

Write

4. The total communication BW is

BW = BW of one segment #segments

M #mults / k = M n3 / k

FLOP

...

Write

...

Time

Read

Example of a partition,

M=3

5. By Loomis-Whitney:

BW M n3 / (3M)3/2

11

From Sequential Lower bound

to Parallel Lower Bound

We showed:

Any classical O(n3) algorithm for matrix multiplication on

sequential model requires:

Bandwidth: (n3 / M1/2)

Latency:

(n3 / M3/2)

Cor:

Any classical O(n3) algorithm for matrix multiplication

on P-processors machine

(with balanced workload) requires:

2D-layout: M=O(n2/P)

Bandwidth: (n3 /PM1/2)

(n2/P1/2)

Latency:

(n3 / PM3/2) (P1/2)

12

From Sequential Lower bound

to Parallel Lower Bound

“C shadow”

C

A B

Proof:

Observe one processor.

Is it always true?

Let Alg be an algorithm

with communication lower bound B = B(n,M).

“A shadow”

?

Then any parallel implementation of Alg has a

communication lower bound B’(n, M, p) = B(n, M)/p

13

Proof of Loomis-Whitney inequality

T(x=i | y)

• Nx = |Tx| = #squares in Tx, same for Ty, Ny, etc

• Goal: N ≤ (Nx · Ny · Nz)1/2

•

•

•

•

T(x=i) = subset of T with x=i

T(x=i | y ) = projection of T(x=i) onto y=0 plane

N(x=i) = |T(x=i)| etc

N = i N(x=i) = i (N(x=i))1/2 · (N(x=i))1/2

≤ i (Nx)1/2 · (N(x=i))1/2

≤ (Nx)1/2 · i (N(x=i | y ) · N(x=i | z ) )1/2

= (Nx)1/2 · i (N(x=i | y ) )1/2 · (N(x=i | z ) )1/2

≤ (Nx)1/2 · (i N(x=i | y ) )1/2 · (i N(x=i | z ) )1/2

= (Nx)1/2 · (Ny)1/2 · (Nz)1/2

y

T

x

x=i

T(x=i)

N(x=i) N(x=i|y) ·N(x=i|z)

T(x=i)

N(x=i|z)

• T = 3D set of 1x1x1 cubes on lattice

• N = |T| = #cubes

• Tx = projection of T onto x=0 plane

z

N(x=i|y)

14

Communication Lower Bounds

Proving that your algorithm/implementation is as good as it gets.

Approaches:

1. Reduction

[Ballard, Demmel, Holtz, S. 2009]

2. Geometric Embedding

[Irony,Toledo,Tiskin 04],

[Ballard, Demmel, Holtz, S. 2011a]

3. Graph Analysis

[Hong & Kung 81],

[Ballard, Demmel, Holtz, S. 2011b]

15

How to generalize this lower bound

Matrix multiplication form:

(i,j) n x n, C(i,j) = k A(i,k)B(k,j),

(1) Generalized form:

(i,j) S,

C(i,j) = fij( gi,j,k1 (A(i,k1), B(k1,j)),

gi,j,k2 (A(i,k2), B(k2,j)),

…,

k1,k2,… Sij

other arguments)

• C(i,j) any unique memory location.

Same for A(i,k) and B(k,j). A,B and C may overlap.

• Lower bound for all reorderings. Incorrect ones too.

• It does assume each operand generate load/store.

•

•

Turns out QR, eig, SVD all may do this

Need a different analysis. Not today…

• fij and gijk are “nontrivial” functions

16

Geometric Embedding (2nd approach)

(1) Generalized form:

(i,j) S,

C(i,j) = fij( gi,j,k1 (A(i,k1), B(k1,j)),

gi,j,k2 (A(i,k2), B(k2,j)),

…,

k1,k2,… Sij

other arguments)

Thm: [Ballard, Demmel, Holtz, S. 2011a]

If an algorithm agrees with the generalized form then

BW = (G/ M1/2)

where G = |{g(i,j,k) | (i,j) S, k Sij }

BW = (G/ PM1/2)

in P-parallel model.

17

Example:

Application to Cholesky decomposition

(1) Generalized form:

(i,j) S,

C(i,j) = fij( gi,j,k1 (A(i,k1), B(k1,j)),

gi,j,k2 (A(i,k2), B(k2,j)),

…,

k1,k2,… Sij

other arguments)

Li, i Ai, i

Ai, k

2

k i 1

1

Ai, j Ai, j A j, k , i j

Li, j

L j, j

ki 1

18

From Sequential Lower bound

to Parallel Lower Bound

We showed:

Any algorithm that agrees with Form (1)

on sequential model requires:

Bandwidth: (G / M1/2)

Latency:

(G / M3/2)

where G is the gijk count.

Cor:

Any algorithm that agrees with Form (1), on a Pprocessors machine, where at least two processors

perform (1/P) of G each requires:

Bandwidth: (G /PM1/2)

Latency:

(G / PM3/2)

19

Geometric Embedding (2nd approach)

[Ballard, Demmel, Holtz, S. 2011a]

Follows [Irony,Toledo,Tiskin 04], based on [Loomis & Whitney 49]

Lower bounds: for algorithms with “flavor” of 3 nested loops

BLAS, LU, Cholesky, LDLT, and

QR factorizations, eigenvalues and SVD,

i.e., essentially all direct methods of linear algebra.

• Dense or sparse matrices

In sparse cases: bandwidth is a function NNZ.

• Bandwidth and latency.

• Sequential, hierarchical, and

parallel – distributed and shared memory models.

• Compositions of linear algebra operations.

• Certain graph optimization problems

For dense:

n 3

M

M

n 3 M

M P

[Demmel, Pearson, Poloni, Van Loan, 11], [Ballard, Demmel, S. 11]

• Tensor contraction

20

Do conventional dense algorithms as implemented in

LAPACK and ScaLAPACK attain these bounds?

Mostly not.

Are there other algorithms that do?

Mostly yes.

21

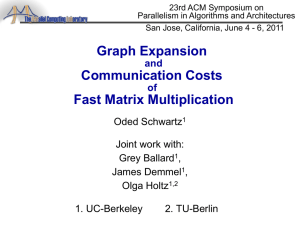

Dense Linear Algebra: Sequential Model

Lower bound

Algorithm

Bandwidth

Latency

MatrixMultiplication

Bandwidth

Latency

[Frigo, Leiserson, Prokop,

Ramachandran 99]

Cholesky

n 3

M

M

LU

[Ballard,

Demmel,

Holtz,

S. 11]

QR

Attaining algorithm

n 3

M

[Ballard,

Demmel,

Holtz,

S. 11]

[Ahmad, Pingali 00]

[Ballard, Demmel, Holtz, S. 09]

[Toledo97]

[EG98]

[DGX08]

[DGHL08a]

Symmetric

Eigenvalues

[Ballard,Demmel,Dumitriu 10]

SVD

[Ballard,Demmel,Dumitriu 10]

(Generalized)

Nonsymetric

Eigenvalues

[Ballard,Demmel,Dumitriu 10]

22

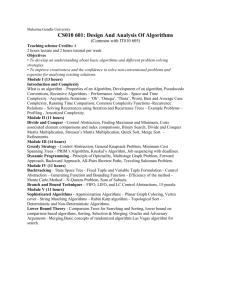

Dense 2D parallel algorithms

• Assume nxn matrices on P processors, memory per processor = O(n2 / P)

• ScaLAPACK assumes best block size b chosen

• Many references (see reports), Blue are new

Relax: 2.5D Algorithms

Solomonik & Demmel ‘11

• Recall lower bounds:

#words_moved = ( n2 / P1/2 )

and

#messages = ( P1/2 )

Algorithm

Reference

Factor exceeding

lower bound for

#words_moved

Factor exceeding

lower bound for

#messages

Matrix multiply

[Cannon, 69]

1

1

Cholesky

ScaLAPACK

log P

log P

LU

[GDX08]

ScaLAPACK

log P

log P

log P

( N / P1/2 ) · log P

QR

[DGHL08]

ScaLAPACK

log P

log P

log3 P

( N / P1/2 ) · log P

Sym Eig, SVD

[BDD10]

ScaLAPACK

log P

log P

log3 P

N / P1/2

Nonsym Eig

[BDD10]

ScaLAPACK

log P

P1/2 · log P

log3 P

N · log P

Geometric Embedding (2nd approach)

Read

Read

S1

Read

FLOP

For a given run (algorithm, machine, input)

1. Partition computations into segments

of M reads / writes

M

Write

FLOP

S2

2. Any segment S has 3M inputs/outputs.

Read

Read

3. Show that S performs k FLOPs gijk

FLOP

FLOP

FLOP

S3

Write

FLOP

...

Write

...

Time

Read

Example of a partition,

M=3

4. The total communication BW is

BW = BW of one segment #segments

M G / k,

where G is #gi,j,k

24

Geometric Embedding (2nd approach)

[Ballard, Demmel, Holtz, S. 2011a]

Follows [Irony,Toledo,Tiskin 04], based on [Loomis & Whitney 49]

(1) Generalized form:

(i,j) S,

C(i,j) = fij( gi,j,k1 (A(i,k1), B(k1,j)),

gi,j,k2 (A(i,k2), B(k2,j)),

…,

k1,k2,… Sij

other arguments)

“C shadow”

x

y

C

A B

C

A B

z

V

V

y

z

x

“A shadow”

Volume of box

V = x·y·z

= ( xz · zy · yx)1/2

Thm: (Loomis & Whitney, 1949)

Volume of 3D set

V ≤ (area(A shadow)

· area(B shadow)

· area(C shadow) ) 1/2

25

Geometric Embedding (2nd approach)

Read

Read

S1

Read

FLOP

For a given run (algorithm, machine, input)

1. Partition computations into segments

of M reads / writes

M

Write

FLOP

S2

2. Any segment S has 3M inputs/outputs.

Read

Read

3. Show that S performs k FLOPs gijk

FLOP

FLOP

FLOP

S3

Write

FLOP

...

Write

...

Time

Read

Example of a partition,

M=3

4. The total communication BW is

BW = BW of one segment #segments

MG/k

where G is #gi,j,k

5. By Loomis-Whitney:

BW M G / (3M)3/2

26

Applications

(1) Generalized form:

(i,j) S,

C(i,j) = fij( gi,j,k1 (A(i,k1), B(k1,j)),

gi,j,k2 (A(i,k2), B(k2,j)),

…,

k1,k2,… Sij

other arguments)

BW = (G/ M1/2)

BW = (G/ PM1/2)

where G = |{g(i,j,k) | (i,j) S, k Sij }

in P-parallel model.

27

Geometric Embedding (2nd approach)

[Ballard, Demmel, Holtz, S. 2011a]

Follows [Irony,Toledo,Tiskin 04], based on [Loomis & Whitney 49]

(1) Generalized form:

(i,j) S,

C(i,j) = fij( gi,j,k1 (A(i,k1), B(k1,j)),

gi,j,k2 (A(i,k2), B(k2,j)),

…,

k1,k2,… Sij

other arguments)

But many algorithms just don’t fit

the generalized form!

For example:

Strassen’s fast matrix multiplication

28

Beyond 3-nested loops

How about the communication costs of algorithms

that have a more complex structure?

29

Communication Lower Bounds

– to be continued…

Proving that your algorithm/implementation is as good as it gets.

Approaches:

1. Reduction

[Ballard, Demmel, Holtz, S. 2009]

2. Geometric Embedding

[Irony,Toledo,Tiskin 04],

[Ballard, Demmel, Holtz, S. 2011a]

3. Graph Analysis

[Hong & Kung 81],

[Ballard, Demmel, Holtz, S. 2011b]

30

Further reduction techniques:

Imposing reads and writes

(1) Generalized form:

(i,j) S,

C(i,j) = fij( gi,j,k1 (A(i,k1), B(k1,j)),

gi,j,k2 (A(i,k2), B(k2,j)),

…,

k1,k2,… Sij

other arguments)

Example: Computing ||A∙B|| where each matrix element is a formulas,

computed only once.

Problem: Input/output do not agree with Form (1).

Solution:

• Impose writes/reads of (computed) entries of A and B.

• Impose writes of the entries of C.

n 3

M

• The new algorithm has lower bound BW

M

3

n

2

• For the original algorithm BW

M cn

M

n 3

M for n3 / M c' n2 M c' n2

i.e., BW

M

(which we assume anyway).

31

Further reduction techniques:

Imposing reads and writes

The previous example can be generalized to other

“black-box” uses of algorithms that fit Form (1).

Consider a more general class of algorithms:

• Some arguments of the generalized form may be

computed “on the fly” and discarded immediately after

used. …

32

Recall…

Read

Read

S1

Read

FLOP

For a given run (Algorithm, Machine, Input)

1. Partition computations into segments

of 3M reads / writes

M

Write

FLOP

S2

2. Any segment S has M inputs/outputs.

Read

Read

3. Show that S performs G(3M) FLOPs gijk

FLOP

FLOP

FLOP

S3

Write

4. The total communication BW is

BW = BW of one segment #segments

M G / G(3M)

FLOP

...

Write

...

Time

Read

Example of a partition,

M=3

But now some operands inside a segment

may be computed on-the fly and discarded.

So no read/write performed.

33

How to generalize this lower bound:

How to deal with on-the-fly generated operands

Read

Read

S1

Read

FLOP

Write

FLOP

S2

Read

Read

Read

FLOP

FLOP

FLOP

Write

Write

...

FLOP

S3

• Need to distinguish Sources, Destinations

of each operand in fast memory during a segment:

• Possible Sources:

R1: Already in fast memory at start of segment,

or read; at most 2M

R2: Created during segment;

no bound without more information

• Possible Destinations:

D1: Left in fast memory at end of segment,

or written; at most 2M

D2: Discarded;

no bound without more information

34

How to generalize this lower bound:

How to deal with on-the-fly generated operands

Read

Read

S1

Read

FLOP

Write

FLOP

S2

Read

Read

Read

“C shadow”

C

A B

“A shadow”

FLOP

FLOP

FLOP

There are at most 4M of types:

R1/D1, R1/D2, R2/D1.

Need to assume/prove:

not too many R2/D2 arguments;

Then we can use LW, and obtain

the lower bound of Form (1).

S3

V

Bounding R2/D2 is sometimes

quite subtle.

Write

Write

...

FLOP

35

Composition of algorithms

Many algorithms and applications use composition of

other (linear algebra) algorithms.

How to compute lower and upper bounds for such cases?

Example - Dense matrix powering

Compute An by (log n times) repeated squaring:

A A2 A4 … An

Each squaring step agrees with Form (1).

3

Do we get

n

BW log n

M

M

or is there a way to reorder (interleave) computations to

reduce communication?

36

Communication hiding vs.

Communication avoiding

Q.

The Model assumes that computation and communication

do not overlap.

Is this a realistic assumption?

Can we not gain time by such overlapping?

A.

Right. This is called communication hiding.

It is done in practice, and ignored in our model.

It may save up to a factor of 2 in the running time.

Note that the speedup gained by avoiding (minimizing)

communication is typically larger than a constant factor.

37

Two-nested loops:

when the input/output size dominates

Q. Do two-nested-loops algorithms fall into the paradigm of Form (1)?

For example, what lower bound do we obtain for computing Matrixvector multiplication?

n2

A. Yes, but the lower bound we obtain is BW

M

Where just reading the input costs BW n 2

More generally, the communication cost lower bound

for algorithms that agree with Form (1) is

BW MaxLW , # inputs# outputs

where LW is the one we obtain from the geometric embedding, and

#inputs+#outputs is the size of the inputs and outputs.

For some algorithms LW dominates, for others #inputs+#outputs

dominate.

38

Composition of algorithms

(1) Generalized form:

(i,j) S,

C(i,j) = fij( gi,j,k1 (A(i,k1), B(k1,j)),

gi,j,k2 (A(i,k2), B(k2,j)),

…,

k1,k2,… Sij

other arguments)

Claim: any implementation of An by (log n times) repeated

3

squaring requires

n

BW log n

M

M

Therefore we cannot reduce communication by more than

a constant factor

(compared to log n separate calls to matrix multiplications)

by reordering computations.

39

Composition of algorithms

(1) Generalized form:

(i,j) S,

C(i,j) = fij( gi,j,k1 (A(i,k1), B(k1,j)),

gi,j,k2 (A(i,k2), B(k2,j)),

…,

k1,k2,… Sij

other arguments)

Proof: by imposing reads/writes on each entry of every

intermediate matrix.

The total number of gi,j,k is (n3log n).

The total number of imposed reads/writes is (n2log n).

The lower bound for the original algorithm

is

3

n

2

BW log n

M n log n

M

3

n

log n

M

40

M

Composition of algorithms:

when interleaving does matter

Example 1:

Input: A,v1,v2,…,vn

Output: Av1,Av2,…,Avn

The phased solution costs

BW n3

But we already know that we can save a M1/2 factor:

Set B = (v1,v2,…,vn), and compute AB, then the cost is

n 3

BW

M

M

Other examples?

41

Composition of algorithms:

when interleaving does matter

Example 2:

Input: A,B, t

Output: C(k) = A B(k) for k = 1,2,…,t

where Bi,j(k) = Bi,j1/k

Phased solution:

Upper bound:

n 3

BW O t

M

M

(by adding up the BW cost of t matrix multiplication calls).

Lower bound:

n 3

BW t

M

M

(by imposing writes/reads between phases).

42

Composition of algorithms:

when interleaving does matter

Example 2:

Input: A,B, t

Output: C(k) = A B(k) for k = 1,2,…,t

where Bi,j(k) = Bi,j1/k

n 3

Can we do better than BW t

M ?

M

43

Composition of algorithms:

when interleaving does matter

Example 2:

Input: A,B, t

Output: C(k) = A B(k) for k = 1,2,…,t

where Bi,j(k) = Bi,j1/k

n 3

Can we do better than BW t

M ?

M

Yes.

Claim:

There exists an implementation for the above algorithm,

with communication cost (tight lower and upper bounds):

3

n

BW t

M

M

44

Composition of algorithms:

when interleaving does matter

Example 2:

Input: A,B, t

Output: C(k) = A B(k) for k = 1,2,…,t

where Bi,j(k) = Bi,j1/k

Proofs idea:

• Upper bound: Having both Ai,k and Bk,j in fast memory

lets us do up to t evaluations of gijk.

• Lower bound: The union of all these tn3 operations does

not match Form (1), since the inputs Bk,j cannot be

indexed in a one-to-one fashion.

We need a more careful argument regarding the

numbers of gijk. Operations in a segment as a function of

the number of accessed elements of A, B and C(k).

45

Composition of algorithms:

when interleaving does matter

Can you think of natural examples where

reordering / interleaving of known algorithms

may improve the communication costs,

compared to the phased implementation?

46

Summary

How to compute an upper bound

on the communication costs of your algorithm?

Typically straightforward. Not always.

How to compute and prove a lower bound

on the communication costs of your algorithm?

Reductions:

from another algorithm/problem

from another model of computing

By using the generalized form (“flavor” of 3 nested loops)

and imposing reads/writes – black-box-wise

or bounding the number of R2/D2 operands

By carefully composing the lower bounds of the building blocks.

Next time: by graph analysis

47

Open Problems

Find algorithms that attain the lower bounds:

• Sparse matrix algorithms

• that auto-tune or are cache oblivious

• cache oblivious for parallel (distributed memory)

• Cache oblivious parallel matrix multiplication? (Cilk++ ?)

Address complex heterogeneous hardware:

• Lower bounds and algorithms

[Demmel, Volkov 08],[Ballard, Demmel, Gearhart 11]

48

CS294, Lecture #2

Fall, 2011

Communication-Avoiding Algorithms

How to Compute and Prove

Lower Bounds

on the

Communication Costs

of your Algorithm

Oded Schwartz

Based on:

D. Irony, S. Toledo, and A. Tiskin:

Communication lower bounds for distributed-memory matrix multiplication.

G. Ballard, J. Demmel, O. Holtz, and O. Schwartz:

Minimizing communication in linear algebra.

Thank you!