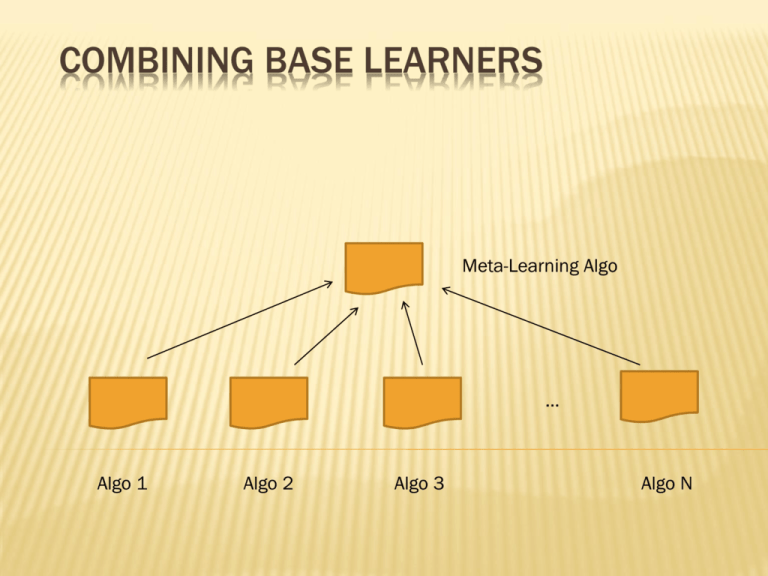

Combining Base Learners

COMBINING BASE LEARNERS
[Figure: a meta-learning algorithm combining base learners Algo 1, Algo 2, Algo 3, …, Algo N]

RATIONALE
Lecture Notes for E Alpaydın 2004 Introduction to Machine Learning © The MIT Press (V1.1)
• No Free Lunch theorem: there is no algorithm that is always the most accurate.
• Generate a group of algorithms which, when combined, display higher accuracy.

DATA AND ALGO VARIATION
• Different Datasets
• Different Algorithms

Bagging
• Bagging can easily be extended to regression.
• Bagging is most effective when the base learner is unstable.
• Bagging typically increases accuracy.
• Interpretability is lost.

“Breiman's work bridged the gap between statisticians and computer scientists, particularly in the field of machine learning. Perhaps his most important contributions were his work on classification and regression trees and ensembles of trees fit to bootstrap samples. Bootstrap aggregation was given the name bagging by Breiman. Another of Breiman's ensemble approaches is the random forest.” (Extracted from Wikipedia)

Boosting
• Boosting tries to combine weak learners into a strong learner.
• Initially all examples have the same weight; in subsequent iterations, misclassified examples have their weights increased.
• Boosting can be applied to any learner.

Initialize all weights $w_i$ to $1/N$ ($N$: number of examples); error = 0
Repeat (until error > 0.5 or max. iterations reached):
    Train classifier and get hypothesis $h_t(x)$
    Compute error as the sum of the weights of the misclassified examples:
        error $= \sum_i w_i$, summed over every $x_i$ that is incorrectly classified
    Set $\alpha_t = \log\left( \frac{1 - \text{error}}{\text{error}} \right)$
    Update weights: $w_i = \left[ w_i \, e^{-\alpha_t y_i h_t(x_i)} \right] / Z$, where $Z$ normalizes the weights to sum to 1
Output $f(x) = \operatorname{sign}\left( \sum_t \alpha_t h_t(x) \right)$

[Figure: boosting; the weights of misclassified examples are increased]

MIXTURE OF EXPERTS
• Voting where weights are input-dependent (gating) (Jacobs et al., 1991)
• Experts or gating can be nonlinear
Robert Jacobs (University of Rochester), Michael Jordan (UC Berkeley)

Stacking
• Variations are among learners.
• The predictions of the base learners form a new meta-dataset.
• A testing example is first transformed into a new meta-example and then classified.
• Several variations have been proposed around stacking.

Cascade Generalization
• Variations are among learners.
• Classifiers are used in sequence rather than in parallel as in stacking.
• The prediction of the first classifier is added to the example feature vector to form an extended dataset.
• The process can go on through many iterations.

Cascading
• Like boosting, the distribution changes across datasets.
• But unlike boosting, we vary the classifiers.
• Classification is based on prediction confidence.
• Cascading creates rules that account for most instances, catching exceptions at the final step.
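As a rough illustration of the boosting loop given earlier in these notes, here is a minimal sketch in plain Python. Several things are assumptions and not part of the slides: the base learner is a one-dimensional threshold stump, the names `train_stump`, `adaboost`, and `predict` are hypothetical, and the toy data is made up. The sketch also uses the standard AdaBoost step size $\alpha_t = \frac{1}{2}\log\frac{1-\text{error}}{\text{error}}$, which carries a factor of 1/2 that the pseudocode in the notes does not show, and it stops at error ≥ 0.5 where the notes say error > 0.5.

```python
import math


def train_stump(xs, ys, w):
    """Return (weighted_error, threshold, sign) of the best 1-D stump.

    A stump predicts `sign` when x < threshold and `-sign` otherwise.
    """
    values = sorted(set(xs))
    thresholds = [(a + b) / 2 for a, b in zip(values, values[1:])]
    best = None
    for theta in thresholds:
        for sign in (1, -1):
            # Weighted error: sum of weights of misclassified examples.
            err = sum(wi for xi, yi, wi in zip(xs, ys, w)
                      if (sign if xi < theta else -sign) != yi)
            if best is None or err < best[0]:
                best = (err, theta, sign)
    return best


def adaboost(xs, ys, n_rounds):
    """Boost stumps on labels in {-1, +1}; returns [(alpha, threshold, sign)]."""
    n = len(xs)
    w = [1.0 / n] * n                      # all examples start with equal weight
    ensemble = []
    for _ in range(n_rounds):
        err, theta, sign = train_stump(xs, ys, w)
        if err >= 0.5:                     # assumption: stop at chance level
            break
        err = max(err, 1e-12)              # avoid division by zero below
        # Assumption: standard AdaBoost step size with the factor 1/2.
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, theta, sign))
        # Misclassified examples (y * h = -1) get larger weights.
        w = [wi * math.exp(-alpha * yi * (sign if xi < theta else -sign))
             for xi, yi, wi in zip(xs, ys, w)]
        z = sum(w)                         # normalizer Z
        w = [wi / z for wi in w]
    return ensemble


def predict(ensemble, x):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(alpha * (sign if x < theta else -sign)
                for alpha, theta, sign in ensemble)
    return 1 if score >= 0 else -1


# Hypothetical toy data: no single threshold separates the two classes.
xs = list(range(10))
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
ensemble = adaboost(xs, ys, n_rounds=3)
print([predict(ensemble, x) for x in xs] == ys)
```

On this toy data a single stump misclassifies at least three points, while the weighted vote of three stumps reproduces every training label, which is the "weak learners into a strong learner" idea in miniature.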
ERROR-CORRECTING OUTPUT CODES
• K classes; L problems (Dietterich and Bakiri, 1995)
• Code matrix W codes classes in terms of learners

• One per class: $L = K$

$$W = \begin{bmatrix} +1 & -1 & -1 & -1 \\ -1 & +1 & -1 & -1 \\ -1 & -1 & +1 & -1 \\ -1 & -1 & -1 & +1 \end{bmatrix}$$

• Pairwise: $L = K(K-1)/2$

$$W = \begin{bmatrix} +1 & +1 & +1 & 0 & 0 & 0 \\ -1 & 0 & 0 & +1 & +1 & 0 \\ 0 & -1 & 0 & -1 & 0 & +1 \\ 0 & 0 & -1 & 0 & -1 & -1 \end{bmatrix}$$

• Full code: $L = 2^{K-1} - 1$

$$W = \begin{bmatrix} -1 & -1 & -1 & -1 & -1 & -1 & -1 \\ -1 & -1 & -1 & +1 & +1 & +1 & +1 \\ -1 & +1 & +1 & -1 & -1 & +1 & +1 \\ +1 & -1 & +1 & -1 & +1 & -1 & +1 \end{bmatrix}$$

• With reasonable L, find W such that the Hamming distances between rows and between columns are maximized.
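To make the role of the Hamming distance concrete, the following sketch builds a one-per-class code and a full code for K classes, measures the minimum distance between rows, and decodes a vector of learner outputs by picking the nearest row. The function names (`one_per_class`, `full_code`, `min_row_distance`, `decode`) are hypothetical, and nearest-row Hamming decoding is an assumption: it is one common choice, and the notes do not specify a decoding rule.

```python
from itertools import combinations


def one_per_class(k):
    """K x K code matrix: +1 on the diagonal, -1 elsewhere."""
    return [[1 if i == j else -1 for j in range(k)] for i in range(k)]


def full_code(k):
    """K x (2^(K-1) - 1) code matrix with every distinct two-way class split.

    Column c (1..L) is read as a (k-1)-bit number; row 0 is always -1 and
    row i >= 1 takes bit (k-1-i) of c, mapped 0 -> -1 and 1 -> +1.
    """
    n_cols = 2 ** (k - 1) - 1
    rows = [[-1] * n_cols]
    for i in range(1, k):
        rows.append([1 if (c >> (k - 1 - i)) & 1 else -1
                     for c in range(1, n_cols + 1)])
    return rows


def hamming(a, b):
    """Number of positions in which two codewords differ."""
    return sum(x != y for x, y in zip(a, b))


def min_row_distance(W):
    """Smallest Hamming distance between any two class codewords."""
    return min(hamming(r1, r2) for r1, r2 in combinations(W, 2))


def decode(W, outputs):
    """Index of the class whose codeword is nearest to the learner outputs."""
    distances = [hamming(row, outputs) for row in W]
    return distances.index(min(distances))


W_full = full_code(4)
noisy = list(W_full[2])
noisy[-1] = -noisy[-1]                  # one base learner answers wrongly
print(min_row_distance(one_per_class(4)), min_row_distance(W_full))
print(decode(W_full, noisy))
```

With a minimum row distance of d, nearest-row decoding tolerates up to ⌊(d − 1)/2⌋ wrong base learners, which is why a larger row distance is desirable; the cost is that more learners L have to be trained.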