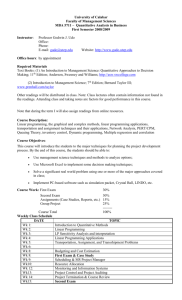

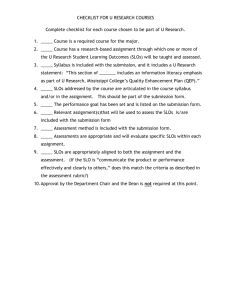

Chapter 15 Course Assessment Basics

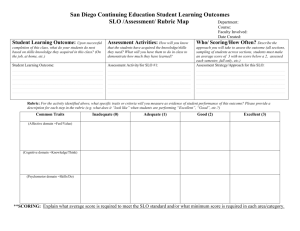
