Applied Quantitative Methods

MBA course Montenegro

Peter Balogh

PhD

baloghp@agr.unideb.hu

Non-parametric tests

13. Non-parametric tests

• When looking at hypothesis testing in the previous

chapter we were concerned with specific statistics

(parameters), which represent statements about the

population, and these are then tested by using further

statistics derived from the sample.

• Such parametric tests are extremely important in the

development of statistical theory and for testing of

many sample results, but they do not cover all types of

data, particularly when parameters cannot be

calculated in a meaningful way.

• We therefore need to develop other tests which will be

able to deal with such situations; a small range of these

non-parametric tests are covered in this chapter.

• Parametric tests require the following conditions to be

satisfied:

1. A null hypothesis can be stated in terms of

parameters.

2. A level of measurement has been achieved that gives

validity to differences.

3. The test statistic follows a known distribution.

• It is not always possible to define a meaningful

parameter to represent the aspect of the population in

which we are interested.

• For instance, what is an average eye-colour?

• Equally it is not always possible to give meaning to

differences in values, for instance if brands of soft drink

are ranked in terms of value for money or taste.

• Where these conditions cannot be met, non-parametric tests may be appropriate, but note that

for some circumstances, there may be no suitable

test.

• As with all tests of hypothesis, it must be

remembered that even when a test result is

significant in statistical terms, there may be

situations where it has no importance in practice.

• A non-parametric test is still a hypothesis test, but

rather than considering just a single parameter of

the sample data, it looks at the overall distribution

and compares this to some known or expected

value, usually based upon the null hypothesis.

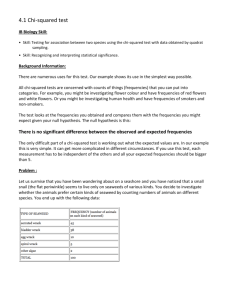

13.1 Chi-squared tests

• The format of this test is similar to the parametric tests

already considered (see Chapter 12).

• As before, we will define hypotheses, calculate a test

statistic, and compare this to a value from tables in

order to decide whether or not to reject the null

hypothesis.

• As the name may suggest, the statistic calculated

involves squaring values, and thus the result can only

be positive.

• This is by far the most widely used non-parametric

hypothesis test and is almost invariably used in the

early stages of the analysis of questionnaire results.

• As statistical programs become easier and easier to use,

more people are able to conduct this test, which, in the

past, took a long time to construct and calculate.

• The shape of the chi-squared distribution is

determined by the number of degrees of freedom

(cf. the t-distribution used in the previous chapter).

• In general, for relatively low degrees of freedom, the distribution is skewed to the right (positively skewed, with a long right-hand tail), as shown in Figure 13.2.

• As the number of degrees of freedom approaches infinity, the shape of the distribution approaches a Normal distribution.

• We shall look at two particular applications of the chi-squared (χ²) test.

• The first considers survey data, usually from

questionnaires, and tries to find if there is an

association between the answers given to a pair of

questions.

• Secondly, we will use a chi-squared test to check

whether a particular set of data follows a known

statistical distribution.

13.1.1 Tests of association

Case study

• In the Arbour Housing Survey we might be

interested in how the responses to question 4 on

type of property and question 10 on use of the local

post office relate.

• Looking directly at each question would give us the

following:

• In addition to looking at one variable at a time, we can

construct tables to show how the answers to one

question relate to the answers to another; these are

commonly referred to as cross-tabulations.

• The single tabulations tell us that 150 respondents (or

50%) live in a house and that 40 respondents (or

13.3%) use their local post office 'once a month'.

• They do not tell us how often people who live in a

house use the local post office and whether their

pattern of usage is different from those that live in a

flat.

• To begin to answer these questions we need to

complete the table (see Table 13.1).

• It would be an extremely boring and time-consuming

job to manually fill a table like Table 13.1, even with a

small sample such as this.

• In fact, in some cases we might want to cross-tabulate

three or more questions.

• However, most statistical packages will produce this

type of table very quickly.

• For relatively small sets of data you could use Excel, but

for larger scale surveys it would be advantageous to use

a more specialist package such as SPSS (the Statistical

Package for the Social Sciences).

• With this type of program it can take rather longer to

prepare and enter the data, but the range of analysis

and the flexibility offered make this well worthwhile.

• The cross-tabulation of questions 4 and 10 will

produce the type of table shown as Table 13.2.

• We are now in a better position to relate the two

answers, but, because different numbers of people

live in each of the types of accommodation, it is not

immediately obvious if different behaviours are

associated with their type of residence.

• A chi-squared test will allow us to find if there is a

statistical association between the two sets of

answers; and this, together with other information,

may allow the development of a proposition that

there is a causal link between the two.

• To carry out the test we will follow the seven steps used in

Chapter 12.

• Step 1 State the hypotheses.

• H0: There is no association between the two sets of answers.

• H1: There is an association between the two sets of answers.

• Step 2 State the significance level.

• As with a parametric test, the significance level can be set at

various values, but for most business data it is usually 5%.

• Step 3 State the critical value.

• The chi-squared distribution varies in shape with the number

of degrees of freedom (in a similar way to the t-distribution),

and thus we need to find this value before we can look up the

appropriate critical value.

Degrees of freedom

• Consider Table 13.1. There are four rows and four

columns, giving a total of 16 cells.

• Each of the row and column totals is fixed (i.e.

these are the actual numbers given by the

frequency count for each question), and thus the

individual cell values must add up to the

appropriate totals.

• In the first row, we have freedom to put any

numbers into three of the cells, but the fourth is

then fixed because all four must add to the (fixed)

total (i.e. three degrees of freedom).

• The same will apply to the second row (i.e. three

more degrees of freedom).

• And again to the third row (three more degrees of

freedom).

• Now all of the values on the fourth row are fixed

because of the totals (zero degrees of freedom).

• Totaling these, we have 3 + 3 + 3 + 0 = 9 degrees of

freedom for this table.

• This is illustrated in Table 13.3.

• As you can see, you can choose any three cells on

the first row, not necessarily the first three.

• There is a short cut!

• If you take the number of rows minus one and

multiply by the number of columns minus one you

get the number of degrees of freedom

ν = (r − 1) × (c − 1)

• For this table, ν = (4 − 1) × (4 − 1) = 9.

• Using the tables in Appendix E (5% significance level, 9 degrees of freedom), we can now state the critical value as 16.9.

• Step 4 Calculate the test statistic.

• The chi-squared statistic is given by the following formula:

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

• where O is the observed cell frequencies (the actual answers) and E is the expected cell frequencies (if the null hypothesis is true).

• Finding the expected cell frequencies takes us back to

some simple probability rules, since the null hypothesis

makes the assumption that the two sets of answers are

independent of each other. If this is true, then the cell

frequencies will depend only on the totals of each row

and column.

Calculating the expected values

• Consider the first cell of the table (i.e. the first row

and the first column).

• The number of people living in houses is 150 out of a total of 300, and thus the probability of someone living in a house is:

150 / 300 = 0.5

• The probability of 'once a month' is:

40 / 300 = 0.13333

• Thus the probability of living in a house and 'once a month' is:

0.5 × 0.13333 = 0.066665

• Since there are 300 people in the sample, one would expect there to be

0.066665 × 300 = 19.9995, i.e. 20

• people who fit the category of the first cell.

• (Note that the observed value was 30.)

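• Again there is a short cut!

• Look at the way in which we found the expected value:

$$E = \frac{150}{300} \times \frac{40}{300} \times 300 = \frac{150 \times 40}{300} = 20$$

• In general, the expected cell frequency is (row total × column total) / grand total.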

• We need to complete this process for the other cells

in the table, but remember that, because of the

degrees of freedom, you only need to calculate

nine of them, the rest being found by subtraction.

• Statistical packages will, of course, find these

expected cell frequencies very quickly.

• The expected cell frequencies are shown in Table

13.4.

• If we were to continue with the chi-squared test by

hand calculation we would need to produce the

type of table shown as Table 13.5.

• Step 5 Compare the calculated value and the

critical value.

• The calculated χ² value of 140.875 is greater than the critical value of 16.9.

• Step 6 Come to a conclusion.

• We already know that chi-squared cannot be below zero.

• If all of the expected cell frequencies were exactly equal to

the observed cell frequencies, then the value of chi-squared

would be zero.

• Any differences between the observed and expected cell

frequencies may be due to either sampling error or to an

association between the answers; the larger the differences,

the more likely it is that there is an association.

• Thus, if the calculated value is below the critical value, we will

be unable to reject the null hypothesis, but if it is above the

critical value, we reject the null hypothesis.

• In this example, the calculated value is above the critical

value, and thus we reject the null hypothesis.

• Step 7 Put the conclusion into English.

• There appears to be an association between the

type of property people are living in and the

frequency of using the local post office.

• We need now to examine whether such an

association is meaningful within the problem

context and the extent to which the association can

be explained by other factors.

• The chi-squared test is only telling you that the

association (a word we use in this context in

preference to relationship) is likely to exist but not

what it is.

An adjustment

• In fact, although the basic methodology of the test is

correct, there is a problem.

• One of the basic conditions for the chi-squared test is that all of the expected frequencies must be at least five.

• This is not true for our example!

• In order to make this condition true, we need to

combine adjacent categories until their expected

frequencies are equal to five or more.

• To do this, we will combine the two categories 'Bedsit'

and 'Other' to represent all non-house or flat dwellers;

it will also be necessary to combine 'Twice a week' with

'More often' to represent anything above once a week.

• The new three-by-three cross-tabulation is shown as Table

13.6.

• The number of degrees of freedom now becomes (3 − 1) × (3 − 1) = 4, and the critical value (from tables) is 9.49.

• Re-computing the value of chi-squared from Step 4, we have

a value of approximately 107.6, which is still substantially

above the critical value of chi-squared.

• However, in other examples, the amalgamation of categories

may affect the decision.

• In practice, one of the problems of meeting this condition is

deciding which categories to combine, and deciding what, if

anything, the new category represents.

• (One of the most difficult examples is the need to combine

ethnic groups in a sample.)

• This has been a particularly long example since we

have been explaining each step as we have gone

along.

• Performing the tests is much quicker in practice,

even if a computer package is not used.

• Table 13.6 (columns show type of property)

Observed frequencies:

| How often | House | Flat | Other |
|---|---|---|---|
| Once a month | 30 | 5 | 5 |
| Once a week | 110 | 80 | 10 |
| More often | 10 | 15 | 35 |

Expected frequencies:

| How often | House | Flat | Other |
|---|---|---|---|
| Once a month | 20 | 13.3 | 6.7 |
| Once a week | 100 | 66.7 | 33.3 |
| More often | 30 | 20 | 10 |

Example

• Purchases of different strengths of lager are

thought to be associated with the gender of the

drinker and a brewery has commissioned a survey

to find if this is true.

• Summary results are shown below.

| Strength | High | Medium | Low |
|---|---|---|---|
| Male | 20 | 50 | 30 |
| Female | 10 | 55 | 35 |

1. H0: No association between gender and strength

bought.

H1: An association between the two.

2. Significance level is 5%.

3. Degrees of freedom = (2 − 1) × (3 − 1) = 2.

Critical value = 5.99.

4. Find totals:

| Strength | High | Medium | Low | Total |
|---|---|---|---|---|
| Male | 20 | 50 | 30 | 100 |
| Female | 10 | 55 | 35 | 100 |
| Total | 30 | 105 | 65 | 200 |

• Expected frequency for Male and High Strength is

$$\frac{100 \times 30}{200} = 15$$

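Expected frequencies:

| Strength | High | Medium | Low | Total |
|---|---|---|---|---|
| Male | 15 | 52.5 | 32.5 | 100 |
| Female | 15 | 52.5 | 32.5 | 100 |
| Total | 30 | 105 | 65 | 200 |

Calculating chi-squared:

| O | E | (O − E) | (O − E)² | (O − E)²/E |
|---|---|---|---|---|
| 20 | 15 | 5 | 25 | 1.667 |
| 10 | 15 | −5 | 25 | 1.667 |
| 50 | 52.5 | −2.5 | 6.25 | 0.119 |
| 55 | 52.5 | 2.5 | 6.25 | 0.119 |
| 30 | 32.5 | −2.5 | 6.25 | 0.192 |
| 35 | 32.5 | 2.5 | 6.25 | 0.192 |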

• Chi-squared = 3.956

5. 3.956 < 5.99.

6. Therefore we cannot reject the null hypothesis.

7. There appears to be no association between the

gender of the drinker and the strength of lager

purchased at the 5% level of significance.
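The steps of the test of association can be sketched in a few lines of Python. This is only an illustration: the function name `chi_squared_association` is made up for this sketch, and the critical values are simply copied from the slides rather than computed. It is applied to the lager example and to the combined three-by-three housing table (Table 13.6).

```python
def chi_squared_association(observed):
    """Return (chi-squared, degrees of freedom, expected table) for a cross-tabulation."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    grand_total = sum(row_totals)

    # Expected frequency under H0: row total x column total / grand total.
    expected = [[r * c / grand_total for c in col_totals] for r in row_totals]

    chi2 = sum(
        (o - e) ** 2 / e
        for obs_row, exp_row in zip(observed, expected)
        for o, e in zip(obs_row, exp_row)
    )
    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return chi2, dof, expected


# Lager example: rows are Male, Female; columns are High, Medium, Low.
lager = [[20, 50, 30], [10, 55, 35]]
lager_chi2, lager_dof, lager_expected = chi_squared_association(lager)

# Table 13.6: rows are Once a month, Once a week, More often;
# columns are House, Flat, Other.
housing = [[30, 5, 5], [110, 80, 10], [10, 15, 35]]
housing_chi2, housing_dof, housing_expected = chi_squared_association(housing)

# Critical values at the 5% level, copied from the slides (not computed here).
print(round(lager_chi2, 3), lager_dof, lager_chi2 > 5.99)
print(round(housing_chi2, 1), housing_dof, housing_chi2 > 9.49)
```

For the lager data this gives 3.956 on 2 degrees of freedom, so the null hypothesis is not rejected. For the housing data it gives about 107.7 on 4 degrees of freedom; the small gap from the 107.6 quoted earlier presumably comes from rounding the expected frequencies before the hand calculation.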

13.1.2 Tests of goodness-of-fit

• If the data has been collected and seems to follow

some pattern, it would be useful to identify that

pattern and to determine whether it follows some

(already) known statistical distribution.

• If this is the case, then many more conclusions can

be drawn about the data.

• (We have seen a selection of statistical distributions

in Chapters 9 and 10.)

• The chi-squared test provides a suitable method for

deciding if the data follows a particular distribution,

since we have the observed values and the expected

values can be calculated from tables (or by simple

arithmetic).

• For example, do the sales of whisky follow a Poisson

distribution?

• If the answer is 'yes', then sales forecasting might

become a much easier process.

• Again, we will work our way through examples to clarify

the various steps taken in conducting goodness-of-fit

tests.

• The statistic used will remain as:

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

• where O is the observed frequencies and E is the expected (theoretical) frequencies.

Test for a uniform distribution

• You may recall the uniform distribution from

Chapter 9; it implies that each item or value occurs

the same number of times.

• Such a test would be useful where we want to find

if several individuals are all working at the same

rate, or if sales of various 'reps' are the same.

• Suppose we are examining the number of tasks

completed in a set time by five machine operators

and have available the data shown in Table 13.7.

• We can again follow the seven steps:

1. State the hypotheses:

• H0: All operators complete the same number of tasks.

• H1: All operators do not complete the same number of

tasks.

• (Note that the null hypothesis is just another way of

saying that the data follows a uniform distribution.)

2. The significance level will be taken as 5%.

3. The degrees of freedom will be the number of cells minus the number of parameters required to calculate the expected frequencies minus one.

• Here ν = 5 − 0 − 1 = 4.

• Therefore (from tables) the critical value is 9.49.

4. Since the null hypothesis proposes a uniform distribution, we would expect all of the operators to complete the same number of tasks in the allotted time.

• This number is:

5. 0.5714 < 9.49.

6. Therefore we do not reject H0.

7. There is no evidence that the operators work at different rates in the completion of these tasks.

Test for binomial and Poisson distributions

• A similar procedure may be used in this case, but in

order to find the theoretical values we will need to

know one parameter of the distribution.

• In the case of a Binomial distribution this will be the

probability of success, p (see Chapter 9).

• For a Poisson distribution it will be the mean (λ).

Example

• The components produced by a certain

manufacturing process have been monitored to find

the number of defective items produced over a

period of 96 days.

• A summary of the results is contained in the

following table:

| Number of defective items | 0 | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|
| Number of days | 15 | 20 | 20 | 18 | 13 | 10 |

• You have been asked to establish whether or not

the rate of production of defective items follows a

Binomial distribution, testing at the 1% level of

significance.

1. H0: the distribution of defectives is Binomial.

H1: the distribution is not Binomial.

2. The significance level is 1%.

3. The number of degrees of freedom is:

No. of cells - No. of parameters -1 = 6-1-1 = 4

• From tables (Appendix E) the critical value is 13.3

(but see below for modification of this value).

• In order to find the expected frequencies, we need

to know the probability of a defective item.

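• This may be found by first calculating the average of the sample results: (0 × 15 + 1 × 20 + 2 × 20 + 3 × 18 + 4 × 13 + 5 × 10) / 96 = 216 / 96 = 2.25 defective items per day.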

• Using this value and the Binomial formula we can

now work out the theoretical values, and hence the

expected frequencies.

• The Binomial formula states

$$P(r) = \binom{n}{r} p^r (1 - p)^{n - r}$$
• Note that because two of the expected frequencies

are less than five, it has been necessary to combine

adjacent groups.

• This means that we must modify the number of

degrees of freedom, and hence the critical value.

Degrees of freedom = 4-1-1 = 2

Critical value = 9.21

• We are now in a position to calculate chi-squared.

| r | O | E | (O − E) | (O − E)² | (O − E)²/E |
|---|---|---|---|---|---|
| 0, 1 | 35 | 24.6 | 10.4 | 108.16 | 4.3967 |
| 2 | 20 | 32.34 | −12.34 | 152.28 | 4.7086 |
| 3 | 18 | 26.47 | −8.47 | 71.74 | 2.7103 |
| 4, 5 | 23 | 12.59 | 10.41 | 108.37 | 8.6075 |

chi-squared = 20.4231

5. 20.4231 > 9.21.

6. We therefore reject the null hypothesis.

7. There is no evidence to suggest that the

production of defective items follows a Binomial

distribution at the 1% level of significance.
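The Binomial goodness-of-fit calculation above can be reproduced with the sketch below. One value is an assumption rather than something stated in the slides: the number of trials is taken as n = 5 (suggested by the categories 0 to 5), which gives p = 2.25 / 5 = 0.45 and matches the expected frequencies in the table. The grouping of cells is copied from the worked example, not derived automatically.

```python
from math import comb

defects = [0, 1, 2, 3, 4, 5]
days = [15, 20, 20, 18, 13, 10]

n_trials = 5  # ASSUMPTION: five items per day, not stated in the slides
total_days = sum(days)
mean_defects = sum(r * f for r, f in zip(defects, days)) / total_days
p = mean_defects / n_trials  # estimate of p from mean = n * p

# Binomial probability of r defectives, scaled up to an expected number of days.
expected = [
    total_days * comb(n_trials, r) * p**r * (1 - p) ** (n_trials - r)
    for r in defects
]

# Combine adjacent groups as in the worked example so that every
# expected frequency is at least five.
groups = [(0, 1), (2,), (3,), (4, 5)]
observed_grouped = [sum(days[r] for r in g) for g in groups]
expected_grouped = [sum(expected[r] for r in g) for g in groups]

chi2 = sum((o - e) ** 2 / e for o, e in zip(observed_grouped, expected_grouped))
dof = len(groups) - 1 - 1  # groups - estimated parameters - 1

print([round(e, 2) for e in expected_grouped], round(chi2, 2), dof)
```

Because the expected frequencies are not rounded before use, the statistic comes out at roughly 20.4 rather than exactly 20.4231, still far above the critical value of 9.21.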

Example

• Items are taken from the stockroom of a jewellery

business for use in producing goods for sale.

• The owner wishes to model the number of items

taken per day, and has recorded the actual numbers

for a hundred day period.

• The results are shown below.

| Number of items | 0 | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|---|
| Number of days | 7 | 17 | 26 | 22 | 17 | 9 | 2 |

• If you were to build a model for the owner, would it be

reasonable to assume that withdrawals from stock

follow a Poisson distribution ?

• (Use a significance level of 5%.)

1. H0: the distribution of withdrawals is Poisson.

H1: the distribution is not Poisson.

2. Significance level is 5%.

3. Degrees of freedom = 7-1-1 = 5.

Critical value = 11.1 (but see below).

4. The parameter required for a Poisson distribution is the mean, and this can be found from the sample data:

$$\lambda = \frac{0 \times 7 + 1 \times 17 + 2 \times 26 + 3 \times 22 + 4 \times 17 + 5 \times 9 + 6 \times 2}{100} = \frac{260}{100} = 2.6$$

• We can now use the Poisson formula to find the various probabilities and hence the expected frequencies:

$$P(x) = \frac{\lambda^x e^{-\lambda}}{x!}$$

• (Note that, since the Poisson distribution goes off to infinity, in theory, we need to account for all of the possibilities, so the final group is '6 or more'.

• The probability for this group is found by summing the probabilities from 0 to 5, and subtracting the result from one.)

• Since one expected frequency is below 5, it has been

combined with the adjacent one.

• The degrees of freedom therefore are now 6-1-1 = 4,

and the critical value is 9.49.

• Calculating chi-squared, we have:

| x | O | E | (O − E) | (O − E)² | (O − E)²/E |
|---|---|---|---|---|---|
| 0 | 7 | 7.43 | −0.43 | 0.1849 | 0.0249 |
| 1 | 17 | 19.31 | −2.31 | 5.3361 | 0.2763 |
| 2 | 26 | 25.1 | 0.9 | 0.81 | 0.0323 |
| 3 | 22 | 21.76 | 0.24 | 0.0576 | 0.0027 |
| 4 | 17 | 14.14 | 2.86 | 8.1796 | 0.5785 |
| 5+ | 11 | 12.26 | −1.26 | 1.5876 | 0.1295 |

chi-squared = 1.0442

5. 1.0442 < 9.49.

6. We therefore cannot reject H0.

7. The evidence from the monitoring is consistent with withdrawals from stock following a Poisson distribution.
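The Poisson version of the calculation can be sketched in the same way. The helper name `poisson_pmf` is made up for this sketch; the open-ended top group and the merging of the last two cells follow the worked example.

```python
from math import exp, factorial

items = [0, 1, 2, 3, 4, 5, 6]
days = [7, 17, 26, 22, 17, 9, 2]

total_days = sum(days)
mean_items = sum(x * f for x, f in zip(items, days)) / total_days  # estimate of lambda


def poisson_pmf(x, lam):
    """Poisson probability of exactly x events when the mean is lam."""
    return exp(-lam) * lam**x / factorial(x)


# Probabilities for 0..5, then "6 or more" as one minus their sum.
probs = [poisson_pmf(x, mean_items) for x in range(6)]
probs.append(1 - sum(probs))
expected = [total_days * pr for pr in probs]

# The "6 or more" cell has an expected frequency below five,
# so it is merged with the adjacent cell to form "5+".
observed_grouped = days[:5] + [days[5] + days[6]]
expected_grouped = expected[:5] + [expected[5] + expected[6]]

chi2 = sum((o - e) ** 2 / e for o, e in zip(observed_grouped, expected_grouped))
dof = len(observed_grouped) - 1 - 1  # cells - estimated parameters - 1

print([round(e, 2) for e in expected_grouped], round(chi2, 3), dof)
```

The statistic is about 1.04 on 4 degrees of freedom, well below the critical value of 9.49 quoted above.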

Test for the Normal distribution

• This test can involve more data manipulations since

it will require grouped (tabulated) data and the

calculation of two parameters (the mean and the

standard deviation) before expected frequencies

can be determined.

• (In some cases, the data may already be arranged

into groups.)

Example

• The results of a survey of 150 people contain details

of their income levels which are summarized here.

• Test at the 5% significance level if this data follows a

Normal distribution.

1. H0: The distribution is Normal.

H1: The distribution is not Normal.

2. Significance level is 5%.

3. Degrees of freedom = 6 − 2 − 1 = 3.

Critical value (from tables) = 7.81.

4. The mean of the sample = £266 and the standard deviation = £239.02, calculated from original figures.

(N.B. see Section 11.2.2 for formulae.)

To find the expected values we need to:

– convert the original group limits into z-scores (by subtracting the

mean and then dividing by the standard deviation)

– find the probabilities by using the Normal distribution tables

(Appendix C); and

– find our expected frequencies

• This is shown in the table below.

• (Note that the last two groups are combined since the

expected frequency of the last group is less than five.

• This changes the degrees of freedom to 5 - 2 - 1 = 2, and

the critical value to 5.99.)

5. 45.8605 > 5.99.

6. We therefore reject the null hypothesis.

7. There is no evidence that the income distribution is Normal.

13.1.3 Summary note

It is worth noting the following characteristics of chi-squared:

• χ² is only a symbol; the square root of χ² has no meaning.

• χ² can never be less than zero. The squared term in the formula ensures that no negative values arise.

• χ² is concerned with comparisons of observed and expected frequencies (or counts).

We therefore only need a classification of data to allow such

counts and not the more stringent requirements of measurement.

• If there is a close correspondence between the observed and

expected frequencies, χ2 will tend to a low value attributable to

sampling error, suggesting the correctness of the null hypothesis.

• If the observed and expected frequencies are very different we

would expect a large positive value (not explicable by sampling

errors alone), which would suggest that we reject the null

hypothesis in favour of the alternative hypothesis.

13.2 Mann-Whitney U test

• This non-parametric test deals with two samples

that are independent and may be of different sizes.

• It is the non-parametric equivalent of the two-sample t-test that we considered in Chapter 12.

• Where the samples are small (<30) we need to use

tables of critical values (Appendix G) to find

whether or not to reject the null hypothesis; but

where the sample size is large, we can use a test

based on the Normal distribution.

• The basic premise of the test is that once all of the values in

the two samples are put into a single ordered list, if they

come from the same parent population, then the rank at

which values from Sample 1 and Sample 2 appear will be by

chance (at random).

• If the two samples come from different populations, then the

rank at which sample values appear will not be random and

there will be a tendency for values from one of the samples

to have lower ranks than values from the other sample.

• We are therefore testing whether the positioning of values

from the two samples in an ordered list follows the same

pattern or is different.

• While we will show how to conduct this test by hand

calculation, packages such as SPSS and MINITAB will perform

the test by using the appropriate commands.

13.2.1 Small sample test

• Consider the situation where samples have been

taken from two branches of a chain of stores.

• The samples relate to the daily takings and both

branches are situated in city centres.

• We wish to find if there is any difference in turnover

between the two branches.

• Branch 1: £235, £255, £355, £195, £244, £240,

£236, £259, £260

• Branch 2: £240, £198, £220, £215, £245

1. H0: the two samples come from the same population.

H1: the two samples come from different populations.

2. We will use a significance level of 5%.

3. To find the critical level for the test statistic, we look in

the tables (Appendix G) and locate the value from the

two sample sizes.

Here the sizes are 9 and 5, and so the critical value of

U is 8.

4. To calculate the value of the test statistic, we need to

rank all of the sample values, keeping a note of which

sample each value came from.

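| Rank | Value | Sample |
|---|---|---|
| 1 | 195 | 1 |
| 2 | 198 | 2 |
| 3 | 215 | 2 |
| 4 | 220 | 2 |
| 5 | 235 | 1 |
| 6 | 236 | 1 |
| 7.5 | 240 | 1 |
| 7.5 | 240 | 2 |
| 9 | 244 | 1 |
| 10 | 245 | 2 |
| 11 | 255 | 1 |
| 12 | 259 | 1 |
| 13 | 260 | 1 |
| 14 | 355 | 1 |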

• (Note that in the event of a tie in ranks, an average is

used.)

• We now sum the ranks for each sample:

Sum of ranks for Sample 1 = 78.5

Sum of ranks for Sample 2 = 26.5

• We select the smaller of these two, i.e. 26.5, and put it into the following formula:

$$U = S - \frac{n_1 (n_1 + 1)}{2} = 26.5 - \frac{5 \times 6}{2} = 11.5$$

• where S is the smaller sum of ranks, and n₁ is the number in the sample whose ranks we have summed.

5. 11.5 > 8.

6. Therefore we cannot reject H0 (the null hypothesis is rejected only when the calculated value is at or below the critical value).

7. Thus there is no evidence that the two samples come from different populations.
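The ranking and the calculation of the test statistic can be sketched as follows. The helper `average_ranks` is a name made up for this illustration, and the critical value of 8 is copied from the slides. The decision rule coded here is the usual one for this test: H0 is rejected only when U is at or below the tabulated value.

```python
def average_ranks(values):
    """Rank values from 1 upwards, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of equal values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # average of positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


branch1 = [235, 255, 355, 195, 244, 240, 236, 259, 260]
branch2 = [240, 198, 220, 215, 245]

ranks = average_ranks(branch1 + branch2)
rank_sum1 = sum(ranks[: len(branch1)])
rank_sum2 = sum(ranks[len(branch1):])

# Take the smaller rank sum together with the size of the sample it came from.
s, n = min((rank_sum1, len(branch1)), (rank_sum2, len(branch2)))
u = s - n * (n + 1) / 2

critical_value = 8  # from the slides (sample sizes 9 and 5, 5% level)
reject_h0 = u <= critical_value

print(rank_sum1, rank_sum2, u, reject_h0)
```

With the branch data this reproduces the rank sums 78.5 and 26.5 and the statistic 11.5, which lies above the critical value, so H0 is not rejected.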

13.2.2 Large sample test

• Consider the situation where the awareness of a

company's product has been measured amongst

groups of people in two different countries.

• Measurement is on a scale of 0-100, and each

group has given a score in this range; the scores are

shown below.

• Country A: 21, 34, 56, 45, 45, 58, 80, 32, 46, 50, 21,

11, 18, 89, 46, 39, 29,67, 75, 31, 48

• Country B: 68, 77, 51, 51, 64, 43, 41, 20, 44, 57, 60

• Test to discover whether the level of awareness is

the same in both countries.

1. H0: the levels of awareness are the same.

H1: the levels of awareness are different.

2. We will take a significance level of 5%.

3. Since we are using an approximation based on the

Normal distribution, the critical values will be ±1.96

for this two-sided test.

4. Ranking the values, we have:

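| Rank | Value | Sample | Rank | Value | Sample |
|---|---|---|---|---|---|
| 1 | 11 | A | 16.5 | 46 | A |
| 2 | 18 | A | 18 | 48 | A |
| 3 | 20 | B | 19 | 50 | A |
| 4.5 | 21 | A | 20.5 | 51 | B |
| 4.5 | 21 | A | 20.5 | 51 | B |
| 6 | 29 | A | 22 | 56 | A |
| 7 | 31 | A | 23 | 57 | B |
| 8 | 32 | A | 24 | 58 | A |
| 9 | 34 | A | 25 | 60 | B |
| 10 | 39 | A | 26 | 64 | B |
| 11 | 41 | B | 27 | 67 | A |
| 12 | 43 | B | 28 | 68 | B |
| 13 | 44 | B | 29 | 75 | A |
| 14.5 | 45 | A | 30 | 77 | B |
| 14.5 | 45 | A | 31 | 80 | A |
| 16.5 | 46 | A | 32 | 89 | A |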

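• Sum of ranks of A = 316, so with n₁ = 21 values in sample A:

$$U = S - \frac{n_1 (n_1 + 1)}{2} = 316 - \frac{21 \times 22}{2} = 85$$

• The approximation based on the Normal distribution requires the calculation of the mean and standard deviation as follows:

$$\text{Mean} = \frac{n_1 n_2}{2} = \frac{21 \times 11}{2} = 115.5$$

$$\text{Standard deviation} = \sqrt{\frac{n_1 n_2 (n_1 + n_2 + 1)}{12}} = \sqrt{\frac{21 \times 11 \times 33}{12}} = 25.20$$

• We can now calculate the z value which gives the number of standard errors from the mean and can be compared to the critical value from the Normal distribution.

$$z = \frac{85 - 115.5}{25.20} = -1.21$$

5. 1.21 < 1.96 (comparing the size of z with the critical value).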

6. Therefore we cannot reject the null hypothesis.

7. There appears to be no difference in the awareness of

the product between the two countries.
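The large-sample calculation can be checked with the short sketch below. The formulas for the mean and standard deviation are the standard Normal approximation for this test; they are an assumption in the sense that the slide's own formulas did not survive, but they do reproduce the z value of 1.21. The function name `mann_whitney_z` is made up for this illustration.

```python
from math import sqrt


def mann_whitney_z(rank_sum, n_ranked, n_other):
    """Normal approximation for the Mann-Whitney statistic.

    rank_sum is the sum of ranks of the sample containing n_ranked values;
    n_other is the size of the other sample.
    """
    u = rank_sum - n_ranked * (n_ranked + 1) / 2
    mean = n_ranked * n_other / 2
    sd = sqrt(n_ranked * n_other * (n_ranked + n_other + 1) / 12)
    return u, mean, sd, (u - mean) / sd


# Country A: 21 scores with a rank sum of 316; Country B: 11 scores.
u, mean, sd, z = mann_whitney_z(316, 21, 11)

# Two-sided test at the 5% level: reject H0 only if |z| exceeds 1.96.
reject_h0 = abs(z) > 1.96

print(u, mean, round(sd, 2), round(z, 2), reject_h0)
```

The sign of z depends only on which sample's ranks were summed; the two-sided comparison uses its size.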

13.3 Wilcoxon test

• This test is the non-parametric equivalent of the t-test for matched pairs and is used to identify if there has been a change in behaviour.

• This could be used when analysing a set of panel

results, where information is collected both before

and after some event (for example, an advertising

campaign) from the same people.

• Here the basic premise is that while there will be

changes in behaviour, or opinions, the ranking of

these changes will be random if there has been no

overall change (since the positive and negative

changes will cancel each other out).

• Where there has been an overall change, then the

ranking of those who have moved in a positive

direction will be different from the ranking of those

who have moved in a negative direction.

• As with the Mann-Whitney test, where the sample

size is small we shall need to consult tables to find

the critical value (Appendix H); but where the

sample size is large we can use a test based on the

Normal distribution.

• While we will show how to conduct this test by

hand calculation, again packages such as SPSS and

MINITAB will perform the test by using the

appropriate commands.

13.3.1 Small sample test

• Consider the situation where a small panel of eight

members have been asked about their perception

of a product before and after they have had an

opportunity to try it.

• Their perceptions have been measured on a scale,

and the results are given below.

• You have been asked to test if the favourable perception

(shown by a high score) has changed after trying the product.

1. H0: There is no difference in the perceptions.

H1: There is a difference in the perceptions.

2. We will take a significance level of 5%, although others could

be used.

3. The critical value is found from tables (Appendix H), using the number of pairs (8) minus the number of draws (1), so n = 8 - 1 = 7. Here the critical value is 2.

4. To calculate the test statistic we find the differences between the two scores and rank them by absolute size (i.e. ignoring the sign). Pairs with a zero difference (draws) are ignored, and tied absolute differences share the average of the ranks they occupy.

Before  After  Difference  Rank
8       9      +1          2
3       4      +1          2
6       4      -2          4
4       1      -3          5.5
5       6      +1          2
7       7       0          ignore
6       9      +3          5.5
7       2      -5          7

• Sum of ranks of positive differences = 11.5.

• Sum of ranks of negative differences = 16.5.

• We select the minimum of these (i.e. 11.5) as our

test statistic.

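5. 11.5 > 2.

6. Therefore we cannot reject the null hypothesis. (This may seem an unusual result, but you need to look carefully at the structure of the test: the statistic is the smaller of the two rank sums, so it is a small value, not a large one, that counts as evidence against H0.)

7. There is no evidence of a change in perception after trying the product.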
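The ranking in step 4 can be checked with a short sketch. It assumes the eight before/after scores as read from the table, drops the zero difference, and gives tied absolute differences their average rank. The helper name `signed_rank_sums` is illustrative, and the critical value of 2 is the one quoted from Appendix H in step 3.

```python
def signed_rank_sums(before, after):
    """Return (n, positive rank sum, negative rank sum) for paired scores.

    Zero differences are dropped; tied absolute differences share the
    average of the ranks they occupy.
    """
    diffs = [a - b for b, a in zip(before, after) if a != b]
    ordered = sorted(abs(d) for d in diffs)
    # First 1-based position of a value plus (count - 1)/2 is its average rank.
    ranks = [ordered.index(abs(d)) + 1 + (ordered.count(abs(d)) - 1) / 2
             for d in diffs]
    positive = sum(r for d, r in zip(diffs, ranks) if d > 0)
    negative = sum(r for d, r in zip(diffs, ranks) if d < 0)
    return len(diffs), positive, negative


# Scores as read from the table (one row per panel member).
before = [8, 3, 6, 4, 5, 7, 6, 7]
after = [9, 4, 4, 1, 6, 7, 9, 2]

n, positive, negative = signed_rank_sums(before, after)
statistic = min(positive, negative)

critical_value = 2  # from step 3 (tables, n = 7, 5% level)
# Small values of the statistic count against H0.
reject_h0 = statistic <= critical_value

print(n, positive, negative, statistic, reject_h0)
```

With these inputs the sketch gives n = 7, rank sums of 11.5 and 16.5, and a statistic of 11.5, which is above the critical value, so H0 is not rejected.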

13.3.2 Large sample test

• Consider the following example.

• A group of workers has been packing items into

boxes for some time and their productivity has been

noted.

• A training scheme is initiated and the workers'

productivity is noted again one month after the

completion of the training.

• The results are shown below.

1. H0: there has been no change in productivity.

H1: there has been a change in productivity.

2. We will use a significance level of 5%.

3. The critical value will be ±1.96, since the large

sample test is based on a Normal approximation.

4. To find the test statistic, we must rank the

differences, as shown below:

Person  Before  After  Difference  Rank
A       10      21      11         23.5
B       20      19      -1         5.5
C       30      30       0         ignore
D       25      26       1         5.5
E       27      21      -6         21
F       19      22       3         14.5
G       8       20      12         25
H       17      16      -1         5.5
I       14      25      11         23.5
J       18      16      -2         12
K       21      24       3         14.5
L       23      24       1         5.5
M       32      31      -1         5.5
N       40      41       1         5.5
O       21      25       4         17
P       11      16       5         19
Q       19      17      -2         12
R       27      25      -2         12
S       32      33       1         5.5
T       41      40      -1         5.5
U       33      39       6         21
V       18      22       4         17
W       25      24      -1         5.5
X       24      30       6         21
Y       16      12      -4         17

• (Note the treatment of ties in absolute values when ranking. Also note that n is now equal to 25.)

• Sum of positive ranks = 218

• Sum of negative ranks = 107 (minimum)

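• The mean is given by: $\mu_T = \frac{n(n+1)}{4} = \frac{25 \times 26}{4} = 162.5$

• The standard error is given by: $\sigma_T = \sqrt{\frac{n(n+1)(2n+1)}{24}} = \sqrt{\frac{25 \times 26 \times 51}{24}} \approx 37.17$

• Therefore the value of z is given by: $z = \frac{T - \mu_T}{\sigma_T} = \frac{107 - 162.5}{37.17} \approx -1.493$, where T is the smaller rank sum.

5. -1.96 < -1.493.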

6. Therefore we cannot reject the null hypothesis.

7. A month after the training there is no evidence of a change in the productivity of the workers.
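The large-sample calculation can be sketched in a few lines. The first part reproduces the figures used above (T = 107, n = 25). The second part is a cross-check under a different convention, which is an assumption and not the method shown on the slide: the zero difference for person C is dropped before ranking, as in the small-sample example, so that n = 24. The function names are illustrative.

```python
import math


def wilcoxon_z(t, n):
    """z value for the smaller rank sum t from n ranked pairs."""
    mean = n * (n + 1) / 4
    std_error = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    return (t - mean) / std_error


def signed_rank_sums(before, after):
    """Rank sums with zero differences dropped and ties given average ranks."""
    diffs = [a - b for b, a in zip(before, after) if a != b]
    ordered = sorted(abs(d) for d in diffs)
    ranks = [ordered.index(abs(d)) + 1 + (ordered.count(abs(d)) - 1) / 2
             for d in diffs]
    positive = sum(r for d, r in zip(diffs, ranks) if d > 0)
    negative = sum(r for d, r in zip(diffs, ranks) if d < 0)
    return len(diffs), positive, negative


# Part 1: figures as used in the worked example.
z_slide = wilcoxon_z(107, 25)
print(f"z (T = 107, n = 25) = {z_slide:.3f}")

# Part 2 (assumption): recompute from the raw scores, dropping the zero.
before = [10, 20, 30, 25, 27, 19, 8, 17, 14, 18, 21, 23, 32,
          40, 21, 11, 19, 27, 32, 41, 33, 18, 25, 24, 16]
after = [21, 19, 30, 26, 21, 22, 20, 16, 25, 16, 24, 24, 31,
         41, 25, 16, 17, 25, 33, 40, 39, 22, 24, 30, 12]
n_alt, positive, negative = signed_rank_sums(before, after)
z_alt = wilcoxon_z(min(positive, negative), n_alt)
print(f"n = {n_alt}, rank sums = {positive}, {negative}, z = {z_alt:.3f}")
```

Under the alternative convention the rank sums become 206 and 94 and z is -1.6. Both versions lie inside ±1.96, so the decision in step 6 is the same either way.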

13.4 Runs test

• This is a test for randomness in a dichotomized variable, for

example gender is either male or female.

• The basic assumption is that if gender is unimportant then

the sequence of occurrence will be random and there will be

no long runs of either male or female in the data.

• Care needs to be taken over the order of the data when using

this test, since if this is changed it will affect the result.

• The sequence could be chronological, for example the date

on which someone was appointed if you were checking on a

claimed equal opportunity policy.

• The runs test is also used in the development of statistical

theory, for example looking at residuals in time series

analysis.

• While we will show how to conduct this test by hand

calculation, packages such as SPSS and MINITAB will perform

the test by using the appropriate commands.

Example

• With equal numbers of men and women employed

in a department there have been claims that there

is discrimination in choosing who should attend

conferences. During April, May and June of last year

the gender of those going to conferences was noted

and is shown below.

1. H0: there is no pattern in the data (i.e. random

order).

H1: there is a pattern in the data.

2. We will use a significance level of 5%.

3. Critical values are found from tables (Appendix I).

Here, since there are six men and five women and

we are conducting a two-tailed test, we can find

the values of 3 and 10.

4. We now find the number of runs in the data:

Date             Person attending  Run no.
April 10         Male              1
April 12         Female            2
April 16         Female            2
April 25         Male              3
May 10           Male              3
May 14           Male              3
May 16           Male              3
June 2           Female            4
June 10          Female            4
June 14          Male              5
(date missing)   Female            6

• Note that a run may constitute just a single

occurrence (as in run 5) or may be a series of values

(as in run 3).

• The total number of runs is 6.

5. 3 < 6 < 10.

6. Therefore we cannot reject the null hypothesis.

7. There is no evidence that there is discrimination

in the order in which people are chosen to attend

conferences.
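Counting runs is easy to automate, as the sketch below suggests. The sequence is an assumption reconstructed from the example: the ten dated entries, plus a final female attendee, which is what the stated totals (six men, five women, six runs) imply. The critical values 3 and 10 are those quoted in step 3, and the rejection rule mirrors the strict inequalities in step 5.

```python
from itertools import groupby

# Attendance in date order. The last "F" is an assumption needed to match
# the stated totals of six men, five women and six runs.
attendees = ["M", "F", "F", "M", "M", "M", "M", "F", "F", "M", "F"]


def count_runs(sequence):
    """Number of maximal blocks of identical consecutive values."""
    return sum(1 for _ in groupby(sequence))


runs = count_runs(attendees)
n_male = attendees.count("M")
n_female = attendees.count("F")

lower, upper = 3, 10  # critical values from step 3 (Appendix I)
# H0 is retained when lower < runs < upper, as in step 5.
reject_h0 = runs <= lower or runs >= upper

print(n_male, n_female, runs, reject_h0)
```

Because the test depends on the order of the data, re-sorting `attendees` before counting would change the number of runs and could change the decision.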

• Many of the tests which we have considered use

the ranking of values as a basis for deciding

whether or not to reject the null hypothesis, and

this means that we can use non-parametric tests

where only ordinal data is available.

• Such tests do not suggest that the parameters (e.g. the mean and variance) are unimportant, but that we do not need to know the underlying distribution in order to carry out the test.

• They are also called distribution-free tests.

• In general, non-parametric tests are less efficient

than parametric tests (since they require larger

samples to achieve the same level of Type II error).

• However, we can apply non-parametric tests to a

wide range of applications and in many cases they

may be the only type of test available.

• This chapter has only considered a few of the many

non-parametric tests but should have given you an

understanding of how they differ from the more

common, parametric tests and how they can be

constructed.

Part 4 Conclusions

• This part of the book has considered the ideas of generalizing

the results of a survey to the whole population, and the

testing of assertions about that population on the basis of

sample data.

• Both of these concepts are fundamental to the use of

statistics in making business decisions.

• They are essential if you want to take a set of sample results, say from market testing a new product, and make predictions about its likely sales success, or otherwise.

• They need to be used in quality control systems which

monitor items of production (or service) and compare them

to set levels (derived from confidence intervals).

• We saw in Part 1 that sampling was necessary in order to

report meaningful results in a timely fashion.

• Now we have a tool which can take these results and

generalize them to the parent population.

• As we already knew, some data sets cannot be

adequately described by statistical parameters such

as the mean and standard deviation.

• In these cases we are still able to test assertions

about the population from the sample results by

using non-parametric tests.

• In much survey research, the chi-squared test is the

most widely used test since the ideas and attitudes

being measured do not use a cardinal scale.

• There will always be some dispute about the

meaning of testing results and a formal mechanism

such as the hypothesis test is not going to

completely dispel this.

• What it can do, however, is give some agreement on the method used for testing whilst allowing much more discussion of the hypotheses, the means of deriving the sample, and the ways of interpreting the result.

• This might even lead to more considered decisions

being taken!