Cloud Cyberinfrastructure and its Challenges & Applications

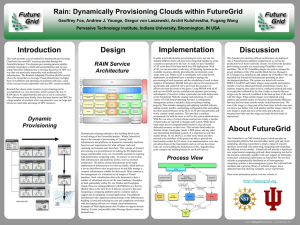

9th International Conference on Parallel Processing and Applied Mathematics (PPAM 2011)
Torun, Poland, September 14, 2011
Geoffrey Fox
gcf@indiana.edu
http://www.infomall.org http://www.salsahpc.org
Director, Digital Science Center, Pervasive Technology Institute
Associate Dean for Research and Graduate Studies, School of Informatics and Computing
Indiana University Bloomington
Work with Judy Qiu and many students
https://portal.futuregrid.org

Some Trends
• The Data Deluge is a clear trend from Commercial (Amazon, transactions), Community (Facebook, Search) and Scientific applications
• Exascale initiatives will continue the drive to the high end with a simulation orientation
• Clouds offer, from different points of view:
  – NIST: On-demand service (elastic); Broad network access; Resource pooling; Flexible resource allocation; Measured service
  – Economies of scale
  – Powerful new software models

Why need cost effective Computing!
(Note: Public Clouds not allowed for human genomes)

Cloud Issues for Genomics
• Operating cost of a large shared (public) cloud is ~20% that of a traditional cluster
• Gene sequencing cost is decreasing much faster than Moore’s law
• Much biomedical computing does not need the low cost (microsecond) synchronization of an HPC Cluster
  – Amazon is a factor of 6 less effective on HPC workloads than a state of the art HPC cluster
  – i.e.
    Clouds work for biomedical applications if we can make them convenient and address privacy and trust
• Current research infrastructure like TeraGrid is pretty inconsistent with cloud ideas
• Software as a Service is likely to be the dominant usage model
  – Paid by “credit card” whether commercial, government or academic
  – “Standard” services like BLAST plus services with your software
• Standards are needed for many reasons and there is significant activity here, including the IEEE/NIST effort
  – Rich cloud platforms make this hard, but infrastructure level standards like OCCI (Open Cloud Computing Interface) are emerging
  – We are still developing many new ideas (such as new ways of handling large data) so some standards are premature
• Communication performance: this issue will be solved if we bring computing to data

Clouds and Grids/HPC
• Synchronization/communication Performance: Grids > Clouds > HPC Systems
• Clouds appear to execute Grid workloads effectively but are not easily used for closely coupled HPC applications
• Service Oriented Architectures and workflow appear to work similarly in both grids and clouds
• Assume that, for the immediate future, science is supported by a mixture of
  – Clouds
  – Grids/High Throughput Systems (moving to clouds as convenient)
  – Supercomputers (“MPI Engines”) going to exascale

Clouds and Jobs
• Clouds are a major industry thrust with a growing fraction of IT expenditure: IDC estimates that direct investment will grow to $44.2 billion in 2013, while 15% of IT investment in 2011 will be related to cloud systems, with 30% growth in the public sector.
• Gartner also rates cloud computing high on its list of critical emerging technologies, with for example “Cloud Computing” and “Cloud Web Platforms” rated as transformational (their highest rating for impact) in the next 2-5 years.
• Correspondingly there are, and will continue to be, major opportunities for new jobs in cloud computing, with a recent European study estimating there will be 2.4 million new cloud computing jobs in Europe alone by 2015.
• Cloud computing spans research and the economy and so is an attractive component of the curriculum for students that mix “going on to PhD” or “graduating and working in industry” (as at Indiana University, where most CS Masters students go to industry)

2 Aspects of Cloud Computing: Infrastructure and Runtimes
• Cloud infrastructure: outsourcing of servers, computing, data, file space, utility computing, etc.
• Cloud runtimes or Platform: tools to do data-parallel (and other) computations. Valid on Clouds and traditional clusters
  – Apache Hadoop, Google MapReduce, Microsoft Dryad, Bigtable, Chubby and others
  – MapReduce was designed for information retrieval but is excellent for a wide range of science data analysis applications
  – Can also do much traditional parallel computing for data-mining if extended to support iterative operations
  – Data Parallel File system as in HDFS and Bigtable

Guiding Principles
• Clouds may not be suitable for everything but they are suitable for the majority of data intensive applications
  – Solving partial differential equations on 100,000 cores probably needs classic MPI engines
• Cost effectiveness, elasticity and a quality programming model will drive use of clouds in many areas such as genomics
• Need to solve issues of
  – Security-privacy-trust for sensitive data
  – How to store data: “data parallel file systems” (HDFS), Object Stores, or the classic HPC approach with shared file systems such as Lustre
• Programming model, which is likely to be MapReduce based
  – Look at high level languages
  – Compare with databases (SciDB?)
  – Must support iteration to do “real parallel computing”
  – Need Cloud-HPC Cluster Interoperability

MapReduce “File/Data Repository” Parallelism
• Map = (data parallel) computation reading and writing data
• Reduce = Collective/Consolidation phase, e.g. forming multiple global sums as in a histogram
[Figure: Instruments and Disks feed Map1, Map2 and Map3 tasks followed by Reduce, with results going to Portals/Users; other labels: MPI or Communication, Iterative MapReduce]

Application Classification: MapReduce and MPI
(a) Map Only: BLAST Analysis; Smith-Waterman Distances; Parametric sweeps; PolarGrid Matlab data analysis
(b) Classic MapReduce: High Energy Physics (HEP) Histograms; Distributed search; Distributed sorting; Information retrieval
(c) Iterative MapReduce: Expectation maximization clustering, e.g. Kmeans; Linear Algebra; Multidimensional Scaling; Page Rank
(d) Loosely Synchronous: Many MPI scientific applications such as solving differential equations and particle dynamics
Categories (a) to (c) are the domain of MapReduce and Iterative Extensions; category (d) is the domain of MPI.

High Energy Physics Data Analysis
An application analyzing data from the Large Hadron Collider (1 TB now but 100 Petabytes eventually)
• Input to a map task: <key, value>
  – key = Some Id
  – value = HEP file name
• Output of a map task: <key, value>
  – key = random # (0 <= num <= max reduce tasks)
  – value = Histogram as binary data
• Input to a reduce task: <key, List<value>>
  – key = random # (0 <= num <= max reduce tasks)
  – value = List of histograms as binary data
• Output from a reduce task: value
  – value = Histogram file
• Combine outputs from reduce tasks to form the final histogram

CAP3 Sequence Assembly Performance
[Figure: performance chart]

MapReduceRoles4Azure Architecture
Azure Queues for scheduling, Tables to store meta-data and monitoring data, Blobs for input/output/intermediate data storage.

MapReduceRoles4Azure
• Use distributed, highly scalable and highly available cloud services as the building blocks.
  – Azure Queues for task scheduling.
  – Azure Blob storage for input, output and intermediate data storage.
  – Azure Tables for meta-data storage and monitoring
• Utilize eventually-consistent, high-latency cloud services effectively to deliver performance comparable to traditional MapReduce runtimes.
• Minimal management and maintenance overhead
• Supports dynamically scaling the compute resources up and down.
• MapReduce fault tolerance
• http://salsahpc.indiana.edu/mapreduceroles4azure/

SWG Sequence Alignment Performance
Smith-Waterman-GOTOH to calculate all-pairs dissimilarity
[Figure: performance chart]

Twister v0.9
March 15, 2011
New Interfaces for Iterative MapReduce Programming
http://www.iterativemapreduce.org/
SALSA Group
• Bingjing Zhang, Yang Ruan, Tak-Lon Wu, Judy Qiu, Adam Hughes, Geoffrey Fox, Applying Twister to Scientific Applications, Proceedings of IEEE CloudCom 2010 Conference, Indianapolis, November 30-December 3, 2010
• Twister4Azure released May 2011: http://salsahpc.indiana.edu/twister4azure/
• MapReduceRoles4Azure available for some time at http://salsahpc.indiana.edu/mapreduceroles4azure/

K-Means Clustering
• map: Compute the distance to each data point from each cluster center and assign points to cluster centers
• reduce: Compute new cluster centers
• User program: Compute new cluster centers
• Iteratively refining operation
• Typical MapReduce runtimes incur extremely high overheads
  – New maps/reducers/vertices in every iteration
  – File system based communication
• Long running tasks and faster communication in Twister enable it to perform close to MPI
[Figure: Time for 20 iterations]

Twister
[Figure: a User Program and MR Driver communicate through a Pub/Sub Broker Network with Worker Nodes, each running an MRDeamon (D) with Map Workers (M) and Reduce Workers (R); legend: Data Split, Data Read/Write, File System, Communication, Static data]
• Streaming based communication
• Intermediate results are directly transferred from the map tasks to the reduce tasks, which eliminates local files
• Cacheable map/reduce tasks
  – Static data remains in memory
• Combine phase to combine reductions
• User Program is the composer of MapReduce computations
• Extends the MapReduce model to iterative computations
[Figure: the User Program calls Configure(), then iterates Map(Key, Value), Reduce(Key, List<Value>) and Combine(Key, List<Value>) with δ flow, then calls Close(); caption: Different synchronization and intercommunication mechanisms used by the parallel runtimes]

Performance of Pagerank using ClueWeb Data (Time for 20 iterations) using 32 nodes (256 CPU cores) of Crevasse
[Figure: performance chart]

High Level Flow Twister4Azure
[Figure: Job Start, then Map and Combine tasks, Reduce, Merge, and an "Add Iteration?" decision; Yes leads to hybrid scheduling of the new iteration using the Data Cache, No leads to Job Finish]
• Merge Step
• In-Memory Caching of static data
• Cache aware hybrid scheduling using Queues as well as using a bulletin board (special table)

BLAST Sequence Search
[Figure: Parallel Efficiency against Number of Query Files (128 to 728) for Twister4Azure, Hadoop-Blast and DryadLINQ-Blast]

Smith Waterman Sequence Alignment
[Figure: Adjusted Time (s) against Num. of Cores * Num. of Blocks for Twister4Azure, Amazon EMR and Apache Hadoop]

Cap3 Sequence Assembly
[Figure: Parallel Efficiency against Num. of Cores * Num. of Files for Twister4Azure, Amazon EMR and Apache Hadoop]

Performance with/without data caching
[Figures: Speedup gained using data cache; Scaling speedup; Increasing number of iterations]

Cache aware scheduling
• New Job (1st iteration)
  – Through queues
• New iteration
  – Publish entry to Job Bulletin Board
  – Workers pick tasks based on in-memory data cache and execution history (MapTask Meta-Data cache)
  – Any tasks that do not get scheduled through the bulletin board will be added to the queue.
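
The cache aware scheduling steps above can be illustrated with a small, single-process Python sketch. It is not Twister4Azure code: the `Worker` class, the round-robin queue and the function names are hypothetical stand-ins for Azure Queues, the Job Bulletin Board table and the MapTask Meta-Data cache, and it only shows the decision logic (queue for the first iteration, bulletin board with cache affinity afterwards, queue as fallback).

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Worker:
    """Hypothetical worker with an in-memory cache of static data splits."""
    name: str
    cached_splits: set = field(default_factory=set)


def drain_queue(queue, workers, assignment):
    """Hand queued tasks to workers round-robin; each worker caches what it loads."""
    turn = 0
    while queue:
        split = queue.popleft()
        worker = workers[turn % len(workers)]
        worker.cached_splits.add(split)
        assignment[split] = worker.name
        turn += 1


def schedule_first_iteration(splits, workers):
    """New job (1st iteration): every map task goes through the queue."""
    assignment = {}
    drain_queue(deque(splits), workers, assignment)
    return assignment


def schedule_next_iteration(splits, workers):
    """New iteration: publish to the bulletin board, then fall back to the queue."""
    bulletin_board = set(splits)
    assignment = {}
    for worker in workers:
        # A worker claims only the tasks whose static data it already caches.
        for split in sorted(worker.cached_splits & bulletin_board):
            assignment[split] = worker.name
            bulletin_board.discard(split)
    # Tasks nobody claimed from the bulletin board are added to the queue.
    drain_queue(deque(sorted(bulletin_board)), workers, assignment)
    return assignment


if __name__ == "__main__":
    splits = ["split-0", "split-1", "split-2", "split-3"]
    workers = [Worker("w0"), Worker("w1")]
    first = schedule_first_iteration(splits, workers)
    # Simulate losing w1: only w0 (and its cache) remains for the next iteration.
    second = schedule_next_iteration(splits, [workers[0]])
    print(first)
    print(second)
```

In this sketch a stable set of workers gets the same assignment in every iteration, so static data is never reloaded; when a worker disappears, its tasks fall through to the queue and are picked up (and cached) by the remaining workers.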
Visualizing Metagenomics
• Multidimensional Scaling (MDS) is a natural way to map sequences to 3D so you can visualize them
• Minimize Stress
• Improve with deterministic annealing (gives lower stress with less variation between random starts)
• Need to iterate Expectation Maximization
• N^2 pairwise dissimilarities between sequences i and j (Needleman-Wunsch)
• Communicate N positions X between steps

100,043 Metagenomics Sequences
Aim to do 10 million by end of year
[Figure]

Multi-Dimensional-Scaling
• Many iterations
• Memory & Data intensive
• 3 Map Reduce jobs per iteration
• X(k) = invV * B(X(k-1)) * X(k-1)
• 2 matrix vector multiplications termed BC and X
[Figure: each new iteration runs three Map, Reduce, Merge jobs in turn: BC (Calculate BX), X (Calculate invV (BX)) and Calculate Stress]

MDS Execution Time Histogram
[Figure: Map Task Execution Time (s) against Map Task for the Initialization, BC Calculation MR, X Calculation MR and Stress Calculation MR phases; 10 iterations, 30000 * 30000 data points, 15 Azure Instances]

MDS Executing Task Histogram
[Figure: Executing Map Tasks against Time Since Job Start (s) for the Initialization, BC Calculation, X Calculation and Stress Calculation phases; 10 iterations, 30000 * 30000 data points, 15 Azure Instances]

MDS Performance
# Instances   Speedup
6             6
12            16.4
24            35.3
48            52.8
Probably super linear as used small instances
[Figure: Execution Time and Time Per Iteration against Number of Iterations; 30,000 * 30,000 data points, 15 instances, 3 MR steps per iteration, 30 Map tasks per application]

Twister4Azure Conclusions
• Twister4Azure enables users to easily and efficiently perform large scale iterative data analysis and scientific computations on Azure cloud.
  – Supports classic and iterative MapReduce
  – First non pleasingly parallel use of Azure?
• Utilizes a hybrid scheduling mechanism to provide the caching of static data across iterations.
• Can integrate with Trident and other workflow systems
• Plenty of testing and improvements needed!
• Open source: Please use http://salsahpc.indiana.edu/twister4azure

Map Collective Model
• Combine MPI and MapReduce ideas
• Implement collectives optimally on Infiniband, Azure, Amazon ……
[Figure: Input, then an iterated loop of map, Initial Collective Step (Network of Brokers), Generalized Reduce and Final Collective Step (Network of Brokers)]

Research Issues for (Iterative) MapReduce
• Quantify and extend the observation that data analysis for science seems to work well on Iterative MapReduce and clouds so far.
  – Iterative MapReduce (Map Collective) spans all architectures as a unifying idea
• Performance and Fault Tolerance Trade-offs
  – Writing to disk each iteration (as in Hadoop) naturally lowers performance but increases fault-tolerance
  – Integration of GPUs
• Security and Privacy technology and policy are essential for use in many biomedical applications
• Storage: multi-user data parallel file systems have scheduling and management issues
  – NOSQL and SciDB on virtualized and HPC systems
• Data parallel data analysis languages: Sawzall and Pig Latin more successful than HPF?
• Scheduling: How does research here fit into the scheduling built into clouds and Iterative MapReduce (Hadoop)?
  – Important load balancing issues for MapReduce for heterogeneous workloads

• Authentication and Authorization: Provide single sign in to all system architectures
• Workflow: Support workflows that link job components between Grids and Clouds.
• Provenance: Continues to be critical to record all processing and data sources
• Data Transport: Transport data between job components on Grids and Commercial Clouds respecting custom storage patterns like Lustre v HDFS
• Program Library: Store Images and other Program material
• Blob: Basic storage concept similar to Azure Blob or Amazon S3
• DPFS Data Parallel File System: Support of file systems like Google (MapReduce), HDFS (Hadoop) or Cosmos (Dryad) with compute-data affinity optimized for data processing
• Table: Support of Table Data structures modeled on Apache HBase/CouchDB or Amazon SimpleDB/Azure Table. There are “Big” and “Little” tables, generally NOSQL
• SQL: Relational Database
• Queues: Publish Subscribe based queuing system
• Worker Role: This concept is implicitly used in both Amazon and TeraGrid but was (first) introduced as a high level construct by Azure. Naturally supports Elastic Utility Computing
• MapReduce: Support the MapReduce Programming model including Hadoop on Linux, Dryad on Windows HPCS and Twister on Windows and Linux. Need Iteration for Datamining
• Software as a Service: This concept is shared between Clouds and Grids
• Web Role: This is used in Azure to describe the user interface and can be supported by portals in Grid or HPC systems
The items above are the Components of a Scientific Computing Platform.

Traditional File System?
[Figure: a Data Archive feeds Storage Nodes (S), which serve the compute nodes (C) of a Compute Cluster]
• Typically a shared file system (Lustre, NFS …) is used to support high performance computing
• Big advantages in flexible computing on shared data but doesn’t “bring computing to data”

Data Parallel File System?
[Figure: File1 is broken up into Block1, Block2, …, BlockN; each block is replicated across nodes that hold both data and compute (Data, C)]
• No archival storage and computing brought to data

Trustworthy Cloud Computing
• Public Clouds are elastic (can be scaled up and down) as they are large and shared
  – Sharing implies privacy and security concerns; need to learn how to use shared facilities
• Private clouds are not easy to make elastic or cost effective (as too small)
  – Need to support public (aka shared) and private clouds
• “Amazon is 100X more secure than your infrastructure” (Bio-IT Boston, April 2011)
  – But how do we establish this trust?
• “Amazon is more or less useless as NIH will only let us run 20% of our genomic data on it so not worth the effort to port software to cloud” (Bio-IT Boston)
  – Need to establish trust

Trustworthy Cloud Approaches
• Rich access control with roles and sensitivity to combined datasets
• Anonymization & Differential Privacy: defend against sophisticated datamining and establish trust that it can
• Secure environments (systems) such as Amazon Virtual Private Cloud: defend against sophisticated attacks and establish trust that it can
• Application specific approaches such as database privacy
• Hierarchical algorithms where sensitive computations need modest computing on non-shared resources

US Cyberinfrastructure Context
• There is a rich set of facilities
  – Production XSEDE (used to be called TeraGrid) facilities with distributed and shared memory
  – Experimental “Track 2D” Awards
    • FutureGrid: Distributed Systems experiments cf.
      Grid5000
    • Keeneland: Powerful GPU Cluster
    • Gordon: Large (distributed) Shared memory system with SSD aimed at data analysis/visualization
  – Open Science Grid aimed at High Throughput computing and strong campus bridging

FutureGrid key Concepts I
• FutureGrid is an international testbed modeled on Grid5000
• Supporting international Computer Science and Computational Science research in cloud, grid and parallel computing (HPC)
  – Industry and Academia
  – Note much of current use is Education, Computer Science Systems and Biology/Bioinformatics
• The FutureGrid testbed provides to its users:
  – A flexible development and testing platform for middleware and application users looking at interoperability, functionality, performance or evaluation
  – Each use of FutureGrid is an experiment that is reproducible
  – A rich education and teaching platform for advanced cyberinfrastructure (computer science) classes

FutureGrid key Concepts II
• Rather than loading images onto VMs, FutureGrid supports Cloud, Grid and Parallel computing environments by dynamically provisioning software as needed onto “bare-metal” using Moab/xCAT
  – Image library for MPI, OpenMP, Hadoop, Dryad, gLite, Unicore, Globus, Xen, ScaleMP (distributed Shared Memory), Nimbus, Eucalyptus, OpenNebula, KVM, Windows …..
• Growth comes from users depositing novel images in the library
• FutureGrid has ~4000 (will grow to ~5000) distributed cores with a dedicated network and a Spirent XGEM network fault and delay generator
[Figure: Choose an image (Image1, Image2, …, ImageN), then Load, then Run]

FutureGrid: a Grid/Cloud/HPC Testbed
[Figure: FutureGrid sites on the Private FG Network and the Public network, with a NID (Network Impairment Device). Site labels as extracted: 11TF IU 1024 cores IBM; 4TF IU 192 cores, 12 TB Disk, 192 GB mem, GPU on 8 nodes; 6TF IU 672 cores Cray XT5M; 8TF TACC 768 cores Dell; 7TF SDSC 672 cores IBM; 2TF Florida 256 cores IBM; 7TF Chicago 672 cores IBM]

FutureGrid Partners
• Indiana University (Architecture, core software, Support)
• Purdue University (HTC Hardware)
• San Diego Supercomputer Center at University of California San Diego (INCA, Monitoring)
• University of Chicago/Argonne National Labs (Nimbus)
• University of Florida (ViNE, Education and Outreach)
• University of Southern California Information Sciences (Pegasus to manage experiments)
• University of Tennessee Knoxville (Benchmarking)
• University of Texas at Austin/Texas Advanced Computing Center (Portal)
• University of Virginia (OGF, Advisory Board and allocation)
• Center for Information Services and GWT-TUD from Technische Universität Dresden (VAMPIR)
• Red institutions have FutureGrid hardware

5 Use Types for FutureGrid
• ~122 approved projects over last 10 months
• Training Education and Outreach (11%)
  – Semester and short events; promising for non research intensive universities
• Interoperability test-beds (3%)
  – Grids and Clouds; Standards; Open Grid Forum OGF really needs
• Domain Science applications (34%)
  – Life sciences highlighted (17%)
• Computer science (41%)
  – Largest current category
• Computer Systems Evaluation (29%)
  – TeraGrid (TIS, TAS, XSEDE), OSG, EGI, Campuses
• Clouds are meant to need less support than other models; FutureGrid needs more user support …….

Software Components
• Portals including “Support”, “use FutureGrid”, “Outreach”
• Monitoring: INCA, Power (GreenIT)
• Experiment Manager: specify/workflow
• Image Generation and Repository
• Intercloud Networking ViNE
• Virtual Clusters built with virtual networks
• Performance library
• Rain or Runtime Adaptable InsertioN Service for images
• Security Authentication, Authorization,
[Figure labels: “Research”; Above and below; Nimbus; OpenStack; Eucalyptus]