Solutions to TSP using Convex Hull

Obtaining a Solution to TSP using Convex Hulls

Eric Salmon & Joseph Sewell

Traveling Salesman Problem

Distances between n cities are stored in a distance matrix D with elements $d_{ij}$, where $i, j = 1, \ldots, n$ and the diagonal elements $d_{ii}$ are zero. A tour can be represented by a cyclic permutation π of {1, 2, …, n}, where π(i) represents the city that follows city i on the tour. The traveling salesman problem is then the optimization problem to find a permutation π that minimizes the length of the tour, denoted by:

$$\sum_{i=1}^{n} d_{i\,\pi(i)}$$

Hahsler & Hornik (Journal of Statistical Software, 2007 V. 23 I. 22)
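The objective above can be evaluated directly. This is a minimal Python sketch that assumes 0-based city labels, a list-of-lists distance matrix `D`, and the successor encoding from the definition (`succ[i]` plays the role of π(i)). The function name `tour_length` is illustrative.

```python
def tour_length(D, succ):
    """Return sum_i D[i][succ[i]], where succ[i] is the city after city i.

    Raises ValueError if succ is not a single cycle through all n cities,
    i.e. not a cyclic permutation.
    """
    n = len(D)
    seen, city = set(), 0
    for _ in range(n):          # follow the tour n steps from city 0
        seen.add(city)
        city = succ[city]
    if city != 0 or len(seen) != n:
        raise ValueError("succ is not a single cycle through all cities")
    return sum(D[i][succ[i]] for i in range(n))
```

For example, the tour 0 → 2 → 3 → 1 → 0 is encoded as `succ = [2, 0, 3, 1]`.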

Convex Hull

• For any subset of the plane (set of points, rectangle, simple polygon), its convex hull is the smallest convex set that contains that subset.

• Given a set of N points, the convex hull is the bounded region enclosed by the smallest convex polygon that contains all N points; the vertices of that polygon are a subset of the points.

Convex Hull - Graham's Scan Algorithm

• The first step in this algorithm is to find the point with the lowest y-coordinate. If the lowest y-coordinate exists in more than one point in the set, the point with the lowest x-coordinate out of the candidates should be chosen. Call this point P. This step takes O(n), where n is the number of points in question.

• Next, the set of points must be sorted in increasing order of the angle they and the point P make with the x-axis. Any general-purpose sorting algorithm is appropriate for this, for example heapsort (which is O(n log n)).

• The algorithm proceeds by considering each of the points in the sorted array in sequence. For each point, it is determined whether moving from the two previously considered points to this point is a "left turn" or a "right turn". If it is a "right turn", this means that the second-to-last point is not part of the convex hull and should be removed from consideration. This process is continued for as long as the set of the last three points is a "right turn". As soon as a "left turn" is encountered, the algorithm moves on to the next point in the sorted array. (If at any stage the three points are collinear, one may opt either to discard or to report it, since in some applications it is required to find all points on the boundary of the convex hull.)
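The three steps above can be sketched in Python as follows. This sketch assumes points given as (x, y) tuples with exact (for example integer) coordinates. Instead of computing angles with floating-point trigonometry, it sorts with a cross-product comparator. That is equivalent here because every other point lies at a polar angle in [0, π) from P. Collinear points are discarded, which is one of the two options mentioned above. The names `cross` and `graham_scan` are illustrative.

```python
from functools import cmp_to_key


def cross(o, a, b):
    """z-component of (a - o) x (b - o): > 0 for a left turn o -> a -> b,
    < 0 for a right turn, 0 if the three points are collinear."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def graham_scan(points):
    """Return the convex hull vertices in counterclockwise order, starting at P."""
    pts = list(set(points))              # drop duplicate points
    if len(pts) < 3:
        return pts
    # Step 1: P = lowest y-coordinate, ties broken by lowest x-coordinate.
    p = min(pts, key=lambda q: (q[1], q[0]))

    def dist2(q):
        return (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2

    def by_angle(a, b):
        # Step 2 ordering: smaller polar angle around P first; for points on
        # the same ray from P, the nearer one first.
        c = cross(p, a, b)
        if c != 0:
            return -1 if c > 0 else 1
        return -1 if dist2(a) < dist2(b) else 1

    others = sorted((q for q in pts if q != p), key=cmp_to_key(by_angle))
    # Step 3: pop the last hull point while the last three make a right turn
    # (or are collinear, so collinear boundary points are discarded).
    hull = [p]
    for q in others:
        while len(hull) >= 2 and cross(hull[-2], hull[-1], q) <= 0:
            hull.pop()
        hull.append(q)
    return hull
```

For example, `graham_scan([(0, 0), (2, 0), (2, 2), (0, 2), (1, 1), (0, 1)])` returns `[(0, 0), (2, 0), (2, 2), (0, 2)]`. The interior point and the collinear edge point are dropped.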

Partial ordering from convex hull

• It has been shown that if the costs $c_{ij}$ between the nodes in 2-dimensional space represent Euclidean distances and H is the convex hull, then the nodes on the boundary of H appear in the optimal tour in the same order in which they appear on H.*

• *(Eilon, Watson-Gandy & Christofides, 1971).

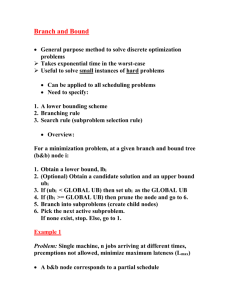
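The ordering property can be checked for any candidate tour. This minimal Python sketch assumes integer city labels and assumes that `hull` lists the hull cities in boundary order, such as the output of a Graham scan. The name `respects_hull_order` is hypothetical. The check accepts either direction around the hull, because a tour and its reverse have the same length under symmetric distances.

```python
def respects_hull_order(tour, hull):
    """True if the hull cities appear in `tour` in the cyclic order of `hull`,
    in either direction; interior cities may sit anywhere in between."""
    if not hull:
        return True
    hull_set = set(hull)
    seen = [c for c in tour if c in hull_set]    # hull cities in tour order
    h = len(hull)
    if len(seen) != h:
        return False
    k = hull.index(seen[0])                      # align with the hull's cycle
    forward = [hull[(k + i) % h] for i in range(h)]
    backward = [hull[(k - i) % h] for i in range(h)]
    return seen == forward or seen == backward
```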

Branch & Bound

• An algorithm design paradigm for optimization problems.

• Enumerates candidate solutions

• Partial candidate solutions are child nodes of the root

• Before enumerating a child node, its branch is checked against upper/lower bounds on the optimal solution

• In the case of TSP, the value checked is the total distance up to that node

• If this value is greater than the bound, discard the entire branch

• Adding more cities can never decrease the total distance

• Continue enumeration through tree until solution found
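The enumeration and pruning described above can be sketched as a depth-first search in Python. This sketch assumes a list-of-lists matrix of non-negative distances and uses city 0 as the fixed start. The only bound it uses is the length of the best complete tour found so far. The name `tsp_branch_and_bound` is illustrative.

```python
import math


def tsp_branch_and_bound(dist):
    """Exact TSP by depth-first branch and bound, with city 0 as the fixed start.
    dist: n x n matrix of non-negative distances (list of lists)."""
    n = len(dist)
    best_len, best_tour = math.inf, None

    def extend(tour, cost, unvisited):
        nonlocal best_len, best_tour
        # Bound: distances are non-negative, so no extension of this partial
        # tour can be shorter than the best complete tour already found.
        if cost >= best_len:
            return
        if not unvisited:
            total = cost + dist[tour[-1]][tour[0]]   # return to the start
            if total < best_len:
                best_len, best_tour = total, list(tour)
            return
        for city in sorted(unvisited):               # branch on the next city
            tour.append(city)
            unvisited.remove(city)
            extend(tour, cost + dist[tour[-2]][city], unvisited)
            unvisited.add(city)
            tour.pop()

    extend([0], 0, set(range(1, n)))
    return best_len, best_tour
```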

Convex Partial Ordering and Branch & Bound

• The convex hull C defines a partial ordering of the cities on the shortest tour. We discard any branch of tours that violates this partial ordering, and also † maintain a record of the shortest tour length encountered so far. If adding a city to a partial tour would exceed this length, we discard the branch.

• This reduces the search space to h*((n-h)+1)! tours, where h is the number of cities on the hull.

• † Graham scan: http://www.math.ucsd.edu/~ronspubs/72_10_convex_hull.pdf

Minimum Spanning Tree

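Given a connected, undirected graph, a spanning tree of that graph is a subgraph that is a tree and connects all the vertices together. A minimum spanning tree (MST) is a spanning tree whose total edge weight is as small as possible.

Speedy preprocessing for further computation:

Kruskal's algorithm: O(E log V)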
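Kruskal's algorithm can be sketched in Python with a union-find structure. This sketch assumes vertices numbered 0..n-1 and edges given as (weight, u, v) tuples. The function name `kruskal` is illustrative. Sorting the edges dominates the running time, which gives the O(E log V) bound above.

```python
def kruskal(n, edges):
    """Minimum spanning tree of a connected graph with vertices 0..n-1.
    edges: iterable of (weight, u, v). Returns (total_weight, tree_edges)."""
    parent = list(range(n))                  # union-find forest

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]    # path halving
            x = parent[x]
        return x

    total, tree = 0, []
    for w, u, v in sorted(edges):            # sorting dominates: O(E log V)
        ru, rv = find(u), find(v)
        if ru != rv:                         # u and v in different components
            parent[ru] = rv
            total += w
            tree.append((u, v, w))
    return total, tree
```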

Proving Lower & Upper Bound

• cost(minimum spanning tree) is the minimum cost of connecting every node. It is a lower bound for TSP: deleting any one edge of a tour leaves a path through all nodes, which is itself a spanning tree, so no tour can cost less than the MST.

• Likewise, 2 * cost(minimum spanning tree) provides a convenient upper bound. Start from some node, explore the subtree branching from each child in turn, and retrace the path back to the starting node; this walk uses every MST edge exactly twice. Skipping cities that were already visited turns the walk into a tour, and because Euclidean distances obey the triangle inequality, these shortcuts never increase its length.
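Both bounds can be sketched in Python. This sketch assumes a dense symmetric distance matrix that satisfies the triangle inequality, as Euclidean distances do. For a dense matrix it substitutes Prim's algorithm, which runs in O(n²), for Kruskal's. The preorder walk implements the "retrace and skip" idea above. The function name `mst_bounds` is hypothetical.

```python
import math


def mst_bounds(dist):
    """Prim's algorithm on a dense distance matrix, then a preorder walk.

    Returns (mst_cost, preorder_tour, tour_cost). mst_cost is a lower bound
    on the optimal tour; with the triangle inequality, tour_cost <= 2 * mst_cost.
    """
    n = len(dist)
    in_tree = [False] * n
    best = [math.inf] * n      # cheapest edge joining each city to the tree
    parent = [-1] * n
    children = [[] for _ in range(n)]
    best[0] = 0.0
    mst_cost = 0.0
    for _ in range(n):
        u = min((i for i in range(n) if not in_tree[i]), key=lambda i: best[i])
        in_tree[u] = True
        mst_cost += best[u]
        if parent[u] >= 0:
            children[parent[u]].append(u)
        for v in range(n):
            if not in_tree[v] and dist[u][v] < best[v]:
                best[v], parent[v] = dist[u][v], u
    # Preorder walk = the full walk of the tree with repeated cities skipped.
    tour, stack = [], [0]
    while stack:
        u = stack.pop()
        tour.append(u)
        stack.extend(reversed(children[u]))
    tour_cost = sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))
    return mst_cost, tour, tour_cost
```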

How the algorithm works

• Initially, we run a Graham scan on the set of cities T to obtain a partial ordering H from the convex hull.

• Next, we find the minimum spanning tree to obtain lower and upper bounds on the cost of the shortest tour.

• We choose a starting node S as the first node in our partial ordering.

• We explore each permutation P of the remaining nodes.

• If the total cost of the edges in the partial permutation ever exceeds the upper bound established by 2 * cost(MST), we stop exploring that entire branch. This eliminates all (|T| - |P|)! completions of P from the solution space.

• If the most recent node Z added to P is the first node in H, then remove Z from H and continue. The partial ordering has been maintained.

• Otherwise, if Z is in H, the partial ordering has been violated, so we discard the entire branch.

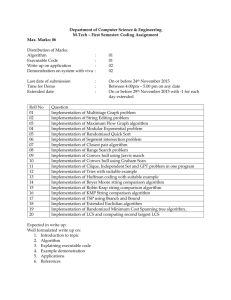
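The steps above can be sketched in Python. This sketch assumes a symmetric distance matrix, hull cities listed in boundary order with S = `hull[0]`, and an optional `upper_bound` such as 2 * cost(MST). In addition to the MST bound, it prunes against the best tour found so far, as on the earlier slide. Only one direction around the hull is enforced. With symmetric distances the reversed tour has the same length, so no optimum is lost. The name `hull_branch_and_bound` is hypothetical, and this is not the authors' implementation.

```python
import math


def hull_branch_and_bound(dist, hull, upper_bound=math.inf):
    """Shortest tour that starts at S = hull[0] and visits the hull cities in
    the order given by `hull` (the partial ordering H).

    dist: symmetric n x n distance matrix (list of lists, non-negative).
    hull: indices of the hull cities in boundary order, e.g. from a Graham scan.
    upper_bound: a known bound such as 2 * cost(MST); only tours strictly
        shorter than it are reported.
    Returns (length, tour), or (upper_bound, None) if no shorter tour exists.
    """
    n = len(dist)
    start = hull[0]
    best = {"length": upper_bound, "tour": None}   # shortest tour found so far

    def extend(tour, cost, pending, unvisited):
        # Bound: edge lengths are non-negative, so this branch can only get longer.
        if cost > best["length"]:
            return
        if not unvisited:
            total = cost + dist[tour[-1]][start]   # close the cycle at S
            if total < best["length"]:
                best["length"], best["tour"] = total, list(tour)
            return
        for z in sorted(unvisited):
            if z in pending and z != pending[0]:
                continue               # Z is in H but not first: ordering violated
            # If Z is the first node of H, remove it from H; interior cities
            # leave H unchanged.
            rest = pending[1:] if pending and z == pending[0] else pending
            tour.append(z)
            unvisited.remove(z)
            extend(tour, cost + dist[tour[-2]][z], rest, unvisited)
            unvisited.add(z)
            tour.pop()

    extend([start], 0.0, tuple(hull[1:]), set(range(n)) - {start})
    return best["length"], best["tour"]
```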

Benchmarks for assessment

• Basis for assessment: The algorithm will be assessed on its complexity and running time compared with an exhaustive search of the entire solution space from a fixed starting point, which examines (n-1)! solutions. A count of method calls will be kept to determine how many solutions the devised algorithm examines. Currently, our algorithm searches h * ((n-h)+1)! solutions, where n is the number of cities and h is the number of points on the boundary of the convex hull.

• A similar approximation algorithm we have devised is Σ(h * (n-h-i)h) from i=0 to i=n;

Complexity

• Exhaustive search in its basic form must compute n! possible tours

• If n = 14

• (n-1)! = 6,227,020,800 possible solutions.

• With our algorithm and 4 points lying on the hull

• 4 * ((13-4) + 1)! = 14,515,200 possible solutions before utilizing branch & bound techniques from cost of MST.
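The counts above can be reproduced with a few lines of Python. The function names are illustrative. The slide's figure of 14,515,200 is obtained by substituting 13 (= n - 1, the cities left after fixing the start) for n in h * ((n-h)+1)!. Substituting n = 14 directly gives 4 * 11! = 159,667,200, which is still far below 13!.

```python
from math import factorial


def exhaustive_count(n):
    """Tours examined by exhaustive search from a fixed start: (n-1)!."""
    return factorial(n - 1)


def hull_count(n, h):
    """The slides' count h * ((n - h) + 1)!, before MST-based pruning."""
    return h * factorial((n - h) + 1)


print(exhaustive_count(14))   # 6227020800
print(hull_count(13, 4))      # 14515200 (the slide's figure, n - 1 = 13)
print(hull_count(14, 4))      # 159667200 (n = 14 substituted directly)
```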

Drawbacks

• Instances with many cities inside the convex hull (i.e., not on the line segments defining the hull) raise the complexity, because only the cities on the hull boundary are constrained by the partial ordering.

• Even if a large number of cities lie on the hull, the complexity is still too large to compute on modern hardware in a reasonable time.

Demonstration

THE FOLLOWING SLIDES WERE MERGED WITH OUR PREVIOUS PRESENTATION

Applications of Dynamic Programming and Heuristics to the Traveling Salesman Problem

Eric Salmon & Joseph Sewell


Heuristic Algorithms for TSP

A heuristic is a technique for solving a problem more quickly when classic methods are too slow, or for finding an approximate solution when classic methods fail to find an exact one.

• Random Search

Generate random permutations as candidate tours and keep the shortest

• Genetic Algorithm

Mimic evolution to arrive at a tolerable tour

• Simulated Annealing

Find a solution by moving slowly towards a global optimum without being trapped in local optima
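Random search, the simplest of the three heuristics, fits in a few lines. This minimal Python sketch assumes a list-of-lists distance matrix. The `iterations` and `seed` parameters are illustrative choices.

```python
import random


def random_search(dist, iterations=10_000, seed=None):
    """Evaluate `iterations` random permutations and keep the shortest tour."""
    rng = random.Random(seed)
    n = len(dist)
    cities = list(range(n))
    best_len, best_tour = float("inf"), None
    for _ in range(iterations):
        rng.shuffle(cities)                   # a fresh random tour
        length = sum(dist[cities[i]][cities[(i + 1) % n]] for i in range(n))
        if length < best_len:
            best_len, best_tour = length, cities[:]
    return best_len, best_tour
```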

Genetic Algorithm

• A genetic algorithm is a search heuristic that mimics the process of natural selection.
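A compact Python sketch of a genetic algorithm for TSP follows. The operators are common textbook choices assumed here rather than taken from the slides: tournament selection, order crossover (OX), swap mutation, and elitism, which ensures the best tour always survives. All names and parameter values are illustrative.

```python
import random


def tour_len(dist, tour):
    n = len(tour)
    return sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))


def order_crossover(p1, p2, rng):
    """OX: copy a random slice of p1, then fill the gaps with the remaining
    cities in the order they appear in p2, so the child is a valid tour."""
    n = len(p1)
    a, b = sorted(rng.sample(range(n), 2))
    child = [None] * n
    child[a:b + 1] = p1[a:b + 1]
    taken = set(p1[a:b + 1])
    fill = iter(c for c in p2 if c not in taken)
    return [c if c is not None else next(fill) for c in child]


def genetic_tsp(dist, pop_size=50, generations=200, mutation_rate=0.2, seed=None):
    """Requires at least 2 cities and pop_size >= 3. Returns (length, tour)."""
    rng = random.Random(seed)
    n = len(dist)

    def fitness(t):
        return tour_len(dist, t)               # shorter tour = fitter

    pop = [rng.sample(range(n), n) for _ in range(pop_size)]

    def select():
        # Tournament selection: the fittest of 3 random individuals.
        return min(rng.sample(pop, 3), key=fitness)

    for _ in range(generations):
        new_pop = [min(pop, key=fitness)]      # elitism: keep the best tour
        while len(new_pop) < pop_size:
            child = order_crossover(select(), select(), rng)
            if rng.random() < mutation_rate:   # swap mutation
                i, j = rng.sample(range(n), 2)
                child[i], child[j] = child[j], child[i]
            new_pop.append(child)
        pop = new_pop
    best = min(pop, key=fitness)
    return fitness(best), best
```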

Simulated Annealing

• Name inspired by metalworking

• Heating and cooling a metal to alter its properties

• While the algorithm is ‘hot’, it is allowed to jump out of local optima

• As the algorithm ‘cools’, it begins to home in on the global optimum
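The hot and cool phases above can be sketched in Python. This minimal sketch assumes a geometric cooling schedule (`temp *= alpha`), segment-reversal (2-opt style) moves, and the Metropolis acceptance rule. All parameter values are illustrative. A worse tour is accepted with probability exp(-Δ/T), which is close to 1 while T is large ("hot") and shrinks toward 0 as T cools.

```python
import math
import random


def simulated_annealing(dist, t_start=100.0, t_end=1e-3, alpha=0.995,
                        moves_per_temp=100, seed=None):
    """Requires at least 2 cities. Returns (best_length, best_tour)."""
    rng = random.Random(seed)
    n = len(dist)

    def length(t):
        return sum(dist[t[i]][t[(i + 1) % n]] for i in range(n))

    tour = list(range(n))
    rng.shuffle(tour)
    cur = length(tour)
    best, best_len = tour[:], cur
    temp = t_start
    while temp > t_end:                       # 'hot' -> 'cool'
        for _ in range(moves_per_temp):
            # Neighbour: reverse the segment tour[i..j] (a 2-opt style move).
            i, j = sorted(rng.sample(range(n), 2))
            cand = tour[:i] + tour[i:j + 1][::-1] + tour[j + 1:]
            cand_len = length(cand)
            delta = cand_len - cur
            # Always accept improvements; accept a worse tour with probability
            # exp(-delta / temp), which lets the search escape local optima.
            if delta <= 0 or rng.random() < math.exp(-delta / temp):
                tour, cur = cand, cand_len
                if cur < best_len:
                    best, best_len = tour[:], cur
        temp *= alpha                         # geometric cooling
    return best_len, best
```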

Simulated Annealing (cont.)

Dynamic Programming

• A method for solving complex problems by breaking them down into simpler sub-problems

• Exploits sub-problem overlap

• Example: Finding Fibonacci numbers.

• F(n) = F(n-2) + F(n-1)

• To find F(n) you must also compute F(n-2) and F(n-1)

• Without storing them, these values are recomputed many times over, so the naive recursion takes exponential time

• Using dynamic programming, every computed value is stored and looked up before it is recomputed.

Computing Fibonacci (naïve)

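```python
def fib(n):
    # Naive recursion: fib(n-1) and fib(n-2) recompute the same values repeatedly.
    if n <= 2:
        f = 1
    else:
        f = fib(n - 1) + fib(n - 2)
    return f
```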

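Dynamic Programming: Fibonacci

```python
memo = {}  # n -> fib(n), filled in as values are computed

def fib(n):
    if n in memo:  # look the value up before computing it
        return memo[n]
    if n <= 2:
        f = 1
    else:
        f = fib(n - 1) + fib(n - 2)
    memo[n] = f
    return f
```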

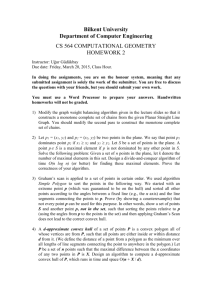
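Applied to TSP, the same store-and-look-up idea gives the Held-Karp dynamic program. It is named here as a standard technique, not as the method from these slides. This minimal Python sketch assumes a list-of-lists distance matrix and city 0 as the start. It runs in O(n² · 2ⁿ) time instead of the (n-1)! of exhaustive search.

```python
from itertools import combinations


def held_karp(dist):
    """Exact TSP tour length by dynamic programming over subsets (Held-Karp)."""
    n = len(dist)
    if n == 1:
        return 0
    # cost[(S, j)]: cheapest path from city 0 through every city in bitmask S,
    # ending at j (j is in S; city 0 is never in S).
    cost = {(1 << j, j): dist[0][j] for j in range(1, n)}
    for size in range(2, n):
        for subset in combinations(range(1, n), size):
            S = sum(1 << j for j in subset)
            for j in subset:
                prev = S & ~(1 << j)   # S without j: stored on the previous pass
                cost[(S, j)] = min(cost[(prev, k)] + dist[k][j]
                                   for k in subset if k != j)
    full = sum(1 << j for j in range(1, n))
    # Close the cycle by returning to city 0.
    return min(cost[(full, j)] + dist[j][0] for j in range(1, n))
```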


Branch & Bound

• Branch and bound (BB) is an algorithm design paradigm for discrete and combinatorial optimization problems. A branch-and-bound algorithm consists of a systematic enumeration of candidate solutions by means of state space search: the set of candidate solutions is thought of as forming a rooted tree with the full set at the root. The algorithm explores branches of this tree, which represent subsets of the solution set. Before enumerating the candidate solutions of a branch, the branch is checked against upper and lower estimated bounds on the optimal solution, and is discarded if it cannot produce a better solution than the best one found so far by the algorithm.

http://en.wikipedia.org/wiki/Branch_and_bound

Branch & Bound

• A branch-and-bound procedure requires two tools. The first one is a splitting procedure that, given a set $S$ of candidates, returns two or more smaller sets $S_1, S_2, \ldots$ whose union covers $S$. Note that the minimum of $f(x)$ over $S$ is $\min\{v_1, v_2, \ldots\}$, where each $v_i$ is the minimum of $f(x)$ within $S_i$. This step is called branching, since its recursive application defines a search tree whose nodes are the subsets of $S$.

http://en.wikipedia.org/wiki/Branch_and_bound

Branch & Bound

• The second tool is a procedure that computes upper and lower bounds for the minimum value of $f(x)$ within a given subset of $S$. This step is called bounding.

• The key idea of the BB algorithm is: if the lower bound for some tree node (set of candidates) A is greater than the upper bound for some other node B, then A may be safely discarded from the search. This step is called pruning, and is usually implemented by maintaining a global variable $m$ (shared among all nodes of the tree) that records the minimum upper bound seen among all subregions examined so far. Any node whose lower bound is greater than $m$ can be discarded.

http://en.wikipedia.org/wiki/Branch_and_bound

Branch & Bound

• The recursion stops when the current candidate set S is reduced to a single element, or when the upper bound for set S matches the lower bound. Either way, any element of S will be a minimum of the function within S.

http://en.wikipedia.org/wiki/Branch_and_bound

• Given: