
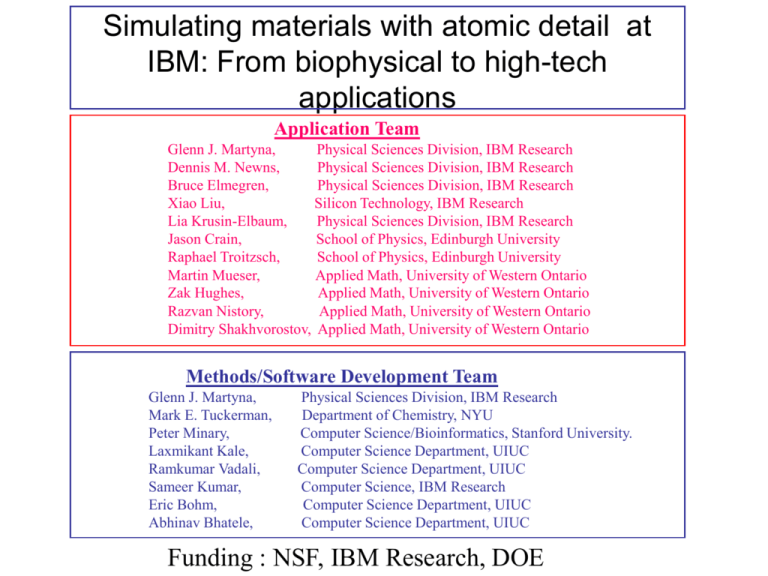

Simulating materials with atomic detail at IBM: From biophysical to high-tech applications

Application Team

Glenn J. Martyna,

Physical Sciences Division, IBM Research

Dennis M. Newns,

Physical Sciences Division, IBM Research

Bruce Elmegren,

Physical Sciences Division, IBM Research

Xiao Liu,

Silicon Technology, IBM Research

Lia Krusin-Elbaum,

Physical Sciences Division, IBM Research

Jason Crain,

School of Physics, Edinburgh University

Raphael Troitzsch,

School of Physics, Edinburgh University

Martin Mueser,

Applied Math, University of Western Ontario

Zak Hughes,

Applied Math, University of Western Ontario

Razvan Nistory,

Applied Math, University of Western Ontario

Dimitry Shakhvorostov, Applied Math, University of Western Ontario

Methods/Software Development Team

Glenn J. Martyna, Physical Sciences Division, IBM Research

Mark E. Tuckerman, Department of Chemistry, NYU

Peter Minary, Computer Science/Bioinformatics, Stanford University

Laxmikant Kale, Computer Science Department, UIUC

Ramkumar Vadali, Computer Science Department, UIUC

Sameer Kumar, Computer Science, IBM Research

Eric Bohm, Computer Science Department, UIUC

Abhinav Bhatele, Computer Science Department, UIUC

Funding : NSF, IBM Research, DOE

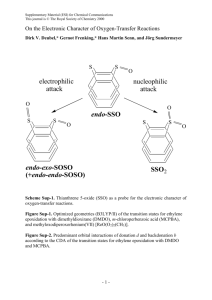

IBM’s Blue Gene/L network torus

supercomputer

Our fancy apparatus.

Goal : The accurate treatment of complex heterogeneous

systems to gain physical insight.

Characteristics of current models

Empirical Models: Fixed charge,

non-polarizable,

pair dispersion.

Ab Initio Models: GGA-DFT,

Self interaction present,

Dispersion absent.

Problems with current models (empirical)

Dipole Polarizability : Including dipole polarizability changes

solvation shells of ions and drives them to the surface.

Higher Polarizabilities: Quadrupolar and octupolar

polarizabilities are NOT SMALL.

All Manybody Dispersion terms: Surface tensions and bulk

properties determined using accurate pair potentials are

incorrect. Surface tensions and bulk properties are both

recovered using manybody dispersion and an accurate pair

potential. An effective pair potential destroys surface

properties but reproduces the bulk.
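To make "manybody dispersion" concrete, here is a minimal sketch of the leading three-body term, the textbook Axilrod-Teller triple-dipole energy. It is shown only as an illustration of a non-pairwise dispersion contribution and is not necessarily the formulation used in this work; the function name and the coefficient `c9` are assumptions.

```python
import numpy as np

def axilrod_teller(r1, r2, r3, c9=1.0):
    """Triple-dipole (three-body) dispersion energy for three atoms.

    c9 is a hypothetical coefficient; real values are species dependent.
    """
    r1, r2, r3 = (np.asarray(r, dtype=float) for r in (r1, r2, r3))
    d12, d13, d23 = r2 - r1, r3 - r1, r3 - r2
    r12, r13, r23 = (np.linalg.norm(d) for d in (d12, d13, d23))
    # Cosines of the interior angles at atoms 1, 2 and 3.
    cos1 = np.dot(d12, d13) / (r12 * r13)
    cos2 = np.dot(-d12, d23) / (r12 * r23)
    cos3 = np.dot(-d13, -d23) / (r13 * r23)
    return c9 * (1.0 + 3.0 * cos1 * cos2 * cos3) / (r12 * r13 * r23) ** 3

# The sign depends on geometry, which no pair potential can reproduce.
e_triangle = axilrod_teller((0, 0, 0), (1, 0, 0), (0.5, np.sqrt(3) / 2, 0))
e_linear = axilrod_teller((0, 0, 0), (1, 0, 0), (2, 0, 0))
```

The term is repulsive for the equilateral triangle and attractive for the linear chain, so its net effect differs between a bulk environment and a surface.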

Force fields cannot treat chemical reactions:

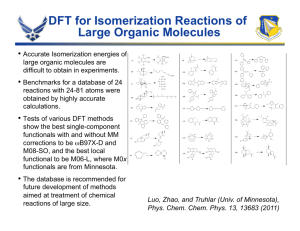

Problems with current models (DFT)

• Incorrect treatment of self-interaction/exchange:

Errors in electron affinity, band gaps …

• Incorrect treatment of correlation: Problematic

treatment of spin states. The ground state of transition

metals (Ti, V, Co) and spin splitting in Ni are in error.

Ni oxide incorrectly predicted to be metallic when

magnetic long-range order is absent.

• Incorrect treatment of dispersion : Both exchange

and correlation contribute.

• KS states are NOT physical objects : The bands of the

exact DFT are problematic. TDDFT with a frequency

dependent functional (exact) is required to treat

excitations even within the Born-Oppenheimer

approximation.

Conclusion : Current Models

• Simulations are likely to provide semi-quantitative accuracy/agreement with experiment.

• Simulations are best used to obtain insight and examine physics, i.e., to promote understanding.

Nonetheless, in order to provide truthful solutions of the

models, simulations must be performed to long time scales!

Goal : The accurate treatment of complex heterogeneous

systems to gain physical insight.

Evolving the model systems in time:

•Classical Molecular Dynamics : Solve Newton's

equations or a modified set numerically to yield averages in

alternative ensembles (NVT or NPT as opposed to NVE)

on an empirical parameterized potential surface.

•Path Integral Molecular Dynamics : Solve a set of

equations of motion numerically on an empirical potential

surface that yields canonical averages of a classical ring polymer

system isomorphic to a finite temperature quantum system.

•Ab Initio Molecular Dynamics : Solve Newton's

equations or a modified set numerically to yield averages in

alternative ensembles (NVT or NPT as opposed to NVE) on a

potential surface obtained from an ab initio calculation.

•Path Integral ab initio Molecular Dynamics : Solve a

set of equations of motion numerically on an ab initio potential

surface to yield canonical averages of a classical ring polymer

system isomorphic to a finite temperature quantum system.
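As a minimal sketch of "solve Newton's equations numerically", the block below integrates a 1D harmonic oscillator with the standard velocity Verlet scheme in the NVE ensemble. The oscillator, the parameter values and all names are illustrative assumptions; the thermostats and barostats needed for NVT or NPT, and the ring polymer of the path integral methods, are not shown.

```python
import numpy as np

def velocity_verlet(x, v, force, mass, dt, nsteps):
    """Advance positions x and velocities v by nsteps of size dt."""
    f = force(x)
    for _ in range(nsteps):
        v = v + 0.5 * dt * f / mass   # half kick
        x = x + dt * v                # drift
        f = force(x)                  # new force at updated positions
        v = v + 0.5 * dt * f / mass   # second half kick
    return x, v

# Hypothetical test system: harmonic oscillator with k = mass = 1.
k, mass = 1.0, 1.0
force = lambda x: -k * x
energy = lambda x, v: 0.5 * mass * v**2 + 0.5 * k * x**2

x0, v0 = np.array([1.0]), np.array([0.0])
x1, v1 = velocity_verlet(x0, v0, force, mass, dt=0.01, nsteps=10_000)
drift = abs(energy(x1, v1) - energy(x0, v0)).max()
```

In ab initio MD the same loop applies, with `force` supplied by an electronic structure calculation instead of an empirical potential.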

Recent Improvements :

•Increasing the time step 100x, from 1 fs to 100 fs, for

numerical solvers of empirical model MD simulations.

•Reducing the scaling of non-local pseudopotential

computations in plane wave DFT from $N^3$ to $N^2$.

•Overcoming multiple barriers in empirical model

simulations.

•Treat long range interactions for surfaces, clusters and

wires in O(N log N) computational time.

•A unified formalism for including manybody polarization

and dispersion in molecular simulation

Phys. Rev. Lett. 93, 150201 (2004).

ChemPhysChem 6, 1827 (2005).

J. Chem. Phys. 126, 074104 (2007).

J. Chem. Phys. 118, 2527 (2003).

Chem. Phys. Lett. 424, 409 (2006), PRB (2009)

SIAM J. Sci. Comp. (2008).

Unified Treatment of Long Range Forces:

Point Particles and Continuous Charge Densities.

Clusters :

Wires:

Surfaces:

3D-Solids/Liquids:

J. Chem. Phys. (2001-2004)

Open Question : Can we "tweak" current models or

are more powerful formulations required?

Chem. Phys. Lett. 424, 409 (2006) , PRB (2009)

J. Chem. Phys. 126, 074104 (2007)

Exp.

Quantum Drude

Dynamic Contact Staging REPSWA

Sampling the C50 alkane:

1) The order O(N) Reference Potential Spatial Warping method removes

47 torsional barriers simultaneously whilst preserving the partition function.

2) Dynamic contact staging treats intramolecular collisions.

SIAM J. Sci. Comp. (2008)

Exciting large scale domain motion

Submitted PNAS

1) Standard HMC (red) cannot sample minor groove narrowing rapidly.

2) The O(N), partition function preserving Rouse Mode Projection HMC

guarantees positive modes allowing fast low frequency mode sampling.

Limitations of ab initio MD

(despite our efforts/improvements!)

Limited to small systems (100-1000 atoms)*.

Limited to short time dynamics and/or sampling

times.

Parallel scaling only achieved for

# processors <= # electronic states

until recent efforts by ourselves and others.

*The methodology employed herein scales as $O(N^3)$ with

system size due to the orthogonality constraint, only.
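To see where the cubic cost of the orthogonality constraint comes from, here is a minimal sketch using Löwdin (symmetric) orthonormalization with NumPy. This is one standard way to orthonormalize a set of states and is an assumption for illustration, not necessarily the constraint scheme used in the CPAIMD code; the array sizes are arbitrary.

```python
import numpy as np

def lowdin_orthonormalize(c):
    """c: (npw, nstates) coefficient matrix; returns c @ S^{-1/2}."""
    s = c.conj().T @ c                  # overlap matrix, nstates x nstates
    w, u = np.linalg.eigh(s)            # S = U diag(w) U^H
    s_inv_sqrt = (u / np.sqrt(w)) @ u.conj().T
    return c @ s_inv_sqrt

rng = np.random.default_rng(1)
npw, nstates = 200, 8                   # hypothetical sizes
c = rng.normal(size=(npw, nstates)) + 1j * rng.normal(size=(npw, nstates))
c_orth = lowdin_orthonormalize(c)
overlap = c_orth.conj().T @ c_orth      # should be the identity
```

Building the overlap matrix costs about `npw * nstates**2` operations. Since both the number of plane waves and the number of states grow linearly with the number of atoms N, this step grows as $N^3$.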

Solution: Fine grained parallelization of CPAIMD.

Scale small systems to $10^5$ processors!!

Study long time scale phenomena!!

(The charm++ QM/MM application is work in progress.)

IBM’s Blue Gene/L network torus

supercomputer

The world's fastest supercomputer!

Its low power architecture requires fine grain parallel

algorithms/software to achieve optimal performance.

Density Functional Theory : DFT

Electronic states/orbitals of water

Removed by introducing a non-local electron-ion interaction.

Plane Wave Basis Set:

The # of states/orbital ~ N where N is # of atoms.

The # of pts in g-space ~N.

Plane Wave Basis Set:

Two spherical cutoffs in g-space

(Figure: two spheres in g-space with axes $g_x$, $g_y$, $g_z$.)

$\psi(g)$ : radius $g_{cut}$

$n(g)$ : radius $2g_{cut}$

g-space is a discrete regular grid due to the finite size of the system.

Plane Wave Basis Set:

The dense discrete real space mesh.

(Figure: $\psi(r)$ and $n(r)$ on the real space mesh with axes x, y, z.)

$\psi(r) = \text{3D-FFT}\{\psi(g)\}$

$n(r) = \sum_k |\psi_k(r)|^2$

$n(g) = \text{3D-IFFT}\{n(r)\}$ exactly

Although r-space is a discrete dense mesh, n(g) is generated exactly!
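The following NumPy sketch illustrates the two cutoff spheres and the exactness claim. States with random coefficients inside radius gcut are transformed to the real space mesh, the density is accumulated, and the transform back to g-space is checked to vanish outside radius 2gcut. The grid size, the random states and the helper names are assumptions; NumPy's forward/inverse FFT naming and normalization differ from the slide's convention.

```python
import numpy as np

def gvectors(n):
    """Integer g-vector components for an n x n x n FFT grid."""
    k = np.fft.fftfreq(n, d=1.0 / n)
    return np.meshgrid(k, k, k, indexing="ij")

def density_from_states(psi_g):
    """psi_g: (nstates, n, n, n) coefficients -> n(r) on the real space mesh."""
    npts = psi_g[0].size
    psi_r = np.fft.ifftn(psi_g, axes=(1, 2, 3)) * npts
    return np.sum(np.abs(psi_r) ** 2, axis=0)

rng = np.random.default_rng(0)
gcut, nstates = 3, 4
n = 4 * gcut + 2                 # mesh dense enough to hold the 2*gcut sphere
gx, gy, gz = gvectors(n)
g2 = gx**2 + gy**2 + gz**2
inside = g2 <= gcut**2           # sphere of radius gcut for the states

psi_g = np.zeros((nstates, n, n, n), dtype=complex)
for k in range(nstates):
    coeff = rng.normal(size=inside.sum()) + 1j * rng.normal(size=inside.sum())
    psi_g[k][inside] = coeff / np.linalg.norm(coeff)   # normalized state

n_r = density_from_states(psi_g)
n_g = np.fft.fftn(n_r) / n_r.size
leak = np.abs(n_g[g2 > (2 * gcut) ** 2]).max()   # weight outside radius 2*gcut
```

Because the density is a product of functions limited to radius gcut, it is limited to radius 2gcut, so a mesh that holds that sphere introduces no aliasing.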

Simple Flow Chart : Scalar Ops

Object : Comp : Mem

States : $N^2 \log N$ : $N^2$

Density : $N \log N$ : $N$

Orthonormality : $N^3$ : $N^{2.33}$

Memory penalty

Flow Chart : Data Structures

Parallelization under charm++

(Flow chart: data structures, including RhoR, linked by Transpose steps.)

Effective Parallel Strategy:

The problem must be finely discretized.

The discretizations must be deftly chosen to

–Minimize the communication between

processors.

–Maximize the computational load on the

processors.

NOTE , PROCESSOR AND DISCRETIZATION

ARE

Ineffective Parallel Strategy

The discretization size is controlled by the

number of physical processors.

The size of data to be communicated at a given

step is controlled by the number of physical

processors.

For the above paradigm :

–Parallel scaling is limited to #

processors=coarse grained parameter in the

model.

THIS APPROACH IS TOO LIMITED TO

ACHIEVE FINE GRAINED PARALLEL

Virtualization and Charm++

Discretize the problem into a large number of

very fine grained parts.

Each discretization is associated with some

amount of computational work and

communication.

Each discretization is assigned to a light weight

thread or a "virtual processor" (VP).

VPs are rolled into and out of physical processors

as physical processors become available

(Interleaving!)

Mapping of VPs to processors can be changed

easily.

The Charm++ middleware provides the data
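The benefit of over-decomposition can be sketched in a few lines of plain Python. This is not the Charm++ API; it is a toy greedy mapper (largest piece to the least loaded processor) with made-up work costs, comparing one coarse piece per processor against many fine-grained VPs.

```python
import heapq

def greedy_map(costs, nproc):
    """Map each VP to the least loaded physical processor, largest VP first."""
    heap = [(0.0, p) for p in range(nproc)]
    heapq.heapify(heap)
    loads = [0.0] * nproc
    mapping = {}
    for vp in sorted(range(len(costs)), key=lambda i: -costs[i]):
        load, p = heapq.heappop(heap)
        mapping[vp] = p
        loads[p] = load + costs[vp]
        heapq.heappush(heap, (loads[p], p))
    return mapping, loads

nproc = 4
coarse = [9.0, 3.0, 3.0, 1.0]                   # one piece per processor
fine = [c / 8 for c in coarse for _ in range(8)]  # each piece split into 8 VPs

_, coarse_loads = greedy_map(coarse, nproc)
_, fine_loads = greedy_map(fine, nproc)
```

With one piece per processor the slowest processor carries 9 units of work; with 32 VPs the same total work is spread evenly at 4 units each. Because the mapping is just a table, it can be changed without touching the work units themselves.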

Parallelization by over partitioning of parallel objects :

The charm++ middleware choreography!

Decomposition of work

Charm++ middleware maps work to processors dynamically

Available Processors

On a torus architecture, load balance is not enough!

The choreography must be "topologically aware".

Challenges to scaling:

Multiple concurrent 3D-FFTs to generate the states in real space

require AllToAll communication patterns. Communicate $N^2$ data pts.

Reduction of states (~$N^2$ data pts) to the density (~$N$ data pts)

in real space.

Multicast of the KS potential computed from the density (~$N$ pts)

back to the states in real space (~$N$ copies to make $N^2$ data).

Applying the orthogonality constraint requires $N^3$ operations.

Mapping the chare arrays/VPs to BG/L processors in a topologically

aware fashion.

Scaling bottlenecks due to non-local and local electron-ion interactions

removed by the introduction of new methods!

Topologically aware mapping for CPAIMD

(Figure labels: Density $N^{1/12}$; States ~$N^{1/2}$; Gspace ~$N^{1/2}$ (~$N^{1/3}$).)

•The states are confined to rectangular prisms cut from the torus to

minimize 3D-FFT communication.

•The density placement is optimized to reduce its 3D-FFT

communication and the multicast/reduction operations.
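A small sketch of why placement matters on a torus: the hop count between two nodes is the wrap-around Manhattan distance, so objects that communicate heavily are cheaper to connect when packed into a compact prism. The torus dimensions and the two placements below are illustrative assumptions, not the actual Blue Gene/L mapping.

```python
from itertools import combinations

def torus_hops(a, b, dims):
    """Minimum number of network hops between nodes a and b on a torus."""
    return sum(min(abs(x - y), d - abs(x - y)) for x, y, d in zip(a, b, dims))

def mean_pair_hops(nodes, dims):
    """Average hop count over all pairs of nodes in a placement."""
    pairs = list(combinations(nodes, 2))
    return sum(torus_hops(a, b, dims) for a, b in pairs) / len(pairs)

dims = (8, 8, 8)  # hypothetical 8 x 8 x 8 torus
# Eight communicating objects placed in a 2 x 2 x 2 prism ...
compact = [(x, y, z) for x in (0, 1) for y in (0, 1) for z in (0, 1)]
# ... versus the same eight objects spread across the machine.
scattered = [(x, y, z) for x in (0, 4) for y in (0, 4) for z in (0, 4)]

compact_cost = mean_pair_hops(compact, dims)
scattered_cost = mean_pair_hops(scattered, dims)
```

Both placements are perfectly load balanced, yet the scattered one needs four times as many hops per message on average, which is the sense in which load balance alone is not enough.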

Topologically aware mapping for

CPAIMD : Details

Improvements wrought by topologically aware mapping

on the network torus architecture

Density (R) reduction and multicast to State (R) improved.

State (G) communication to/from orthogonality chares improved.

"Operator calculus for parallel computing", Martyna and Deng (2009) in preparation.

Parallel scaling of liquid water* as a function of system size

on the Blue Gene/L installation at YKT:

*Liquid water has 4 states per molecule.

•Weak scaling is observed!

•Strong scaling on processor numbers up to ~60x the number of states!

Scaling Water on Blue Gene/L

Software : Summary

Fine grained parallelization of the Car-Parrinello ab

initio MD method demonstrated on thousands of

processors :

# processors >> # electronic states.

Long time simulations of small systems are now

possible on large massively parallel

supercomputers.

Goal : The accurate treatment of complex heterogeneous

systems to gain physical insight.

Novel Switches by Phase Change Materials

The phases are interconverted by heat!

Novel Switches by Phase Change Materials

Ge0.15Te0.85

or

Eutectic GT

Novel Switches by Phase Change Materials

GeSb2Te4

or

GST-124

Piezoelectrically driven memories?

• A pressure driven mechanism would be fast and scalable.

• Studying pressure can lead to new insights into mechanism.

• Can we find suitable materials using a combined exp/theor approach?

GST-225 undergoes pressure

induced “amorphization” both

experimentally and theoretically ….

but the process is not

reversible!

Amorphous

Crystal

Amorphous converts to

crystal and never recovers

Eutectic GS (Ge0.15Sb0.85 ) undergoes an

amorphous to crystalline transformation

under pressure, experimentally!

Is the process amenable to reversible

switching as in the thermal approach???

Utilize tensile load to approach the spinodal and cause pressure

induced amorphization: Eutectic GS alloy

Schematic of a potential device

based on pressure switching

P~1.5 GPa

P~ -1.5 GPa

CPAIMD spinodal line!

Spinodal decomposition under tensile load

Solid is stable at ambient pressure

Postulate : Ge/Sb switch to 4-coordinate state in amorphous:

Umbrella-flip model

Alexander V. Kolobov, Paul Fons, Anatoly I. Frenkel, Alexei L. Ankudinov, Junji Tominaga & Tomoya Uruga

Nature Materials 3, 703 - 708 (2004)

Crystalline

Amorphous

Gap driven picture of the transition

Near half-filling pseudogap opens into gap

maximally lowering electronic energy, driving

the structural change.

The electronic energy, total energy, gap and conductivity

track the 3-coordinate to 4-coordinate species conversion.

Gap driven structural relaxation:

IBM’s Piezoelectric Memory

We are investigating other materials, better device designs and

generalizing the principle to produce a fast low power architecture.

Conclusions

• Important physical insight can be gleaned from

high quality, large scale computer simulation

studies.

• The parallel algorithm development required

necessitates cutting edge computer science.

• New methods must be developed hand-in-hand

with new parallel paradigms.

• Using clever hardware with better methods and

parallel algorithms shows great promise to impact

both science and technology.