Analysts behaving badly


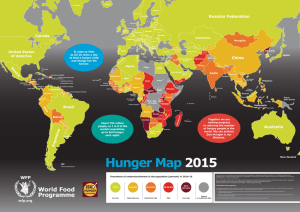

Wednesday, October 9th 2013, FAO Headquarters, Ethiopia Room, 18:00 – 19:30
Measuring Food Insecurity for Policy Guidance and Evaluation
Statistics Division (ESS)

OPEN ISSUES AND POSSIBLE SOLUTIONS
Measuring food security
Analysts behaving badly
Carlo Cafiero, FAO ESS

Different views of “measurement”
• In physics, to measure means to provide rules for associating numbers with phenomena
  • Measuring tools (i.e. “rulers”) can be created relatively easily
  • Precision and reliability can be assessed by experimenting
  • Too narrow a view for the social sciences
• In a broader, philosophical sense, to measure is a process towards understanding phenomena
  • Developing the tool and assessing its reliability cannot be separated from establishing the validity of the concept

A tension between data and concepts
• Proceeding from data to concepts…
  • Take stock of existing data -> Interpret them -> Synthesize -> Communicate
  • (examples: GHI, IPC)
• … or from concepts to data
  • Conceptualize -> Analyze complexity -> Focus -> Define the measurement tool -> Collect data
  • (example: the US HFSSM scale)

Obviously, the process is circular
[Figure: circular diagram linking Concept, Definition of the metrics, and Data, with the labels “Thinking, Analysis, Definitions”, “Synthesis, Filtering, Understanding”, and “use of the measures for different purposes (monitoring, targeting, etc.)”]

The complementary roles of statistics and communication
• Statistics
  • particularly relevant when observation is partial and affected by noise
• Communication
  • particularly delicate when phenomena are complex and evolve over time

The role of theory…
• Theory can be used to fill the data gap…
• …but you need a “good” theory, and here comes a conundrum: you need data to validate the theory that you need to fill data gaps…
• If a theory is not sufficiently validated, using it may distort the results of measurement and contribute to spreading myths
  • Example: the Law of One Price in commodity markets and the food price crisis of 2007/08

… and of methods
• Respect the fundamental principles of logical inference
  • Observed correlation does not imply causality
  • A given time sequence does not imply causality
  • The possibility of concomitant, alternative causes should always be explicitly considered and, if possible, ruled out before drawing conclusions
• When data are noisy (and data are always noisy), statistical inference is the only way to go

Analysts behaving badly
• Ad hoc interpretations of the terms and concepts underlying indicators
• The rhetoric of science used to support claims
• Miscommunicating the meaning and/or hiding the (lack of) precision of estimates
  • “There are 336,997 persons living in extreme poverty in country X”

There is too much at stake…
• Halting or misdirecting research efforts
  • International Symposium 2003/International Symposium 2012: ten years discussing methods, limited attention to improving data quality
• Encouraging possibly wrong policies
  • Ban exports?
  • Produce vegetables in Siberia?
  • Blame the speculator?
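A figure such as “336,997 persons” reports more digits than a sample survey can support. As a minimal sketch, assuming a simple random sample and a normal-approximation (Wald) interval, the Python below scales a sample proportion up to a population count and reports it with its 95% interval, rounded to two significant figures. The survey numbers and the function names (`estimate_count`, `round_sig`, `report`) are hypothetical, chosen only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class PrevalenceEstimate:
    """Population count implied by a sample prevalence, with a 95% interval."""
    point: float
    lower: float
    upper: float


def estimate_count(cases: int, sample_size: int, population: int,
                   z: float = 1.96) -> PrevalenceEstimate:
    """Scale a sample proportion to a population count (hypothetical helper).

    Assumes simple random sampling and the normal-approximation (Wald)
    interval; a real survey would also need its design effect, weights
    and non-sampling errors taken into account.
    """
    p = cases / sample_size
    se = math.sqrt(p * (1 - p) / sample_size)  # standard error of p
    lo = max(0.0, p - z * se)                  # clip interval to [0, 1]
    hi = min(1.0, p + z * se)
    return PrevalenceEstimate(p * population, lo * population, hi * population)


def round_sig(x: float, sig: int = 2) -> float:
    """Round x to `sig` significant figures."""
    if x == 0:
        return 0.0
    return round(x, sig - 1 - math.floor(math.log10(abs(x))))


def report(est: PrevalenceEstimate, sig: int = 2) -> str:
    """Format the estimate at a precision the data can support."""
    return (f"about {round_sig(est.point, sig):,.0f} "
            f"(95% CI: {round_sig(est.lower, sig):,.0f} to "
            f"{round_sig(est.upper, sig):,.0f})")


# Hypothetical survey: 81 of 1,200 sampled persons classified as
# extremely poor, in a population of 5,000,000.
est = estimate_count(81, 1200, 5_000_000)
print(f"{est.point:,.0f}")  # spurious precision: 337,500
print(report(est))          # about 340,000 (95% CI: 270,000 to 410,000)
```

Two significant figures is a judgment call; the point is that the reported digits should not outrun the width of the interval. Clustered or stratified survey designs would typically need a design-effect correction, which usually widens the interval further.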

… and we are under continuing pressure
• The anxiety of measuring “something”, of providing some number
• Political pressure
• Over-interpretation/risk of misdirection
• The unresolved issue of the quality of the data
  • Remember, models do not work miracles: garbage in, garbage out

Our discussion today
• Who needs food security measures, and why?
• How to respond to the demand while balancing rigor and “usability”?
• What is FAO Statistics offering?